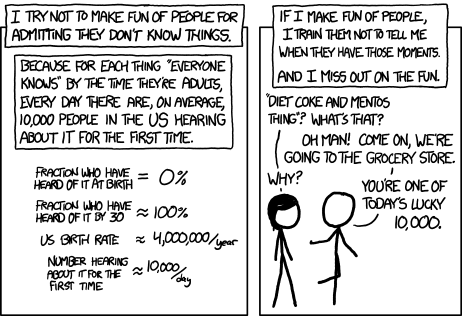

tl;dr: Ask questions about AGI Safety as comments on this post, including ones you might otherwise worry seem dumb!

Asking beginner-level questions can be intimidating, but everyone starts out not knowing anything. If we want more people in the world who understand AGI safety, we need a place where it's accepted and encouraged to ask about the basics.

We'll be putting up monthly FAQ posts as a safe space for people to ask all the possibly-dumb questions that may have been bothering them about the whole AGI Safety discussion, but which until now they didn't feel able to ask.

It's okay to ask uninformed questions, and not worry about having done a careful search before asking.

Stampy's Interactive AGI Safety FAQ

Additionally, this will serve as a way to spread the project Rob Miles' volunteer team[1] has been working on: Stampy - which will be (once we've got considerably more content) a single point of access into AGI Safety, in the form of a comprehensive interactive FAQ with lots of links to the ecosystem. We'll be using questions and answers from this thread for Stampy (under these copyright rules), so please only post if you're okay with that! You can help by adding other people's questions and answers to Stampy or getting involved in other ways!

We're not at the "send this to all your friends" stage yet; we're just ready to onboard a bunch of editors who will help us get to that stage :)

We welcome feedback[2] and questions on the UI/UX, policies, etc. around Stampy, as well as pull requests to his codebase. You are encouraged to add other people's answers from this thread to Stampy if you think they're good, and collaboratively improve the content that's already on our wiki.

We've got a lot more to write before he's ready for prime time, but we think Stampy can become an excellent resource for everyone from skeptical newcomers, through people who want to learn more, right up to people who are convinced and want to know how they can best help with their skillsets.

PS: Based on feedback that Stampy will not be serious enough for serious people, we built an alternate skin for the frontend which is more professional: Alignment.Wiki. We're likely to move one more time, to aisafety.info; feedback welcome.

Guidelines for Questioners:

- No previous knowledge of AGI safety is required. If you want to first watch a few of the Rob Miles videos, or read either the WaitButWhy posts or the summary of The Most Important Century from OpenPhil's co-CEO, that's great, but it's not a prerequisite for asking a question.

- Similarly, you do not need to try to find the answer yourself before asking a question (but if you want to test Stampy's in-browser TensorFlow semantic search, that might get you an answer quicker!).

- Also feel free to ask questions that you're pretty sure you know the answer to, but where you'd like to hear how others would answer the question.

- One question per comment if possible (though if you have a set of closely related questions that you want to ask all together that's ok).

- If you have your own response to your own question, put that response as a reply to your original question rather than including it in the question itself.

- Remember, if something is confusing to you, then it's probably confusing to other people as well. If you ask a question and someone gives a good response, then you are likely doing lots of other people a favor!

Guidelines for Answerers:

- Linking to the relevant canonical answer on Stampy is a great way to help people with minimal effort! Improving that answer means that everyone going forward will have a better experience!

- This is a safe space for people to ask stupid questions, so be kind!

- If this post works as intended then it will produce many answers for Stampy's FAQ. It may be worth keeping this in mind as you write your answer. For example, in some cases it might be worth giving a slightly longer / more expansive / more detailed explanation rather than just giving a short response to the specific question asked, in order to address other similar-but-not-precisely-the-same questions that other people might have.

Finally: Please think very carefully before downvoting any questions. Remember, this is the place to ask stupid questions!

[1] If you'd like to join, head over to Rob's Discord and introduce yourself!

[2] Via the feedback form.

Interesting.

I'm not sure I understood the first part and what f(A,B) is. In the example that you gave, B is only relevant with respect to how much it affects A ("damage the reputability of the AI risk ideas in the eye of anyone who hasn't yet seriously engaged with them and is deciding whether or not to"). So, in a way, you are still trying to maximize |A| (or probably a subset of it: people who can also make progress on it (|A'|)). But with "among other things" I guess that you could be thinking of ways in which B could oppose A, so maybe that's why you want to reduce it too. The thing is: I have trouble visualizing most of B opposing A, and what that subset (B') would even be able to do (as I said, other than reducing |A|). I think that is my biggest argument: B' is a really small subset of B, and I don't fear them.

Now, if your point is that to maximize |A| you have to keep B in mind, and so it would be better to have more 'legitimacy' on the alignment problem before making it viral, you are right. So is there progress on that? Is the community-building plan to convert authorities in the field to A before reaching the mainstream, then?

Also, are people who try to disprove the alignment problem in B? If that's the case, I don't know if our objective should be to maximize |A'|. I'm not sure if we can reach a superintelligence with AI, so I don't know if it wouldn't be better to think about maximizing the number of people trying to solve OR dissolve the alignment problem. If we consider that most people probably wouldn't feel strongly about one side or the other (debatable), then I don't think bringing the discussions more into the mainstream is that big of a deal. If AI risk arguments include that, no matter how uncertain researchers are about the problem, given what's at stake we should lower the chances, then I see B and B' as even smaller. But maybe I'm too optimistic/marketplacer/memer.

Lastly, the maximum size of A is smaller the shorter the timelines. Are people with short timelines the ones trying to reach the most people in the short term?