Let's talk about the sharp increase in EA funding. I feel anxious about it. I don't think most of my feelings here are rational, but they do affect my actions, so it would be irrational not to take them seriously.

Some thoughts (roughly ordered from general to personal):

- More money is good. It means we have more resources to work on the world's most pressing problems. Yay

- I have seen little evidence that FTX Future Fund (FFF) or EA Infrastructure Fund (EAIF) have lowered their standards for mainline grants

- Several people have gotten in touch with me about regranting money, and it seems it would be easier for the median EA to get, say, $10,000 in funding than it was a year ago. It's possible that this change has more to do with me than with FFF, though. And perhaps this is a good thing. I think it's positive EV to give well-aligned people funding so they can work on important projects faster.

- I'm really really really really grateful to EA billionaires (in this case Sam Bankman-Fried) for working hard, taking risks and giving away money. I want both to express thanks and to say that working out how to deal with money as a community is important, reasonable work.

- The joke "sign up to FTX Funds, I guess" is probably a bad meme. An exchange like "I'm thinking of trying to learn to rollerblade." "Sign up to FTX Future Fund?" suggests that FFF has a very low standard for entry. It is a good joke, but I don't think it's a helpful one.

- Perhaps my anxiety is that I feel like an imposter around large amounts of money: "you can't fund my project, I'm just me". As Aveek said on Twitter, sometimes I feel "I wouldn't trust the funding strategy of any organisation that is willing to fund me". This is false, but sometimes I believe it. Maybe you do too?

- Perhaps this anxiety is that I worry about EA being seen as full of grifters. Like the wealthiest person on their street, I don't want bad press from people saying "EAs are spendthrift". I don't think we are, but there is a part of the movement that does get paid more and spends more than many charity workers. I don't know what to do about this.

- My inner Stefan Schubert says "EA is doing well and this is a good thing, don't be so hard on yourself", and I do think there is a chance that this is unnecessary pessimism.

- I talk about it on Twitter here. I don't mind that I did this, but I think the Forum is generally a better place than Twitter to have the discussion. Happy to take criticism.

- I have hang-ups about money in general. For several years after university I lived on about $12k a year (which is low by UK standards, though high by world ones). It's pretty surreal to be able to even consider applying for say 5x this as a salary. It's like going to a fancy restaurant for the first time ("the waiters bring the food to the table?") I just can't shake how surreal this all is.

- I think it's good to surface and discuss this but I'm open to the idea that it isn't and will blow over.

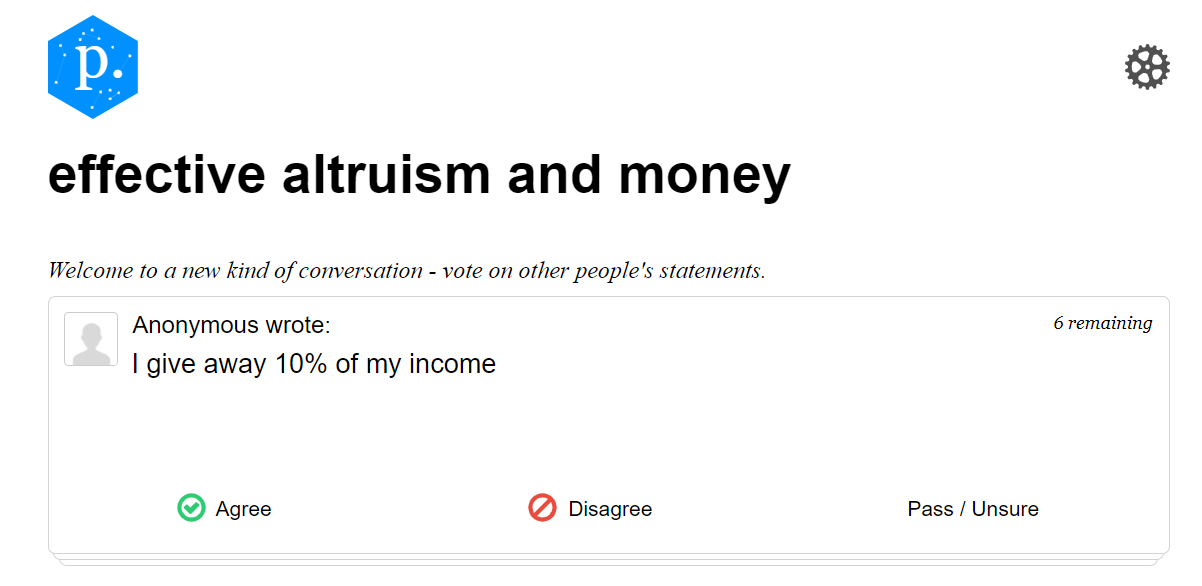

I've made a Polis poll here if you want to see how different views cluster

I've spent time in the non-EA nonprofit sector, and the "standard critical story" there is one of suppressed anger among the workers. To be clear, this "standard critical story" is not always fair, accurate, or applicable. By and large, I also think that, when it is applicable, most of the people involved are not deliberately trying to play into this dynamic. It's just that, when people are making criticisms, this is often the story I've heard them tell, or seen for myself.

It goes something like this:

Part of the core EA thesis is that we want to have a different relationship with money and labor: pay for impact, avoid burnout, money is good, measure what you're doing, trust the argument and evidence rather than the optics. I expect that anybody reading this comment is very familiar with this thesis.

It's not a thesis that's optimized for optics or for warm fuzzies. So it should not be surprising that it's easy to make it look bad, or that it provokes anxiety.

This is unfortunate, though, because appearances and bad feelings are heuristics we rely on to avoid getting sucked into bad situations.

Personally, I think the best way to respond to such anxieties is to put the energy you spend worrying into critically, carefully investigating some aspect of EA. We make constant calls for criticism, from both inside and outside the movement. Part of the culture of the movement involves an unusual level of transparency, and responsiveness to the argument rather than the status of the arguer.

One way to approach this would simply be to make a hypothesis (e.g. the bar for grants is being lowered and we're throwing money at nonsense grants), and then see what evidence you can gather for and against it.

Another way would be to identify a hypothesis for which it's hard to gather evidence either way. For example, let's say you're worried that an EA org is run by a bunch of friends who use their billionaire grant money to pay each other excessive salaries and sponsor Bahama-based "working" vacations. What sort of information would you need in order to support this to the point of being able to motivate action, or falsify it to the point of being able to dissolve your anxiety? If that information isn't available, then why not? Could it be made available? Identifying a concrete way in which EA could be more transparent about its use of money seems like an excellent, constructive research project.

Overall I like your post and think there's something to be said for reminding people that they have power; and in this case, the power is to probe at the sources of their anxiety and reveal ground-truth. But there is something unrealistic, I think, about placing the burden on the individual with such anxiety; particularly because answering questions about whether Funder X is lowering / raising the bar too much requires in-depth insider knowledge which - understandably - people working for Funder X might not want to reveal for a number of reasons, such as:

I'm also just a bit averse, from experience, to replying to people's anxieties with "solve it yourself". I was on a graduate scheme where pretty much every response to an issue raised (often really systemic, challenging issues which people hadn't been able to solve for years, or which were close to whistle-blowing issues) was "well, how can you tackle this?"* The takeaway message then feels something like "I'm a failure if I can't see the way out of this, even if this is really hard, because this smart, more experienced person has told me it's on me". But lots of these systemic issues do not have an easy solution, or taking steps towards action is emotionally or intellectually hard, or frankly could be personally costly.

From experience, this kind of response can be empowering, but it can also inculcate a feeling of desperation when clever people with a can-do attitude (like most EAs) are advised to solve something without support or guidance, especially when it is near intractable. I'm not saying this is what the response of 'research it yourself' is (in fact, you very much gave guidance), but I think the response was not sufficiently mindful of the barriers to doing this. Specifically, I think it would be really difficult for a small group of capable people to research this a priori unless there were other inputs and support, e.g. significant cooperation from the Funder X they're looking to scrutinise, or advice from other people or orgs who've done this work. Sometimes that is available, but it isn't always, and I'd argue it's kind of a condition for success, and for not getting burned out trying to get answers on the issue that's been worrying you.

Side-note: I've deliberately tried to make this commentary funder-neutral because I'm not sure how helpful the focus on FTX is. In fairness to them, they may be planning to publish their processes or invite critique (or have done so in private?), or to carry out rigorous evaluation of their grants as GiveWell did. So I would rather frame this as an invitation to comment if they haven't already, because the assumption throughout this thread seems to be "they ain't doing zilch about this", which might not be the case.

*EDIT: In fact, sometimes a more appropriate response would have been "yes, this is a really big challenge you've encountered and I'm sorry you feel so hopeless over it - but the feeling reflects the magnitude of the challenge". I wonder if that's something relevant to the EA community as well; that aspects of moral uncertainty / uncertainty about whether what we're doing is impactful or not is just tough, and it's ok to sit with that feeling.

Moved this comment to a shortform post here.

Note that patient philanthropy includes investing in resources besides money that will allow us to do more good later; e.g. the linked article lists "global priorities research" and "Building a long-lasting and steadily growing movement" as promising opportunities from a patient longtermist view.

Looking at the Future Fund's Areas of Interest, at least 5 of the 10 strike me as promising under patient philanthropy: "Epistemic Institutions", "Values and Reflective Processes", "Empowering Exceptional People", "Effective Altruism", and "Research That Can Help Us Improve"

Two factual nitpicks:

2. The money's not described by AF as "no strings attached." From their FAQ:

You're wanting transparency about this fellowship's strategy for attracting applicants, and how it'll get objective information on whether or not this is an effective use of funds.

Glancing over the FAQ, the AF seems to be trying to identify high-school-age altruistically-minded geniuses, and introduce them to the ideas of Effective Altruism. I can construct an argument in favor of this being a good-but-hard-to-measure idea in line with the concept of hits-based investment, but I don't see one on their web page. In this case, I think the feedback loop for evaluating if this was a good idea or not involves looking at how these young people spend their money, and what they accomplish over the next 10 years.

It also seems to me that if, as you say, we're still funding constrained in an important way, then experimenting with ways to increase our donor base (as by sharing our ideas with smart young people likely to be high earners) and make more efficient use of our funds (by experimenting with low-cost and potentially very impactful hits-based approaches like this) is the right choice.

I could easily be persuaded that this program or general approach is too flawed, but I'd want to see a careful analysis that looks at both sides of the issue.

Thanks for the corrections, fixed. I agree that the hits-based justification could work out, just would like to see more public analysis of this and other FTX initiatives.

FYI, this just links to this same Forum post for me.

Thanks, fixed.

The subquestion of high salaries at EA orgs is interesting to me. I think it pushes on an existing tension between a conception of the EA community as a support network for people who feel the weight of the world's problems and are trying to solve them, vs. a conception of the EA community as the increasingly professional project of recruiting the rest of the world to work on those problems too.

If you're thinking of the first thing, offering high salaries to people "in the network" seems weird and counterproductive. After all, the truly committed people will just donate the excess, minus a bunch of transaction costs, and meanwhile you run the risk of high salaries attracting people who don't care about the mission at all, who will unhelpfully dilute the group.

Whereas if you're thinking of the second thing, it seems great to offer high salaries. Working on the world's biggest problems should pay as much as working at a hedge fund! I would love to be able to whole-heartedly recommend high-impact jobs to, say, college acquaintances who feel some pressure to go into high-earning careers, not just to the people who are already in the top tenth of a percentile for commitment to altruism.

I really love the EA-community-as-a-support-network-for-people-who-feel-the-weight-of-the-world's-problems-and-are-trying-to-solve-them. I found Strangers Drowning a very moving read in part for its depiction of pre-EA-movement EAs, who felt very alone, struggled to balance their demanding beliefs with their personal lives, and probably didn't have as much impact as they would have had with more support. I want to hug them and tell them that it's going to be okay, people like them will gather and share their experiences and best practices and coping skills and they'll know that they aren't alone. (Even though this impulse doesn't make a lot of logical sense in the case of, say, young Julia Wise, who grew up to be a big part of the reason why things are better now!) I hope we can maintain this function of the EA community alongside the EA-community-as-the-increasingly-professional-project-of-recruiting-the-rest-of-the-world-to-work-on-those-problems-too. But to the extent that these two functions compete, I lean towards picking the second one, and paying the salaries to match.

I think this distinction is well-worded, interesting, and broadly correct.

I separately think there are a bunch of timesaving activities that people "in the network" can spend money on, though of course it depends a lot on whether you think the marginal EA direct work hour's value is closer to $10, $100, or $1000.

Even at $1000/h, spending substantially above $100k/year still feels kinda surprising to me, but no longer crazy, and I can totally imagine people who are smart about eking out effectiveness managing to spend that much or more.

Fwiw that doesn't seem true to me. I think there are many people who wouldn't donate the excess (or at least not most of it) but who are still best described as truly committed.

Thanks for writing this. I share some of this uneasiness - I think there are reputational risks to EA here, for example by sponsoring people to work in the Bahamas. I'm not saying there isn't a potential justification for this but the optics of it are really pretty bad.

This also extends to some lazy 'taking money from internet billionaires' tropes. I'm not sure how much we should consider bad-faith criticisms like this if we believe we're doing the right thing, but it's an easy hit piece, and it has already been done: e.g. a video attacked someone from the EA community running for Congress for being part-funded by Sam Bankman-Fried. (I'm deliberately not linking to it here because it's garbage.)

Finally, I worry about wage inflation in EA. EA already mostly pays at the generous end of nonprofit salaries, and some of the massive EA orgs pay private-sector level wages (reasonably, in my view - if you're managing $600m/year at GiveWell, it's not unreasonable to be well-paid for that). I've spent most of my career arguing that people shouldn't have to sacrifice a comfortable life if they want to do altruistic work - but it concerns me that entry level positions in EA are now being advertised at what would be CEO-level salaries at other nonprofits. There is a good chance, I think, that EA ends up paying professional staff significantly more to do exactly the same work to exactly the same standard as before, which is a substantive problem; and there is again a real reputational risk here.

Suppose there was no existing nonprofit sector, or perhaps that everyone who worked there was an unpaid volunteer, so the only comparison was to the private sector. Do you think that the optimal level of compensation would differ significantly in this world?

In general I'm skeptical that the existence of a poorly paid, fairly dysfunctional group of organisations should inspire us to copy them, rather than the much larger group of very effective orgs that do practice competitive, merit-based compensation.

I was struck by this argument so ran a Twitter poll.

71% of the responding EAs thought that EA charities should be more like for-profits.

59% of the responding non-EAs took the same view. (Though this group was small.)

Obviously this poll isn't representative so should be taken with a grain of salt.

A quick note about the use of "bad faith criticisms" — I don't think it's the case that every argument against "taking money from internet billionaires" is bad faith, where bad faith is defined as falsely presenting one's motives, consciously using poor evidence or reasoning, or some other intentional duplicitousness.

It seems perfectly possible for one to coherently and in good faith argue that EA should not take money from billionaires. Perhaps in practice you find such high-quality good faith takes lacking, but in any case I think it's important not to categorically dismiss them as bad faith.

Agreed. I wasn't clear in the original post but I particularly had in mind this one attack ad, which is intellectually bad faith.

I share these concerns, especially given the prevailing discrepancy in salaries across cause areas (e.g. ACE only just raised their ED salary to ~$94k, whereas several mid-level positions at Redwood are listed at $100k - $170k), which I imagine is likely to become more dramatic with money pouring specifically into meta and longtermist work. My concern here is that cause-impartial EAs are still likely to go for the higher salary, which could lead to an imbalance in a talent-constrained landscape.

I have the opposite intuition. I think it's good to pay for impact, and not just (e.g.) moral merit or job difficulty. It's good for our movement if prices carry signals about how much the work is valued by the rest of the movement. If anything I'd be much more worried about nonprofit prices being divorced from impact/results (I've tried to write a post about this like five times and gave up each time).

I think that I agree with many aspects of the spirit of this, but it is fairly unclear to me that organizations simply trying to pay market rates, to the extent possible, would produce this. I don't think funding is distributed across priorities according to the values of the movement as a whole (or even via some better conception of priorities where more engaged people were weighted more highly, etc.), and I think different areas in the movement have different philosophies around compensation, so there seem to be other factors keeping funding from being ideally distributed. It seems really unclear to me whether EA salaries currently carry signals about impact, as opposed to mostly telling us something about the funding overhang or the relative ease of securing funding in various spaces (which I think is to some extent uncorrelated with impact). To the extent that salaries do seem correlated with impact (which I think is possibly happening, but am uncertain about), I'm not sure the reason is that the EA job market is pricing in impact.

I'm pretty pro compensation going up in the EA space (at least to some extent across the board, and definitely in certain areas), but I think my biggest worry is that it might make it way harder to start new groups - the amount of seed funding a new organization needs to get going when the salary expectations are way higher (even in a well funded area) seems like a bigger barrier to overcome, even just psychologically, for entrepreneurial people who want to build something.

Though I also think a big thing happening here is that lots of longtermist/AI orgs are competing with tech companies for talent, while other organizations are competing with non-EA businesses that pay less than tech companies, so salary stratification is naturally going to happen.

I agree, pricing in impact seems reasonable. But do you think this is currently happening? If so, by what mechanism? I think the discrepancies between Redwood and ACE salaries are much more likely explained by norms at the respective orgs and funding constraints than by some explicit pricing of impact.

I agree the system is far from perfect and we still have a lot of room to grow. Broadly I think donors (albeit imperfectly) give more money to places they think are expected to have higher impact, and an org prioritizes (albeit imperfectly) having higher staffing costs if they think staffing on the margin is relatively more important to the org's marginal success.

I think we're far from that idealistic position now but we can and are slowly moving towards it.

Sometimes people in EA will target scalability and some will target cost-effectiveness. In some cause areas scalability will matter more, and in some cost-effectiveness will matter more. E.g. longtermists seem more focused on the scalability of new projects than those working on global health. Where scalability matters more, there is more incentive for higher salaries ("oh, we can pay twice as much and get 105% of the benefit – great"). As such I expect there to be an imbalance in salaries between cause areas.

This has certainly been my experience: the people funding my longtermist work have given me feedback that they don’t care about cost-effectiveness, and that if I can scale a bit quicker at higher cost I should do so, while the people paying me to do neartermist work take a more frugal approach to spending resources.

As such my intuition aligns with Rockwell that:

On the other hand, some EA folk might actively go for lower salaries, either in the view that they will likely have a higher counterfactual impact in roles that pay less, or because they are driven by a sense that self-sacrifice is important.

As an aside, I wonder if you (and OP) mean a different thing by "cause impartial" than I do. I interpret "cause impartial" as "I will do whatever actions have the most impact (subject to personal constraints), regardless of cause area." Whereas I think some people take it to mean a more freeform approach to cause selection that's more like "Oh, I don't care what job I do as long as it has the 'EA' stamp" (maybe/probably I'm strawmanning here).

I think that's a fair point. I normally mean the former (the impact-maximising one), but in this context I was probably reading it the way OP seemed to use it, more like the latter (the EA stamp one). Good to clarify what was meant here; sorry for any confusion.

I feel like this isn't the definition being used here, but when I use "cause neutral" I mean what you describe, and when I use "cause impartial" I mean something like an intervention that will lift all boats without respect to cause.

Thanks! That's helpful.

I think there's a problem with this. Salary as a signal of perceived impact may be good, but I'm worried some other incentives may creep in. Say you're paying a higher amount for AI safety work, and then another billionaire becomes convinced of the AI safety case and starts funding you too. You could use that money for actual impact, or you could just pay your current or future employees more. And I don't see a real way to tell the two apart.

I also share these worries.

A further and related worry I have is that this is anti-competitive: it makes critiquing the EA movement that much harder. If you're an 'insider' or 'market leader' EA organisation you (by definition) have the ability to pay these very high salaries. This means you can, to some degree, 'price out' challenger organisations who have new ideas.

These are very standard economic worries but, for some reason, I don't hear the EA landscape being described in these terms.

[own views etc]

I think the 'econ analysis of the EA labour market' has been explored fairly well; I highly recommend this treatment by Jon Behar. I also find myself (and others) commonly in the comment threads banging the drum for why it is beneficial to pay more, or why particular ideas for not doing so (or for paying EA employees less) are not good ones.

Notably, 'standard economic worries' point in the opposite direction here. On the standard econ-101 view, "Org X struggles as competitor Org Y can pay higher salaries", or "Cause ~neutral people migrate to 'hot' cause area C, attracted by higher pay" are desirable features, rather than bugs, of competition. Donors/'consumers' demand more of Y's product than X's (or more of C generally), and the price signal of higher salaries acts to attract labour to better satisfy this demand (both from reallocation within the 'field', and by incentivizing outsiders to join in). In aggregate, both workers and donors expect to benefit from the new status quo.

In contrast, trying to intervene in the market to make life easier for those losing out in this competition is archetypally (and undesirably) anti-competitive. The usual suggestion (implied here, but expressly stated elsewhere) is unilateral or mutual agreement between orgs to pay their employees less - or refrain from paying them more. The usual econ-101 story is this is a bad idea as although this can anoint a beneficiary (i.e. Those who run and donate to Org X, who feel less heat from Org Y potentially poaching their staff), it makes the market more inefficient overall, and harms/exploits employees (said activity often draws the ire of antitrust regulators). To cash out explicitly who can expect to lose out:

Also, on the econ-101 story, Orgs can't unfairly screw each other by over-cutting each other on costs. If a challenger can't compete with an incumbent on salary, their problem really is they can't convince donors to give it more money (despite its relatively discounted labour), which implies donors agreeing with the incumbent, not the challenger, that it is the better use of marginal scarce resources.

Naturally, there are corner cases where this breaks down: e.g. if labour supply were basically inelastic, upping pay would just waste money. None of these seem likely. Likewise, how efficient the 'EA labour market' is remains unclear, but if it is inefficient and distorted, the standard econ reflex would be to doubt that this could be improved by adding more distortions and inefficiencies. Also, as being rich does not mean being right, economic competition could distort competition in the marketplace of ideas. But even if the market results are not synonymous with the balance of reason, they are probably not orthogonal either. If animal-welfare-leaning Alice goes to Redwood over ACE, it also implies she's not persuaded that the intrinsic merit of ACE is so much greater as to warrant a large altruistic donation from her in the form of salary sacrifice; if Mike the mega-donor splashes the cash on AI policy but is miserly about mental health, this suggests he thinks the former is more promising than the latter. Even if the economic weighting (wealth) were completely random, this would noisily approximate equal-weight voting on the merits, which I'd guess weakly correlates with epistemic accuracy.

So I think Org (or cause) X, if it is on the wrong side of these dynamics, should basically either get better or accept the consequences of remaining worse, e.g.:

Appealing for subsidies of various types seems unlikely to work (as although they are in Org X's interest, they aren't really in anyone else's) and probably is -EV from most idealized 'ecosystem wide' perspectives.

[also speaking in a personal capacity, etc.]

Hello Greg.

I suspect we're speaking at cross-purposes and doing different 'econ 101' analyses. If the EA world were one of perfect competition (lots of buyers and sellers, competition of products, ease of entry and exit, buyers have full information, equal market share) I'd be inclined to agree with you. In that case, I would effectively be arguing for less competitive organisations to get subsidies.

That is not, however, the world I observe. Suppose I describe a market along the following lines. One or two firms consume over 90% of the goods whilst also being sellers of goods. There are only a handful of other sellers. The existing firms coordinate their activities with each other, including the sellers mostly agreeing not to directly compete over products. Access to the market and to information about the available goods is controlled by the existing players. Some participants fear (rightly or wrongly) that criticising the existing players or the structure of the market will result in them being blacklisted.

Does such a market seem problematically uncompetitive? Would we expect there to be non-trivial barriers to entry for new firms seeking to compete on particular goods? Does this description bear any similarity to the EA world? Unfortunately, I fear the answer to all three of the questions is yes.

So, to draw it back to the original point, offering very high salaries to staff is one way in which market incumbents might use their market power to 'price out' the competition. Of course, if one happened to think that it would be bad, all things considered, for that competition to succeed, then one might not mind this state of affairs.

Just to understand your argument - is the reputational risk your only concern, or do you also have other concerns? E.g. are you saying it's intrinsically wrong to pay such wages?

"There is a good chance, I think, that EA ends up paying professional staff significantly more to do exactly the same work to exactly the same standard as before, which is a substantive problem;"

At least in this hypothetical example it would seem naively ineffective (not taking into account things like signaling value) to pay people more salary for the same output. (And fwiw here I think qualities like employee wellbeing are part of "output". But it is unclear how directly salary helps in that area.)

Right, though I guess many would argue that by paying more you increase the output - either by attracting more productive staff or by increasing the productivity of existing staff (by allowing them to, e.g. make purchases that buy time). (My view is probably that the first of those effects is the stronger one.)

But of course the relative strength of these different considerations is tricky to work out. There is no doubt some point beyond which it is ineffective to pay staff more.

One thing you didn't mention is grant evaluation. I personally do not mind grants being given out somewhat quickly and freely in the beginning of a project. But before somebody asks for money again, they should need to have their last grant evaluated to see whether it accomplished anything. My sense is that this is not common (or thorough) enough, even for bigger grants. I think as the movement gets bigger, this seems pretty likely to lead to unaccountability.

Maybe more happens behind the scenes than I realize though, and there actually is a lot more evaluation than I think.

There should also be more transparency about how funding decisions are made, now that very substantial budgets are available. We don't want to find out at some point that many funding decisions have been made on less-than-objective grounds.

EA funding has reached a level where 'evaluating evaluations' should soon become an important project.

For me, the reputational risks that Jack Lewars mentioned are a big worry. How will the media portray effective altruism in the future? We're definitely going to be seen as less sympathetic in leftist circles, being funded by billionaires and all.

Another concern for me is how this will change what type of people we'll attract to the movement. In the past the movement attracted people who were willing to live on a small amount of money because they care so much about others in this world. Now I'm worried that there will be more people who are less aligned with the values, who are in it at least partly for the money.

In another way it feels unfair. Why do I get to ask for 10k with a good chance of success, while friends of mine struggling with money who aren't EA aligned can't? I don't feel any more special or deserving than them in a way. I feel unfairly privileged somehow.

There's also this icky feeling I get with accepting money: what if my project isn't better than cash transfers to the extreme poor? Even if the probabilities of success are high enough, the thought of just "wasting money" while it could have gone to extremely poor people massively improving their lives just saddens me.

I'm not trying to make logical arguments here for or against something, I'm just sharing the feelings and worries going through my head.

I think it's good that this is being discussed publicly for the world to see. Then at least it doesn't seem to non-EAs who read this that we're all completely comfortable with it. I'm still of the opinion that all this money is great and positive news for the world, and I'm very grateful for the FTX Future Fund, but it does come with these worries.

I appreciate that you're not trying to make logical arguments, but rather share your feelings. But in any event, my view is that this isn't an important consideration.

If they're doing something that's high-impact, then there might be such a case - but I assume you mean that they are not. And I think it's better to look at a global scale - and on such a scale, most people in the West are doing relatively well. So I don't think that's the group we should be worried about neglecting.

Can someone clarify this "taking money from billionaires is bad" thing? I know some people are against billionaires existing, but surely diverting money from them to altruistic causes would be the ideal outcome for someone who doesn't want that money siloed? The only reason I can think of (admittedly I don't know a lot about real leftism, economics, etc.) that someone wouldn't want to, assuming said billionaire isn't e.g. micromanaging for evil purposes, is a fear of moral contamination, or rather, social-moral contamination: being contaminated with the social rejection and animosity that the billionaire "carries" within said complaining group. To me this seems like a type of reputation management that is so costly as to be unethical/immoral. It's like, "I'm afraid people won't like me if I talk to Johnny, so I can't transport Johnny's fifty dollars to the person panhandling outside." Surely we can set that sort of thing aside for the sake of actual material progress? Am I missing something? Because if it essentially amounts to "Johnny is unpopular and if I talk to him I might become unpopular too," we shouldn't set a norm of operating under those fears. That seems cowardly.

Hi Jeroen, thank you for a wonderfully worded comment. I think this is an excellent overview of the different feelings of uneasiness people have surrounding the influx of funding within EA. I share most of these feelings, and know others who do.

The logical arguments these feelings relate to are another discussion (I have many thoughts); for now, I find it really interesting data to see that other people are experiencing feelings of uneasiness, and why.

My personal responses to these:

I don't have a satisfying answer to this that calms my worries, but one response could be that the damage done to the reputation of EA won't outweigh the good being done by all the money that goes to good causes.

Don't have a good answer to this. Perhaps just that I'm overestimating the scale of this happening.

Stefan Schubert worded it well: "I think it's better to look at a global scale - and on such a scale, most people in the West are doing relatively well. So I don't think that's the group we should be worried about neglecting."

This is just me being risk-averse. Even if an EV calculation works out, it's difficult to shake off the uncomfortable feeling of potentially being one of the unsuccessful projects in hits-based giving.

I generally feel that "reputational risk" explains far too much. You can say it all the time. I'd prefer we had a better sense of how damaging different sizes of scandal can be.

I think openly and transparently discussing EA money and its use is the opposite of a reputational risk. The more money there is, the more dangerous it becomes to handle it behind closed doors, and even being perceived as doing so is bad for EA.

The reputational risks are perhaps in sharing ideas that society at large would perceive as immoral, in being jerks to people, or in not being open about our points of uncertainty.

Here are the consensus statements on the polis poll.

I grew up in a relatively poor family in Italy. I learned English from scratch at 21. I graduated at 26 (much later than is typical in the UK/US). I was able to survive at uni thanks to scholarships and sacrifices. Exactly one year ago, I used to eat every day in a desolate university canteen in Milan because I couldn't afford to eat out more than twice a month.

Now I am seriously considering seven-figure funding, renting an office for my project's HQ in central London, and earning a salary that is much higher than the sum of all my family's salaries.

Long story short: yes, that could definitely make someone pretty anxious.

While I do not have anything to contribute to the conversation, I want to comment under my real name that I have experienced this same financial history and these same feelings. It is emotionally overwhelming to see how far one has come, it's socially confusing to suddenly be in a new socioeconomic class, and it's a computational burden to learn how to manage an income that is far greater than what is required for bare survival (by a US standard for bare survival, not a global one). I have actually sought therapy for this specific topic; there are people who specialize in it. In my opinion, the absolute first priority, if you can possibly manage it, is to get a "lay of the land" regarding the scope and detail of your own emotions and resulting biases, and to take concrete action toward regulating your emotions in any potentially highly consequential areas. (Some of your feelings around this may actually constitute trauma. Being poor, and the instability it induces, trains one to stay constantly alert for danger, dissociates one from one's unpleasant surroundings, etc. In my observation, trauma is the single most dangerous inter/personal force on the planet.) It is not only okay but desirable to slow down one's decision-making and regulate oneself, even if there is an opportunity cost. Reasoning under both ambiguity and (subjective) pressure is a very dangerous situation to be in, and I have concluded that it is best to address this psychological milieu before doing anything else, since impaired judgement has high and broad potential for negative impact.

If you want to talk about this in private, I am willing to do that as well. I know the reason I don't like to discuss this publicly is that there is a "preferential attachment" effect (in the network-science sense) where resources are concerned in many social settings, and I am reluctant to stigmatize myself as having ever been poor. Perhaps when I am further into my career, and have been extremely financially secure for longer than I was financially insecure, I will not have the same concerns about any signaling that could disrupt my acceptance within a higher social class. I encourage people who are already in that position to take on this burden, since it will endanger them much less.

I have long and often complained about the cowardice of people who would not respond to a true and vulnerable call from another in public, even when the person is trying to help the demographic of the silent person. I don't AT ALL want to post this comment and frankly dread this being a search result for my name, but I don't see a reason that I should be exempt from this moral duty to undersign your experience as being at least twice-anecdotal: a product (at least partially) of external factors that bear consideration, not something generated from within you alone.

Thank you for writing this. I found the solidarity moving. I don't know if I'll respond privately or not, but thank you.

Currently the visualisation on the pol.is questions looks like this. Anyone feel free to write a summary.

And the full report is here:

https://pol.is/report/r8rhhdmzemraiunhbmnmt

This is interesting but I have no idea how to interpret it.

I haven't used Polis before, but from looking at the report, I believe that Groups A and B (I don't seem to see C) are automatically generated based on people with similar sentiments (voting similarly on groups of statements). It seems like Group A is unconcerned about money and thinks more of it is good (for example, only 31% agree with "It's not that more money is bad, but is a bit unnerving that there seems to be so much more of it", compared with 88% in Group B), and Group B is concerned about money, which includes everything from concern about a small number of funders controlling the distribution of funding in EA to believing that personal sacrifice is an important part of EA (perhaps in contrast to wanting to offer people high salaries).

Typical caveats of it being a small and potentially unrepresentative sample size, but if I'm interpreting the data correctly, I think it's interesting that a lot of people seem to be concerned about money in general, rather than specific things. Perhaps there actually are more granular differences in opinion and there could be a way to highlight those more nuanced shared perspectives if the number of sentiment groups can be changed. Not sure what happened to Group C depicted in the screenshot.

Some times when I looked at the report there were two groups; at others there were three.

What I meant about interpretation is, which opinions are correlated and why? How would the different groups be characterized in general terms? Is there some demographic/professional/etc. axis that separates them? (Obviously we can only guess about the last one).

I think I already shared this comment on facebook with you, but here I am re-upping my complaints about polis and feature suggestions for ways that it would be cool to explore groups and axes in more detail: https://github.com/compdemocracy/polis/discussions/1368

(UPDATE: I got a really great, prompt response from the developers of Polis. Turns out I was misinterpreting how to read their "bullseye graph", and Polis actually provides a lot more info than I thought for understanding the two primary axes of disagreement.)

If you have your own thoughts after conducting various experiments with polis (I thought this was interesting and I liked seeing the different EA responses), perhaps you too should ping the developers with your ideas!

FFF is new, so that shouldn't be a surprise.

If the forum had a private section that only members could read, I would post this there.

I've moved this post to "personal blogpost", which might somewhat help with that. That said, I'm fine with having this post on the frontpage.

I don't mind it getting visibility, but I'd understand if people didn't want it visible to the public. If you think it's fine for the front page, leave it there. I'm unsure.

Thank you!

I posted a main comment but I'll say it explicitly here - I think it's good for this post and others like it to be public.

Just a short comment: I liked the Polis poll in this post a lot! I would like to see more polls like this, to get a better understanding of what "the EA community" thinks about certain topics instead of having to rely on anecdotes from EAs who are willing and motivated enough to write an EAF post. Adding a Polis poll might even become good practice for EAF posts where someone anecdotally describes their opinion. As soon as enough results come in, the author could even add some findings to their post (e.g. "[Poll Result: XYZ]").

I agree, though at the moment it takes quite a lot of effort to understand the results.

In hindsight, I would prefer to have put each of my points in the article as a separate comment. But I think the article would have gotten much less traction that way.

Yes, you need to measure value drift by standards other than living on little money. It may make sense that college students think they can live on [12k/20k] forever, but then they realize that 100k is also good: they can donate something like 25% and still feel accomplished, and they have access to so many services that let them focus on work and self-care. They can then inspire others to study hard or get outside their comfort zones, and maybe, after a few years of learning, interning, or helping out, those others can reach some 50/60k. Even then they can donate if they feel like it and get used to paying for basic efficiency-improving services, which other people are happy to provide, since it's a job [in the UK/paid in GBP]. [I may be confusing £ and $.] So it may be optimal to pay people skilled in EA-related disciplines slightly less than they could earn commercially, since they spent substantial less-paid or unpaid time gaining EA-related capabilities instead of developing the capacity to work commercially. That way they can focus, inspire others, and also donate without feeling bad about "taking too much."

There are different kinds of people. If you can pay, legally and in an accepted way, for a change in institutional focus, you are paying for connections at those institutions. For example, if you'd like Harvard to become more interested in impact, you hire some people from Harvard who could otherwise earn maybe $200k, and they influence the institution through their networks. This is great because academia influences the world order. You also have people who develop solutions and work for much less, such as charity entrepreneurs, especially in nations with higher PP than the UK. Then you have the officers who run the solutions and work for very little: maybe someone can distribute nets for $2k/year, or gladly liaise with local institutions for $0.2/hour in places with very high PP and especially poor alternative opportunities. You still need someone to coordinate them who can live in relative peace, with some personal security and stability paid for. So not everyone is getting large sums.

The funds welcome applications, but try applying: your project needs to be especially promising in terms of impact to get funded. For example, if you want to rollerblade, this could constitute a reputational risk for yourself (not really, but it may reduce the fund managers' ability to evaluate grants; or, again not really, but there may be colleagues who could help you develop your project before you apply for funding). Of course it is a joke, but the general reasoning applies. The funds' scopes enable self-selection of people who are able to solve some of the world's most important problems relatively independently; it may be just a coincidence that many of them are in EA (or thinking about effective, impartial impact-making is simply less prominent elsewhere). Thus, the funds are highly selective.

You need some deference to established desiderata, so as not to shove it in the faces of private and public companies that "yes, we just want to reduce your traditional power; we think that is more enjoyable even for you, but also, importantly, for everyone, because impact is just very cool and enjoyable." Do that and you would get blacklisted or incur reputational loss. Instead, you need to fund some companies that even contribute to the issues, so that people focus on these, especially if they are controversial and/or aggressive in gaining attention. At the very least, this is a way to entertain donors in the community who would otherwise think, "Well, it is dope to go to Mars, and what a shame to even talk about efficient poverty solutions and inclusion; every time someone brings up the topic I leave, since I dislike losing momentum." Of course, there would be an issue if the money "flies to Mars" year after year, not only early on; then it is just not so fun anymore. Therefore, it may be necessary to have fun giving to attention-grabbing causes that powerful individuals like, because this captures their attention and participation.

To an extent, the fund managers reflect the spirit of the community, so if you have an issue, it probably stems from something in the community's spirit, which you should feel free either to raise yourself or to consider already raised by others. In this way, fund managers are held accountable by the community for making good decisions, so that the money flies to where it makes the most positive, inclusive impact.