Ryan Kidd

Bio


- Co-Executive Director at ML Alignment & Theory Scholars Program (2022-present)

- Co-Founder & Board Member at London Initiative for Safe AI (2023-present)

- Manifund Regrantor (2023-present) | RFPs here

- Advisor, Catalyze Impact (2023-present) | ToC here

- Advisor, AI Safety ANZ (2024-present)

- Ph.D. in Physics at the University of Queensland (2017-2023)

- Group organizer at Effective Altruism UQ (2018-2021)

Give me feedback! :)


Hi, this is Ryan Kidd, answering on behalf of MATS Research!

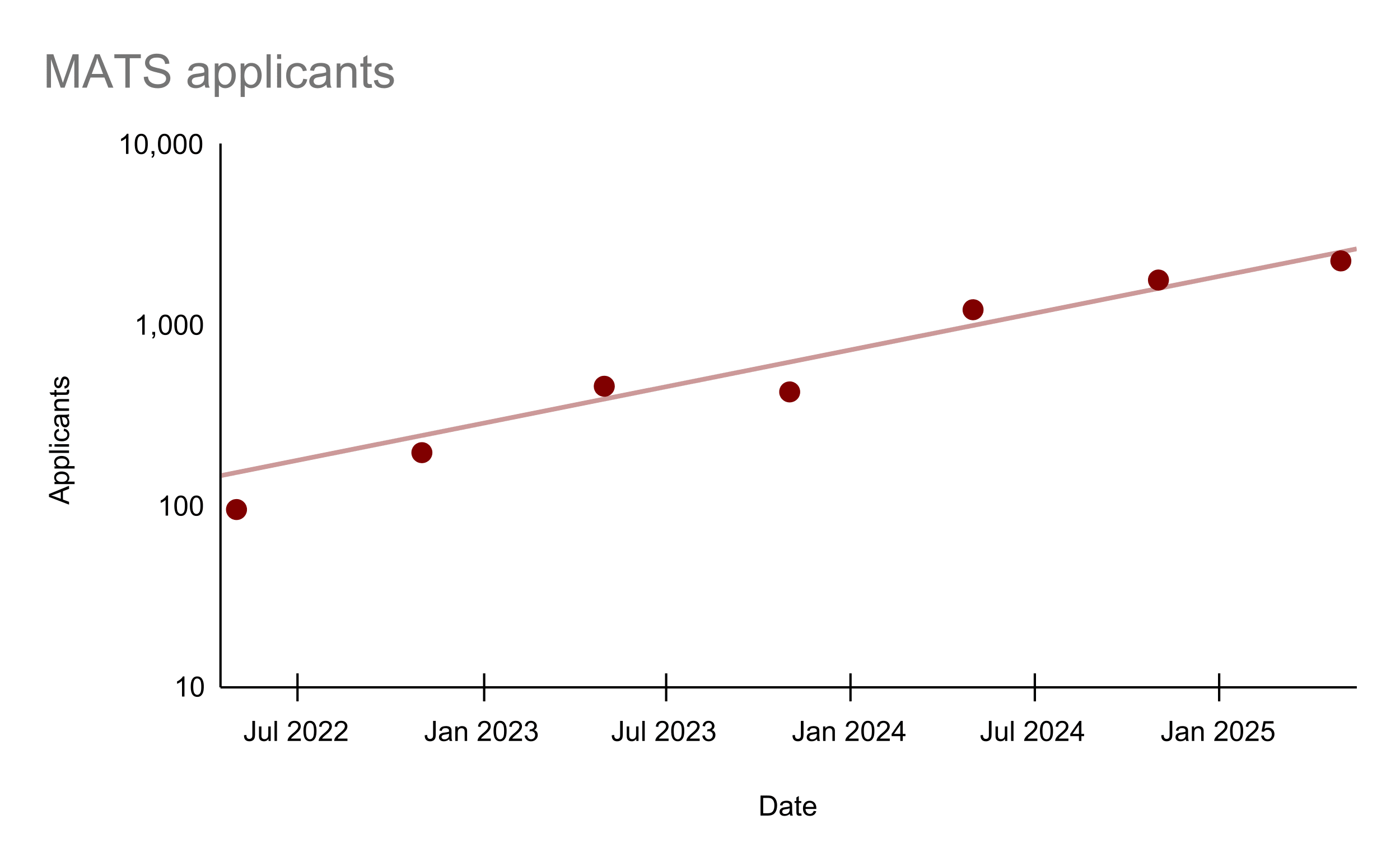

MATS is currently fundraising for our 2026 programs and beyond. We are the largest AI safety research fellowship and talent pipeline, supporting 100 fellows twice a year. Some impact stats:

- 446 alumni and 120+ arXiv papers (h-index 37) in 3.5 years.

- 80% of our pre-2025 alumni work on AI safety and 10% founded AI safety orgs or teams (e.g., Apollo, Timaeus).

- Participants in our last program rated it 9.4/10 on average.

- Former team members have gone on to (re)found Constellation's Astra Fellowship, manage Anthropic's AI safety external partnerships, and help other AI safety orgs scale.

We are well-funded by Coefficient Giving, but have big scaling plans! We want to run an additional fellowship in Fall 2026, expand Summer and Winter 2026 programs to 120 fellows each, and launch a 1-2 year residency program for senior researchers. Each additional fellow costs $40.8k.
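The arithmetic behind these plans can be sketched as below. This is a rough illustration, not MATS' budget. It assumes the current Summer and Winter cohorts are the 100 fellows stated above and that the $40.8k marginal cost per fellow stays constant. The size of the new Fall 2026 fellowship is not given here, so `fall_cohort` is a hypothetical parameter. The residency program is excluded because its per-person cost isn't stated.

```python
COST_PER_FELLOW_USD = 40_800  # "Each additional fellow costs $40.8k"
CURRENT_COHORT = 100          # fellows per program, run twice a year
TARGET_COHORT = 120           # planned Summer and Winter 2026 cohort size


def expansion_cost(fall_cohort: int = 0) -> int:
    """Marginal cost in USD of the expansion plans.

    fall_cohort is a HYPOTHETICAL size for the new Fall 2026 fellowship;
    assumes a constant per-fellow cost and excludes the residency program.
    """
    # Two existing programs each grow from 100 to 120 fellows.
    extra_fellows = 2 * (TARGET_COHORT - CURRENT_COHORT) + fall_cohort
    return extra_fellows * COST_PER_FELLOW_USD


print(expansion_cost())    # 40 extra fellows -> 1632000
print(expansion_cost(60))  # with a hypothetical 60-fellow Fall cohort -> 4080000
```

Expanding Summer and Winter alone adds 40 fellows, or about $1.63M. Any Fall cohort adds $40.8k per fellow on top of that.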

Some testimonials:

- "Apollo almost certainly would not have happened without MATS." Marius Hobbhahn (CEO, Apollo Research)

- "It's my number one recommendation to people considering work on AI safety." Jesse Hoogland (Executive Director, Timaeus)

Please reply here or contact us if you have any questions!

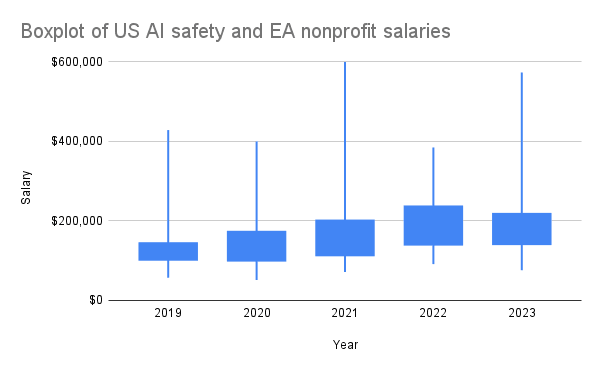

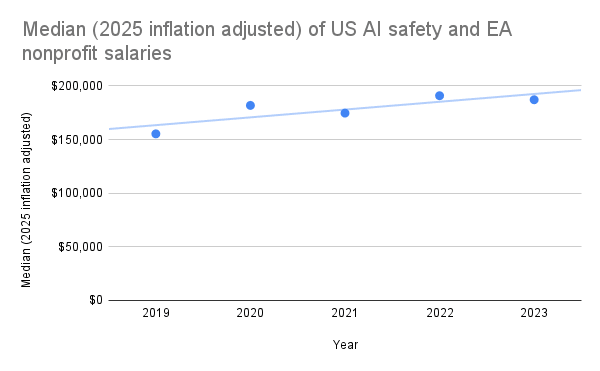

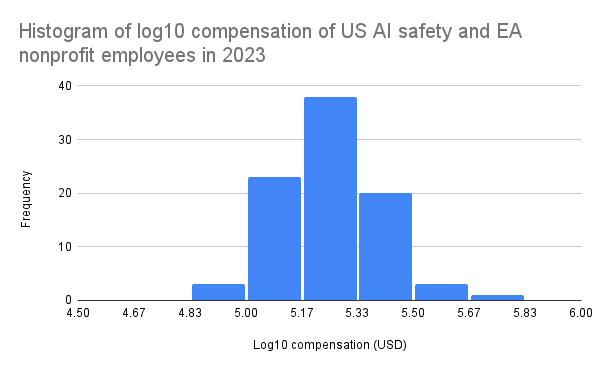

As part of MATS' compensation reevaluation project, I scraped the publicly declared employee compensation from ProPublica's Nonprofit Explorer for many AI safety and EA organizations (data here) for 2019-2023. US nonprofits are required to disclose compensation information for certain highly paid employees and contractors on their annual Form 990 tax return, which becomes publicly available. This includes compensation for officers, directors, trustees, key employees, and highest-compensated employees earning over $100k annually. Therefore, my data excludes many individuals earning under $100k, but this doesn't seem to affect the yearly medians much: the data seems to follow a lognormal distribution whose mode (~$178k in 2023, for example) sits well above the $100k cutoff, so relatively little of the distribution is missing.
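A small calculation can check why the $100k reporting threshold barely moves the median. This is a sketch under stated assumptions, not an analysis of the actual data. It assumes compensation is exactly lognormal and that the ~$178k mode is the mode of the full distribution. The spread `sigma` of log-compensation is a hypothetical input; none of the values below is estimated from the scraped data. For a lognormal, mode = exp(mu - sigma²), so mu follows from the mode and sigma. The median of the reported (truncated) data then solves F(x) = F(c) + (1 - F(c))/2.

```python
import math
from statistics import NormalDist

CUTOFF = 100_000  # Form 990 reporting threshold described above
MODE = 178_000    # approximate 2023 mode quoted above


def truncation_effect(sigma: float, mode: float = MODE, cutoff: float = CUTOFF):
    """Return (share below cutoff, true median, median of reported data).

    Assumes log-compensation ~ Normal(mu, sigma); sigma is HYPOTHETICAL.
    """
    mu = math.log(mode) + sigma ** 2  # lognormal mode = exp(mu - sigma^2)
    log_dist = NormalDist(mu, sigma)
    share_below = log_dist.cdf(math.log(cutoff))
    true_median = math.exp(mu)
    # Median of the distribution conditioned on exceeding the cutoff.
    truncated_median = math.exp(log_dist.inv_cdf(0.5 + share_below / 2))
    return share_below, true_median, truncated_median


for sigma in (0.3, 0.4, 0.5):
    share, med, trunc_med = truncation_effect(sigma)
    print(f"sigma={sigma}: {share:.1%} below cutoff, "
          f"median shifts up by {trunc_med / med - 1:.1%}")
```

For these illustrative spreads, only a few percent of the distribution falls below $100k, and the reported median overstates the true median by a few percent at most. A much wider spread would make the truncation matter more.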

I generally found that AI safety and EA organization employees are highly compensated, albeit inconsistently across similar-sized organizations for equivalent roles (e.g., Redwood and FAR AI). I speculate that this is primarily due to differences in organizational funding, but inconsistent compensation policies may also play a role.

I'm sharing this data to promote healthy and fair compensation policies across the ecosystem. I believe that MATS salaries are quite fair and reasonably competitive after our recent salary reevaluation, for which we also used Payfactors HR market data for comparison. If anyone wants to do a more detailed study of the data, I highly encourage it!

I decided to exclude OpenAI's nonprofit salaries, as I didn't think OpenAI counted as an "AI safety nonprofit" and its highest-paid current employees are definitely employed by the LLC. I decided to include Open Philanthropy's nonprofit employees, even though its most highly compensated employees are likely those under the Open Philanthropy LLC.

If I were building a grantwriting bootcamp, my primary concerns would be:

- Where will successful grantees work?

    - I've found that independent researchers benefit greatly from a shared office space and community, which provide social connection and high-quality peer feedback and centralize operations costs.

    - Current AI safety offices seem to be overflowing. We likely need more high-capacity AI safety offices to support the influx of independent researchers from Open Phil's RFPs.

    - I think that, in general, employment in a highly effective organization is more impactful than independent research for most projects and researchers. While I greatly support the new Open Phil RFPs, I hope that more of their grants go towards setting up highly effective organizations, such as nonprofit FROs, that can absorb and scale talent.

    - I see the primary benefit of the MATS extension program as providing further research mentorship (albeit with more accountability and autonomy than the main program) over longer time horizons for completing research projects. The infrastructure we provide is significant, and increasing the number of independent researchers without also scaling long-term support systems will likely yield suboptimal results.

- How will successful grantees obtain mentorship and high-quality feedback loops?

    - Even with an optimal project proposal, emerging researchers seem to benefit substantially from high-quality mentorship, particularly over the course of a research project. I do not believe that all of this support should be front-loaded.

    - I would support an accompanying long-term peer support or mentorship program after the grantwriting bootcamp. I apologize if you were already planning this!

- Who will employ grantees at the conclusion of their research?

    - This is a significant question for MATS as well. I currently believe that high-quality research during the program is, on its own, a strong enough output to justify the cost. However, ideally, most MATS alumni would find employment post-program. The main roadblocks to this employment seem to be software engineering skills (which points to ARENA-like coding bootcamps as a solution) and high-quality peer-reviewed publications (which usually need strong mentorship).

    - At the moment, I don't think "better grant proposals" is a significant bottleneck to MATS alumni getting jobs. Rather, I think coding skills and high-quality publications are the limiting factors. Also, I think there are far too few jobs to go around compared to the scale of the AI safety problem, so I also support more startup accelerators.

Thanks for publishing this, Arb! I have some thoughts, mostly pertaining to MATS:

Why do we emphasize acceleration over conversion? Because we think that producing a researcher takes a long time (with a high drop-out rate), often requires apprenticeship (including illegible knowledge transfer) with a scarce group of mentors (with a high barrier to entry), and benefits substantially from factors such as community support and curriculum. Additionally, MATS' acceptance rate is ~15%, and many rejected applicants are very proficient researchers or engineers, including some with AI safety research experience, who can't find better options (e.g., independent research is worse for them). MATS scholars with prior AI safety research experience generally believe the program was significantly better than their counterfactual options, or was critical for finding collaborators or co-founders (alumni impact analysis forthcoming). So, the appropriate counterfactual for MATS and similar programs seems to be, "Junior researchers apply for funding and move to a research hub, hoping that a mentor responds to their emails, while orgs still struggle to scale even with extra cash."