The COVID-19 pandemic has highlighted the continuing vulnerability of our civilization to serious harm from novel diseases, but it has also highlighted that our civilization's wealth and technology offer unprecedented ability to contain pandemics. Logical extrapolation of DNA/RNA sequencing technology, physical barriers and sterilization, and robotics points the way to a world in which any new natural pandemic or use of biological weapons can be immediately contained, at increasingly affordable prices. These measures are agnostic as to the nature of pathogens, low in dual-use issues, and can be fully established in advance, and so would seem to mark an end to any risk period for global catastrophic biological risks (GCBRs). An attainable defense-dominant 'win condition' for GCBRs means that we should think about GCBRs more in terms of a possible 'time of perils' and not an indefinite risk that sets a short life expectancy for our civilization.

Diagnostic technology is advancing towards cheap universal detection of novel pathogens

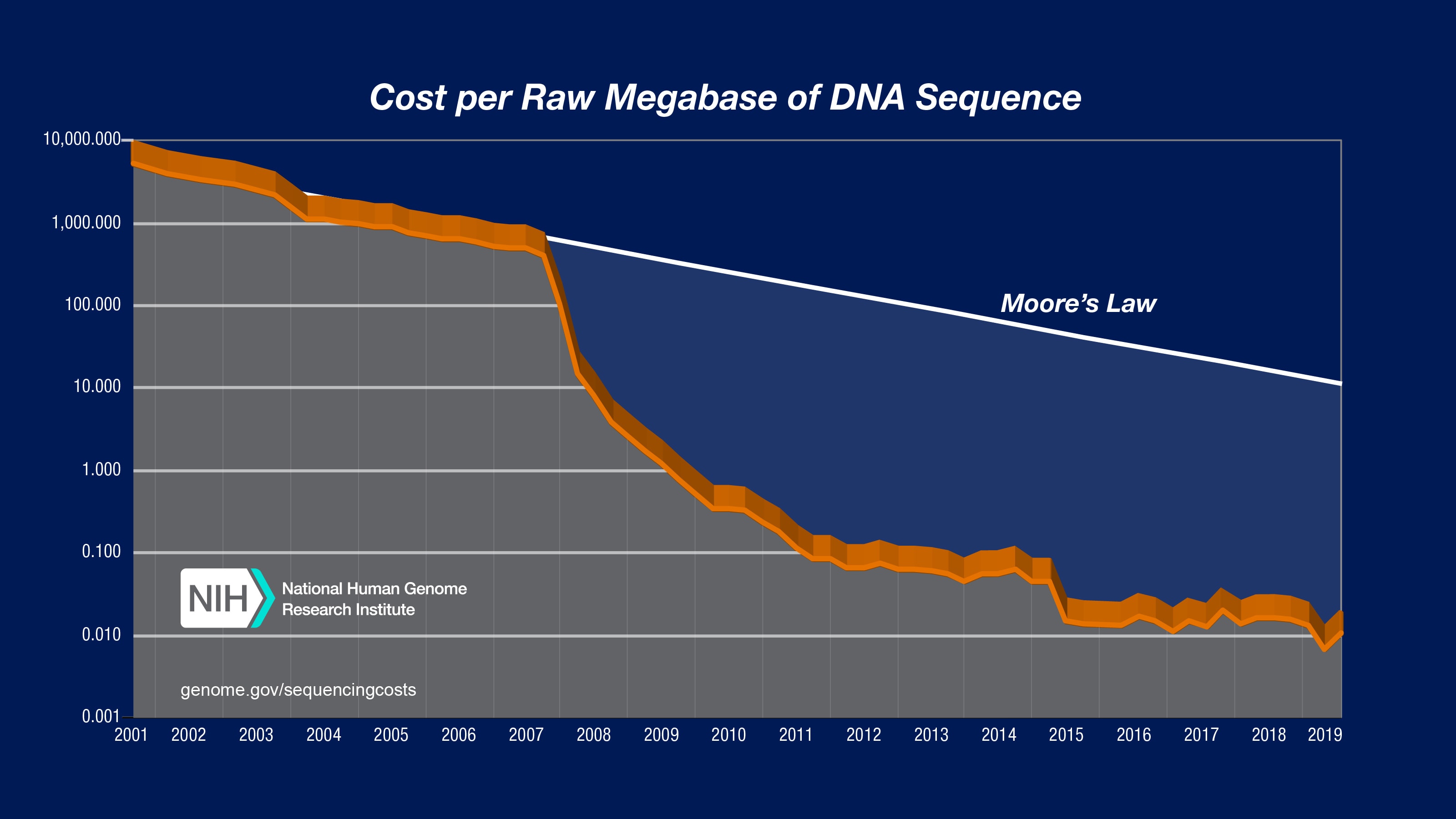

The technology of DNA sequencing has been one of the most rapidly advancing in biology, as reported by the National Human Genome Research Institute:

The marginal cost of production is likely less, as the market leader, Illumina, has market power for the moment and reaps high profit margins while restricting the functionality of the systems it sells. A number of competitors are working to contest its dominance with new technologies.

Sequencing technologies can be used to detect any pathogenic DNA (or RNA) sequence, including previously unseen organisms: the field of metagenomics studies collections of genetic material from mixed communities of organisms. Researchers have already demonstrated sequencing and metagenomic methods to monitor sewage systems for antimicrobial resistance genes in bacteria and detect the presence of poliovirus in a community for eradication, and advocated for the creation of global sewage surveillance systems (of the sort that have helped track SARS-CoV-2 this year), with waste. Such capabilities can be used for surveillance in hospitals, airports, etc.

The exponential decline in sequencing prices appears likely to eventually bring costs down to the point where sequencing is no longer a bottleneck for surveillance, not just at the regional or community level but for individual buildings (including airports and ultimately homes), e.g. by sequencing the condensation pan in HVAC systems. Cheaper sequencing also helps to detect genetic material at lower concentrations, enabling earlier warning and detection in asymptomatic samples. Additional improvements would be needed for efficient sample acquisition and preparation, especially automation to eliminate dependency on skilled workers, but these seem eventually solvable if there is a large market. Combined with routine testing of medical samples in hospitals, this could give quick warning of the spread of a new organism and flag it for close inspection of pathogenicity (although public health institutions would need to actually respond and investigate any spreading new organisms identified).

Blanket coverage of flexible or universal tests can affordably crush human-to-human transmission if adequate capacity is maintained

Attempts to contain the SARS-CoV-2 pandemic have been hindered by substantial asymptomatic and presymptomatic transmission. With knowledge of who is infected, strict isolation of infected people and their contacts can help to slash the effective reproduction number of a pathogen. For instance, in the Italian town of Vò, universal testing found 3% of people infected, a figure cut to 0.3% ten days later and then to zero. With a disease as damaging as COVID-19, frequently performing such blanket tests would easily pass cost-benefit tests at normal prices, as Paul Romer has called for. When isolation measures cost more than 10% of economic output over a year, it's worth spending 10% of output, trillions of dollars, to stop a plague. The current pandemic has shown that implementation of, and compliance with, testing and isolation are imperfect and vary greatly with government policy and other factors, but some countries have managed high performance, and overall the response has been enormous compared to previous pandemics. For a GCBR pathogen more than 100x as lethal, with a higher effective reproduction number and more asymptomatic transmission, the cost-benefit would be even more favorable, justifying spending most of world output.
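The link between isolation and the effective reproduction number can be illustrated with a deliberately crude sketch. It assumes a single homogeneous population in which some fraction of onward transmission is prevented by finding and isolating infected people; the function names and the example value of 2.5 for the basic reproduction number are hypothetical placeholders, not estimates from this post.

```python
def effective_r(r0: float, fraction_transmission_blocked: float) -> float:
    """Effective reproduction number when a given fraction of onward
    transmission is prevented (e.g. by testing plus isolation).

    Simplifying assumption: every infection contributes equally to spread.
    """
    if not 0.0 <= fraction_transmission_blocked <= 1.0:
        raise ValueError("fraction_transmission_blocked must be in [0, 1]")
    return r0 * (1.0 - fraction_transmission_blocked)


def min_fraction_to_block(r0: float) -> float:
    """Smallest fraction of transmission that must be blocked to push the
    effective reproduction number down to 1 (the point where an outbreak
    stops growing)."""
    if r0 <= 1.0:
        return 0.0
    return 1.0 - 1.0 / r0


# Hypothetical basic reproduction number, chosen only for illustration.
EXAMPLE_R0 = 2.5

print(min_fraction_to_block(EXAMPLE_R0))
for blocked in (0.3, 0.6, 0.9):
    print(blocked, effective_r(EXAMPLE_R0, blocked))
```

Under these assumptions, a pathogen with a higher reproduction number needs a larger share of transmission blocked, which is why imperfect detection of asymptomatic cases matters so much for containment.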

So we should ask whether continued massive falls in sequencing/diagnostic costs and increasing attention to pandemic risks will lead to enough (localized, as opposed to centralized) sequencing capacity being built during non-pandemic times to enable continuous and ubiquitous surveillance for new pathogens, including in the spaces and objects humans come into contact with. Extrapolating falling sequencing costs out a few decades suggests this will be within reach for a minute proportion of global output, and sequencing capacity is valuable for many medical uses, so it seems probable to me that many nations will have the necessary capacity by 2050, and that the world overall will have appropriate levels of capacity, although there may be distributional issues.
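To make the shape of that extrapolation concrete, here is a minimal sketch of a constant-halving-time projection. The halving time of two years is a hypothetical placeholder rather than a measured figure, and costs are expressed relative to today's cost so that no dollar amounts are implied.

```python
def projected_relative_cost(halving_years: float, years_ahead: float) -> float:
    """Cost at a future date as a fraction of today's cost, assuming the
    cost halves every `halving_years` years (a simple exponential decline)."""
    if halving_years <= 0:
        raise ValueError("halving_years must be positive")
    return 0.5 ** (years_ahead / halving_years)


# Hypothetical halving time, for illustration only.
ASSUMED_HALVING_YEARS = 2.0

for years_ahead in (10, 20, 30):
    fraction = projected_relative_cost(ASSUMED_HALVING_YEARS, years_ahead)
    print(f"{years_ahead} years ahead: {fraction:.6f} of today's cost")
```

The conclusion is sensitive to the assumed halving time: a slower decline would push the point of ubiquitous affordability further out, and the trend itself need not continue.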

Could rich countries live at safety standards inspired by BSL-4?

The U.S. Centers for Disease Control and Prevention defines four biosafety levels. At BSL-4, the highest safety level, workers wear positive pressure suits with heavy sterilization on entry and exit, while powerful filtration and sterilization systems along with negative air pressure prevent pathogens from escaping by air. These facilities do suffer accidents, but at a rate of one per hundreds of worker-years, which could be driven down further by large-scale studies and investments. The strain could be greater when blocking threats from outside rather than inside, but I think it's a reasonable ballpark for overkill defenses inspired by BSL-4.

At current production scales "cost per gross square foot for a BSL-4 lab is in the range of $700 to $1,200" alongside various restrictions on the kinds of design that are compatible. Meanwhile, the median home price in San Francisco, one of the world's most expensive cities, is near $1,000 per square foot. The San Francisco housing market drives out many people with low incomes for places with cheaper housing, but American housing consumption is exceptionally high, at over 2000 square feet per capita, vs ~800 for the UK, and ~100 for India.

Another data point for this is the cost (decades ago) of measures to protect children with severe combined immunodeficiency (who usually die within a year from severe infections without special measures) via sterilized environments. [Thanks to Claire Zabel for this point.]

The cost of constructing buildings to BSL-4 standards is also greatly elevated by limited production runs and the need to amortize the costs of regulatory approval: production at scale for housing and workspaces would likely slash costs severalfold. However, costs would greatly expand with attempts to include land-intensive industries such as agriculture (with thousands of square feet of BSL-4 greenhouses to feed people). Those costs would be reduced insofar as telepresence, hazmat suits, and tiered safety measures could be used for lower priority areas, and food or goods produced in less safe environments could be sterilized before contact with humans.

My guess is that if the world had years of advance warning of a GCBR that required BSL-4 safety conditions, some rich countries could manage this using today's technologies and economies of scale, but this would require WWII mobilization levels, using most economic output for the adjustments. But such expenditures would not be made on the basis of speculation about future risks at a national level, so there would likely only be a limited number of facilities with such protection built in advance of a catastrophe.

How could the cost-benefit change such that societies would build broad BSL-4 protections in advance of a GCBR? One path would be greatly increased wealth, e.g. given advanced artificial intelligence and robotics, total wealth could rise by orders of magnitude, and the relevant technologies improve in price. Under those conditions atmospheric separation of buildings might be justified just to improve air quality, and a serious threat of GCBR could suffice. Robots, controlled by AI or telepresence, could also carry on jobs requiring physical presence without risk of infection, and improve the pace of scaleup of production in the event of a disaster.

Investment might also increase in response to GCBRs becoming a more serious threat, a negative feedback on potential biorisk levels: if perceived risks become very high then robust countermeasures will be deployed by larger and larger shares of the world.

Low dual use risk

A general problem with biosecurity is dual use research: if we think that biothreats largely lie with future discoveries in biotechnology, then general biotechnology will increase capabilities for both defense and offense. It is plausible that in the limit advances in biotechnology will overwhelmingly favor the defender, but not guaranteed, and net risk may be increased in the interim. Dual-use risk can substantially attenuate the biosecurity value of R&D: a technology with a 60% chance of providing a benefit of x and a 40% chance of a harm of -x has an expected benefit of 0.2x.
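The arithmetic behind that example can be checked with a short sketch. The function name is my own, and the comparison against a purely defensive technology (same chance of benefit, no harm) is an added illustration rather than a claim from the text.

```python
def expected_net_benefit(p_benefit: float, benefit: float, harm: float) -> float:
    """Expected value of a technology that yields `benefit` with probability
    `p_benefit` and otherwise causes a loss of magnitude `harm`."""
    if not 0.0 <= p_benefit <= 1.0:
        raise ValueError("p_benefit must be in [0, 1]")
    return p_benefit * benefit - (1.0 - p_benefit) * harm


x = 1.0  # work in units of x

# The dual-use case from the text: 60% chance of +x, 40% chance of -x.
dual_use = expected_net_benefit(0.6, benefit=x, harm=x)

# Illustrative comparison: same chance of benefit, but no downside.
defense_only = expected_net_benefit(0.6, benefit=x, harm=0.0)

print(round(dual_use, 3), round(defense_only, 3))
```

In this toy comparison the dual-use downside cuts the expected value from 0.6x to 0.2x, and the expected value reaches zero once the chance of harm matches the chance of benefit.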

Advances in sequencing do boost biotechnology innovation generally (which may include dangerous innovations), but because of the small size of pathogen genomes, and research vs surveillance contexts, sequencing would probably already be fairly abundant for illegal bioweapons programs. Cheaper and improved BSL-4+ protective equipment and buildings, by reducing the risk of accidental release per capita, might reduce the disincentive to bioweapons research from accidents (which could overwhelm direct reductions of accidental release and resilience to release).

Nonetheless, both of these approaches seem relatively low in dual-use risk compared to approaches that require detailed understanding of all possible pathogenic attacks to defend against, and thus generate information about those attacks as well as any for which defense fails.

Biorisk and the 'time of perils'

If there were a fixed 0.05% risk each year of a GCBR, then the likelihood of an eventual event approaches 1 over the millennia. For the extreme case of an extinction event, that risk would set a civilizational life expectancy in the thousands of years. On the other hand, if risk levels eventually decline to ~0, then life expectancy of a civilization thereafter could be trillions of years (depending on other sources of mortality), so per Toby Ord averting extinction risks is most valuable when there is a limited 'time of perils' when risk is high, followed by a substantial probability of a stable low-risk regime.
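A rough sketch of this reasoning, assuming risk is independent from year to year. The 0.05% annual figure comes from the paragraph above; the 100-year length of the hypothetical 'time of perils' is a placeholder chosen only for illustration.

```python
def survival_probability(annual_risk: float, years: int) -> float:
    """Chance of getting through `years` consecutive years with no event,
    given a constant, independent annual risk."""
    return (1.0 - annual_risk) ** years


def expected_years_to_event(annual_risk: float) -> float:
    """Mean waiting time until the first event under a constant annual
    risk (the mean of a geometric distribution)."""
    return 1.0 / annual_risk


ANNUAL_RISK = 0.0005  # the 0.05% per year used in the text

# Indefinite constant risk: an event becomes near-certain over millennia.
for horizon in (100, 1_000, 10_000):
    p_event = 1.0 - survival_probability(ANNUAL_RISK, horizon)
    print(f"P(event within {horizon} years) = {p_event:.3f}")
print("Expected years to first event:", expected_years_to_event(ANNUAL_RISK))

# Time of perils: the same risk for a limited window, then ~0 afterwards.
PERIL_YEARS = 100  # hypothetical window length, for illustration only
print("P(surviving the window) =", survival_probability(ANNUAL_RISK, PERIL_YEARS))
```

The contrast is the point: with permanent risk the chance of eventual survival tends to zero, whereas with a bounded window the chance of reaching the low-risk regime stays fixed at the probability of surviving that window.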

Universal detection technologies and physical barriers/sterilization suggest there is a ~0 biorisk win state attainable. If one expects advanced artificial intelligence this century, then the possible time window for serious biorisk is limited to the period between the possible development of biothreats and their mootness through the deployment of known robust countermeasures. Given the low observed rates of WMD war between states and the barriers to terrorists inventing and using cutting-edge WMD (accidental release from bioweapons programs or publication of dangerous bioweapon recipes may be more likely, but can at least be reduced nonadversarially), passing through some years or decades of vulnerability safely seems probable. And if improvement and deployment of robust countermeasures proceeds quickly enough relative to increases in biothreats, there may be no time of perils with respect to biological risks.