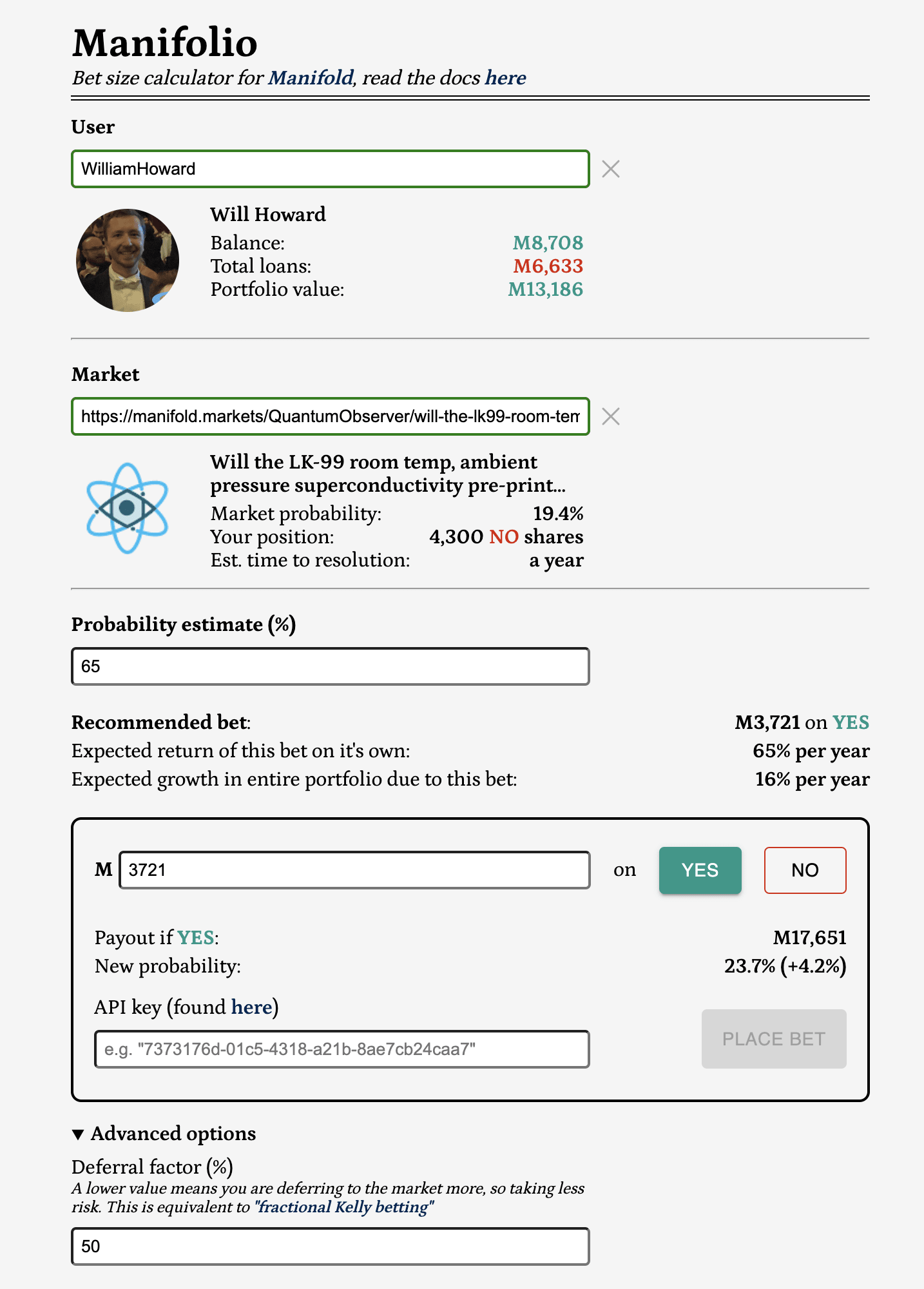

I've made a calculator for sizing bets correctly on Manifold. You enter the market and your estimate of the true probability, and it tells you how much to bet according to the Kelly criterion.

“The right amount to bet according to the Kelly criterion” means maximising the expected logarithm of your wealth.
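To make the objective concrete (my own notation, not necessarily how Manifolio writes it): suppose you have wealth W, believe YES has probability p, and stake x on YES, receiving s(x) shares that each pay out 1 if the market resolves YES. The Kelly stake is the x that maximises

$$
\mathbb{E}[\log W'] = p \,\log\bigl(W - x + s(x)\bigr) + (1 - p)\,\log\bigl(W - x\bigr).
$$

If YES can be bought at a fixed price \(\pi\), then \(s(x) = x/\pi\), and setting the derivative to zero gives the familiar fixed-odds fraction

$$
f^* = \frac{x^*}{W} = \frac{p - \pi}{1 - \pi} = p - \frac{1 - p}{b}, \qquad b = \frac{1 - \pi}{\pi}.
$$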

There is a simple formula for this when a bet has fixed odds, but it doesn't work well on prediction markets in general, because the market moves in response to your bet. Manifolio accounts for this, along with other factors such as the risk from other bets in your portfolio. I've aimed to make it simple and robust, so you can focus on estimating the probability and trust that it sizes the bet correctly.
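A minimal sketch of why the fixed-odds formula over-bets when the price moves. It assumes a simplified constant-product market maker: a pool of y YES and n NO shares with y·n held constant, YES probability n/(y + n), equal weights and no fees. This is loosely modelled on, but not the same as, Manifold's actual mechanism, and it is not Manifolio's code; all function names are hypothetical. The sketch numerically maximises the expected-log objective and compares the result with the closed-form fixed-odds stake.

```python
import math


def shares_for_stake(y: float, n: float, x: float) -> float:
    """YES shares received for staking x in a simplified constant-product pool.

    The stake mints x YES and x NO shares into the pool (y YES, n NO); YES
    shares are then paid out to the bettor until y * n is back to its old value.
    """
    k = y * n
    return (y + x) - k / (n + x)


def expected_log_wealth(wealth: float, p: float, y: float, n: float, x: float) -> float:
    """Expected log wealth after staking x on YES, if YES has probability p."""
    if_yes = wealth - x + shares_for_stake(y, n, x)  # each share pays 1 on YES
    if_no = wealth - x                                # shares are worthless on NO
    return p * math.log(if_yes) + (1 - p) * math.log(if_no)


def kelly_stake_cpmm(wealth: float, p: float, y: float, n: float, iters: int = 200) -> float:
    """YES stake maximising expected log wealth in the pool (0 if YES isn't +EV)."""
    price = n / (y + n)  # current probability of YES in this simplified model
    if p <= price:
        return 0.0
    lo, hi = 0.0, wealth * (1 - 1e-9)  # keep log(wealth - x) finite
    for _ in range(iters):
        # Ternary search: the objective is concave in x, so drop the worse third.
        m1 = lo + (hi - lo) / 3
        m2 = hi - (hi - lo) / 3
        if expected_log_wealth(wealth, p, y, n, m1) < expected_log_wealth(wealth, p, y, n, m2):
            lo = m1
        else:
            hi = m2
    return (lo + hi) / 2


def kelly_stake_fixed_odds(wealth: float, p: float, price: float) -> float:
    """Closed-form Kelly stake when YES can be bought at a fixed price."""
    return max(0.0, wealth * (p - price) / (1 - price))


print(kelly_stake_fixed_odds(1000, 0.7, 0.5))  # about 400
print(kelly_stake_cpmm(1000, 0.7, 100, 100))   # much less: a thin pool moves against you
print(kelly_stake_cpmm(1000, 0.7, 1e7, 1e7))   # deep pool: close to the fixed-odds stake
```

Ternary search is used only because the objective is concave in the stake here; a real calculator would also need to handle fees, NO bets and the rest of the portfolio.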

You can use it here (with a market prefilled as an example), or read a more detailed guide in the GitHub README. It's also available as a Chrome extension, which currently has to be installed in a slightly roundabout way (instructions are also in the README). I'll update here when it's approved in the Chrome Web Store.

EDIT: Good news! The extension has now been approved and can be installed from the web store.

Why bet Kelly (redux)?

Much ink has been spilled on why maximising the logarithm of your wealth is a good idea. I’ll just give a brief pitch for why it is probably the best strategy, both for you and for “the good of the epistemic environment”.

For you

- Given a specific wealth goal, it minimises the expected time to reach that goal compared to any other strategy.

- It maximises wealth in the median (50th percentile) outcome.

- Furthermore, for any particular percentile, it gets arbitrarily close to being the best strategy as the number of bets grows very large. So if you are about to make 100 coin-flip bets in a row, even if you knew you were going to get the 90th-percentile luckiest outcome, the optimal amount to bet would still be close to the Kelly amount (just marginally higher). In my opinion this is the most compelling self-interested reason: whether you get very lucky or very unlucky, it’s never far off the best strategy.

(All of the above hold in the limit of a large number of repeated bets.)
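The percentile claim can be checked with a small calculation for even-money coin flips, assuming you win each flip with probability p = 0.6 (an illustrative choice, not from the post). If you win k of n bets while staking a fixed fraction f each time, you end with (1 + f)^k (1 - f)^(n - k) times your starting wealth, which is maximised at f = (2k - n)/n. Since that wealth rises with k whenever f > 0, the best fraction for a given percentile is the formula evaluated at the matching percentile of the number of wins. The Kelly fraction here is 2p - 1 = 0.2; the function names are hypothetical.

```python
import math


def binomial_quantile(n: int, p: float, q: float) -> int:
    """Smallest k with P(K <= k) >= q for K ~ Binomial(n, p).

    The pmf is computed in log space so that large n does not underflow.
    """
    log_n_fact = math.lgamma(n + 1)
    cdf = 0.0
    for k in range(n + 1):
        log_pmf = (log_n_fact - math.lgamma(k + 1) - math.lgamma(n - k + 1)
                   + k * math.log(p) + (n - k) * math.log(1 - p))
        cdf += math.exp(log_pmf)
        if cdf >= q:
            return k
    return n  # only reached if rounding keeps the sum just below q


def best_fraction_at_percentile(n: int, p: float, q: float) -> float:
    """Even-money stake fraction that maximises wealth at the q-th percentile.

    With k wins out of n, final wealth is (1 + f)**k * (1 - f)**(n - k) times
    the starting wealth, which is maximised at f = (2k - n) / n.
    """
    k = binomial_quantile(n, p, q)
    return (2 * k - n) / n


win_prob = 0.6  # illustrative; the Kelly fraction is 2 * win_prob - 1 = 0.2
for n in (100, 1_000, 10_000):
    print(n, best_fraction_at_percentile(n, win_prob, 0.5),
          best_fraction_at_percentile(n, win_prob, 0.9))
```

The median column matches the Kelly fraction, and the 90th-percentile fraction sits above it by a gap that shrinks roughly like 1/√n as the number of bets grows.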

There are also some horror stories of how people fare with a more intuition-based approach: it's surprisingly easy to lose (fake) money even when you have favourable odds.
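One way to see how favourable odds can still lose money: the long-run growth rate per bet is g(f) = p log(1 + f) + (1 - p) log(1 - f), which turns negative if you stake too large a fraction f. A minimal sketch for an even-money bet you win 60% of the time (an illustrative choice):

```python
import math


def growth_rate(f: float, p: float) -> float:
    """Expected log-growth per even-money bet when staking a fraction f of wealth."""
    return p * math.log(1 + f) + (1 - p) * math.log(1 - f)


for f in (0.1, 0.2, 0.3, 0.4, 0.5):
    print(f"stake {f:.0%}: growth per bet {growth_rate(f, 0.6):+.4f}")
```

With these numbers the growth rate peaks at the Kelly fraction of 20% and is already negative at 40%, so staking twice the Kelly amount or more shrinks your bankroll over time despite the edge.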

For the good of the epistemic environment

A marketplace consisting of Kelly bettors learns at the optimal rate, in the following sense:

- Special property 1: The market will produce an equilibrium probability that is the wealth-weighted average of each participant’s individual probability estimate. In other words, it behaves as if each participant’s relative wealth is the prior on them being correct.

- Special property 2: When the market resolves one way or the other, the relative wealth distribution ends up being updated in a perfectly Bayesian manner. When it comes time to bet on the next market, the new wealth distribution is the correctly updated prior on each participant being right, as if you had gone through and calculated Bayes’ rule for each of them.

Together these mean that, if everyone bets according to the Kelly criterion, then after many iterations the relative wealth of each participant ends up being the best possible indicator of their predictive ability. And the equilibrium probability of each market is the best possible estimate of the probability, given the track record of each participant. This is a pretty strong result[1]!
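Both properties can be checked in an idealised single-market model (a sketch under my own assumptions, not Manifolio's code or Manifold's market mechanism): each bettor i has wealth w_i and belief p_i, and the market sells YES shares at price π and NO shares at 1 - π, each paying 1 if its side wins. A log-wealth maximiser then puts p_i w_i into YES and (1 - p_i) w_i into NO (a matched YES/NO pair is equivalent to cash, so this is a Kelly bet on the difference). YES and NO share demand balance only when π is the wealth-weighted average belief, and after resolution each bettor's wealth is proportional to w_i p_i (or w_i (1 - p_i)), which is Bayes' rule with wealth as the prior. The function names are hypothetical.

```python
def equilibrium_price(beliefs: list[float], wealths: list[float]) -> float:
    """Price at which log-utility bettors' YES and NO share demand balances."""
    return sum(p * w for p, w in zip(beliefs, wealths)) / sum(wealths)


def resolve(beliefs: list[float], wealths: list[float], outcome_yes: bool) -> list[float]:
    """Each bettor's wealth after resolution, if bettor i put p_i * w_i on YES
    at the equilibrium price and (1 - p_i) * w_i on NO at one minus that price."""
    price = equilibrium_price(beliefs, wealths)
    if outcome_yes:
        return [p * w / price for p, w in zip(beliefs, wealths)]
    return [(1 - p) * w / (1 - price) for p, w in zip(beliefs, wealths)]


beliefs = [0.9, 0.5, 0.2]
wealths = [100.0, 200.0, 100.0]
print(equilibrium_price(beliefs, wealths))  # 0.525

new = resolve(beliefs, wealths, outcome_yes=True)
# Bayesian posterior after YES: prior w_i times likelihood p_i, renormalised.
total = sum(p * w for p, w in zip(beliefs, wealths))
posterior = [p * w / total for p, w in zip(beliefs, wealths)]
print([round(v / sum(new), 4) for v in new], [round(v, 4) for v in posterior])
```

The two printed lists agree, and total wealth is unchanged by resolution, since every share paid out is funded by a losing share on the other side.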

...

I'd love to hear any feedback people have on this. You can leave a comment here or contact me by email.

Thanks to the people who funded this project on Manifund, and to everyone who has given feedback and helped me test it out.

[1] This is shown in this paper. Importantly, it's proven for the case of one market at a time, not for multiple markets running concurrently. I’m reasonably confident a version of it still holds with concurrent markets, but in any case Manifolio doesn't currently account for the opportunity cost of not betting in other markets, so the result doesn't carry over exactly.

This is a neat tool!

Just a little heads-up for people about privacy. If you use the built-in helper to place your bets, your API key is sent to the owner of the Manifolio service. I've glanced over the source code, and the key does not seem to be stored anywhere. As far as I can tell, it's routed through the backend mainly for easier integration with an SDK and for some logging. However, there's no strong guarantee that the publicly available source code is the code actually running at that URL.

I have no reason to doubt this, but in theory your API key could be stored and misused at a later date. For example, someone holding many API keys could quickly place bets from many different accounts to steer a market or make a quick profit before anyone notices.

I don't think there is any technical reason why the communication with the Manifold API couldn't happen on the frontend instead, so it might be worth looking into?

In general, be very careful about pasting API keys into any site you don't trust. The Manifold key seems to give the holder very wide permissions on your account.

Again, I have no reason to suspect that there is anything sinister going on here, but I think it's worth pointing out nevertheless!

Thanks for posting the source code as well! Personally I did use my API key while testing and I do trust the author :)

Ah, yes, Manifold's CORS policy would be an obstacle. It might be possible to contact them and ask for the site to be added to their list of allowed origins.