I'm currently helping assess 80,000 Hours' impact over the past 2 years.

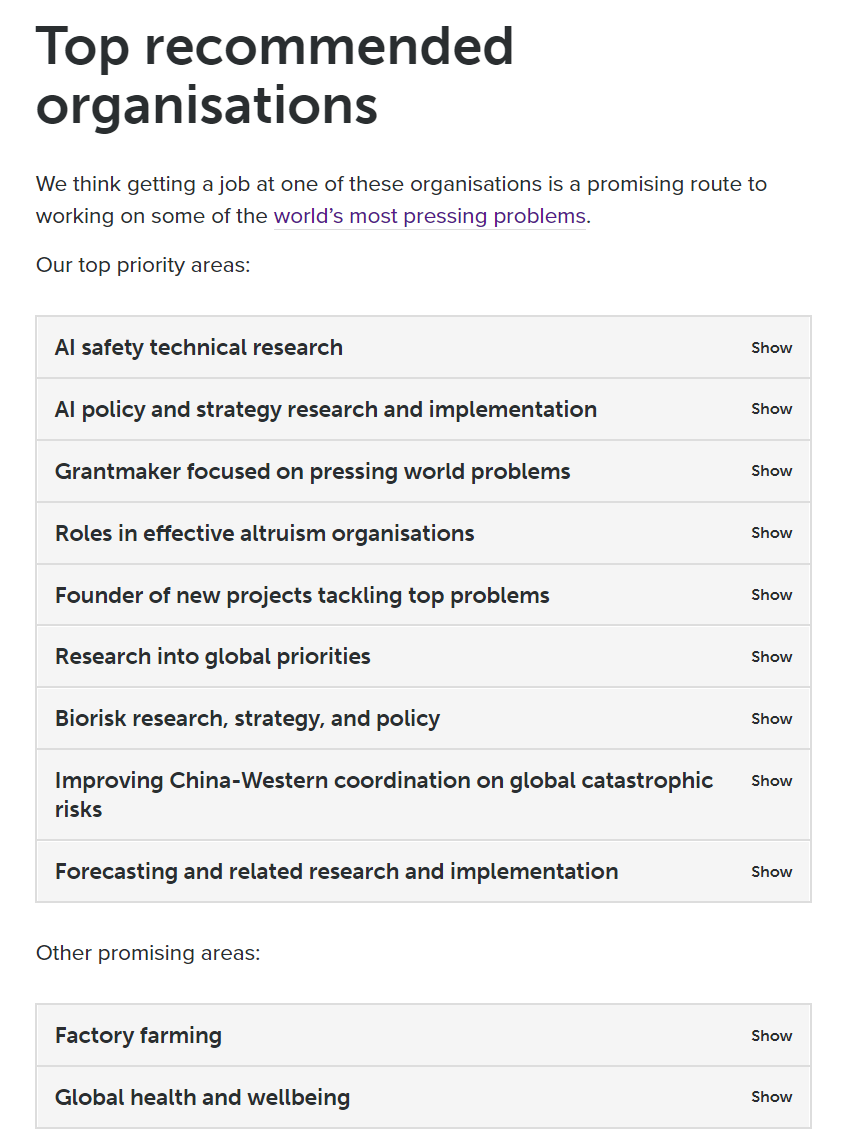

One part of our impact is the way we influence the direction of the EA community.

By "direction of the EA community," I mean a variety of things like:

- What messages seem prevalent in the community

- What ideas gain prominence or become less prominent

- What community members are interested in

To understand this better, I'm gathering thoughts from community members on how, if at all, they think 80,000 Hours has influenced the direction of the EA community.

If you have ideas, please share them using this short form!

Note that we're interested in hearing about both positive and negative influences.

This isn’t meant to be a rigorous or thorough survey, but we think we should have a low bar for running quick surveys of community members when they could give us ideas or point to things worth investigating.

Thank you!

Arden

I'd suggest changing the first short-answer question on the Google Form to a longer-response (paragraph) question. Right now I can only see part of the sentence I'm typing.

Fixed, thank you!