This is a special post for quick takes by tobyj. Only they can create top-level comments. Comments here also appear on the Quick Takes page and All Posts page.

I don't understand what you mean by a "folk-elite" intervention? Do you mean interventions that focus on people near the middle of the x-axis on your graph?

Yeah - appreciate this is ambiguous - I was essentially asking for examples of interventions that blur this binary. This would include interventions closer to the middle of this graph (insofar as they seem genuinely connected to the extremes). Flavours I was imagining:

Wellbeing in elite spaces (or maybe better elite culture more generally)

It sounds like you are asking, do EAs ever apply folk-style interventions to elites, and elite-style interventions to "folks"?

In that case I think the answer is no:

The reason to help poor people, or sentient beings who are otherwise vulnerable, is that they're relatively powerless and there are relatively easy ways to help them. People who are wealthy from a global perspective (which includes most poor people in developed countries) are more difficult to help.

"Elite interventions" as you describe them only make sense for people who have a lot of influence. It's rare for someone with a lot of influence to be poor or vulnerable in the relevant sense.

Interventions that attempt to improve decisionmaking by elites in developing countries might at least slightly blur the chart, to the extent it suggests the "most powerful" are the USG, Silicon Valley elites, etc.

Would you qualify these as leadership in disempowered communities? I'm gonna agree wellbeing in elite spaces is probably only high EV if the TOC is it makes them better at wielding their power.

I am really into writing at the moment and I’m keen to co-author forum posts with people who have similar interests.

I wrote a few brief summaries of things I'm interested in writing about (but very open to other ideas).

Also very open to:

co-authoring posts where we disagree on an issue and try to create a steely version of the two sides!

being told that the thing I want to write has already been written by someone else

Things I would love to find a collaborator to co-write:

Comparing the Civil Service bureaucracy to the EA nebuleaucracy.

I recently took a break from the Civil Service to work on an EA project full time. It’s much better, less bureaucratic and less hierarchical. There are still plenty of complex hierarchical structures in EA though. Some of these are explicit (e.g. the management chain of an EA org or funder/fundee relationships), but most aren’t as clear. I think the current illegibility of EA power structures is likely fairly harmful and want more consideration of solutions (that increase legibility).

What is the relationship between moral realism, obligation-mindset, and guilt/shame/burnout?

Despite no longer buying moral realism philosophically, I deeply feel like there is an objective right and wrong. I used to buy moral realism and used this feeling of moral judgement to motivate myself a lot. I had a very bad time.

People who reject moral realism philosophically (including me) still seem to be motivated by other, often more wholesome moral feelings, including towards EA-informed goals.

These seem like the main EA-neuroses - the fears that drive many of us.

I constantly feel like I’m sifting for gold in a stream, while there might be gold mines all around me. If I could just think a little harder, or learn faster, I could find them…

The distribution in value of different possible options is huge, and prioritisation seems to work. But you have to start doing things at some point. The fear that I’m working on the wrong thing is painful and constant and the reason I am here…

As with prioritisation, the fear that your beliefs are wrong is everywhere and is pretty all-consuming. False beliefs are deeply dangerous personally and catastrophic for helping others. I feel I really need to be obsessed with this.

I want to explore more feeling-focussed solutions to these fears.

When is it better to risk being too naive, or too cynical?

Is the world super dog-eat-dog or are people mostly good? I’ve seen people all over the cynicism spectrum in EA. Going too far either way has its costs, but altruists might want to risk being too naive (and paying a personal cost) rather than too cynical (which has a greater external cost).

To put this another way: if you are unsure how harsh the world is, lean toward acting like you’re living in a less harsh world - there is more value for EA to take there. (I could do with doing some explicit modelling on this one)

This is kinda the opposite of the precautionary principle that drives x-risk work - so is clearly very context specific.

I'd be interested to read a post you write regarding illegibility of EA power structures. In my head I roughly view this as sticking to personal networks and resisting professionalism/standardization. In a certain sense, I want to see systems/organizations modernize.

A quote from David Graeber's book, The Utopia of Rules, seems vaguely related: "The rise of the modern corporation, in the late nineteenth century, was largely seen at the time as a matter of applying modern, bureaucratic techniques to the private sector—and these techniques were assumed to be required, when operating on a large scale, because they were more efficient than the networks of personal or informal connections that had dominated a world of small family firms."

When is it better to risk being too naive, or too cynical?

That reminds me of what I read about game theory in Give and Take by Adam Grant (iirc). The conclusion was that the strategy which results in most rewards was to behave cooperatively and only switch (to non-coop) once every three times if the other is uncooperative. The reasoning was that if you don't cooperate, the "selfish" won't either. But if you "forgive" and try to cooperate again after they weren't cooperative, you may sway them to cooperate too. You don't cooperate always regardless, at risk of being too naive and taken advantage of, but you lean towards cooperating more often than not.

If you are unsure how harsh the world is, lean toward acting like you’re living in a less harsh world - there is more value for EA to take there.

I'd be interested in reading more about this. I think a less cynical view would elicit more cooperation and goodwill due to likeability. I'm not sure this is the direction you're going so that's why I'm curious about it.

I wanted to get some perspective on my life so I wrote my own obituary (in a few different ways).

They ended up being focussed on my relationship with ambition. The first is below and may feel relatable to some here!

Auto-obituary attempt one:

Thesis title: “The impact of the life of Toby Jolly” a simulation study on a human connected to the early 21st century’s “Effective Altruism” movement

Submitted by: Dxil Sind 0239β for the degree of Doctor of Pre-Post-humanities at Sopdet University August 2542

Abstract: Many (>500,000,000) papers have been published on the Effective Altruism (EA) movement, its prominent members and their impact on the development of AI and the singularity during the 21st century’s time of perils. However, this is the first study of the life of Toby Jolly; a relatively obscure figure who was connected to the movement for many years. Through analysing the subject’s personal blog posts, self-referential tweets, and career history, I was able to generate a simulation centred on the life and mind of Toby. This simulation was run 100,000,000 times with a variety of parameters and the results were analysed. In the thesis I make the case that Toby Jolly had, through his work, a non-zero, positively-signed impact on the creation of our glorious post-human Emperium (Praise be to Xraglao the Great). My analysis of the simulation data suggests that his impact came via a combination of his junior operations work, and minor policy projects but also his experimental events and self-deprecating writing.

One unusual way he contributed was by consistently trying to draw attention to how his thoughts and actions were so often the product of his own absurd and misplaced sense of grandiosity; a delusion driven by what he himself would describe as a “desperate and insatiable need to matter”. This work marginally increased the self-awareness and psychological flexibility of the EA community. This flexibility subsequently improved the movement's ability to handle its minor role in the negotiations needed to broker power during the Grand Transition - thereby helping avoid catastrophe.

The outcomes of our simulations suggest that through his life and work Toby decreased the likelihood of a humanity-ending event by 0.0000000000024%. He is therefore responsible for an expected 18,600,000,000,000,000,000 quality-adjusted experience years across the light-cone, before the heat-death of the universe (using typical FLOP standardisation). Toby mattered.

Ethics note: as per standard imperial research requirements, we asked the first 100 simulations of Toby if they were happy being simulated. In all cases, he said “Sure, I actually, kind of suspected it…look, I have this whole blog about it”

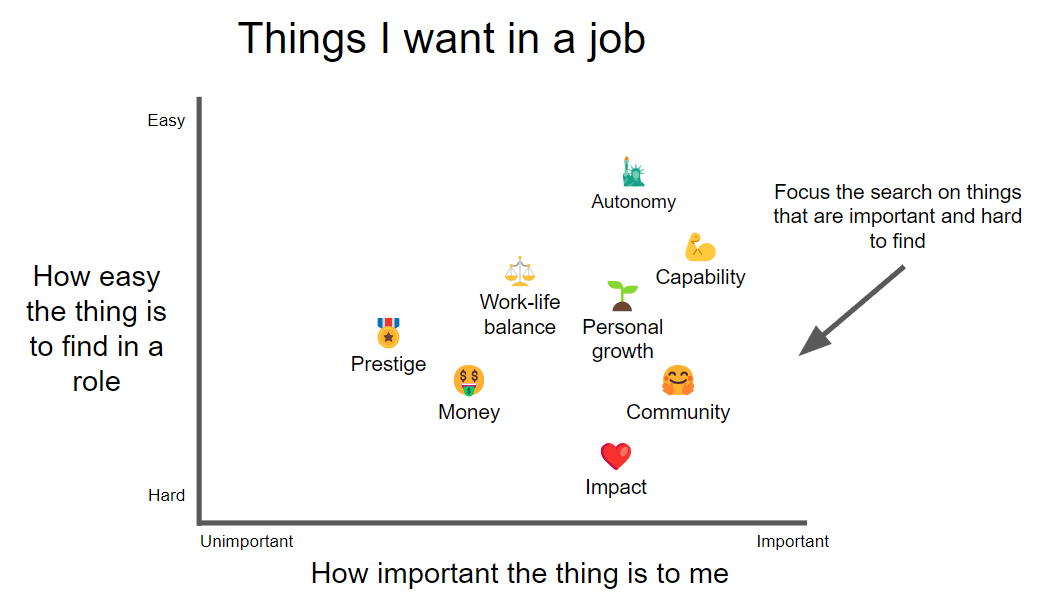

What are promising folk–elite interventions?

This distinction between "folk interventions" and "elite interventions" feels quite significant in EA spaces.

My instinct that hurt people hurt people, and that elites are often just the visible tip of wider cultural icebergs, makes me want to blur this binary.

https://en.wikipedia.org/wiki/Civil_rights_movement

https://en.wikipedia.org/wiki/Women%27s_suffrage

https://en.wikipedia.org/wiki/Underground_Railroad

Would you qualify these as leadership in disempowered communities? I'm gonna agree wellbeing in elite spaces is probably only high EV if the TOC is it makes them better at wielding their power.

I have now turned this diagram into an angsty blog post. Enjoy!

Pareto priority problems

Re: cynicism, you might enjoy Hufflepuff cynicism.

I wanted to get some perspective on my life so I wrote my own obituary (in a few different ways).

They ended up being focussed on my relationship with ambition. The first is below and may feel relatable to some here!

See my other auto-obituaries here :)

I wrote up my career review recently! Take a look (also, did you know that Substack doesn't change the URL of a post, even if you rename it?!)
