The failure rate is not 0

The base rate of failure for startups is not 0: Of the 6000 companies Y Combinator has funded, only ~16 are public. This is the wrong reference class for FTX at a $32b valuation, but even amongst extremely valuable companies, failures are not uncommon:

- WeWork had a peak valuation of $47b

- Theranos had a peak valuation of $10b

- Lucid Motors had a peak valuation of $90b

- Virgin Galactic had a peak valuation of $14b

- Juul had a peak valuation of $38b

- Bolt had a peak valuation of $11b

- Magic Leap had a peak valuation of $13b

That is only a handful of cases, but the reference class for startups worth over $10b is also pretty small: maybe 45 private companies and another ~100 that have gone public. I'm playing pretty fast and loose here because the exact number isn't important. The odds of failure are not super high, but they are considerably higher than 0.

The base rate of failure for crypto exchanges and hedge funds is not 0: Three Arrows Capital was managing $10b. Mt. Gox was "handling over 70% of all bitcoin transactions worldwide" until it went bankrupt. Celsius Network was managing $12b. BlockFi was valued at $3b.

Again, it's a bit tough to say what the appropriate reference class is and to get a really good accounting. But reading through some lists of comparably sized companies, I would match these 4 failures against a population of roughly 40 companies.
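To make the two rough base rates concrete, here is a minimal sketch that just divides the failure counts by the reference-class sizes quoted above. The counts are the loose estimates from the text (7 listed startups, ~145 companies; 4 crypto firms, ~40 companies), and the function name `base_rate` is made up for illustration. Since the lists of failures are not exhaustive, these are closer to floors than to careful estimates.

```python
# Naive base rates from the counts quoted in the text.
# Every input here is a loose estimate, not an audited figure.

def base_rate(failures: int, population: int) -> float:
    """Share of the reference class that failed (simple point estimate)."""
    return failures / population

# Startups that peaked above $10b: 7 listed failures against
# ~45 private companies plus ~100 that have gone public.
big_startups = base_rate(failures=7, population=45 + 100)

# Crypto exchanges and funds: 4 listed failures against ~40 comparable firms.
crypto_firms = base_rate(failures=4, population=40)

print(f"startups over $10b: {big_startups:.1%}")
print(f"crypto firms:       {crypto_firms:.1%}")
```

Under these assumptions the rates come out at roughly 5% and 10%: not super high, but nowhere near 0.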

For a fixed valuation, potential is inversely correlated with probability of success: As a very rough first approximation, a company's current value is its potential value multiplied by its probability of success.
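In symbols, writing $V_{\text{now}}$ for the current valuation, $V_{\text{potential}}$ for the value if everything goes right, and $p$ for the probability of getting there (this shorthand is assumed here for illustration, and it ignores risk and time discounting):

```latex
V_{\text{now}} \approx p \cdot V_{\text{potential}}
\qquad\Longleftrightarrow\qquad
p \approx \frac{V_{\text{now}}}{V_{\text{potential}}}
```

Holding $V_{\text{now}}$ fixed, a larger $V_{\text{potential}}$ forces a smaller $p$, which is the inverse relationship described above.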

Byrne Hobart points out that gold is worth $9t, so if you think bitcoin has a 1% chance of becoming the new gold, $90b is a good place to start. We have to adjust down to discount that this value is in the future, and adjust down further for the associated risk, but this is the right ballpark.

We can also flip this equation to think about what a company's potential and current value imply about its odds: the implied probability of success is roughly the current value divided by the potential value.

So if you see that Bitcoin could be worth $9t but is currently only worth $90b, the implied odds of success are 1% (again before accounting for risk and time).

Similarly, there have been some claims that SBF might become the world's first trillionaire. For this to happen, FTX would need to be worth around $2t, but it is currently valued at $32b. This puts the implied odds of success at 1.6%.
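The two implied-odds figures above can be reproduced in a few lines. This is a sketch of the back-of-the-envelope ratio only, before any adjustment for risk or time; the function name `implied_odds` is hypothetical, and the dollar figures are the ones quoted in the text, expressed in billions.

```python
def implied_odds(current_value: float, potential_value: float) -> float:
    """Implied probability of success: current value over potential value.

    Ignores risk and time discounting, as in the rough argument above.
    """
    return current_value / potential_value

# All figures in billions of dollars, taken from the text.
bitcoin = implied_odds(current_value=90, potential_value=9_000)
ftx = implied_odds(current_value=32, potential_value=2_000)

print(f"Bitcoin as the new gold: {bitcoin:.1%}")
print(f"FTX at $2t:              {ftx:.1%}")
```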

We shouldn't take this math too literally, but we should take the prospects for FTX's growth really seriously. This is a platform that aims to displace a large chunk of all global financial infrastructure. $2t is not a bad estimate of potential value.

There is some more nuance we might want to apply here. If FTX realizes its full value in 10 years, at a 10% discount rate, today's valuation is discounted by 2.6x, and the implied odds should be adjusted upwards. Volatility also means the company is undervalued relative to a strict expected value, and that the odds should be adjusted upwards accordingly. There are various more sophisticated adjustments you could apply.
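As a sketch of the time adjustment only (not the volatility adjustment), the snippet below discounts the $2t potential back 10 years at 10% and recomputes the implied odds. The function names are made up for illustration, and the 10-year horizon and 10% rate are just the example values from the paragraph above.

```python
def discount_factor(rate: float, years: int) -> float:
    """How much a value `years` in the future shrinks at an annual `rate`."""
    return (1 + rate) ** years

def discounted_implied_odds(current_value: float, potential_value: float,
                            rate: float, years: int) -> float:
    """Implied odds after discounting the potential value to the present."""
    present_value_of_potential = potential_value / discount_factor(rate, years)
    return current_value / present_value_of_potential

# Figures in billions of dollars; rate and horizon are the text's example.
factor = discount_factor(rate=0.10, years=10)
adjusted = discounted_implied_odds(32, 2_000, rate=0.10, years=10)

print(f"discount factor: {factor:.2f}x")
print(f"implied odds after discounting: {adjusted:.1%}")
```

With these inputs the factor is about 2.6x and the implied odds rise from 1.6% to a bit over 4%, which is higher but still not good.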

But the basic point stands: If a company has tremendous potential, and is only valued at a small fraction of that potential, the implied odds are not good.

Why does risk matter?

It is often worth speaking solely about expected value, and not worrying too much about risk. If a $5k intervention has a 50% chance of saving a life and a 50% chance of doing nothing, I am pretty comfortable calling this "an intervention which saves a life for $10k in expectation". In other cases, risk is more important.

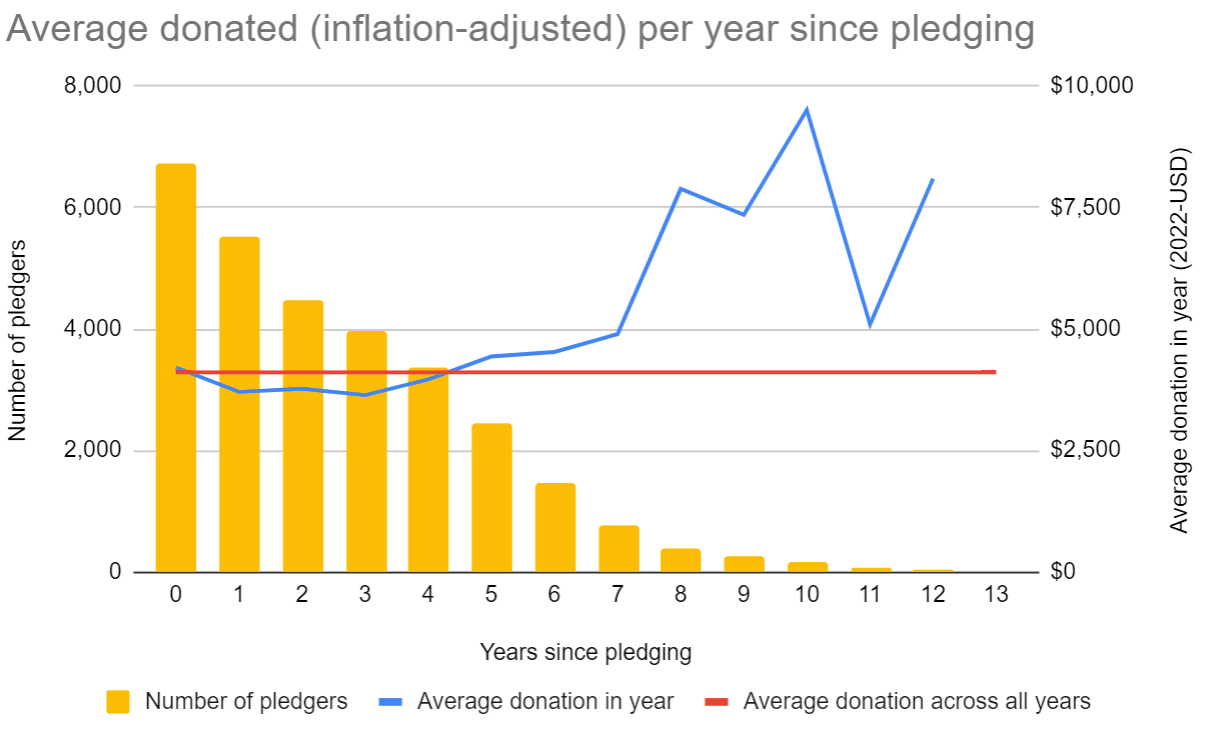

Non-profit operational needs: If you are an EA non-profit funded by donors, you care very much that the grants come to you in a predictable way. If you get $10m one year with the expectation of another $10m the subsequent year and instead get $0, you will have to lay off a lot of staff. If in the third year you get another $10m, it will be tough to re-hire and restart operations. You are not at all indifferent to the risk your donors take on.

Opportunities do get worse: SBF has famously said that his utility function with respect to money is roughly linear. I made a similar argument back in 2020:

> But for a rationalist utilitarian, returns from wealth are perfectly linear! Every dollar you earn is another dollar you can give to prevent Malaria. So when it comes to earning, rationalists ought to be risk-neutral.

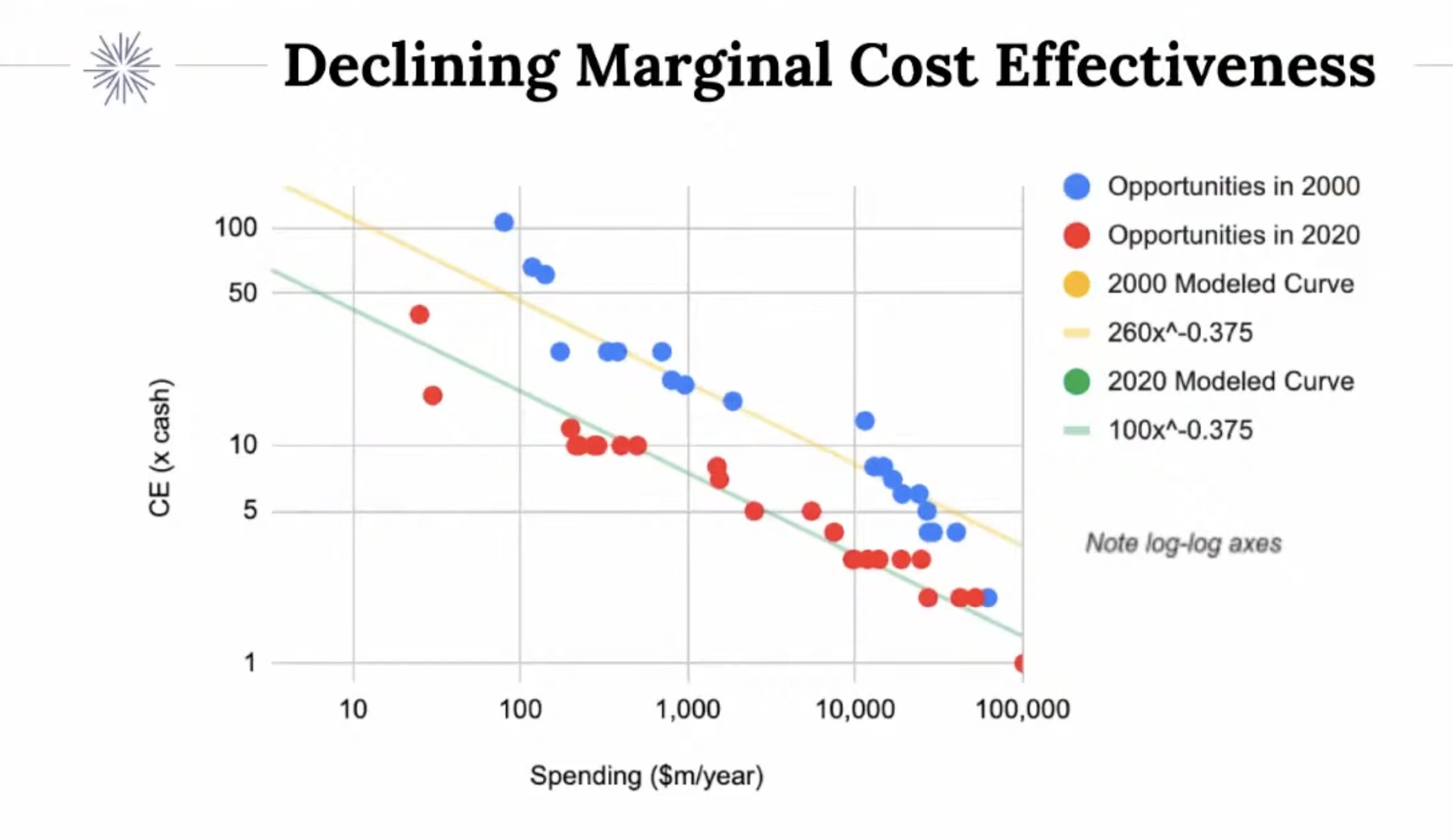

Though of course, it's not exactly linear, and at really extreme levels of wealth, this starts to matter. OpenPhil produced this chart, which they urge us not to take literally, but which does illustrate the declining value of money, even to a utilitarian. Eventually you run out of people who need bednets, or you are trying to distribute them to harder-to-reach places. There is some limit on how much money you can put into the most effective causes.

Again, not a literal interpretation, but just to get a sense of scale: Cost-effectiveness drops by ~half as spending goes up ~10x.
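To see why that rule of thumb breaks risk-neutrality, here is a toy model, not OpenPhil's actual curve. It assumes the "half per 10x" figure describes average good done per dollar and that it follows a smooth power law; both are assumptions made for illustration, as are the function names and the arbitrary units. It then compares a sure amount of money with a 10% shot at ten times as much.

```python
import math

# Exponent chosen so that 10x the spending halves cost-effectiveness.
# (Assumed power-law shape; the chart itself is not meant literally.)
EXPONENT = math.log10(0.5)  # about -0.301

def cost_effectiveness(spending: float) -> float:
    """Average good done per dollar, relative to spending == 1 unit."""
    return spending ** EXPONENT

def total_good(spending: float) -> float:
    """Total good done: dollars spent times average good per dollar."""
    return spending * cost_effectiveness(spending)

# Option A: one unit of money for certain.
sure_thing = total_good(1.0)

# Option B: 10% chance of ten units, 90% chance of nothing.
# Same expected dollars as option A.
gamble = 0.10 * total_good(10.0) + 0.90 * 0.0

print(f"sure thing: {sure_thing:.2f}")
print(f"gamble:     {gamble:.2f}")
```

Under these assumptions the gamble has the same expected dollars but only about half the expected good, so even a pure utilitarian should not be indifferent between the two.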

I expect this effect to be more pronounced for the kinds of longtermist x-risk causes that FTX was particularly interested in funding. Even at current levels of wealth, when I talk to people about, say, AI safety, it does not feel clear that there are a bunch of non-profits that it would make sense to pump a bunch more money into.

Reputational risk: EA became a much more prominent movement in 2022. It is mainstream in a way that it wasn't previously, and remaining respectable and even popular will impact how much people want to work for EA orgs and give to EA causes. In this sense too, we should not be risk-neutral when it comes to dramatic outcomes.

Looking back from today

There are much better retrospectives than this one, but my aim is not to say what I want to with the benefit of hindsight. It is to say what I believe any reasonable person could have said even without that benefit. These views don't require any of the knowledge we have today or any deep insight into the operations of FTX. They are just the kind of basic outside-view takes that EA prides itself on.

We should not beat ourselves up for not knowing about the fraud or the extent of the lies, but we absolutely should beat ourselves up for not making some version of these claims (at least judging from the writing I've seen from before 11/22).

Since the FTX collapse, I periodically talk to people who say that they have "left EA". I ask what they're doing instead, and the answer is never some other version of altruism divorced from the EA movement, it is always just something that is not altruism.

I feel very bad when I have these conversations. There are still people who need bed nets. Still animals suffering in cages. Still a future that is fragile and precious. None of these facts about the world change as a result of what happened to FTX. This is easy for me to say because I have never thought about EA as a social circle. I don't go to EAG, I don't go to Bay Area house parties, none of my friends in real life are EAs.

"I found out that a priest was bad, and so now I no longer believe in God". I understand why someone might feel this way, but it just feels, to me, like that was never what any of this was about. I see faith as a matter between you and God, which is sometimes mediated in useful ways by a priesthood and community of practice, but does not need to be. We are all literate here. We are not denied access the way some people were in the past. If you care about doing good, facts about the community are incidental.

Of course, if a core tenet of your faith was something like "anyone who believes in God cannot do anything wrong", then I see how this would be cause for questioning. But I don't see what's happened with FTX as incompatible in this way, because I have never seen EA as this kind of ideology.

In this sense, my own experience over the last couple of years has been to take the central questions of EA more personally and more seriously. Crises can, and often do, deepen rather than threaten faith.

In a practical sense, the losses the EA movement has experienced (in credibility, in funds, in human capital) make my marginal contributions more valuable. It feels more important than it did 2 years ago to do what I can. On an emotional level, I feel a greater sense that there is no secret cabal of powerful people ensuring that things go well, and consequently feel more personal responsibility.

I am thinking hard about what is right, I am taking the gains I can see, and I am working hard to do the best that I can. I can only hope and suggest that others continue to do the same.

Oh, I think you would be super worried about it! But not "beat ourselves up" in the sense of feeling like "I really should have known". In contrast to the part I think we really should have known, which was that the odds of success were not 100% and that trying to figure out a reasonable estimate for the odds of success and the implications of failure would have been a valuable exercise that did not require a ton of foresight.

Bit of a nit, but "we created" is stronger phrasing than I would use. But maybe I would agree with something like "how can we be confident that the next billionaire we embrace isn't committing fraud". Certainly I expect there will be more vigilance the next time around and a lot of skepticism.