It’s been four months since our last forum update post! Here are some things the dev team has been working on.

We launched V1 of a new Events page

It shows you upcoming events near you, as well as global events open to everyone! We think it’s now the most complete overview of EA events that exists anywhere.

Some improvements we’ve made over the last few months:

- Anyone can post an event on the new Events page by clicking "New Event" in their username dropdown menu on the Forum. (We also have a contractor who cross-posts many events.)

- You can easily add an event to your calendar, or be notified about events near you.

- Events now have images, which we think makes the page more engaging and easier to parse.

- We’ve improved the individual event pages to show key information more clearly and make it obvious how to attend an event.

- You can see upcoming events in the Forum sidebar.

If you think the new Events page is useful, please share it widely! :)

We also made a number of small improvements to the Community page, and we’re working on a significant redesign to make it more visual and groups-focused.

Update: We launched the redesigned Community page! This will eventually replace the EA Hub groups list. (If you would like to be assigned as a group organizer to one of the groups on the Forum, or if you know of groups that are missing, please let me know.)

100+ karma users can add co-authors

It’s now possible for users to add co-authors to their posts. As a precaution against spam, this is currently only available to users with 100+ karma. If you have less than 100 karma, feel free to contact us and we can add co-authors for you.

We updated the Sequences page

We renamed it to “Library” and highlighted some core sequences, like the Most Important Century series by Holden Karnofsky.

We merged our codebase with LessWrong

We now share a GitHub repo: ForumMagnum. Feel free to check out what we’re working on, and do let us know about any issues you see.

We ran the EA Decade Review

Thanks to everyone who participated! The Review ran from December 1 to February 1. Our team has been busy since then, but we should be posting about the results soon. I know I’m looking forward to reading it! :)

We started reworking tag subscriptions

Currently, “subscribing” to a tag on the Forum means you get notifications for new posts with that tag. However, we are moving more toward the YouTube model, where “subscribing” weights posts with that tag more heavily on the frontpage, and you can separately sign up for tag notifications via the bell icon. See more details here.

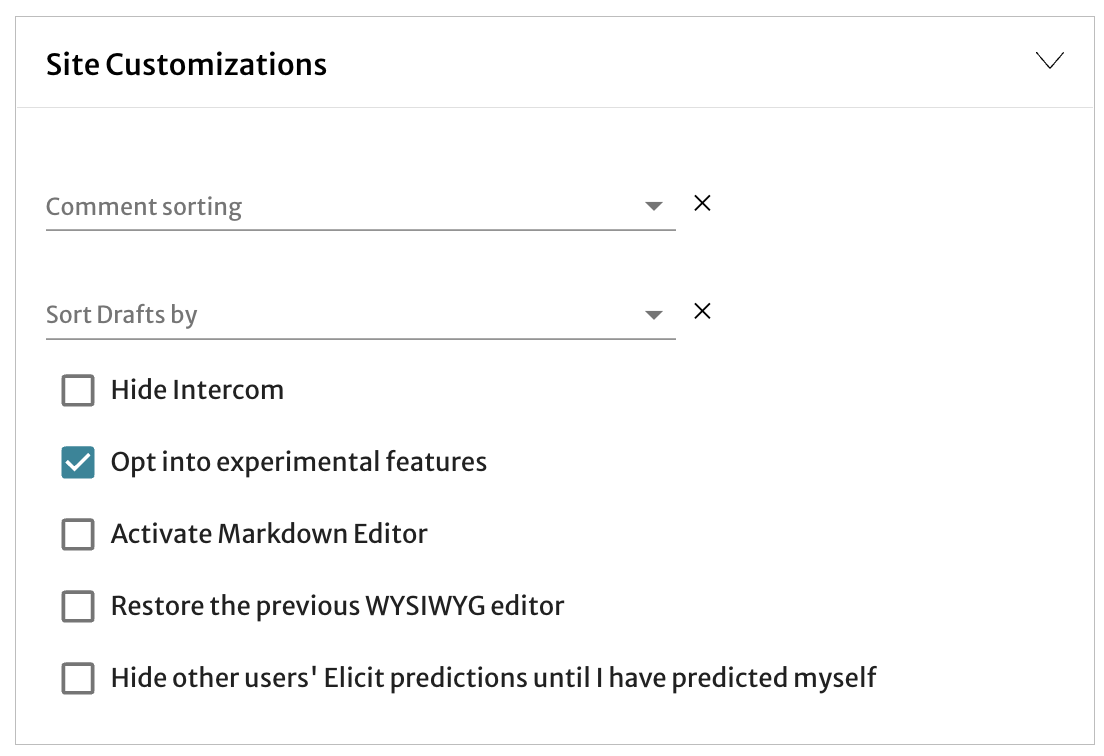

Right now this new version is behind the “experimental features” flag, so if you want to play with it you’ll have to check this box in your account settings:

You can now change your username

You can now change your username (i.e., your display name) yourself via your account settings page. However, you only get one change; after that, you’ll need to contact us to change it again.

We can also hide your profile from search engines and change the URL associated with your profile. Please contact us if you’d like either of these.

We added footnotes support to our default text editor

Last but certainly not least, we deployed one of the most requested Forum features: footnotes! See the standalone post for more details.

Questions? Suggestions?

We welcome feedback! Feel free to comment on this post, leave a note on the EA Forum feature suggestion thread, or contact us directly.

Join our team! :)

We’ve built a lot these past few months, but there’s much more to be done. We’re currently hiring software developers to join our team and help us make the EA Forum the best that it can be. If you’re interested, you can apply here.

Yeah, I agree "series" would be more appropriate if the collected posts are ordered, though it seems that some of the "sequences" in the library are not meant to be read in any particular order.