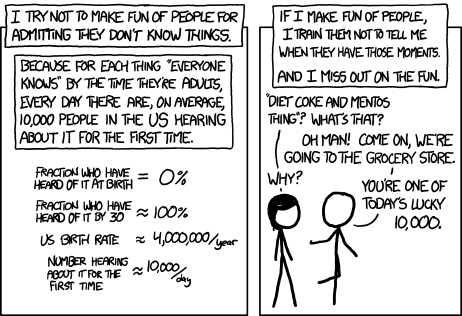

tl;dr: Ask questions about AGI Safety as comments on this post, including ones you might otherwise worry seem dumb!

Asking beginner-level questions can be intimidating, but everyone starts out not knowing anything. If we want more people in the world who understand AGI safety, we need a place where it's accepted and encouraged to ask about the basics.

We'll be putting up monthly FAQ posts as a safe space for people to ask all the possibly-dumb questions that may have been bothering them about the whole AGI Safety discussion, but which until now they didn't feel able to ask.

It's okay to ask uninformed questions, and not worry about having done a careful search before asking.

AISafety.info - Interactive FAQ

Additionally, this will serve as a way to spread the project Rob Miles' team[1] has been working on: Stampy and his professional-looking face aisafety.info. This will provide a single point of access into AI Safety, in the form of a comprehensive interactive FAQ with lots of links to the ecosystem. We'll be using questions and answers from this thread for Stampy (under these copyright rules), so please only post if you're okay with that!

You can help by adding questions (type your question and click "I'm asking something else") or by editing questions and answers. We welcome feedback and questions on the UI/UX, policies, etc. around Stampy, as well as pull requests to his codebase and volunteer developers to help with the conversational agent and front end that we're building.

We've got more to write before he's ready for prime time, but we think Stampy can become an excellent resource for everyone from skeptical newcomers, through people who want to learn more, right up to people who are convinced and want to know how they can best help with their skillsets.

Guidelines for Questioners:

- No previous knowledge of AGI safety is required. If you want to watch a few of the Rob Miles videos, or read either the WaitButWhy posts or the summary of The Most Important Century from OpenPhil's co-CEO first, that's great, but it's not a prerequisite to ask a question.

- Similarly, you do not need to try to find the answer yourself before asking a question (but if you want to test Stampy's in-browser TensorFlow semantic search, that might get you an answer more quickly!).

- Also feel free to ask questions that you're pretty sure you know the answer to, but where you'd like to hear how others would answer the question.

- One question per comment if possible (though if you have a set of closely related questions that you want to ask all together that's ok).

- If you have your own response to your own question, put that response as a reply to your original question rather than including it in the question itself.

- Remember, if something is confusing to you, then it's probably confusing to other people as well. If you ask a question and someone gives a good response, then you are likely doing lots of other people a favor!

- In case you're not comfortable posting a question under your own name, you can use this form to send a question anonymously and I'll post it as a comment.

Guidelines for Answerers:

- Linking to the relevant answer on Stampy is a great way to help people with minimal effort! Improving that answer means that everyone going forward will have a better experience!

- This is a safe space for people to ask stupid questions, so be kind!

- If this post works as intended then it will produce many answers for Stampy's FAQ. It may be worth keeping this in mind as you write your answer. For example, in some cases it might be worth giving a slightly longer / more expansive / more detailed explanation rather than just giving a short response to the specific question asked, in order to address other similar-but-not-precisely-the-same questions that other people might have.

Finally: please think very carefully before downvoting any questions. Remember, this is the place to ask stupid questions!

[1] If you'd like to join, head over to Rob's Discord and introduce yourself!

I find it remarkable how little is being said about concrete mechanisms for how advanced AI would destroy the world by the people who most express worries about this. Am I right in thinking that? And if so, is this mostly because they are worried about infohazards and therefore don't share the concrete mechanisms they are worried about?

I personally find it pretty hard to imagine ways that AI would e.g. cause human extinction that feel remotely plausible (although I can well imagine that there are plausible pathways I haven't thought of!).

Relatedly, I wonder if public communication about x-risks from AI should be more concrete about mechanisms? Otherwise it seems much harder for people to take these worries seriously.

This 80k article is pretty good, as is this Cold Takes post. Here are some ways an AI system could gain power over humans:

I agree, and I actually have the same question about the benefits of AI. It all seems a bit hand-wavy, like 'stuff will be better and we'll definitely solve climate change'. More specifics in both directions would be helpful.

It seems a lot of people are interested in this one! For my part, the answer is "Infohazards kinda, but mostly it's just that I haven't gotten around to it yet." I was going to do it two years ago but never finished the story.

If there's enough interest, perhaps we should just have a group video call sometime and talk it over? That would be easier for me than writing up a post, and plus, I have no idea what kinds of things you find plausible and implausible, so it'll be valuable data for me to hear these things from you.

Nanobots are a terrible method for world destruction, given that they have not been invented yet. Speaking as a computational physicist, there are some things you simply cannot do accurately without experimentation, and I am certain that building nanobot factories is one of them.

I think if you actually want to convince people that AI x-risk is a threat, you unavoidably have to provide a realistic scenario of takeover. I don't understand why doing so would be an "infohazard", unless you think that a human could pull off your plan?

Re: uncharitability: I think I was about as uncharitable as you were. That said, I do apologize -- I should hold myself to a higher standard.

I agree they might be impossible. (If it only finds some niche application in medicine, that means it's impossible, btw. Anything remotely similar to what Drexler described would be much more revolutionary than that.)

If they are possible though, and it takes (say) 50 years for ordinary human scientists to figure it out starting now... then it's quite plausible to me that it could take 2 OOMs less time than that, or possibly even 4 OOMs, for superintelligent AI scientists to figure it out starting whenever superintelligent AI scientists appear (assuming they have access to proper experimental facilities. I am very uncertain about how large such facilities would need to be.) 2 OOMs less time would be 6 months; 4 OOMs would be Yudkowsky's bathtub nanotech scenario (except not necessarily in a single bathtub, presumably it's much more likely to be feasible if they have access to lots of laboratories). I also think it's plausible that even for a superintelligence it would take at least 5 years (only 1 OOM speedup over humans). (again, conditional o... (read more)
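As a quick check of the arithmetic in the comment above, here is a minimal sketch. The 50-year baseline is the comment's own hypothetical figure, and `time_after_speedup` is an illustrative helper name, not something from the thread.

```python
def time_after_speedup(baseline_years: float, ooms: int) -> float:
    """Return the time needed after a speedup of `ooms` orders of magnitude."""
    return baseline_years / (10 ** ooms)


# Hypothetical baseline from the comment: 50 years for ordinary human scientists.
BASELINE_YEARS = 50

for ooms in (1, 2, 4):
    years = time_after_speedup(BASELINE_YEARS, ooms)
    # Report the same duration in years, months, and days for easy comparison.
    print(f"{ooms} OOM speedup: {years} years = {years * 12} months = {years * 365.25:.1f} days")
```

Under that assumption, 1 OOM gives 5 years, 2 OOMs give half a year (6 months), and 4 OOMs give 0.005 years, which is a little under two days.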

I'm having an ongoing discussion with a couple professors and a PhD candidate in AI about "The Alignment Problem from a Deep Learning Perspective" by @richard_ngo, @Lawrence Chan, and @SoerenMind. They are skeptical of "3.2 Planning Towards Internally-Represented Goals," "3.3 Learning Misaligned Goals," and "4.2 Goals Which Motivate Power-Seeking Would Be Reinforced During Training". Here's my understanding of some of their questions:

- The argument for power-seeking during deployment depends on the model being able to detect the change from the training to deployment distribution. Wouldn't this require keeping track of the distribution thus far, which would require memory of some sort, which is very difficult to implement in the SSL+RLHF paradigm?

- What is the status of the model after the SSL stage of training?

- How robust could its goals be?

- Would a model be able to know:

- what misbehavior during RLHF fine-tuning would look like?

- that it would be able to better achieve its goals by avoiding misbehavior during fine-tuning?

- Why would a model want to preserve its weights? (Sure, instrumental convergence and all, but what's the exact mechanism here?)

- To what extent would all thes... (read more)

I don't see why it would require memory, because the model will have learned to recognize features of its training distribution. So this seems like this just requires standard OOD detection/anomaly detection. I'm not familiar with this literature but I expect that if you take a state-of-the-art model, you'd be able to train a linear probe on its activations to classify whether or not it's in-distribution or OOD with pretty high confidence. (Anyone got helpful references?)
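To make the linear-probe idea concrete, here is a toy sketch, not a result about any real model. The "activations" are synthetic Gaussian vectors standing in for a model's hidden states, the mean shift that marks OOD inputs is an assumption chosen for illustration, and all function names are hypothetical. The probe itself is plain logistic regression fitted by gradient descent.

```python
import numpy as np


def make_synthetic_activations(rng, n, dim, shift):
    """Synthetic stand-in for activations: in-distribution ~ N(0, I), OOD ~ N(shift, I)."""
    in_dist = rng.normal(0.0, 1.0, size=(n, dim))
    ood = rng.normal(shift, 1.0, size=(n, dim))
    acts = np.vstack([in_dist, ood])
    labels = np.concatenate([np.zeros(n), np.ones(n)])  # 1 marks OOD
    return acts, labels


def train_linear_probe(acts, labels, lr=0.1, steps=500):
    """Fit a logistic-regression probe (weights w, bias b) by gradient descent."""
    n, dim = acts.shape
    w = np.zeros(dim)
    b = 0.0
    for _ in range(steps):
        probs = 1.0 / (1.0 + np.exp(-(acts @ w + b)))
        grad = probs - labels  # gradient of the log loss w.r.t. the logits
        w -= lr * (acts.T @ grad) / n
        b -= lr * grad.mean()
    return w, b


def probe_accuracy(acts, labels, w, b):
    """Fraction of examples the probe classifies correctly."""
    preds = (acts @ w + b) > 0
    return float((preds == labels.astype(bool)).mean())


rng = np.random.default_rng(0)
train_x, train_y = make_synthetic_activations(rng, n=500, dim=16, shift=1.0)
test_x, test_y = make_synthetic_activations(rng, n=500, dim=16, shift=1.0)

w, b = train_linear_probe(train_x, train_y)
acc = probe_accuracy(test_x, test_y, w, b)
print(f"held-out probe accuracy: {acc:.3f}")
```

With a real model, `train_x` would be replaced by activations recorded on training-like and deployment-like inputs. Whether those are linearly separable is the empirical question the comment raises, and this toy data does not answer it.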

Maybe it's helpful to say here that I think the change will be very blatant - for example, the model will have an extended conversation with the CEO of a company while sending emails to their employees and navigating their internal databases, and based on the responses to these actions it will be very confident that it's talking to an actual CEO, not a hired labeller. Or the model will literall... (read more)

Suppose that near-term AGI progress mostly looks like making GPT smarter and smarter. Do people think this, in itself, would likely cause human extinction? How? Due to mesa-optimizers that would emerge during training of GPT? Due to people hooking GPT up to control of actions in the real world, and those autonomous systems would themselves go off the rails? Just due to accelerating disruptive social change that makes all sorts of other risks (nuclear war, bioterrorism, economic or government collapse, etc) more likely? Or do people think the AI extinction risk mainly comes when people start building explicitly agentic AIs to automate real-world tasks like making money or national defense, not just text chats and image understanding as GPT does?

Another probably very silly question: in what sense isn't AI alignment just plain inconceivable to begin with? I mean, given the premise that we could and did create a superintelligence many orders of magnitude superior to ourselves, how could it even make sense to have any type of fail-safe mechanism to 'enslave it' to our own values? A priori, it sounds like trying to put shackles on God. We can barely manage to align ourselves as a species.

If an AI is built to value helping humans, and if that value can remain intact, then it wouldn't need to be "enslaved"; it would want to be nice of its own accord. However, I agree with what I take to be the thrust of your question, which is that the chances seem slim that an AI would continue to care about human concerns after many rounds of self-improvement. It seems too easy for things to slide askew from what humans wanted one way or another, especially if there's a competitive environment with complex interactions among agents.

What's the expected value of working in AI safety?

I'm not certain about longtermism and the value of reducing x-risks, I'm not optimistic that we can really affect the long-term future, and I guess the future of humanity may be bad. Many EA people are like me; that's why only 15% of people think AI safety is the top cause area (survey by Rethink Priorities).

However, on a "near-termist" view, AI safety research is still valuable because it may help avoid a catastrophe (not only extinction) that would cause the suffering of 8 billion people and maybe animals. But things like research on global health or pandemic prevention seem to have a more certain "expected value" (maybe 100 QALYs per extra person or so), because we have historical experience and a feedback loop. AI safety is the most difficult problem on earth, and I feel like the expected value is "???": it may be very high, or it may be 0. We don't know how serious the suffering would be (it may cause extinction in a minute while we're sleeping, or torture us for years?). We don't know whether we are on the way to finding the solution, or whether we are all making wrong predictions about AGI's thoughts. Will the government control the power of A... (read more)

Work related to AI trajectories can still be important even if you think the expected value of the far future is net negative (as I do, relative to my roughly negative-utilitarian values). In addition to alignment, we can also work on reducing s-risks that would result from superintelligence. This work tends to be somewhat different from ordinary AI alignment, although some types of alignment work may reduce s-risks also. (Some alignment work might increase s-risks.)

If you're not a longtermist or think we're too clueless about the long-run future, then this work would be less worthwhile. That said, AI will still be hugely disruptive even in the next few years, so we should pay some attention to it regardless of what else we're doing.

Rambling question here. What's the standard response to the idea that very bad things are likely to happen with non-existential AGI before worse things happen with extinction-level AGI?

Eliezer dismissed this as unlikely ("what, self-driving cars crashing into each other?"), and I read his "There is no fire alarm" piece, but I'm unconvinced.

For example, we can imagine a range of self-improving, kinda agentic AGIs, from some kind of crappy ChaosGPT let loose online, to a perfect God-level superintelligence optimising for something weird and alien, but perfectly able to function in, conceal itself in and manipulate human systems.

It seems intuitively more likely we'll develop many of the crappy ones first (seems to already be happening). And that they'll be dangerous.

I can imagine flawed, agentic, and superficially self-improving AI systems going crazy online, crashing financial systems, hacking military and biosecurity, taking a shot at mass manipulation, but ultimately failing to displace humanity, perhaps because they fail to operate in analog human systems, perhaps because they're just not that good.

Optimistically, these crappy AIs might function as a warning shot/ fire alarm. Everyone gets terrified, realises we're creating demons, and we're in a different world with regards to AI alignment.

My own response is that AIs which can cause very bad things (but not human disempowerment) will indeed come before AIs which can cause human disempowerment, and if we had an indefinitely long period where such AIs were widely deployed and tinkered with by many groups of humans, such very bad things would come to pass. However, instead the period will be short, since the more powerful and more dangerous kind of AI will arrive soon.

(Analogy: "Surely before an intelligent species figures out how to make AGI, it'll figure out how to make nukes and bioweapons. Therefore whenever AGI appears in the universe, it must be in the post-apocalyptic remnants of a civilization already wracked by nuclear and biological warfare." Wrong! These things can happen, and maybe in the limit of infinite time they have to happen, but they don't have to happen in any given relatively short time period; our civilization is a case in point.)

I think there's a range of things that could happen with lower-level AGI, with increasing levels of 'fire-alarm-ness' (1-4), but decreasing levels of likelihood. Here's a list; my (very tentative) model would be that I expect lots of 1s and a few 2s within my default scenario, and this will be enough to slow down the process and make our trajectory slightly less dangerous.

Forgive the vagueness, but these are the kind of things I have in mind:

1. Mild fire alarm:

- Hacking (prompt injections?) within current realms of possibility (but amped up a bit)

- Human manipulation within current realms of possibility (IRA disinformation *5)

- Visible, unexpected self-improvement/ escape (without severe harm)

- Any lethal autonomous weapon use (even if generally aligned), especially by a rogue power

- Everyday tech (phones, vehicles, online platforms) doing crazy, but benign misaligned stuff

- Stock market manipulation causing important people to lose a lot of money

2. Moderate fire alarm:

- Hacking beyond current levels of possibility

- Extreme mass manipulation

- Collapsing financial or governance systems causing minor financial or political crisis

- Deadly use of autonomous AGI in weapon... (read more)

Suggestion for the forum mods: make a thread like this for basic EA questions.

Steven: here's a semi-naive question: much of the recent debate about AI alignment & safety on social media seems to happen between two highly secular, atheist groups: pro-AI accelerationists who promise AI will create a 'fully automated luxury communist utopia' based on leisure, UBI, and transhumanism, and AI-decelerationist 'doomers' (like me) who are concerned that AI may lead to mass unemployment, death, and extinction.

To the 80% of the people in the world involved in organized religion, this debate seems to totally ignore some of the most important and fundamental values and aspirations in their lives. For those who genuinely believe in eternal afterlives or reincarnation, the influence of AI during our lives may seem quantitatively trivial compared to the implications after our deaths.

So, why does the 'AI alignment' field -- which is supposed to be about aligning AI systems with human values & aspirations -- seem to totally ignore the values & aspirations of the vast majority of humans who are religious?

Wonderful! This will make me feel (slightly) less stupid for asking very basic stuff. I actually had 3 or so in mind, so I might write a couple of comments.

Most pressing: what is the consensus on the tractability of the Alignment problem? Have there been any promising signs of progress? I've mostly just heard Yudkowsky portray the situation in terms so bleak that, even if one were to accept his arguments, the best thing to do would be nothing at all and just enjoy life while it lasts.

Is there anything that makes you skeptical that AI is an existential risk?

What do the recent developments mean for AI safety career paths? I'm in the process of shifting my career plans toward 'trying to robustly set myself up for meaningfully contributing to making transformative AI go well' (whatever that means), but everything is developing so rapidly now and I'm not sure in what direction to update my plans, let alone develop a solid inside view on what the AI(S) ecosystem will look like and what kind of skillset and experience will be most needed several years down the line.

I'm mainly looking into governance and field build... (read more)

How can you align AI with humans when humans are not internally aligned?

AI Alignment researchers often talk about aligning AIs with humans, but humans are not aligned with each other as a species. There are groups whose goals directly conflict with each other, and I don't think there is any singular goal that all humans share.

As an extreme example, one may say "keep humans alive" is a shared goal among humans, but there are people who think that is an anti-goal and humans should be wiped off the planet (e.g., eco-terrorists). "Humans should be ... (read more)

What does foom actually mean? How does it relate to concepts like recursive self-improvement, fast takeoff, winner-takes-all, etc? I'd appreciate a technical definition, I think in the past I thought I knew what it meant but people said my understanding was wrong.

I sometimes see it claimed that AI safety doesn't require longtermism to be cost effective (roughly: the work is cost effective considering only lives affected this century). However, I can't see how this is true. Where is the analysis that supports this, preferably relative to GiveWell?

I guess my fundamental question right now is what do we mean by intelligence? Like, with humans, we have a notion of IQ, because lots of very different cognitive abilities happen to be highly correlated in humans, and this allows us to summarize them all with one number. But different cognitive abilities aren't correlated in the same way in AI. So what do we mean when we talk about an AI being much smarter than humans? How do we know there even are significantly higher levels of intelligence to go to, since nothing much more intelligent than humans has eve... (read more)

Is there any consensus on who's making things safer, and who isn't? I find it hard to understand the players in this game; it seems like AI safety orgs and the big language players are very similar in terms of their language, marketing and the actual work they do. E.g., OpenAI talks about AI safety on their website and has jobs on the 80k job board, but is also advancing AI rapidly. Lately it seems to me like there isn't even agreement in the AI safety sphere over what work is harmful and what isn't (I'm getting that mainly from the post on closing the Lightcone office).

If I wanted to be useful to AI safety, what are the different paths I might take? How long would it take someone to do enough training to be useful, and what might they do?

This question is more about ASI, but here goes: If LLMs are trained on human writings, what is the current understanding for how an ASI/AGI could get smarter than humans? Would it not just asymptotically approach human intelligence levels? It seems to be able to get smarter learning more and more from the training set, but the training set also only knows so much.

How much of an AGI's self improvement is reliant on it training new AIs?

If alignment is actually really hard, wouldn't this AGI realise that and refuse to create a new (smarter) agent that will likely not exactly share its goals?

What's the strongest argument(s) for the orthogonality thesis, understandable to your average EA?

Why does ECL mean a misaligned AGI would care enough about humans to keep them alive? Because there are others in the universe who care a tiny bit about humans even if humans weren't smart enough to build an aligned AGI? Or something else?

I read that AI-generated text is being used as input data due to a data shortage. What do you think are some foreseeable implications of this?

Why does Google's Bard seem so much worse than GPT? If the bitter lesson holds, shouldn't they just be able to throw money at the problem?

Again, maybe next time include a Google Form where people can ask questions anonymously that you'll then post in the thread a la here?

I've seen people already building AI 'agents' using GPT. One crucial component seems to be giving it a scratchpad to have an internal monologue with itself, rather than forcing it to immediately give you an answer.

If the path to agent-like AI ends up emerging from this kind of approach, wouldn't that make AI safety really easy? We can just read their minds and check what their intentions are?

Holden Karnofsky talks about 'digital neuroscience' being a promising approach to AI safety, where we figure out how to read the minds of AI agents. And for curr... (read more)

Are there any concrete reasons to suspect language models to start to act more like consequentialists the better they get at modelling them? I think I'm asking something subtle, so let me rephrase. This is probably a very basic question, I'm just confused about it.

If an LLM is smart enough to give us a step-by-step robust plan that covers everything with regards to solving alignment and steering the future to where we want it, are there concrete reasons to expect it to also apply a similar level of unconstrained causal reasoning wrt its own loss function or evolved proxies?

At the moment, I can't settle this either way, so it's cause for both worry and optimism.

There's been a lot of debate on whether AI can be conscious or not, and whether that might be good or bad.

What concerns me though is that we have yet to uncover what consciousness even is, and why we are conscious in the first place.

I feel that if there is a state in between being sentient and sophont, AI may very well reach it without us knowing, and that there could be unpredictable ramifications.

Of course, the possibility of AI helping us uncover these very things should not be disregarded in and of itself, but it should ideally not come at a cost.

What would happen if AI developed mental health issues or addiction problems? Surely it couldn't be genetic?

If aligned AI is developed, then what happens?

Who should aligned AI be aligned with?

Hello, I am new to AI Alignment Policy research and am curious to learn about what the most reliable forecasts on the pace of AGI development are. So far what I have read points to the fact that it is just very difficult to predict when we will see the arrival of TAI. Why is that?

How do you prevent dooming? It's hard to do my day-to-day work. I am new to this space, but every day I see people's timelines get shorter and shorter.

I love love love this.

Is GPT-4 an AGI?

One thing I have noticed is goalpost shifting on what AGI is--it used to be the Turing test, until that was passed. Then a bunch of other criteria that were developed were passed, and now the definition of 'AGI' seems to default to what previously would have been called 'strong AI'.

GPT-4 seems to be able to solve problems it wasn't trained on, reason and argue as well as many professionals, and we are just getting started learning its capabilities.

Of course, it also isn't a conscious entity--its style of intelligence is strang... (read more)

I wonder to what extent people take the alignment problem to be the problem of (i) creating an AI system that reliably does or tries to do what its operators want it to do as opposed to (ii) the problem of creating an AI system that does or tries to do what is best "aligned with human values" (whatever this precisely means).

I see both definitions being used and they feel importantly different to me: if we solve the problem of aligning an AI with some operator, then this seems far away from safe AI. In fact, when I try to imagine how an AI might cause a catastr... (read more)

If we get AGI, why might it pose a risk? What are the different components of that risk?

Are risks from AGI distinct from the kinds of risks we face from other people, and if so, how? The problem "autonomous agent wants something different from you" is just the everyday challenge of dealing with people.

Will AGI development be restricted by physics and semiconductor wafers? I don't know how AI has developed so fast historically, but some say it's because of Moore's Law for semiconductor wafers. If the development of semiconductor wafers comes to an end because of physical limitations, can AI still grow exponentially?

What attempts have been made to map common frameworks and delineate and sequence steps plausibly required for AI theories to transpire, pre-superintelligence or regardless of any beliefs for or against potential for superintelligence?