Personal opinion only. Inspired by filling out the Meta coordination forum survey.

Epistemic status: Very uncertain, rough speculation. I’d be keen to see more public discussion on this question.

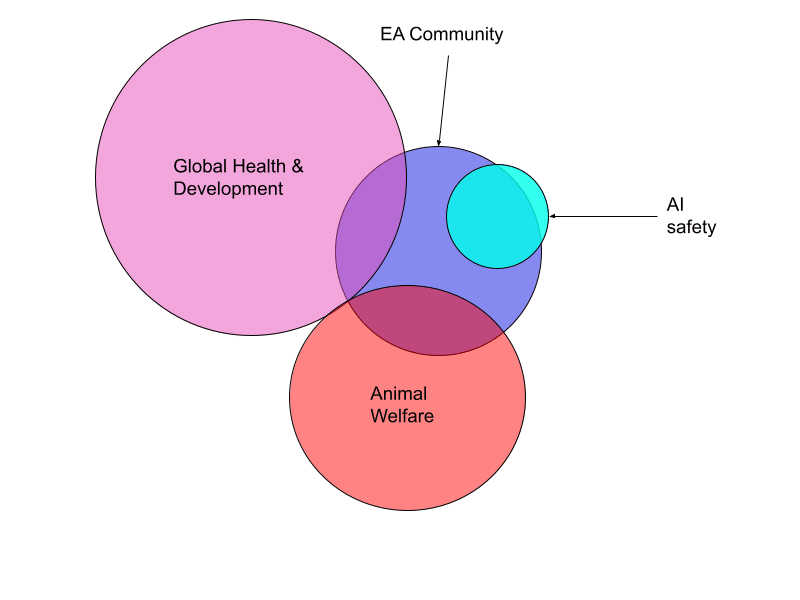

One open question about the EA community is its relationship to AI safety (see e.g. MacAskill). I think the relationship between EA and AI safety (+ GHD & animal welfare) previously looked something like this (up until 2022ish):[1]

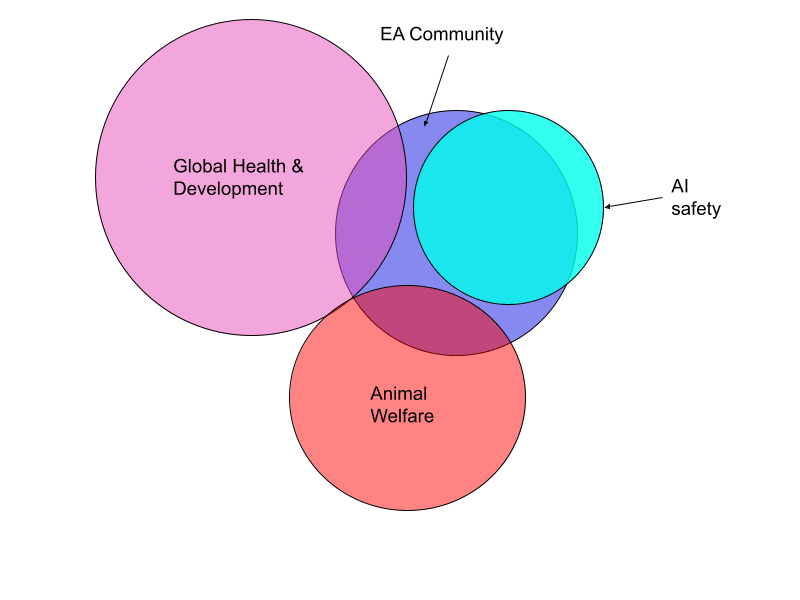

With the growth of AI safety, I think the field now looks something like this:

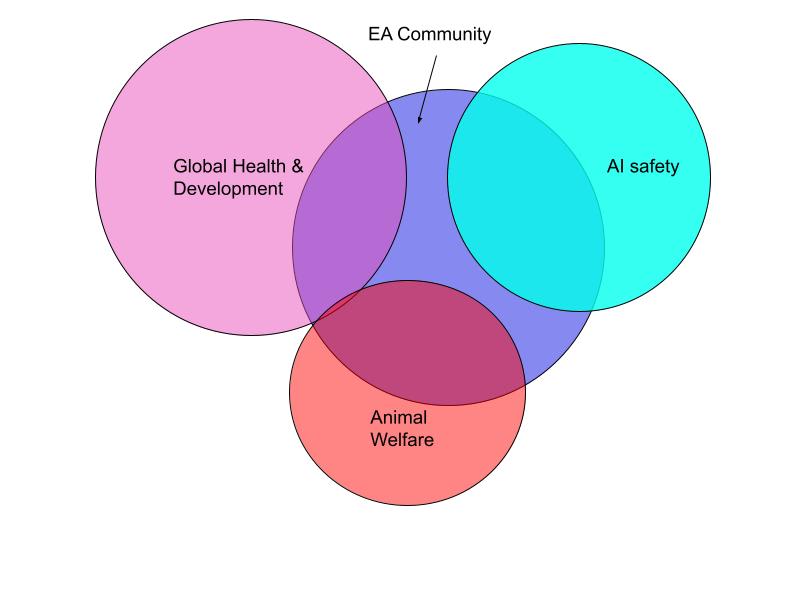

It's an open question whether the EA Community should further grow the AI safety field, or whether the EA Community should become a distinct field from AI safety. I think my preferred approach is something like: EA and AI safety grow into new fields rather than into each other:

- AI safety grows in AI/ML communities

- EA grows in other specific causes, and as an “EA-qua-EA” movement.

As an ideal state, I could imagine the EA community having a similar relationship to AI safety as it currently has to animal welfare or global health and development.

However, I’m very uncertain about this, and curious to hear what other people’s takes are.

[1] I’ve omitted non-AI longtermism, along with other fields, for simplicity. I strongly encourage not interpreting these diagrams too literally.

That's my big concern as well. I just applied for my first EAG, but only after asking the organisers whether there would be enough content and people to make it worthwhile for someone who really only has something to offer to GHD people. Their response was still pretty encouraging:

"We'd roughly estimate about 10% of attendees at Boston will be focussed on Global Health and Development. Our content specialist will be aiming to curate about 15% of the content around Global Health and Development (which refers to talks, workshops, speed meetings, and meetups). "

That would mean maybe 100-150 GHD people at the conference, which is all good from my perspective, but if it were half that I would be getting a bit shaky on whether it would be worth it.