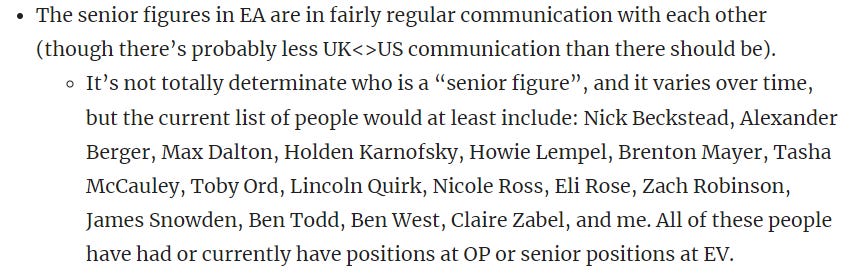

I was reading Will MacAskill’s recent EA Forum post when I came across a list of names. Will said this is a list of people who would definitely be considered “senior figures” within EA at the moment.

I really don’t mind that some of EA’s CEOs call each other to ask for advice or bounce ideas off of each other. I think it’s pretty healthy!

But also, I have never heard of at least half of these people??? I cannot be this out of the know, it’s embarrassing. So I did some digging.

Who are these “senior figures”?

Effective Ventures UK Trustees:

Will MacAskill: I know who this guy is. He was on a poster in my local tube station when his book What We Owe the Future came out. He also wrote Doing Good Better before that. Even before last summer’s media push, he was one of the most recognisable people in EA. He’s said he’ll be stepping down as a trustee after a few additional trustees join… but I assume people will still call him and ask for his opinion on things.

Claire Zabel: Senior Program Officer at Open Philanthropy.

Tasha McCauley: On the board of Effective Ventures UK. Her LinkedIn shows she’s been working on AI stuff since at least 2010, but probably much earlier. Also happens to be married to Joseph Gordon-Levitt.

Lincoln Quirk: Founder of Wave Mobile Money. Wave has been closely linked to the EA community since way back. (Edit: I previously incorrectly listed Lincoln under "Other".)

Nick Beckstead: Helped launch the Centre for Effective Altruism. Joined Open Philanthropy in 2014 (it was still part of GiveWell back then). Left OP to run the ill-fated FTX Future Fund.

Effective Ventures US Trustees:

Nick Beckstead: See above.

Eli Rose: Senior Program Officer at Open Philanthropy.

Nicole Ross: Community Health at CEA.

Zach Robinson: Interim CEO of Effective Ventures US, starting January 2023, as well as acting as a trustee. Apparently this is more normal in the US than it is in the UK. Previously Chief of Staff at Open Philanthropy.

CEOs/Directors:

Zach Robinson: See above - CEO and trustee of EV US.

Howie Lempel: Interim CEO of Effective Ventures UK, starting January 2023. Known for his appearance on the 80,000 Hours Podcast, talking about working at Open Philanthropy and 80,000 Hours with severe anxiety and depression.

Alexander Berger: Co-CEO of Open Philanthropy. Well-represented on Twitter (and the only “senior figure” to appear on both this list and its Twitter parody).

Holden Karnofsky: Co-CEO of Open Philanthropy. Starting on March 8th, he went on a three-month sabbatical to explore whether he should move to working on AI safety. No news yet on whether he’s returned to Open Philanthropy.

Ben West: Interim Executive Director of the Centre for Effective Altruism.

Brenton Mayer: Interim CEO of 80,000 Hours. I’ve been to a few parties with him, which I highly recommend - he’s got a great sense of humour!

Other:

Max Dalton: Former Executive Director of CEA. In my view, he was a good one - CEA was a bit of a mess before he took over. Resigned in February 2023 and took on an advisory role instead, for the sake of his mental health. Still very involved, including in the search for his replacement.

Toby Ord: Founder of Giving What We Can. Author of The Precipice. I had heard of him before this too.

James Snowden: Program Officer at Open Philanthropy; previously GiveWell; previously CEA.

Ben Todd: Founder of 80,000 Hours. Currently on sabbatical.

Why does EA have senior figures that lots of us have never heard of?

The short answer is I’d barely heard of them too, so I’m not really qualified to say. But being unqualified has never stopped me from guessing! So my guess is some combination of:

It’s normal to have influential people just doing their daily jobs and no one really paying attention to them. Here in the UK, how many of us had heard of Chris Whitty, the Chief Medical Officer, before the televised Covid broadcasts? Definitely not me. But even though I wasn’t seeing him on TV, he was still having a major influence on UK politics before Covid started.

Some of these people probably didn’t want the spotlight. Maybe they had other career ambitions and didn’t want to advertise their involvement with EA. Maybe they’d seen EAs being mean to Will MacAskill on the Forum and didn’t want that for themselves. Maybe they were just shy. For whatever reason, it’s possible that some of these people were turning down podcast invitations and not giving talks at EAG because they wanted to keep a low profile.

Attention follows power laws. This was a point Will made in the past and I agree completely, so I’ll just quote him here: “I don’t think we’re ever going to be able to get away from a dynamic where a handful of public figures are far more well-known than all others. Amount of public attention (as measured by, e.g. twitter followers) follows a power law.”

EA is still in the “group of friends just trying stuff” mindset even though it has far too much power at this point to be operating that way. And so we don’t always have the level of scrutiny or accountability that we should, because people who have been involved since 2014 are like, “Oh him? That’s just Bob. Everyone knows Bob.” But actually we don’t all know Bob anymore because there are like 10,000 of us now, not 100. The rest of Will’s post is about how EA can be better about this, and I thought it was pretty good.

What’s going on with EA power dynamics over the next few months?

Several projects are going on at once. In roughly the order I predict they’ll happen:

New trustees are being recruited for Effective Ventures UK and US. The application process closed on June 4th. I expect it will take a while to announce the new trustees, but I also think this is a high priority, so my prediction, based on basically nothing, would be an autumn announcement of some new trustees? Will MacAskill also plans to resign from his trusteeship once the new trustees are in post.

Claire Zabel, Max Dalton, and Michelle Hutchinson (not mentioned above - she works at 80,000 Hours) are leading the search for a new Executive Director for the Centre for Effective Altruism. They started a couple of months ago, but EA hiring processes are famously slow, so who knows? I’m guessing also autumn, maybe closer to Christmas, but I’m hoping it will at least be a 2023 announcement.

Julia Wise, Sam Donald, and Ozzie Gooen are leading a project on reforms at EA organisations. They’re planning to make recommendations to EA organisations about ways they could change to, for example, be more friendly to whistleblowers. They’re planning to give public updates throughout but I wouldn’t be surprised if their final update isn’t until 2024. (None of these people are mentioned above either, but they each give bios in the linked Forum post. Julia is also known for her blog Giving Gladly and Ozzie is known for his Facebook page.)

I don’t know when Zach and Howie will stop being interim CEOs of EV US and UK, respectively. I’m not sure they know either. I wouldn’t be surprised if this was in 2024.

I'm hoping we’ll see more organisations improving their governance structures and diversifying their board members. You can help by registering your interest in serving on a board with the EA Good Governance Project.

This post is excerpted from my weekly newsletter of EA-related miscellany, EA Lifestyles. You can subscribe for free at ealifestyles.substack.com.

Thank you! I keep pointing out that EVF doesn't have anything on their website about who their trustees are, and this isn't good for transparency.

As much as I appreciate this post, it seems to have followed the same process I have - asked 'ok who actually are these senior figures?', and realised that for some, there's not much info out there, and then gathered what little there is on google search and linkedin.

It would be great if the lesser-known EVF trustees could describe, in their own words, who they are, what they do, and how someone could contact them.

My sincere apologies, I had missed that it had been updated! V. embarrassing. Thank you for doing that.