Before I address the title of the article, I'm going to quickly outline why I think brands and products grow and why behaviours in general become more popular. This post involves some meandering, so reader beware.

I've been doing marketing for about 15 years and as far as I can tell there are 3 models of communication that change people's behaviour:

Model 1: "SALIENCE":

Communication changes behaviour by creating salience between an intervention, product or brand and the memories people access at the point of purchase or engagement (i.e. when they are 'in-market'). For example, when I want to make "pasta bolognaise", the associated memories my brain surfaces could be "Barilla", "Italian" and "Beyond Meat". (For the nerds: this leans on associative network theory, if you're interested in learning more.)

Model 2: "PERSUASION":

Communication changes behaviour by persuading or by telling a story. These lean on System 2 thinking and Narrative Transportation Theory respectively (I recommend checking out Thinking, Fast and Slow and https://en.wikipedia.org/wiki/Transportation_theory_(psychology)).

Model 3: "CULTURAL IMPRINTING":

Communication changes behaviour because all consumption is actually about building and maintaining status within a desired group and all products are consumed in social settings (for more I recommend "ads don't work that way" > https://meltingasphalt.com/ads-dont-work-that-way/).

In classic marketing theory these are all bucketed under "Promotion" (i.e. communication). There are 3 other "P's" to marketing (and reasons why products or brands grow):

Product & Price (which IMO only need to be 'good enough' rather than 'the best' - see satisficing)

Physical availability (Is the thing I want to buy easy to find?)

OK, but what does this have to do with non-human animals?

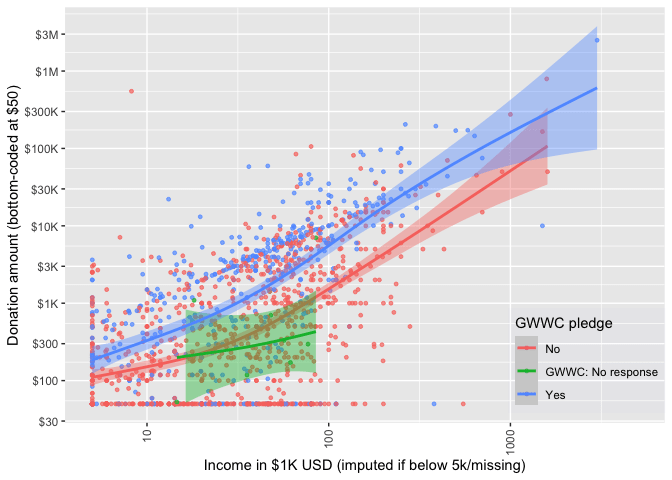

AFAICT about 30% of EAs are vegan, but my model says that, if non-human animals are to have any hope, this number should be closer to 90%.

Let's review the model:

M1 - "SALIENCE": if you're in EA you're hearing about animal suffering and vegan alternatives all the time

M2 - "PERSUASION": EAs are especially rational people and not eating animals is obviously the more rational choice for 90%+ of people reading this

M3 - "CULTURAL IMPRINTING": it is a higher status move in the EA community to be vegan than not

Product & Price: vegan food tastes fine, and EAs can afford it (i.e. they're relatively rich)

Physical availability: the hardest part of any product adoption is getting people to try it once, and you can't go to an EA event without trying vegan food

So how is any of this useful?

In behaviour change and marketing strategy, a common way to get a deeper or different view of people's decision making is to study extreme users instead of the general population.

Some examples of how this has worked elsewhere:

- Trans men and trans women for feminine care innovation

- Hikikomori for future social spaces

- Amish for clothing sustainability

- Arthritis sufferers for kitchen utensils

Anyway, I think looking deeply at why EAs do and do not eat farm-grown meat (at an individual level), and why vegan adoption is so low 'in EA culture', could provide lots of insight.

The repo at https://github.com/rethinkpriorities/ea_data_public says: "The actual code and data is in the EA-data private repo. A line in the main_2020.R file there copies the content to this repo in a parallel folder on one's hard drive, to be pushed here. ... No data will be shared here, for now at least."