As part of Effective Self-Help’s research into the most effective ways people can improve their wellbeing and productivity, we’ve compiled more than 100 practical productivity recommendations from 40 different articles written by the EA community.

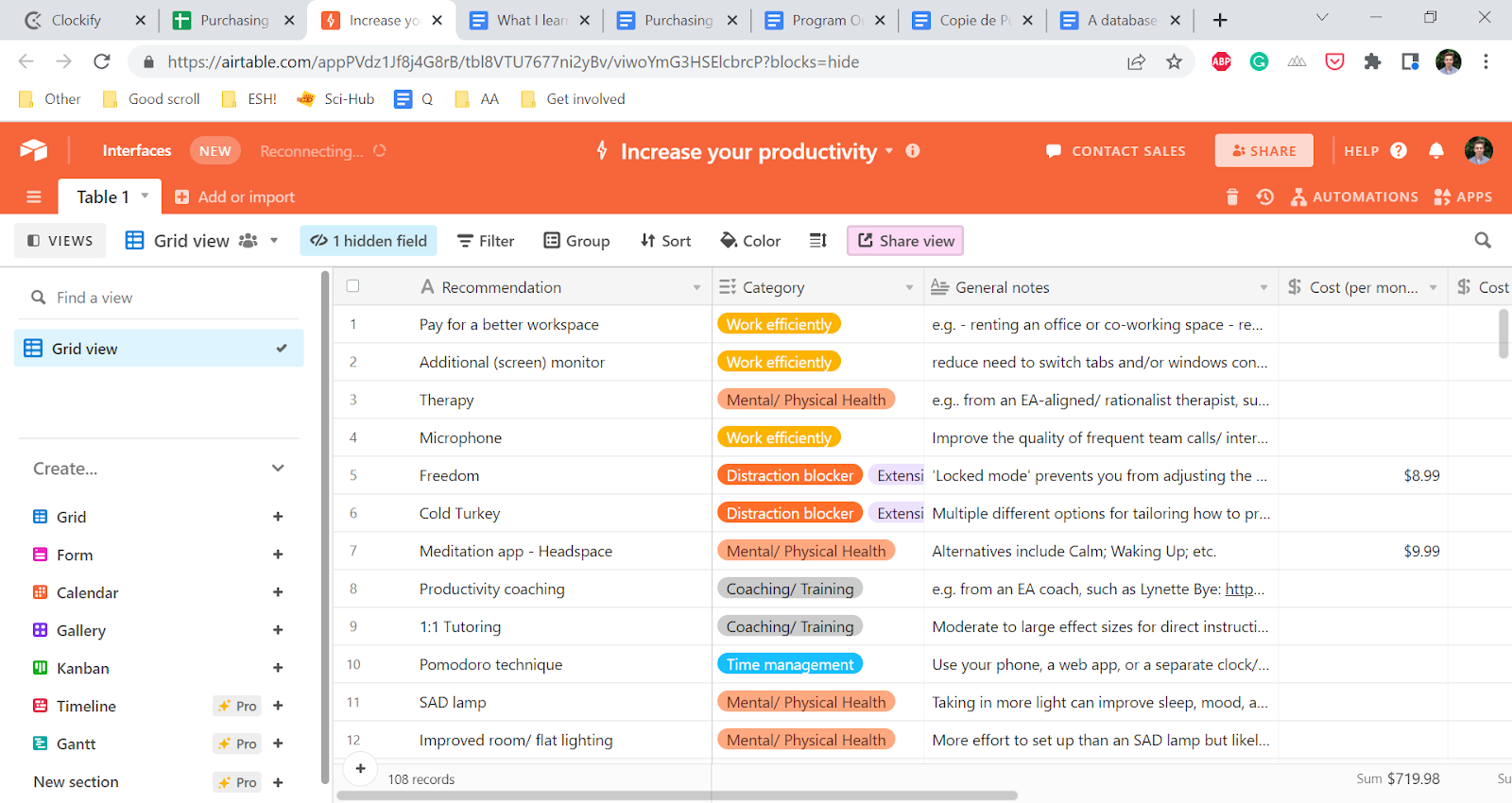

The result is this database on Airtable. Please take a look and see what you find useful!

To the best of our knowledge, this table includes the recommendations made in every prior post offering productivity advice written on the EA Forum, LessWrong, or by someone connected to the effective altruism and rationality communities. You can view the list of articles included here. Let us know if there are articles we’ve missed.

Link here: https://airtable.com/shrxxQ805blyYMyLH

This database is intended as a living document and remains a work in progress. We would love to hear your thoughts on how we can make this most useful, whether in the comments or via a private message.

In the next fortnight, we will publish a preliminary report on increasing productivity, synthesising and more thoroughly evaluating many of the recommendations included in this database.

The rest of this post provides an explanation of how the database works, why we made it, and a few of its potential limitations.

How does this table work?

As a whole, the table has 110 recommendations across 9 categories. If you’re unfamiliar with using Airtable, we recommend this short explainer. Key points to note are the following:

- Many of the cells contain more information than is visible at the top level. Click on a given cell and then the arrow in the top right to see everything written there.

- Airtable allows for easy filtering and sorting. Most usefully, you can:

- organise the results by category (including filtering out any you’re not interested in)

- rank them by cost (either per-month or one-off)

We’ve split the recommendations into the following rough categories:

- Mental/Physical health

- Working efficiently (helps you work faster)

- Working effectively (helps you prioritise better/ work on the most important thing)

- Distraction blocker (helps minimise time spent off-task)

- Extensions (software or browser add-ons)

- Security (file and account backups/ safety)

- Coaching/ Training

- Finances

- Misc.

Why did we build this table?

Productivity as a mechanism for increasing impact

If you’re reading this article on the EA Forum, it’s a fairly safe bet that you’re either currently working on one of the world’s most pressing problems or hold serious aspirations to do so in the future. By increasing your productivity, you can increase both the size and the value of your output for a given day/week/year of work. In doing so, you increase the endline impact of your work.

Theory of Change: Recommendation implemented -> Increased work output (more work done per day and/or value of output increased) -> Increased impact.

While the gains we can expect from many of these changes are very small, many also seem very cheap and easy to implement. Stacked together, several recommendations could produce notable increases in your productivity and, as a consequence, your impact. For a rough estimate of how this may translate into impact, see this Guesstimate model. For a note of caution on translating increased productivity directly into increased impact, see this comment.
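To make the idea of stacking concrete, here is a minimal sketch. It assumes each change multiplies your output independently, which is a simplification: real recommendations may overlap or interact. The function name and the gain figures are hypothetical and are not taken from the database or the Guesstimate model.

```python
from math import prod


def stacked_gain(gains):
    """Combined fractional gain, assuming each gain multiplies output independently."""
    return prod(1 + g for g in gains) - 1


# Hypothetical per-recommendation gains (1%, 2%, 0.5%, 3%), for illustration only.
example_gains = [0.01, 0.02, 0.005, 0.03]
print(f"Combined gain: {stacked_gain(example_gains):.2%}")
```

Under these assumptions, four changes that each look negligible on their own combine to a gain of roughly 6.6%.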

Productivity is trainable

“In low-complexity jobs, the top 1% averages 52% more [productivity] than the average employee. For medium-complexity jobs this figure is 85%, and for high-complexity jobs it is 127%” (Hunter et al., 1990)

We believe these significant differences in productivity are largely trainable. Given that very few people receive any formal education in working effectively and efficiently, it seems highly likely that there are low-hanging fruit for improving their productivity. This database is an attempt to identify those low-hanging fruit.

A few potential issues worth noting

The database is off-puttingly long/ dense/ hard to navigate

Fair enough! The database is definitely a work in progress and could likely be better organised. Let us know in the comments if you have ideas for how we could improve it.

We’re also currently finalising a report highlighting the recommendations that appear most useful and/or cost-effective for increasing your productivity. You can sign up to our newsletter if you’d like to ensure you see this once it’s published. Otherwise, keep your eyes peeled for us publishing this on the Forum in about two weeks’ time!

How do I know which recommendations are most worthwhile?

We hope to add quick estimates of cost-effectiveness ($ per hour saved) for each recommendation in the near future.

For now, we’d encourage you to apply a rough, intuitive version of the ITN framework:

- Importance: how big a difference does it seem like this would make to my productivity?

- Tractability: how cheap and/or easy would it be for me to do this?

- Neglectedness: how weak/ strong am I already at optimising in this area?
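As a rough illustration of how the two approaches above might be combined, the sketch below computes a $ per hour saved figure and a simple ITN score for each recommendation. Everything here is an assumption made for illustration: the field names, the 1-5 scoring scale, the 12-month horizon, multiplying the three ITN scores together, and the example figures. None of it comes from the database itself.

```python
from dataclasses import dataclass


@dataclass
class Recommendation:
    name: str
    one_off_cost: float           # $ paid once (hypothetical figure)
    monthly_cost: float           # $ per month (hypothetical figure)
    hours_saved_per_month: float  # your own rough guess
    importance: int               # 1-5: how big a difference would this make?
    tractability: int             # 1-5: how cheap and/or easy is it to do?
    neglectedness: int            # 1-5: higher = weaker at this area right now


def cost_per_hour_saved(rec, months=12):
    """Total cost over the horizon divided by total hours saved over it."""
    total_cost = rec.one_off_cost + rec.monthly_cost * months
    total_hours = rec.hours_saved_per_month * months
    if total_hours <= 0:
        return float("inf")  # nothing saved, so no finite cost per hour
    return total_cost / total_hours


def itn_score(rec):
    """Intuitive ITN score: the product of the three 1-5 ratings."""
    return rec.importance * rec.tractability * rec.neglectedness


# Made-up example entries, not real rows from the database.
candidates = [
    Recommendation("Brighter desk lighting", 60.0, 0.0, 2.0, 4, 5, 3),
    Recommendation("Website blocker subscription", 0.0, 10.0, 5.0, 3, 4, 2),
]

for rec in sorted(candidates, key=itn_score, reverse=True):
    print(f"{rec.name}: ITN {itn_score(rec)}, ${cost_per_hour_saved(rec):.2f} per hour saved")
```

The scores are only as good as the guesses that go into them, so treat the ranking as a prompt for which recommendations to look at first rather than as a precise estimate.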

The database does not include every recommendation made in each article we reviewed.

This is for two primary reasons:

- Avoiding duplication

- Focusing on productivity

- Many of the recommendations made in these articles are for products that may bring small increases to your happiness/ satisfaction but that we feel are unlikely to increase your productivity.

- Hopefully, this narrower focus helps make the database more useful. At the very least, it made it substantially easier to complete. We encourage you to take a look at the articles we reviewed for yourself. You can find them linked in the database or in this spreadsheet.

The product links provided don’t work for where I live

Product links have generally been taken directly from the article where we found the recommendation. This means they are predominantly a mixture of US and UK websites and/or currencies.

We’d love to provide links tailored to specific countries so that implementing the recommendations is as simple as possible. Sadly, this just isn’t currently feasible with the time we have available.

The costs (per month and/or one-off) are inaccurate

For reasons similar to those above, many of the product costs are only rough estimates. These are generally based on the first product we found or on the specific product recommended in the original article. Given this, please take the figures with a good few grains of salt.

Why have you just included articles written by people involved in EA/ Rationality/ similar?

About 90% of the articles included are from people involved in or adjacent to the EA and Rationality communities. Needless to say, there are almost certainly very high-quality recommendations from people unrelated to these communities that we are missing. However, we made this choice for a couple of key reasons:

- Scope. Limiting this to EA/ Rationality authors kept the task of building this database manageable.

- Tailored value. If we accept the premise that people in EA and Rationality often think and act similarly to each other, it seems reasonable that you, dear reader, may benefit most from recommendations made from within these communities.

Perhaps with more time and resources, the database could be expanded to include recommendations from a much wider and more diverse range of sources.

Who are Effective Self-Help, anyway?

We’re a small research organisation set up in November 2021 to offer more effective productivity and wellbeing advice. Up to now, we’ve been funded by pilot grants from the EA Infrastructure Fund. Take a look at our website or this post introducing the project for more information about what we do, what we hope to do, and why.

A final request…

If you find a recommendation you like and want to quickly help with our work, please fill out this 1-minute form letting us know what you’re planning to/ have already done. We can then send you a follow-up email in a month with a separate super-short form to estimate how useful this practice has been to you.

Understanding the real-world effectiveness of providing resources and recommendations like these is hard. Collecting data on how useful any of our recommendations are to you makes this easier.

Acknowledgements

Thank you to everyone whose articles I have drawn on for the recommendations in this database, and to the many helpful commenters on these articles. This includes (in no particular order):

Max Carpendale; Sam Bowman; Ryan Carey; Dwarkesh Patel; Rosie Campbell; Michael Aird; Melissa Neiman; Rachel Moskowitz; Mark Xu; Patrick Stadler; Neil Bowerman; Akash Wasil; Milan Cvitkovic; Will Bradshaw; Rob Wiblin; Arden Koehler; Daniel Frank; Katja Grace; Darmani; Michelle Hutchinson; Lynette Bye; Joey Savoie; Peter Wildeford; Daniel Kestenholz; Adam Zerner; Kat Woods; Elizabeth Van Nostrand; Aaron Bergman; Alexey Guzey; Jose Ricon; Philip Storry; Scott Alexander; Gavin Leech; David Megins-Nicholas; Yuri Akapov.

Thanks as well to Manon Gouiran and Simon Newstead for their help in reviewing this database, and to Michael Aird for first prompting my interest in building this.

Here is some info related to recommendations #1 and #9 about lighting: https://meaningness.com/sad-light-led-lux (David Chapman has put quite a bit of research into maximizing lux per dollar). I intend to try a setup similar to his, with some Jeep lights and a voltage transformer, to get 40,000-60,000 lux in my room. I'm going to add a smart plug so I can use an Apple Shortcuts automation to have the lights turn on with my wake-up alarm.