This post is about a question:

What does longtermism recommend doing in all sorts of everyday situations?

I've been thinking (on and off) about versions of this question over the last year or two. To be precise, I'm not looking for sharp answers which try to give the absolute best actions in various situations (those are likely to be extremely context-dependent, and perhaps also weird or hard to find), but for good blueprints for longtermist decision-making in everyday situations: pragmatic guidance which will tend to produce good outcomes if followed.

The first part of the post explains why I think this is an important question to look into. The second part talks about my current thinking and some tentative answers: that everyday longtermism might involve seeking to improve decision-making all around us (skewing to more important decision-making processes), while abiding by commonsense morality.

A lot of people provided some helpful thoughts in conversation or on old drafts; interactions that I remember as particularly helpful came from: Nick Beckstead, Anna Salamon, Rose Hadshar, Ben Todd, Eliana Lorch, Will MacAskill, Toby Ord. They may not endorse my conclusions, and in any case all errors, large and small, remain my own.

Motivations for the question

There are several different reasons for wanting an answer to this. The most central two are:

- Strong longtermism says that the morally right thing to do is to make all decisions according to long-term effects. But for many many decisions it's very unclear what that means.

  - At first glance the strong longtermist stance seems like it might recommend throwing away all of our regular moral intuitions (since they're not grounded in long-term effects). This could leave some dangerous gaps; we should look into whether they get rederived from different foundations, or if something else should replace them.

  - More generally it just seems like if longtermism is important we should seek a deep understanding of it, and for that it's good to look at it from many angles (and everyday decisions are a natural and somewhat important class).

- Having good answers to the question of everyday longtermism might be very important for the memetics / social dynamics of longtermism.

  - People encountering and evaluating an idea that seems like it's claiming broad scope of applicability will naturally examine it from lots of angles.

  - Two obvious angles are "what does this mean for my day-to-day life?" and "what would it look like if everyone was on board with this?".

  - Having good and compelling answers to these could be helpful for getting buy-in to the ideas.

  - I think an action-guiding philosophy is at an advantage in spreading if there are lots of opportunities for people to practice it, to observe when others are/aren’t following it, and to habituate themselves to a self-conception as someone who adheres to it.

  - For longtermism to get this advantage, it needs an everyday version. That shouldn't just provide a fake/token activity, but meaningful practice that is substantively continuous with the type of longtermist decision-making which might have particularly large/important long-term impacts.

  - If longtermism got to millions or tens of millions of supporters -- as seems plausible on timescales of a decade or three -- it could be importantly bottlenecked on what kind of action-guiding advice to give people.

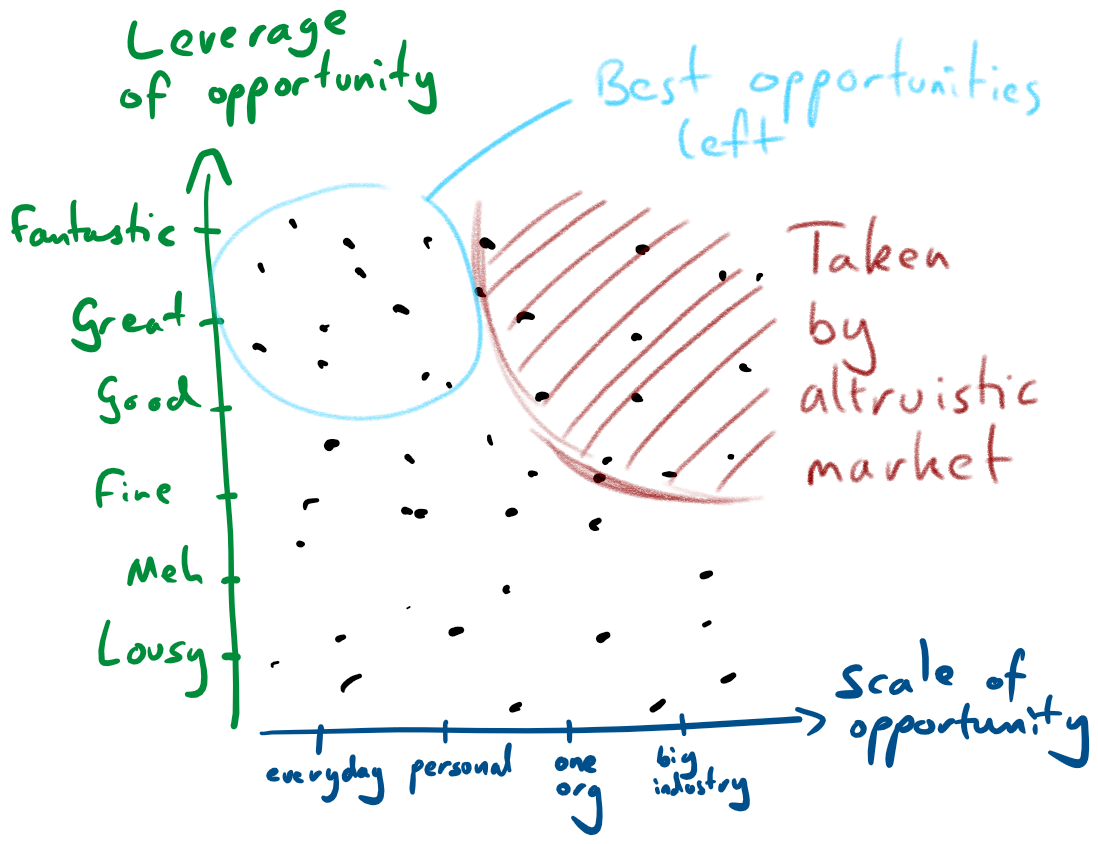

A third more speculative motivation is that the highest-leverage opportunities may be available only at the scale of individual decisions, so having better heuristics to help identify them might be important. The logic is outlined in the diagram below. Suppose opportunities naturally arise at many different levels of leverage (value out per unit of effort in) and scales (how much effort they can absorb before they’re saturated). In an ecosystem with lots of people seeking the best ways to help others, the large+good opportunities will all be identified and saturated. The best large opportunities left will be merely fine. For opportunities with small scale, however, there isn’t enough total value in exploiting them for the market to reliably have identified them. So there may be very high leverage opportunities left for individuals to pick up.

Of course this diagram is oversimplifying. It is an open question how efficient the altruistic market is even at large scales. It’s also an open question what the distribution of opportunities looks like to begin with. But even though it’s likely not this clean, the plausibility of the dynamic applying to some degree gives me reason to want decent guidance for longtermist action that can be applied on the everyday scale.
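
The leverage/scale dynamic described above can be made concrete with a toy simulation. This is only a sketch under invented assumptions: the log-normal distributions, the `SEARCH_COST` threshold and the function name `unclaimed_opportunities` are all hypothetical, and the rule that the "market" finds an opportunity exactly when its total value (leverage × scale) exceeds a fixed search cost is a deliberate simplification.

```python
import random

# Toy model of the leverage/scale dynamic. All distributions and the
# SEARCH_COST threshold are illustrative assumptions, not estimates.
SEARCH_COST = 50.0  # hypothetical: the market only finds opportunities worth more than this


def unclaimed_opportunities(n=10_000, seed=0):
    """Return the (leverage, scale) pairs the market leaves unexploited.

    leverage = value out per unit of effort in
    scale    = effort the opportunity can absorb before it is saturated
    An opportunity is found and saturated by the market only if its total
    value (leverage * scale) exceeds SEARCH_COST.
    """
    rng = random.Random(seed)
    left = []
    for _ in range(n):
        leverage = rng.lognormvariate(0.0, 1.0)  # assumed spread of leverage
        scale = rng.lognormvariate(0.0, 2.0)     # assumed (wider) spread of scale
        if leverage * scale <= SEARCH_COST:      # too little total value to be found
            left.append((leverage, scale))
    return left


left = unclaimed_opportunities()
best_small = max(lev for lev, sc in left if sc < 1.0)    # individual-sized opportunities
best_large = max(lev for lev, sc in left if sc >= 10.0)  # large opportunities
print(f"best remaining leverage, small scale: {best_small:.1f}")
print(f"best remaining leverage, large scale: {best_large:.1f}")
```

In this sketch any unclaimed opportunity with scale of at least 10 has leverage of at most `SEARCH_COST / 10 = 5` by construction, whereas small-scale opportunities face no comparable cap. How closely the real altruistic market resembles this is exactly the open question raised above.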

Interactions with patient/urgent longtermism

All of these reasons are stronger from a patient longtermist perspective than an urgent one. The more it's the case that the crucial moments for determining the trajectory of the future will occur quite soon, the less value there is in finding a good everyday longtermist perspective (versus just trying to address the crucial problems directly). I guess it has very limited leverage on anything in the next decade or two; is very important for critical junctures that are more than fifty or a hundred years away; and somewhere in the middle for timescales in the middle.

I think as a community we should have a portfolio which is spread across different timescales, and everyday longtermism seems like a really important question for the patient end of the spectrum.

Thoughts on some tentative answers

It seems likely to me that good blueprints will involve both some proxy goals and some other heuristics to follow (proxies have some advantages but also some disadvantages; I could elaborate on the thinking here but I don't have a super crisp way of expressing it and I'm not sure anyone will be that interested).

Good candidates for proxy goals would ideally:

- Be good (in expectation) for the long-term future;

- Specify something that is broad enough that many decision-situations have some interaction with the proxy;

- Be robust, such that slight perturbations of the goal or the world still leave something good to aim for;

- Have some continuity with good goals in more strategic (less "everyday") situations.

Cultivating good decision-making

The proxy goal that I (currently) feel best about is improving decision-making. I don't know how to quantify the goal exactly, but it should put more weight on bigger improvements; on more important decision-makers; and on the idea of good decision-making itself being important to foster.

An elevator pitch for a blueprint for longtermism which naturally comes with an everyday component might be something like:

> We help decision-makers care for the right things, and make good choices.
>
> We care about the long term, but it's mostly too far off to perceive clearly what to do. So our chief task is to set up the world of tomorrow for success by having people/organisations well placed to make good decisions (in the senses both of aiming for good things, and doing a good job of that).
>
> We practice this at every scale, with attention to how much the case matters.
>
> At the local level, everyone can contribute to this by nudging towards good decision-making wherever they see opportunities: at work; in their community; with family and friends. Collectively, we work to identify and then take particularly good opportunities for improving decision-making. This often means aiming at improving decision-making in particularly important domains, but can also mean looking for opportunities to make improvements at large scale. Currently, much of our work is in preventing global catastrophes; improving thinking about how to prioritise; and helping expose people to the ideas of longtermism.

For background on why I think cultivating good decision-making is robustly good for the long-term, see my posts on the "web of virtue thesis" and good altruistic decision-making as a basin in idea space.

Overall I feel reasonably good about this as a candidate blueprint for longtermism:

- It's basically a single coherent thing:

  - It connects up to philosophical motivations and down to action-guidance

  - The proxy of “good decision-making” is basically one I got to by thinking with the timeless lens on longtermism

- It contextualises existing EA work:

  - Spreading ideas of EA/longtermism is particularly important in the world today because these are crucial pieces missing from most decision-making

  - When we have good foresight over existential risks, these become key challenges of our time, and it becomes obviously good decision-making to work on them

- There are opportunities to help improve decision-making on all sorts of different scales including the everyday, so there are lots of chances for people to get their hands dirty trying to help things

Here are some examples of everyday longtermism in pursuit of this proxy:

- Alice votes for a political candidate whose character she has relative trust in (even though she disagrees with some of their policies), and encourages others to do the same.

  - Slowly contributing to the message "you need to be principled to be given power", which both:

    - makes it more likely that important decision-makers are principled/trustworthy

    - reinforces social incentives to be of good character

- Bob goes to work as a primary school teacher, and helps the children to perceive themselves as moral actors, as well as rewarding clear thinking.

- Clara is a manager in the tech industry, and encourages a mindset on her team of "do things properly, and for the right reasons" rather than just chasing short-term results. She talks about this at conferences.

- Diya works as a science journalist, and tries to give readers a clear picture of what we do and don't understand about how the future might go.

- Elmo hosts dinner parties, and exhibits curiosity about guests’ opinions, particularly on topics that touch on how the world may unfold over decades, warmly but robustly pushing back on parts that don’t make sense to him. He occasionally talks about his views on the importance of the long-term, and why it means it’s particularly valuable to encourage good thinking.

Abide by commonsense morality

While pursuing the proxy goal of improving decision-making, I would also recommend following general precepts of commonsense morality (e.g. don't mislead people; try to be reliable; be considerate of the needs of others).

There are a few different reasons that I think commonsense morality is likely to be largely a good idea (expanded below):

- It's generated by an optimisation process similar to the one that would ideally produce "commonsense longtermist morality"

- It can serve as a safeguard to help avoid the perils of naive utilitarianism

- It's an expensive-to-fake signal of good intent, so can help make longtermism look good

I don't think that commonsense morality will give on-the-nose the right recommendations. I am interested in the project of working out which pieces of commonsense morality can be gently put down, as well as which new ideas should be added to the corpus. However, I think it will get a lot of things basically right, and so it's a good place to start and consider deviations slowly and carefully.

Similar optimisation processes [speculative]

Commonsense morality seems like it's been memetically selected to produce good outcomes for societies in which it’s embedded, when systematically implemented at local level.

We'd like a "commonsense longtermist morality", something selected to produce good outcomes for larger future society, when systematically implemented at local level. Unfortunately finding that might be difficult. There's a lot of texture to commonsense morality; we might expect that the ideal version of commonsense longtermist morality would have similar amounts of texture. And we can't just run an evolutionary process to find a good version.

However, in an ideal world we might be able to run such an evolutionary process -- letting people take lots of different actions, magically observing which turned out well or badly for the long-term, and then selectively boosting the types of behaviour that produced good results. That hypothetical process would be somewhat similar to the real process that has produced commonsense morality. The outputs of those processes would be optimised for slightly different things, but I expect there would be a significant degree of alignment, since both outcomes benefit from generally-good-&-fair decisions, and are hurt by selfishness, corruption, etc.

Safeguarding against naive utilitarianism

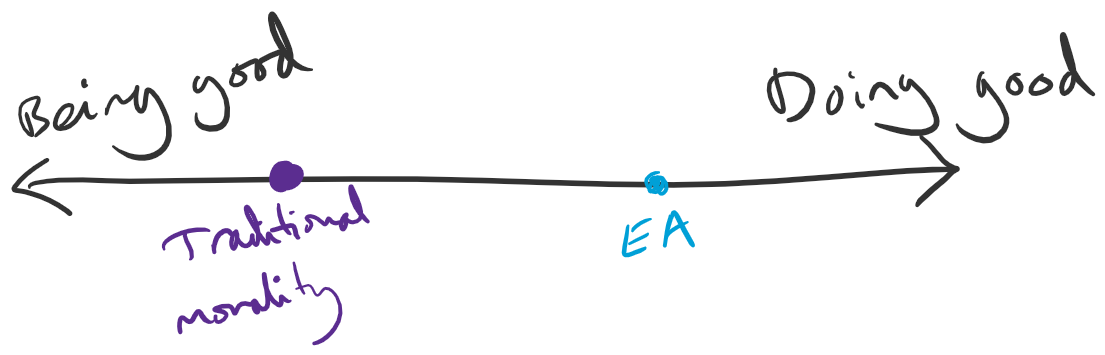

Roughly speaking, I think people sometimes think of the relationship between EA and traditional everyday morality as something like differing points on a spectrum:

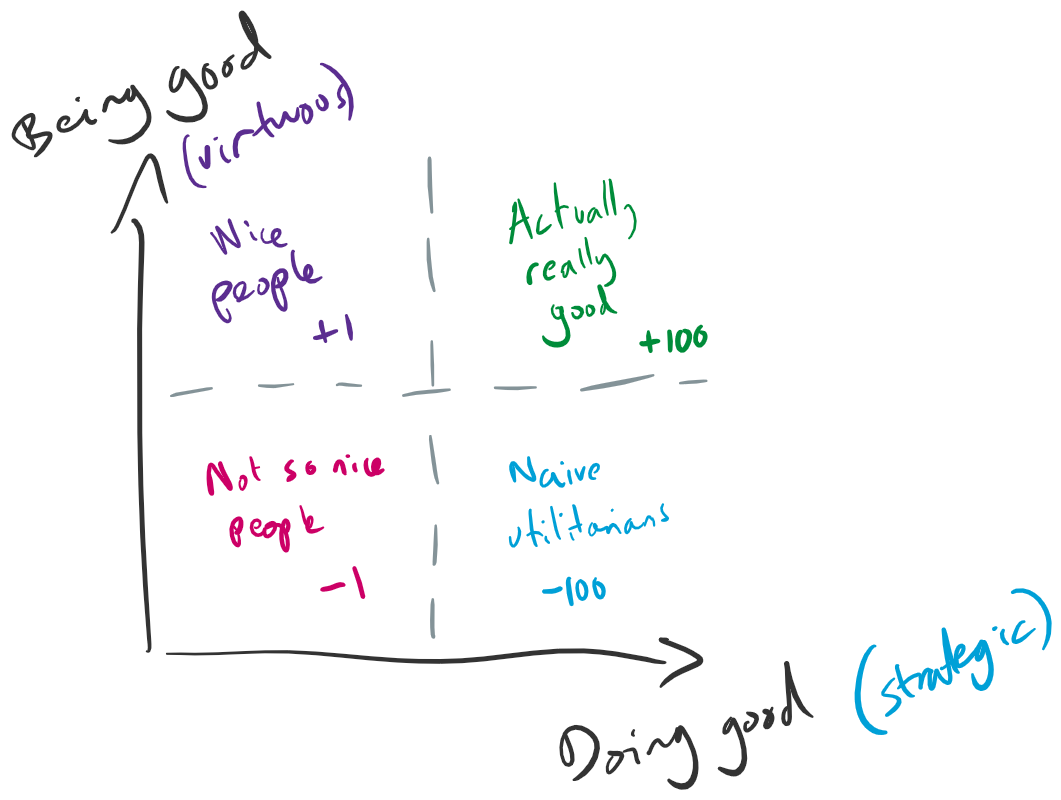

I think that these two dimensions are actually more orthogonal than in opposition (the spectrum only appears when you think about a tug of war about what “good” is):

Utilitarianism (along with other optimising behaviours) gets kind of a bad reputation. I think this is significantly because naive application can cause large and real harms, when the mechanisms of harm are indirect and so easy to sometimes lose sight of. I think that commonsense morality can serve as a backstop which stops a lot of these bad effects.

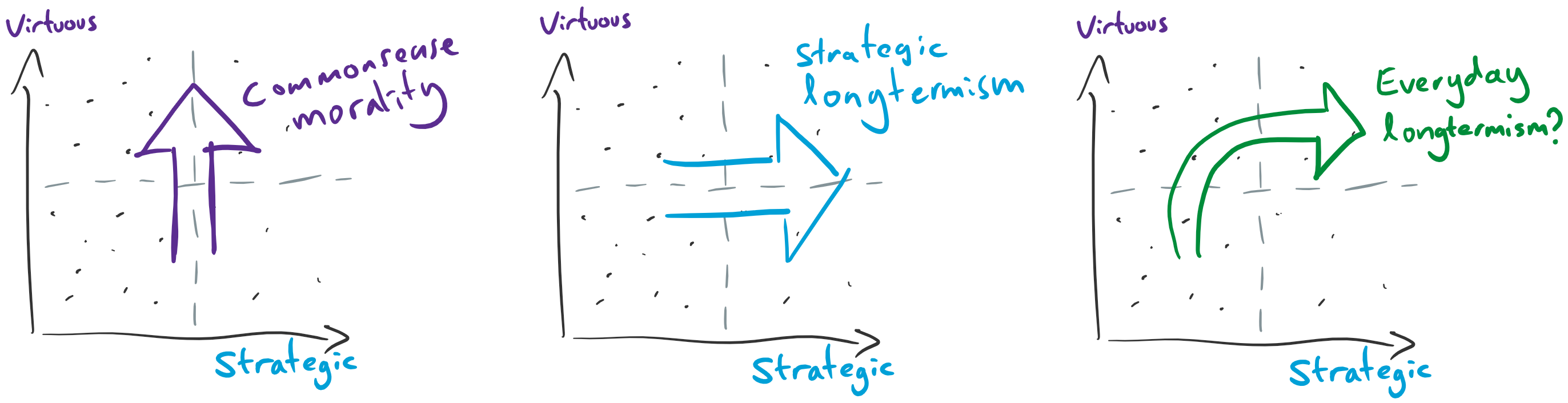

We might think of commonsense morality as a memetic force exerting upwards pressure in this diagram. What we might call "strategic longtermism" is a memetic force exerting rightwards pressure (which seems much more undersupplied in society as a whole). But given the asymmetry between the top-left and bottom-right quadrants, we'd ideally like a memetic force which routes via the top-left. I think this provides some reason to bundle (something in the vicinity of) commonsense morality in as part of the memetic package of longtermism. And then since (as I argued above) part of the purpose of having a good blueprint for everyday longtermism is to help the memetics of it by giving people something to practice, this should presumably be part of that blueprint. (And since commonsense morality is already something like a known quantity, it doesn't cost a great deal of complexity to include it as part of the message.)

Signalling value

As longtermism grows as a cultural force, it seems likely that people encountering it will use a variety of different means to judge what they think of it. Some people will try to examine the merits of the philosophical arguments; some people will consider whether the actions it recommends seem to make sense; some people will question the motivations of the people involved in promoting it.

I think that a lot of parts of commonsense morality stand in the way of corruption. This means that abiding by commonsense morality is relatively cheap for actors who are not self-interested, and relatively expensive for actors who are primarily self-interested. So it's a costly signal of good intent. I think this will help to make longtermism seem legitimate and attractive to people.

To be clear, if I thought abiding by commonsense morality was otherwise a bad idea, this consideration would be quite unlikely to tip it over into worthwhile. But I think it's anyway a good idea, and this consideration provides reason to prioritise it even somewhat beyond what would be suggested by its own merits.

Closing thoughts

I've laid out a case for considering the question of everyday longtermism. I feel confident that this is an important question, and think it deserves quite a bit of attention from the community.

I've also given my current picture of what seems like a good blueprint for everyday longtermism. I'd be surprised if that's going in totally the wrong direction, but I'd love to hear arguments that it is. On the other hand I think it's quite possible that the high-level picture is slightly off, very likely that I've made some errors of judgement in the details, and nigh-certain that there's a lot more detail that could productively be hashed out.

Love it! And I love the series of posts you have written lately.

I think that the suggestions here, and most of the arguments, should apply to "Everyday EA", which isn't necessarily longtermist. I'd be interested in your thoughts about where exactly we should draw the distinction between everyday longtermist actions and non-longtermist everyday actions.

Some further suggestions:

I share the view that that could be a good suggestion for an action/proxy in line with everyday longtermism.

Two collections of resources on cooperation (in the relevant sense) are the Cooperation & Coordination tag and (parts of) EA reading list: moral uncertainty, moral cooperation, and values spreading.

Thanks! I didn't see any post under that tag that had the type of argument I had in mind, but I think that this article by Brian Tomasik was what I intended (which I found now from the reading list you linked).

Yeah, I should've said "resources on cooperation (including in the relevant sense)", or something like that. The tag's scope is a bit broad/messy (though I still think it's useful - perhaps unsurprisingly, given I made it :D).

Yea, I think the tag is great! I was surprised that I couldn't find a resource from the forum, not that the tag wasn't comprehensive enough :)

It might be nice if someone would collect resources outside the forum and publish each one as a forum linkpost so that people could comment and vote on them and they'd be archived in the forum.

I've often thought pretty much exactly the same thought, but have sometimes held back because I don't see that done super often and thus worried it'd be weird or that there was some reason not to. Your comment has made me more inclined to just do it more often.

Though I do wonder where the line should be. E.g., it'd seem pretty weird to just try to linkpost every single journal article on nuclear war which I found useful.

Maybe it's easier to draw the line if this is mostly limited to posts by explicitly EA people/orgs? Then we aren't opening the door to just trying to linkpost the entire internet :D

Yea, I don't know. I think that it may even be worthwhile to linkpost every such journal article if you also write your notes on these and cross-link different articles, but I agree that it would be weird. I'm sure that there must be a better way for EA to coordinate on such knowledge building and management.

(Partly prompted by this thread, I've made a question post on whether pretty much all content that's EA-relevant and/or created by EAs should be (link)posted to the Forum.)

💖

I basically like all of these. I think there might be versions which could be bad, but they seem like a good direction to be thinking in.

I'd love to see further exploration of these -- e.g. I think any of your six suggestions could deserve a top-level post going into the weeds (& ideally reporting on experiences from trying to implement it). I feel most interested in #3, but not confidently so.

Gidon Kadosh, from EA Israel, is drafting a post with a suggested pitch for EA :)

I agree that quite a bit of the content seems not to be longtermist-specific. But I was approaching it from a longtermist perspective (where I think the motivation is particularly strong), and I haven't thought it through so carefully from other angles.

I think the key dimension of "longtermism" that I'm relying on is the idea that the longish-term (say 50+ years) indirect effects of one's actions are a bigger deal in expectation than the directly observable effects. I don't think that that requires e.g. any assumptions about astronomically large futures. But if you thought that such effects were very small compared to directly observable effects, then you might think that the best everyday actions involved e.g. saving money or fundraising for charities you had strong reason to believe were effective.

Hmm. There are many studies on "friend of a friend" relationships (say, this one on how happiness propagates through the friendship network). I think that it would be interesting to research how some moral behaviors or beliefs propagate through friendship networks (I'd be surprised if there isn't a study on the effects of a transition to a vegetarian diet, say). Once we have a reasonable model of how that works we could make a basic analysis of the impact of such daily actions. (Although I expect some non-linear effects that would make this very complicated.)

While I very much liked this post overall, I think I feel some half-formed scepticism or aversion to some of the specific ideas/examples.

In particular, when reading your list of "examples of everyday longtermism in pursuit of this proxy", I found myself feeling something like the following:

But I'm not sure how important these worries are. And maybe they can all be readily addressed just through careful choices of examples, avoiding emphasising the examples too much/in the wrong way, also mentioning other higher value activities, etc.

It might be interesting to compare that to everyday environmentalism or everyday antispeciesism. EAs have already thought about these areas a fair bit and have said interesting things about them in the past.

In both of these areas, the following seems to be the case:

EAs are already thinking a lot about optimizing #1 by default, so perhaps the project of "everyday longtermism" could be about exploring whether actions fall within #2 or #3 or #4 (and what to do about #4), and what the virtues corresponding to #5 might look like.

I appreciate the pushback!

I have two different responses (somewhat in tension with each other):

Ah, your first point makes me realise that at times I mistook the purpose of this "everyday longtermism" idea/project as more similar to finding Task Ys than it really is. I now remember that you didn't really frame this as "What can even 'regular people' do, even if they're not in key positions or at key junctures?" (If that was the framing, I might be more inclined to emphasise donating effectively, as well as things like voting effectively - not just for politicians with good characters - and meeting with politicians to advocate for effective policies.)

Instead, I think you're talking about what anyone can do (including but not limited to very dedicated and talented people) in "everyday situations", perhaps alongside other, more effective actions.

I think at times I was aware of that, but at other times I forgot it. That's probably just on me, rather than an issue with the clarity of this post or project. But I guess perhaps misinterpretations along those lines are a failure mode to look out for and make extra efforts to prevent?

---

As for concrete examples, off the top of my head, the key thing is just focusing more on donating more and more effectively. This could also include finding ways to earn or save more money. I think that those actions are accessible to large numbers of people, would remain useful at scale (though with diminishing returns, of course), and intersect with lots of everyday situations (e.g., lots of everyday situations could allow opportunities to save money, or to spend less time on X in order to spend more time working out where to donate).

To be somewhat concrete: In a scenario with 5 million longtermists, if we choose a somewhat typical teacher who wants to make the world better, I think they'd do more good by focusing a bit more on donating more and more effectively than by focusing a bit more on trying to cause their students to see themselves as moral actors and think clearly. (This is partly based on me expecting that it's really hard to have a big, lasting impact on those variables as a teacher, which in turn is loosely informed by research I read and experiences I had as a teacher. Though I do expect one could have some impact on those variables, and I think for some people it's worth spending some effort on that.)

That said, I think it makes sense to use other examples as well as donating more and more effectively. Especially now that I remember what the purpose of this project actually is. But I am a bit surprised that donating more and more effectively wasn't one of the examples in your list? Is there a reason for that?

---

I feel like that's a bit different. If I get a dedicated EA to do everyday things more efficiently, it's fairly obvious how that could result in more hours or dollars going towards very high impact activities. (I don't expect every hour/dollar saved to go towards very high impact activities, but a fair portion might.)

Perhaps you mean that EAs sometimes talk about this stuff in e.g. a Medium article aimed at the general public, perhaps partly with the intention of showing people how EA-style thinking is useful in a domain they already care about and thereby making them more likely to move towards EA in future. I do see how that is similar to the everyday longtermism project and examples.

But still, in that case the increased everyday efficiency of these people could fairly directly cause more hours/dollars to go towards high impact activities, if these people do themselves become EAs/EA-aligned. So it still feels a bit different.

---

I'm not sure how important or valid any of these points are, and, as noted, overall I really like the ideas in this post.

I believe the framing in the 80,000 Hours podcast was something like "when we run out of targeted things to do". But if we include global warming, depending on your temperature increase limit, we could easily spend $1 trillion per year. If people in developed countries make around $30,000 a year and they donate 10% of that, that would require about 300 million people. And of course there are many other global catastrophic risks. So I think it's going to be a long time before we run out of targeted things to do. But it could be good to do some combination of everyday longtermism and targeted interventions.
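
The arithmetic behind the 300 million figure can be checked with a short sketch. The three input figures are the ones quoted in the comment; the variable names are just illustrative.

```python
# Figures taken from the comment above; the variable names are illustrative.
annual_need_usd = 1_000_000_000_000  # $1 trillion per year
income_usd = 30_000                  # approximate annual income in developed countries
donation_percent = 10                # share of income donated

# Integer arithmetic keeps the per-person figure exact.
donation_per_person = income_usd * donation_percent // 100  # dollars per year
donors_needed = annual_need_usd / donation_per_person

print(f"donation per person: ${donation_per_person:,}")
print(f"donors needed: {donors_needed / 1e6:.0f} million")
```

Each donor gives $3,000 a year, so the total comes to roughly 333 million donors, consistent with the "about 300 million" quoted above.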

Agree - I think an interesting challenge is "when does this become better than donating 10% to the top marginal charity?"

I spent a little while thinking about this. My guess is that of the activities I list:

All of those numbers are super crude and I might well disagree with myself if I came back later and estimated again. They also depend on lots of details (like how good the individuals are at executing on those strategies).

Perhaps most importantly, they're excluding the internal benefits -- if these activities are (as I suggest) partly good for practicing some longtermist judgement, then I'd really want to see them as a complement to donation rather than just a competitor.

I really liked this post - both a lot of the specific ideas expressed, and the general style of thinking and communication used.

Vague example of the general style of thinking and communication seeming useful: When reading the "Safeguarding against naive utilitarianism" section, I realised that the basic way you described and diagrammed the points there was applicable to a very different topic I'd thought about recently, and provided a seemingly useful additional lens for that.

A few things that parts of this post made me think of:

I really appreciate you highlighting these connections with other pieces of thinking -- a better version of my post would have included more of this kind of thing.

This felt like I was reading my own thoughts (albeit better-articulated, and with lovely diagrams).

For a long time, I've been convinced that one of the community's greatest assets was "a collection of some of the kindest/most moral people in the [local area]". In my experience, people in the community tend to "walk the walk": They are kind, cooperative, and truth-seeking even outside their EA work.

Examples include:

I think that one of the best ways an average person can support EA, even if they can't easily change their career or donate very much, is to be a kind, helpful figure in the lives of those around them:

"You're so nice, Susan!"

"I just try to help people as much as I can!"

(I plan to publish a post on this cluster of ideas at some point.)

Mostly, I've been thinking about the social/movement growth benefits of the community having a ton of nice people, but I agree that such behavior also seems likely to aid in our uncovering good ways to make good decisions and developing good moral systems for long-term impact.

On a related note, I often refer back to these words of Holden Karnofsky:

Brainstorming some concrete examples of what everyday longtermism might look like:

> Alice is reviewing a CV for an applicant. The applicant does not meet the formal requirements for the job, but Alice wants to hire them anyway. Alice visualizes the hundreds of people making a similar decision to hers. She would be ok with hiring this specific applicant, because she trusts her instincts a lot. But she would not trust 100 people in a similar position to make the right choice; ignoring the recruiting guidelines might disadvantage minorities in an illegible way. She decides that the best would be if everyone chose to just follow the procedure, so she chooses to forego her intuition to favor better decision making overall.

> Beatrice is offered a job as a Machine Learning engineer to help the police with some automated camera monitoring. Before deciding whether to accept, she seeks out open criticism of that line of work, and tries to imagine some likely consequences of developing that kind of technology, both positive and negative. After weighing these up, she realizes that while it will most likely be positive there is a plausible chance that it will enable really bad situations, and rejects the job offer.

> Carol reads an interesting article. She wants to share it on social media. She could spend some effort paraphrasing the key ideas in the article, or just share the link. She has internalized that spending one minute summarizing key ideas might well save a lot of time for her friends, who can use her summary to decide whether to read the whole article. Out of habit she summarizes the article as best as she can, making it clear who she genuinely thinks would benefit from reading the article.

You argue that some guidance about "What does longtermism recommend doing in all sorts of everyday situations?" could help people become inclined towards and motivated by EA/longtermism. You also argue that aligning/packaging longtermism with (aspects of) commonsense morality could help achieve a similar objective, partly via signalling good things about longtermists/longtermism in a way that's expensive to fake.

I agree with these points, and might add two somewhat related points:

Thanks, I agree with both of those points.

In this 2017 post Emily Tench talks about "The extraordinary value of ordinary norms", which (I think) she wrote during an internship at CEA, where she got feedback and comments from Owen and others.

i feel interested in how attention interacts with this. if we are able to pay more attention (to our bodies, to others, to the world around us), are we able to make better decisions than we would otherwise? would our actions, with this extra information, mean less harm needed to be undone?

The way I see the argument in this post is that you pick out a value/capacity which you think would be especially desirable for the future to hold (in this case "good decision-making") and then track back to the individual acts which might help propagate it.

If this is the correct model of the argument, might it be the case that more narrowly defined values/capacities are more memetically strong and morally robust? In my opinion many aspects of "good decision-making" might lead to worse outcomes. For example, the focus on character in politicians mentioned above might be present in the best possible worlds, but in many nearer possible worlds it is a failed proxy which leads to politicians being selected because they seem reliable rather than because they have the best policies. Or perhaps many people who learn a few decision-making skills become overconfident and stray into a naive version of the goal. In contrast, more finite goals such as "abolish factory farming" or "promote anti-racism" are (in my opinion) less likely to lead to false proxies, and clearly propagate along social networks because they incorporate their own ends. Not every aspect of an imperfect decision-making practice is bad or leads to bad outcomes, but with narrower goals like those mentioned above, falling short of the goals is always bad. My impression is that this is a sign of a better proxy for what is good.

I'd love to know whether people think this is on the right track. I don't know very much about the strength of the "good decision-making" goal, so it would be great to hear more about its strengths.