Epistemic Status: I think this is a real issue, and think the importance of the issue varies significantly by introductory experience/facilitator.

TLDR

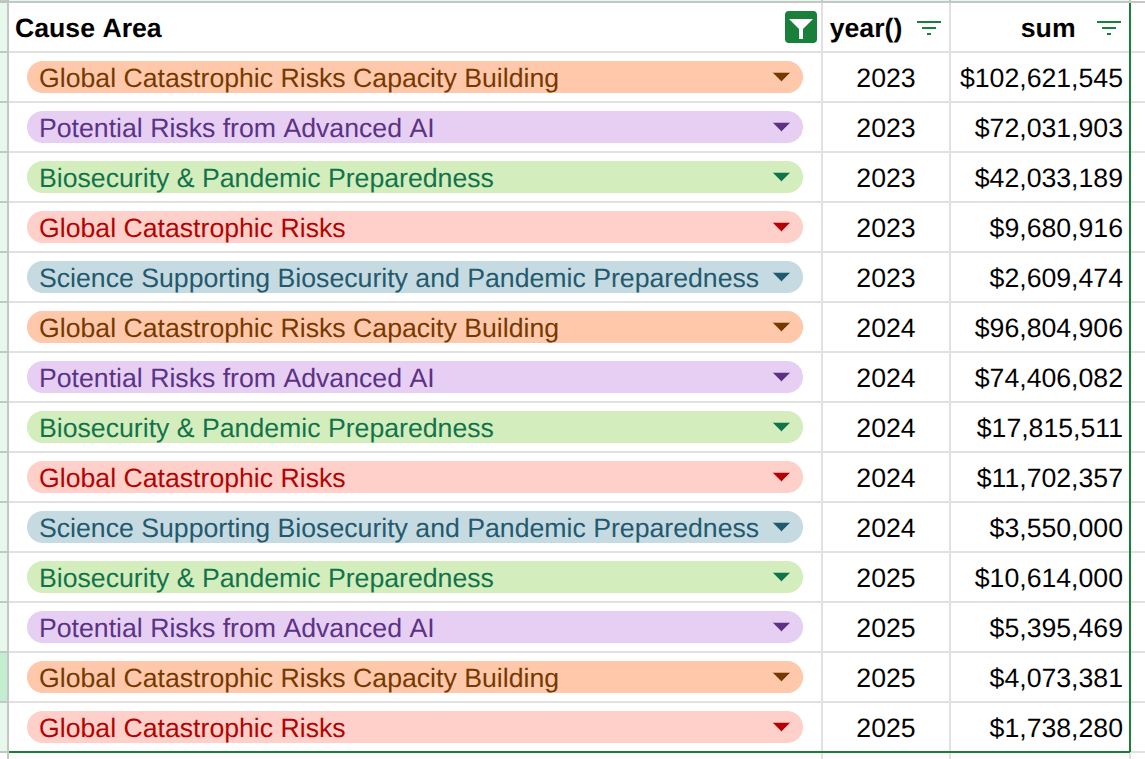

- EA introductions present the movement as cause-neutral, but newcomers discover that most funding and opportunities actually focus on longtermism (especially biosecurity and AI)

- This creates a "bait and switch" problem where people join expecting balanced cause prioritization but find stark funding hierarchies

- The solution is honest upfront communication about EA's current priorities rather than pretending to be more cause-neutral than we actually are

- We should update intro fellowship curricula to include transparent data about funding distribution and encourage open discussion about whether EA's shift toward longtermism represents rational updating or cultural bias

1. The Problem (Personal Experience)

In EA Bath, we have a group member who's exactly the kind of person you'd want in EA - agentic, impact-minded, thoughtful about doing good. They had their first encounter with the broader EA world at the Student Summit in London last month, and came back genuinely surprised. They're interested in conservation and plant biology, but the conference felt like AI dominated everything - something like half the organisations there were AI-focused. They left wondering whether EAs as a community actually have much to offer someone like them.

This got me thinking about my own approach to introducing EA. When I'm tabling at university, talking to students walking by, I instinctively mention climate change and pandemics before AI. I talk about cause areas broadly, emphasising how we think carefully about cause prioritisation. But my group member's experience made me realise there's a gap - I'm presenting the concept of cause prioritisation without being transparent about just how stark the funding disparities actually are between different causes.

This isn't just about the group member, or about my particular approach to outreach. I think there's a systematic pattern where people interested in areas like conservation, biodiversity, or education policy in developed countries discover that EA has relatively little interest in funding work or opportunities in their domains. EA introductions do discuss cause prioritisation, but they don't make it clear enough that there are massive disparities in resources and attention between different cause areas. The result is that newcomers often feel confused or sidelined when they discover how dramatically unequal the actual allocation of EA resources really is.

2. The Evidence

My group member's experience reflects a broader pattern supported by data showing that EA's actual priorities don't match how we typically present ourselves to newcomers.

Conference and organisational focus

At the 2025 Student Summit, roughly half the organisations were AI-focused. At EAG London, it was a similar split - about 50% of organisations focusing on AI or AI plus biosecurity, and 50% everything else. For someone new to EA, the audience the Student Summit was aimed at, these events provide their first concrete sense of what the community actually works on. I liked the Student Summit, and rated it highly in my feedback. I also appreciate that the organisers reached out to a diverse range of organisations, but the reality is that the organisations who said yes were focused on existential risks.

The engagement effect

Perhaps most tellingly, the 2024 EA Survey revealed a stark pattern: among highly engaged EAs, 47% most prioritised only a longtermist cause while only 13% most prioritised a neartermist cause. Among less engaged EAs, the split was much more even - 26% longtermist vs 31% neartermist. This suggests that as people become more involved in EA, they systematically shift toward longtermist priorities. But newcomers aren't told about this progression.

Community dissatisfaction

The 2024 EA Survey found that the biggest source of dissatisfaction within the community was cause area prioritisation, specifically the perceived overemphasis on longtermism and AI. If existing EAs are feeling this tension, imagine how much stronger it must be for newcomers who weren't expecting it.

Ideological tendencies

As Thing of Things argues in "What Do Effective Altruists Actually Believe?", EA has distinctive ideological patterns that go beyond our stated principles - tendencies toward consequentialism, rationalism, specific approaches to politics and economics. These aren't mentioned in most EA introductions, but they significantly shape how the community operates and what work gets prioritised. These patterns are the opinions of one person, but somewhat similar patterns are discussed by Peter Hartree and Zvi.

Career advice concentration

This pattern extends to career guidance as well. 80,000 Hours, long considered EA's flagship career advice organisation, has pivoted toward AI-focused career recommendations. This shift has been significant enough that EA group organisers are now questioning whether they should still recommend 80k's advising services to newcomers, as discussed in recent forum posts. While I feel 80k is appropriately transparent about this focus on their website, someone who hears about "80,000 Hours career advice" at an EA intro talk and then explores their resources or signs up for their newsletter might be surprised to find most content centred on AI careers rather than the broader cause-neutral career guidance they might have expected.

3. Why This Matters + What Honest Introductions Could Look Like

This transparency gap creates real problems for both newcomers and the EA community itself.

The "bait and switch" problem

When someone gets interested in EA through cause-neutral principles and then discovers the stark funding hierarchies, it feels misleading. My group member's experience (probably) isn't unique - people enter thinking they're joining a movement that evaluates causes objectively, and then find that some causes have been pre-judged as dramatically more important. This creates confusion and, potentially, disillusionment.

We lose valuable perspectives

People passionate about education policy, conservation, or other lower-prioritised areas often drift away from EA when they realise how little space there is for their interests. But these are exactly the people who might bring fresh insights, identify overlooked opportunities, or build bridges to other communities. When we're not upfront about cause prioritisation, we're selecting for people who happen to align with existing EA priorities rather than people who might expand or challenge them. (I'll address the obvious objection - that being more transparent might worsen this selection bias - in section 4.)

What honest introductions could look like

The solution isn't to present every possible cause area and their relative funding levels - that would be overwhelming and impossible to keep current. Instead, we can be earnest about the realities without being exhaustive.

Chana Messinger writes compellingly about the power of earnest transparency in community building. Rather than scripted interactions that hide uncomfortable realities, she suggests being upfront: "I'm engaged in a particular project with my EA time... I'm excited to find people who are enthused by that same project and want to work together on it. If people find this is not the project for them, that's a great thing to have learned."

For individual conversations, this might mean saying something like: "EA principles suggest we should be cause-neutral, but in practice, most EA funding and career opportunities currently focus on AI safety and other longtermist causes. There's ongoing debate about this prioritisation, and we welcome people interested in other areas, but you should know what the current landscape looks like."

A concrete, scalable approach - Updating the Intro Fellowship

Rather than relying on individual organisers to navigate these conversations perfectly, we could update the intro fellowship curriculum itself. This might mean creating a forum post or resource that presents the evidence from this post - showing the actual funding landscape, conference composition, and survey data on cause prioritisation. We could be transparent about EA's historical shift toward longtermism and AI risk over the past decade, presenting it as an interesting case study in how social movements evolve rather than something to hide.

This would require someone to update the resource annually as new data becomes available, but it would ensure that everyone going through the fellowship gets the same honest picture of EA's current priorities. It would also make the information feel less like a personal opinion from their local organiser and more like objective information about the movement. Such a resource could sit alongside the existing materials about EA principles - not replacing them, but providing the context that those principles currently operate within a community with particular priorities and funding patterns.

4. Objections & Responses

"This creates selection bias for people who already agree with EA priorities"

This is my main worry, and it directly relates to the point in section 3 about losing valuable perspectives. If we're explicit about AI/longtermism focus, we might inadvertently filter for people who already lean that way, losing the diversity of perspectives we claim to value. But I think we're already selecting for these people - we're just doing it inefficiently, after they've invested time in learning about EA. Better to be honest upfront and actively encourage people with different interests to engage critically rather than pretend EA is more cause-neutral than it actually is.

"There isn't time for this level of nuance"

In a two-minute elevator pitch or an Instagram post, there genuinely isn't space to explain funding hierarchies and ideological tendencies. But I think this misses where the transparency matters most. The crucial moments aren't the initial hook, but the follow-up conversations, the intro fellowship materials, and the first few meetings someone attends. These are when people are deciding whether to get seriously involved, and when honest conversations about cause prioritisation could prevent later disappointment.

"This will put people off who might otherwise contribute"

Possibly. Some people who would have stayed if they thought EA was more cause-neutral might leave earlier if they know about the longtermism dominance. But this cuts both ways - we might also attract people who are specifically interested in the causes EA actually prioritises, rather than people who join under false pretenses and leave disappointed later.

"We can't possibly mention every cause area people might care about"

True. The goal isn't comprehensive coverage but basic honesty about the current distribution of resources and attention. We can't predict every cause area someone might be passionate about, but we can acknowledge that EA's current priorities are more concentrated than our principles might suggest.

The alternative to these uncomfortable conversations isn't neutrality - it's deception by omission.

5. Conclusion

EA has powerful principles and valuable insights about doing good effectively. But when we introduce these ideas without being transparent about how they're actually applied in practice, we create unnecessary confusion and missed opportunities.

My group member's surprise at the Student Summit could have been avoided with more honest upfront communication about where EA resources and attention actually go. They might still have chosen to engage with EA - plenty of people interested in conservation find value in EA principles and community even if they don't work on the highest-prioritised cause areas. But they could have made that choice with full information rather than discovering it by accident.

The solution isn't to abandon cause prioritisation or pretend all causes are equally prioritised in EA. It's to be honest about what EA actually looks like while remaining open to the people who might help us do better. This means acknowledging funding hierarchies, explaining how cause prioritisation works in practice, and creating space for productive disagreement rather than surprise and disillusionment.

Chana Messinger is right that earnestness is powerful. We can trust people to handle honest information about EA's realities. The alternative - presenting EA as more cause-neutral than it actually is - serves no one well.

Massive thanks to Ollie Base for giving the encouragement and feedback that got me to write this :)

Disclaimer: This post was written with heavy AI assistance (Claude), with me providing the ideas, evidence, personal experiences, and direction while Claude helped with structure and drafting. I'm excited about this collaborative approach to writing and curious whether it produces something valuable, but I'm very aware this may read as AI-generated. I'd be keen to hear feedback on both the content and this writing process - including whether the AI assistance detracts from the argument or makes it less engaging to read. The conversation I used to write this is here: https://claude.ai/share/e75cd51a-0513-4447-a264-ded89bc1189a.

I discovered EA well after my university years, which maybe gives me a different perspective. It sounds to me like both you and your group member share a fundamental misconception of what EA is, what questions are the central ones EA seeks to answer. You seem to be viewing it as a set of organizations from which to get funding/jobs. And like, there is a more or less associated set of organizations which provide a small number of people with funding and jobs, but that's not central to EA, and if that is your motivation for being part of EA, then you've missed what EA is fundamentally about. Most EAs will never receive a check from an EA org, and if your interest in EA is based on the expectation that you will, then you are not the kind of person we should want in EA. EA is, at its core, a set of ideas about how we should deploy whatever resources (our time and our money) that we choose to devote to benefiting strangers. Some of those are object level ideas (we can have the greatest impact on people far away in time and/or space), some are more meta level (the ITN framework), but they are about how we give, not how we get. If you think that you can have more impact in the near term than the long term, we can debate that within EA, but ultimately as long as you are genuinely trying to answer that question and base your giving decisions on it, you are doing EA. You can allocate your giving to near term causes and that is fine. But if you expect EAs who disagree with you to spread their giving in some even way, rather than allocating their giving to the causes they think are most effective, then you are expecting those EAs to do something other than EA. EA isn't about spreading giving in any particular way across cause areas, it is about identifying the most effective cause areas and interventions and allocating giving there. The only reason we have more than one cause area is because we don't all agree on which ones are most effective.

But that isn't true, never has been, and never will be. Most people who are interested in AI safety will never find paid work in the field, and we should not lead them to expect otherwise. There was a brief moment when FTX funding made it seem like everyone could get funding for anything, but that moment is gone, and it's never coming back. The economics of this are pretty similar to a church - yes there are a few ...