Crossposted to LessWrong

TL;DR: Quantified Intuitions helps users practice assigning credences to outcomes with a quick feedback loop. Please leave feedback in the comments, join our Discord, or send thoughts to aaron@sage-future.org.

Quantified Intuitions

Quantified Intuitions currently consists of two apps:

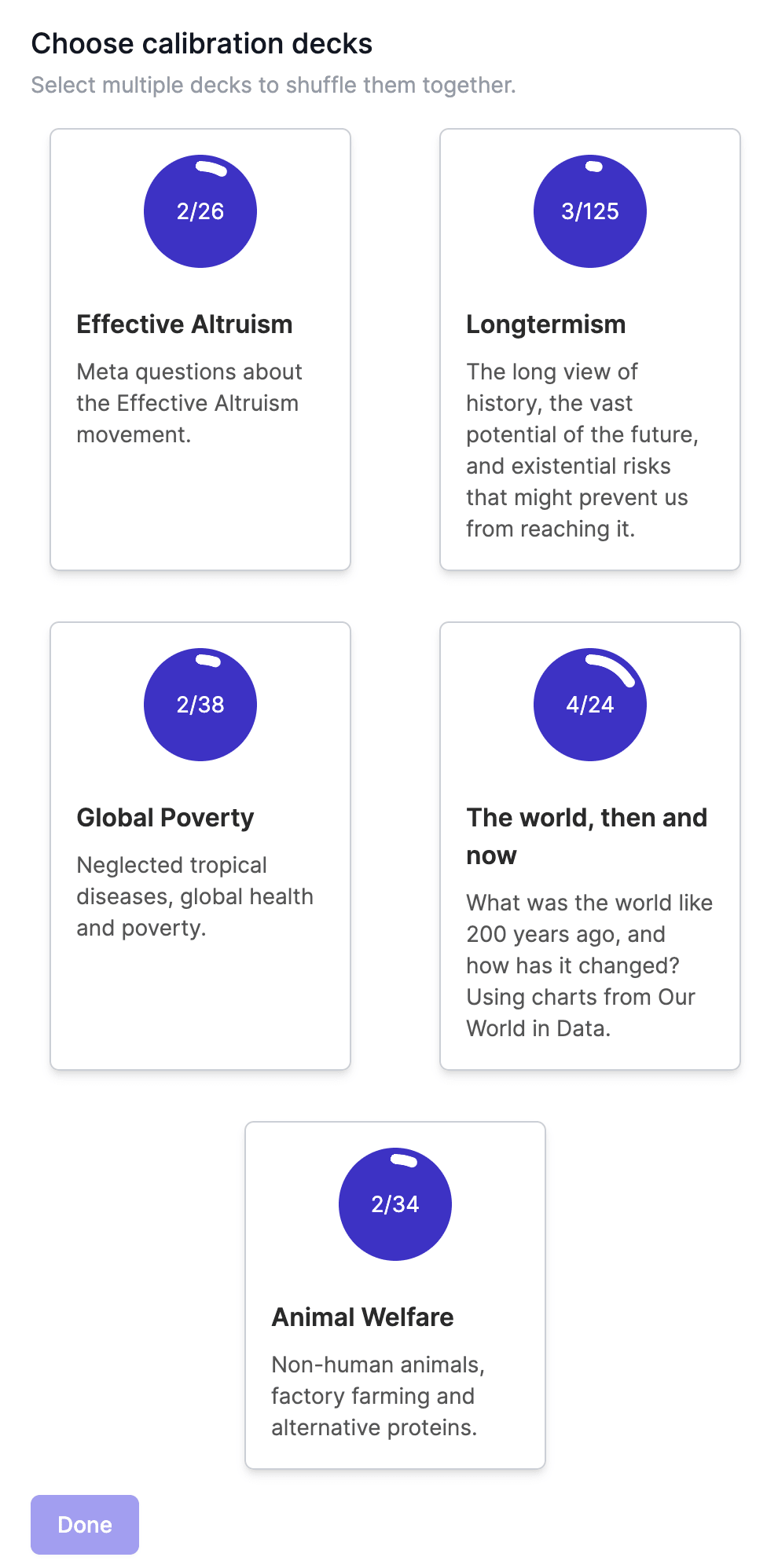

- Calibration game: Assigning confidence intervals to EA-related trivia questions.
  - Question sources vary, but many are from the Anki deck for "Some key numbers that (almost) every EA should know".
  - Compared to Open Philanthropy’s calibration app, it currently has a less diverse set of questions (hopefully more interesting to EAF/LW readers), but the app is more modern and nicer to use in some ways.
- Pastcasting: Forecasting on already resolved questions that you don’t have prior knowledge about.
  - Questions are pulled from Metaculus and Good Judgment Open.
  - More info on the motivation and how it works is in the LessWrong announcement post.

Please leave feedback in the comments, join our Discord, or send it to aaron@sage-future.org.

Motivation

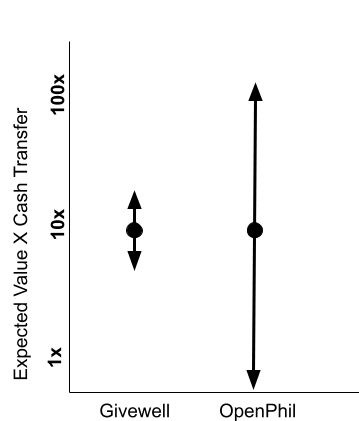

There are huge benefits to using numbers when discussing disagreements: see “3.3.1 Expressing degrees of confidence” in Reasoning Transparency by OpenPhil. But anecdotally, many EAs still feel uncomfortable quantifying their intuitions and continue to prefer words like “likely” and “plausible”, which can be interpreted in many ways.

This issue is likely to get worse as the EA movement attempts to grow quickly, with many new members joining from varied backgrounds and with differing views on the value of subjective credences. We hope that Quantified Intuitions can help both new and longtime EAs become more comfortable turning their intuitions into numbers.

More background on motivation can be found in Eli’s forum comments here and here.

Who built this?

Sage is an organization founded earlier this year by Eli Lifland, Aaron Ho and Misha Yagudin (in a part-time advising capacity). We’re funded by the FTX Future Fund.

As stated in the grant summary, our initial plan was to “create a pilot version of a forecasting platform, and a paid forecasting team, to make predictions about questions relevant to high-impact research”. While we built a decent beta forecasting platform (which we plan to open source at some point), the pilot for forecasting on questions relevant to high-impact research didn’t go that well due to (a) difficulties in creating resolvable questions relevant to cruxes in AI governance and (b) the limited time of talented forecasters. Nonetheless, we are still growing Samotsvety’s capacity and taking occasional high-impact forecasting gigs.

Eli was also struggling personally around this time and updating toward AI alignment being super important but crowd forecasting not being a very promising way to attack it. He stepped down and is now advising Sage part-time.

Meanwhile, we pivoted to building the apps contained in Quantified Intuitions to improve and maintain epistemics in EA. Aaron wrote most of the software for both apps over the past few months; Alejandro Ortega helped with the calibration game questions, and Alina Timoshkina helped with a wide variety of tasks.

If you’d like to contact Sage, you can message us on EAF/LW or email aaron@sage-future.org. If you’re interested in helping build apps similar to the ones on Quantified Intuitions or improving the current apps, fill out this expression of interest. It’s possible that we’ll hire a software engineer, product manager, and/or generalist, but we don’t have concrete plans.

This is a great idea, so we made Anki with Uncertainty to do exactly this!

Thank you Hauke for the suggestion :D

I think we'll keep the calibration app as a pure calibration training game, where you see each question only once. Anki is already the king of spaced repetition, so adding calibration features to it seemed like a natural fit.