This poll was linked in the July edition of the Effective Altruism Newsletter.

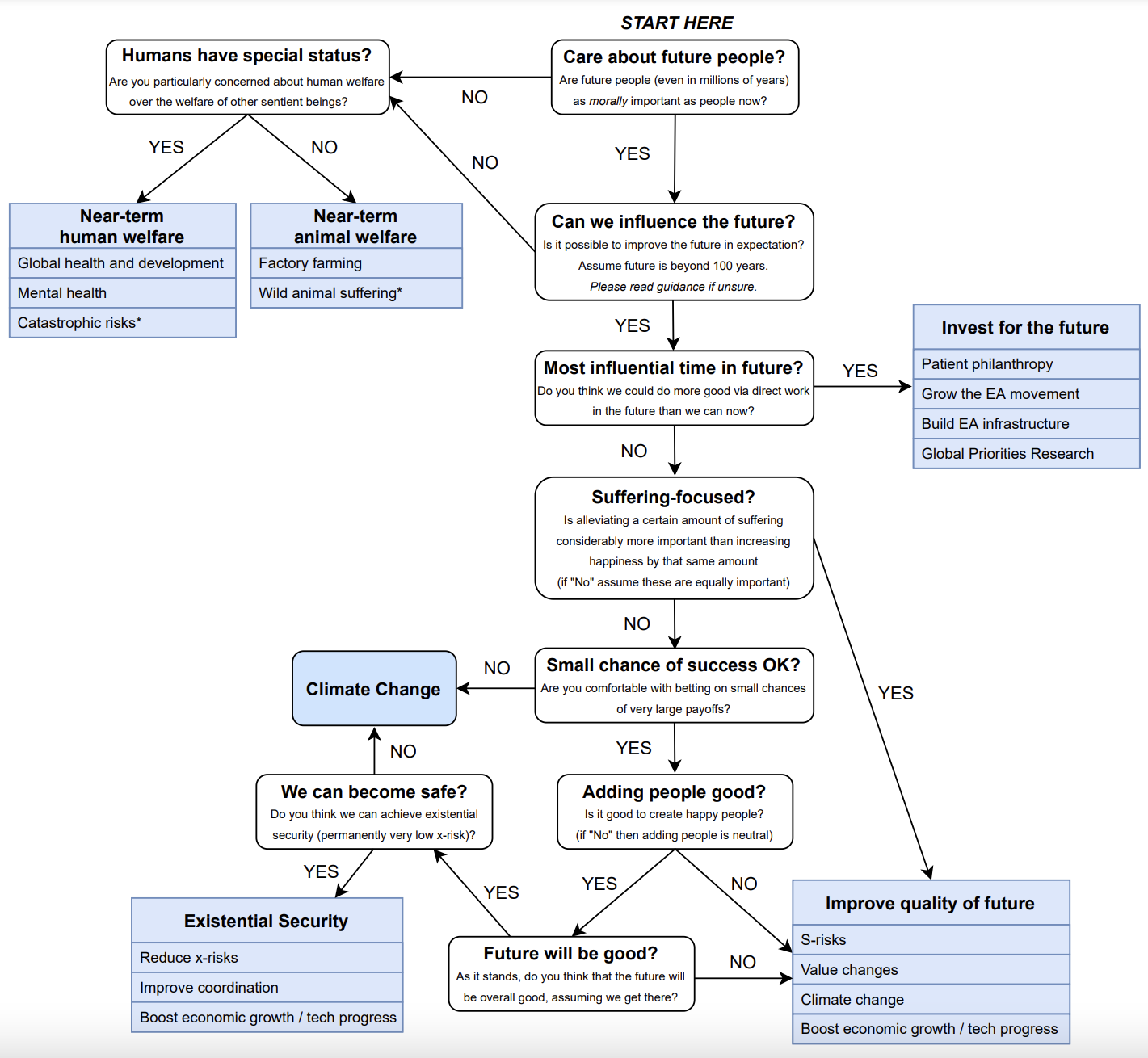

I've chosen this question because Marcus A. Davis's Forum post, featured in this month's newsletter, raises a difficult problem: if we are uncertain about our fundamental philosophical assumptions, how can we prioritise between causes?

There is no neutral position. If we want to do something, we first have to focus on a cause. And the difference in importance and tractability between one cause and another is likely to be the largest determinant of the impact we have.

And yet, cause-prioritisation decisions often run into a philosophical quagmire. What should we do? How do you personally deal with this difficulty?

I think GiveWell's criterion that effective charities need to be 10x better than direct cash transfers is deeply flawed, for many reasons.

- We might each consider giving to local needs in our own neighborhood as well as to top charities, no matter how far away. Why? Because you can't let your own neighborhood decay while you optimize bang for buck elsewhere. The argument that only a few people will optimize while plenty will give locally is a good reason to adjust your ratio, but you are still responsible for where you live. On the other hand, so many people don't give at all, so you're needed locally.

- We live in a vast reality, and not all important considerations are knowable; some hide in areas we are ignorant of. So balancing with common sense, which is purely subjective, is our only recourse. It has kept humanity alive so far, and we shouldn't abandon it.

- Following from the above, one might imagine that a particular cause area, and specific charities within it, have many benefits whose impact simply can't be measured by the specific criteria used in current effective giving formulas. And why 10x? What kind of arbitrary threshold is 10x? There has to be more to it. Philosophy is part of that, but so are deep ancient wisdom and common sense. What about root causes that affect so much else? A root cause may be vastly more important because of how much it influences, and it can't be compared apples to apples using a 10x measurement. If the way these charities are measured sometimes misses this root-cause multiplier, the results could in reality be way off.

- Giving to causes you care about has value. If we disconnect from our own emotional experiences and values, we become not humans but robots optimizing algorithms. Losing our humanity leads to things like FTX. Not optimal.

- In the end, there should be a balance that suits you. GiveWell should be one trusted source woven in with others to find your best balance. We should keep spreading awareness of effective giving so that others can add it to their own balance. It's not either/or but both/and. If your best balance gives you a sense that you are taking responsibility locally while optimizing globally, and that puts some turbo into your engine, then you go much faster and it feels great, which makes you more effective at everything in your life. If you feel any anxiety about your contribution to good in this world, consider how your balance is: have certain pressures pushed you away from what is best for you? Lots of EAs have suffered guilt at not doing enough. I'd suggest being both EA and open to other ways of thinking too. Balance.