We can use Nvidia's stock price to estimate plausible market expectations for the size of the AI chip market, and we can use that to back out expectations about AI software revenues and value creation.

Doing this helps us understand how much AI growth society expects, and how EA expectations compare. It's similar to an earlier post that used interest rates in the same way, though I'd argue the prices of AI companies are more useful right now, since they're more targeted at the figures we most care about.

The exercise requires making some assumptions which I think are plausible (but not guaranteed to hold).

The full analysis is here, but here are some key points:

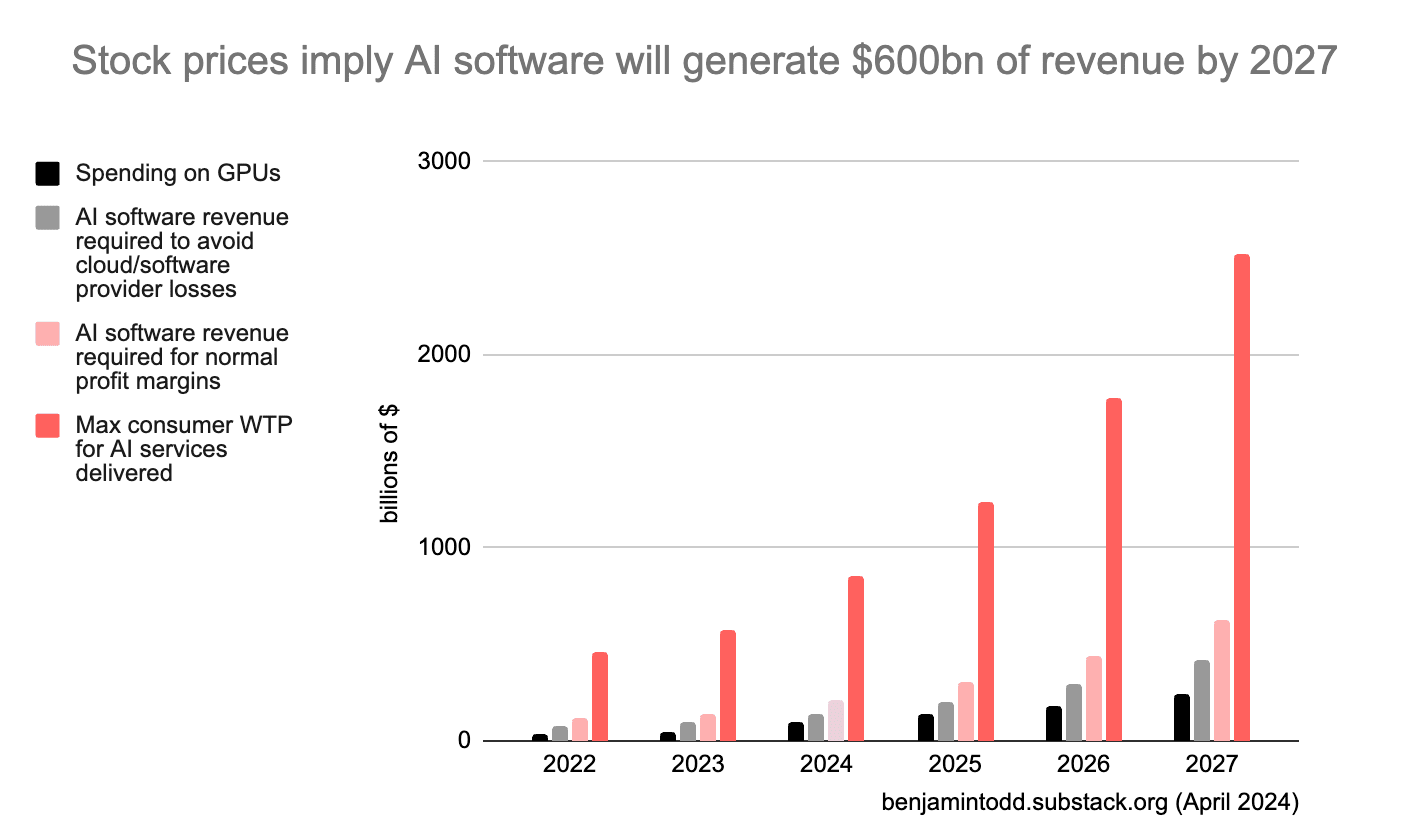

- Nvidia’s current market cap implies the future AI chip market reaches ~$180bn/year or more (at current margins), then grows at average rates after that (so around $200bn by 2027). If margins or market share decline, revenues need to be even higher.

- Actually using these chips in data centre servers costs another ~80% on top of the chip spend, for other hardware and electricity; the AI software company that rents the chips will then typically incur at least another 40% in labour costs.

- This means that with $200bn/year spent on AI chips, AI software revenues need to reach $500bn/year for these groups to avoid losses, or $800bn/year to make normal profit margins. That would likely require consumers to be willing to pay up to several trillion dollars for these services.

- The typical lifetime of a GPU implies that revenues would need to reach these levels before 2028. A simple model with 35% annual growth in GPU spending gives year-by-year revenue estimates, as shown in the chart below.

- This isn’t just about Nvidia: other estimates (e.g. Microsoft's stock price) seem consistent with these figures.

- These revenues seem high in that they require a big scale-up from today, but low if you think AI could start to automate a large fraction of jobs before 2030.

- If market expectations are correct, then by 2027 the amount of money generated by AI will make it easy to fund $10bn+ training runs.
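The cost-stack arithmetic in the bullets above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the author's model: it reads the ~80% (other hardware and electricity) and 40% (labour) figures as successive markups, which reproduces the ~$500bn breakeven figure, and it projects chip spend backwards from ~$200bn in 2027 at a constant 35% a year. It does not derive the $800bn "normal margin" figure; it only backs out the margin that figure implies. All constant and function names are hypothetical.

```python
# Minimal sketch of the post's cost-stack arithmetic (not the author's full model).
# Assumption: each percentage is a markup on the stage before it
# (chips -> +80% other hardware and electricity -> +40% labour),
# which reproduces the post's ~$500bn/year breakeven figure.

CHIP_SPEND_2027_BN = 200.0  # ~$200bn/year AI chip market by 2027 (from the post)
DC_OVERHEAD = 0.80          # other hardware + electricity, as a share of chip spend
LABOUR_MARKUP = 0.40        # labour costs on top of the data centre cost (assumed markup)
GROWTH = 0.35               # annual growth in GPU spending (from the post)


def breakeven_revenue(chip_spend_bn: float) -> float:
    """AI software revenue needed to cover chips, data centre costs and labour."""
    data_centre_cost = chip_spend_bn * (1 + DC_OVERHEAD)
    return data_centre_cost * (1 + LABOUR_MARKUP)


def implied_margin(revenue_bn: float, chip_spend_bn: float) -> float:
    """Profit margin implied by a given revenue level under this cost stack."""
    cost = breakeven_revenue(chip_spend_bn)
    return (revenue_bn - cost) / revenue_bn


def chip_spend_path(end_year: int, end_spend_bn: float, start_year: int) -> dict[int, float]:
    """Chip spend per year, assuming constant GROWTH up to end_spend_bn in end_year."""
    return {
        year: end_spend_bn / (1 + GROWTH) ** (end_year - year)
        for year in range(start_year, end_year + 1)
    }


if __name__ == "__main__":
    print(f"Breakeven revenue: ${breakeven_revenue(CHIP_SPEND_2027_BN):.0f}bn/year")
    print(f"Margin implied by $800bn/year: {implied_margin(800, CHIP_SPEND_2027_BN):.0%}")
    # Simplification: ignores GPU lifetime and amortization of chip costs.
    for year, spend in chip_spend_path(2027, CHIP_SPEND_2027_BN, 2024).items():
        print(f"{year}: chips ${spend:.0f}bn -> breakeven revenue ${breakeven_revenue(spend):.0f}bn")
```

Run as written, it gives a breakeven revenue of about $504bn/year for $200bn of chip spend, and an implied margin of about 37% at $800bn/year. Applying the same multiplier to each year's chip spend is a deliberate simplification; a fuller model would spread chip costs over the GPU's lifetime.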

As models are pushed into every computer-mediated online interaction, training costs will likely be dwarfed by inference costs. Nvidia's market cap may therefore be a misleading guide to the potential scale of investment in inference infrastructure, as Nvidia is not as well positioned for inference as it currently is for training. Furthermore, cloud-based AI inference requires low-latency network access to data centres (DCs). That capacity will likely be severely constrained by the electrical power density physically available for scaling AI inference: cheap electricity for AI compute tends to be near nuclear and hydro power, typically not near major conurbations. Such sites suit AI training, but not inference.

How would you factor in exponential growth specifically in AI inference? Do you think this will occur within the DC or in edge computing?

I suspect AI inference will be pushed to migrate to smartphones due to both latency requirements and significant data privacy concerns. If this migration is inevitable, it will likely drive a huge amount of innovation in low-power ASIC neural compute.