I (Vael Gates) recently ran a small pilot study with Collin Burns in which we showed ML researchers (randomly selected NeurIPS / ICML / ICLR 2021 authors) a number of introductory AI safety materials, asking them to answer questions and rate those materials.

Summary

We selected materials that were relatively short and disproportionately aimed at ML researchers, but we also experimented with other types of readings.[1] Within the selected readings, we found that researchers (n=28) preferred materials that were aimed at an ML audience, which tended to be written by ML researchers and to be more technical and less philosophical.

In particular, for each reading we asked ML researchers (1) how much they liked that reading, (2) how much they agreed with that reading, and (3) how informative that reading was. Aggregating these three metrics, we found that researchers tended to prefer Steinhardt > [Gates, Bowman] > [Schulman, Russell], and liked Cotra and Carlsmith least, with Carlsmith last. In order of preference (from most preferred to least preferred), the materials were:

- “More is Different for AI” by Jacob Steinhardt (2022) (intro and first three posts only)

- “Researcher Perceptions of Current and Future AI” by Vael Gates (2022) (first 48m; skip the Q&A) (Transcript)

- “Why I Think More NLP Researchers Should Engage with AI Safety Concerns” by Sam Bowman (2022)

- “Frequent arguments about alignment” by John Schulman (2021)

- “Of Myths and Moonshine” by Stuart Russell (2014)

- "Current work in AI Alignment" by Paul Christiano (2019) (Transcript)

- “Why alignment could be hard with modern deep learning” by Ajeya Cotra (2021) (feel free to skip the section “How deep learning works at a high level”)

- “Existential Risk from Power-Seeking AI” by Joe Carlsmith (2021) (only the first 37m; skip the Q&A) (Transcript)

(Not rated)

- "AI timelines/risk projections as of Sept 2022" (first 3 pages only)

Commentary

Christiano (2019), Cotra (2021), and Carlsmith (2021) are well-liked by EAs anecdotally, and we personally think they’re great materials. Our results suggest that materials EAs like may not work well for ML researchers, and that additional materials written by ML researchers for ML researchers could be particularly useful. By our lights, it’d be quite useful to have more short technical primers on AI alignment, more collections of problems that ML researchers can begin to address immediately (and are framed for the mainstream ML audience), more technical published papers to forward to researchers, and so on.

More Detailed Results

Ratings

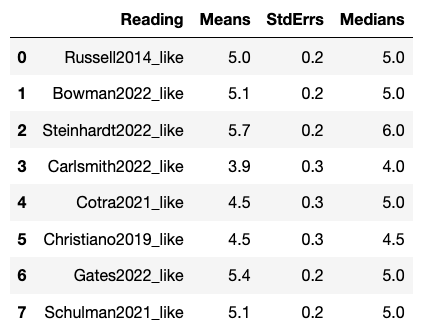

For the question “Overall, how much did you like this content?”, Likert 1-7 ratings (I hated it (1) - Neutral (4) - I loved it (7)) roughly followed:

- Steinhardt > Gates > [Schulman, Russell, Bowman] > [Christiano, Cotra] > Carlsmith

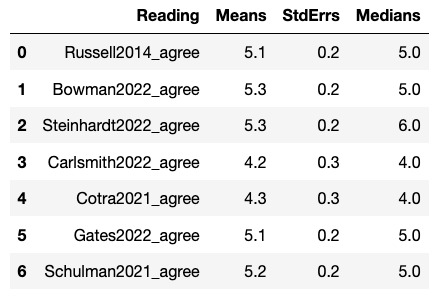

For the question “Overall, how much do you agree or disagree with this content?”, Likert 1-7 ratings (Strongly disagree (1) - Neither disagree nor agree (4) - Strongly agree (7)) roughly followed:

- Steinhardt > [Bowman, Schulman, Gates, Russell] > [Cotra, Carlsmith]

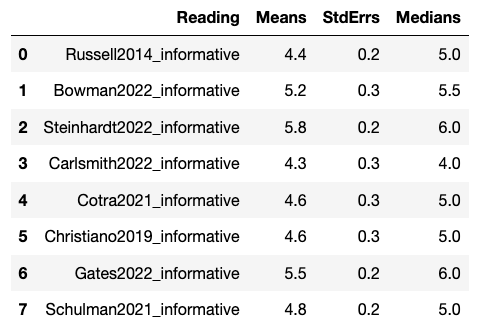

For the question “How informative was this content?”, Likert 1-7 ratings (Extremely noninformative (1) - Neutral (4) - Extremely informative (7)) roughly followed:

- Steinhardt > Gates > Bowman > [Cotra, Christiano, Schulman, Russell] > Carlsmith

Combining the three questions above yields the aggregate ordering of preferred readings given in the Summary (Steinhardt > [Gates, Bowman] > [Schulman, Russell]).
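As an illustration only, here is a minimal sketch of one way three Likert questions could be combined into a single ordering: take the mean rating per question, then average those means with equal weight. This is an assumption, not necessarily the procedure used in the study, and the reading names, ratings, and function names below are all hypothetical.

```python
from statistics import mean

# Hypothetical Likert 1-7 ratings for illustration; NOT the study's data.
ratings = {
    "Reading A": {"liked": [6, 5, 7], "agreed": [6, 6, 5], "informative": [5, 6, 6]},
    "Reading B": {"liked": [4, 5, 3], "agreed": [5, 4, 4], "informative": [4, 4, 5]},
    "Reading C": {"liked": [3, 2, 4], "agreed": [3, 4, 3], "informative": [3, 3, 2]},
}


def aggregate_score(per_question):
    """Average the per-question mean ratings, weighting each question equally."""
    return mean(mean(scores) for scores in per_question.values())


def rank_readings(all_ratings):
    """Return reading names sorted from highest to lowest aggregate score."""
    return sorted(
        all_ratings,
        key=lambda name: aggregate_score(all_ratings[name]),
        reverse=True,
    )


ranking = rank_readings(ratings)
print(" > ".join(ranking))
```

Equal weighting is the simplest choice; with only 28 respondents, small gaps between aggregate scores should be read as rough groupings rather than a strict order.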

Common Criticisms

In the qualitative responses about the readings, there were some recurring criticisms, including: a desire to hear from AI researchers, a dislike of philosophical approaches, a dislike of a focus on existential risks or an emphasis on fears, a desire to be “realistic” and not “speculative”, and a desire for empirical evidence.

Appendix - Raw Data

You can find the complete (anonymized) data here. This includes both more comprehensive quantitative results and qualitative written answers by respondents.

[1] We expected these types of readings to be more compelling to ML researchers, as also alluded to in e.g. Hobbhahn. See also Gates, Trötzmüller for other similar AI safety outreach, with similar themes to the results in this study.

Do you plan on doing any research into the cruxes of disagreement with ML researchers?

I realise that there is some information on this within the qualitative data you collected (which I will admit to not reading all 60 pages of), but it surprises me that this isn't more of a focus. From my incredibly quick scan (so apologies for any inaccurate conclusions) of the qualitative data, it seems like many of the ML researchers were familiar with basic thinking about safety but seemed to not buy it for reasons that didn't look fully drawn out.

It seems to me that there is a risky presupposition that the arguments made in the papers you used are correct, and that what matters now is framing. To me, given the proportion of resources EA stakes on AI safety, it would be worth trying to understand why people (particularly knowledgeable ML researchers) have a different set of priorities to many in EA. It seems suspicious how little intellectual credit is given to ML/AI people who aren't EA.

I am curious to hear your thoughts. I really appreciate the research done here and am very much in favour of more rigorous community/field building being done as you have here.

I'm not going to comment too much here, but if you haven't seen my talk (“Researcher Perceptions of Current and Future AI” (first 48m; skip the Q&A) (Transcript)), I'd recommend it! Specifically, you want the 23m-48m segment of that talk, where I discuss the results of interviewing ~100 researchers about AI safety arguments. We're going to publish much more on this interview data within the next month or so, but the major results are there, and they describe some AI researchers' cruxes.