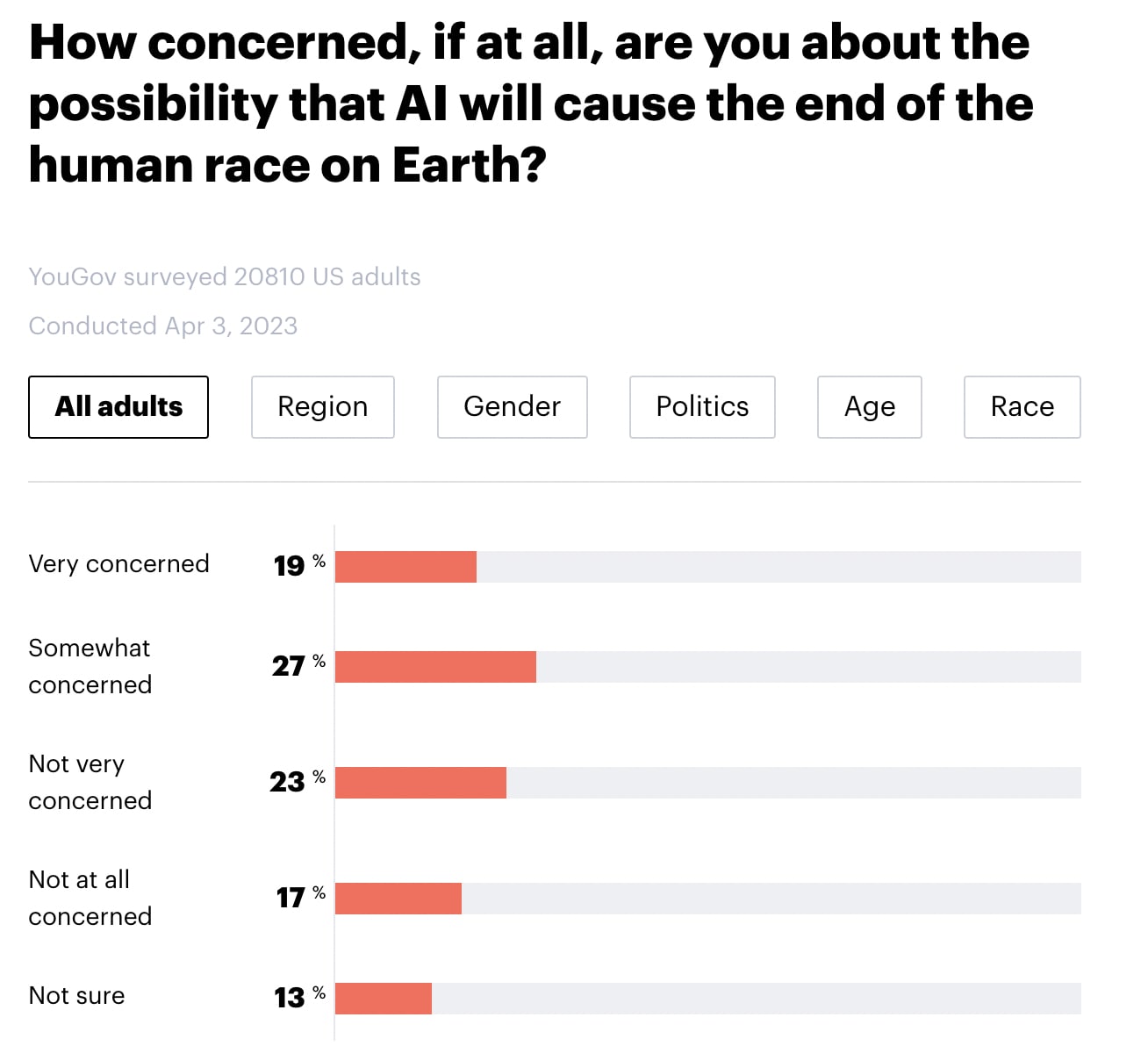

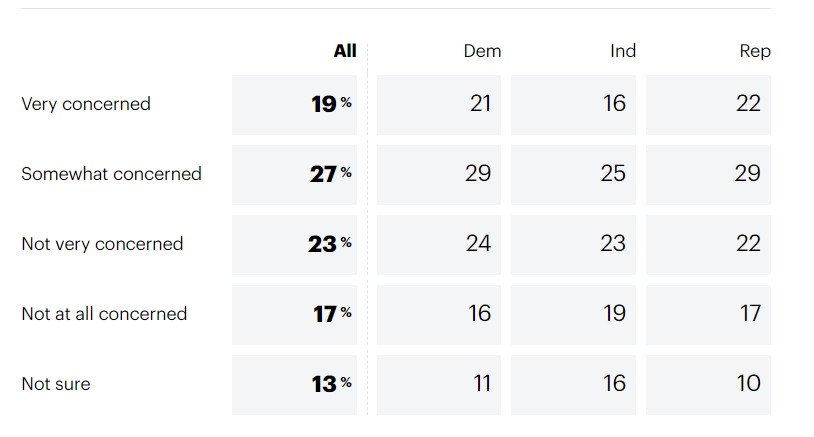

YouGov America released a survey of 20,810 American adults. Highlights below. Note that I didn't run any statistical tests, so any claims of group differences are just "eyeballed."

- 46% say that they are "very concerned" or "somewhat concerned" about the possibility that AI will cause the end of the human race on Earth (with 23% "not very concerned," 17% "not concerned at all," and 13% "not sure").

- There do not seem to be meaningful differences by region, gender, or political party.

- Younger people seem more concerned than older people.

- Black individuals appear to be somewhat more concerned than people who identified as White, Hispanic, or Other.
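Since I only eyeballed the numbers above, here is a minimal sketch of the kind of check one could run. It assumes simple random sampling, which is a simplification: YouGov weights its samples, so the real uncertainty is likely somewhat larger. The 46% and 20,810 figures come from the survey; the subgroup shares and sizes in the second call are hypothetical placeholders, not values from the crosstabs.

```python
import math


def margin_of_error(p, n, z=1.96):
    """Approximate 95% margin of error for a proportion p from n respondents.

    Assumes simple random sampling (no weighting or design effect).
    """
    return z * math.sqrt(p * (1 - p) / n)


def two_proportion_z(p1, n1, p2, n2):
    """z statistic for the difference between two independent proportions,
    using the pooled standard error."""
    pooled = (p1 * n1 + p2 * n2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    return (p1 - p2) / se


# Figures from the survey: 46% concerned, 20,810 respondents.
moe = margin_of_error(0.46, 20810)
print(f"46% +/- {100 * moe:.2f} percentage points")

# HYPOTHETICAL subgroup shares and sizes, for illustration only.
z = two_proportion_z(0.50, 2000, 0.45, 2000)
print(f"z = {z:.2f} (|z| > 1.96 suggests a difference at the 5% level)")
```

With a sample this large, the topline margin of error under these assumptions is under one percentage point, so the uncertainty that matters is mostly in the much smaller subgroups.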

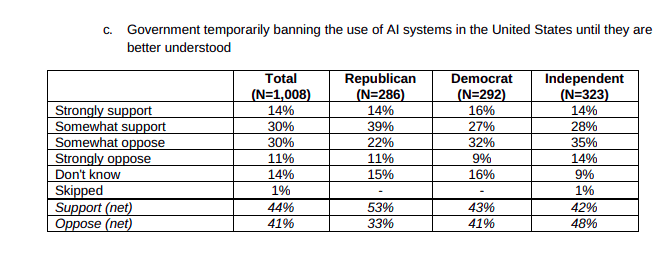

Furthermore, 69% of Americans appear to support a six-month pause in "some kinds of AI development". There doesn't seem to be a clear effect of age or race for this question, particularly if you lump "strongly support" and "somewhat support" into the same bucket. Note also that the question mentions that 1,000 tech leaders signed an open letter calling for a pause and cites their concern over "profound risks to society and humanity", which may have influenced participants' responses.

In my quick skim, I haven't been able to find details about the survey's methodology (see here for info about YouGov's general methodology) or the credibility of YouGov (EDIT: Several people I trust have told me that YouGov is credible, well-respected, and widely quoted for US polls).

See also:

The "human range" at various tasks is much larger than one would naively think because most people don't obsess over becoming good at any metric, let alone the types of metrics on which GPT-4 seems impressive. Most people don't obsessively practice anything. There's a huge difference between "the entire human range at some skill" and "the range of top human experts at some skill." (By "top experts," I mean people who've practiced the skill for at least a decade and consider it their life's primary calling.)

GPT-4 hasn't entered the range of "top human experts" for domains that are significantly more complex than stuff for which it's easy to write an evaluative function. If we made a list of the world's best 10,000 CEOs, GPT-4 wouldn't make the list. Once it ranks at 9,999, I'm pretty sure we're <1 year away from superintelligence. (The same argument goes for top-10,000 LessWrong-type generalists or top-10,000 machine learning researchers who occasionally make new discoveries. Also top-10,000 novelists, but maybe not on a gamifiable metric like "popular appeal.")