I'm often asked about how the existential risk landscape has changed in the years since I wrote The Precipice. Earlier this year, I gave a talk on exactly that, and I want to share it here.

Here's a video of the talk and a full transcript.

In the years since I wrote The Precipice, the question I’m asked most is how the risks have changed. It’s now almost four years since the book came out, but the text has to be locked down a long time earlier, so we are really coming up on about five years of changes to the risk landscape.

I’m going to dive into four of the biggest risks — climate change, nuclear, pandemics, and AI — to show how they’ve changed. Now a lot has happened over those years, and I don’t want this to just be recapping the news in fast-forward. But luckily, for each of these risks I think there are some key insights and takeaways that one can distill from all that has happened. So I’m going to take you through them and tease out these key updates and why they matter.

I’m going to focus on changes to the landscape of existential risk — which includes human extinction and other ways that humanity’s entire potential could be permanently lost. For most of these areas, there are many other serious risks and ongoing harms that have also changed, but I won’t be able to get into those. The point of this talk is to really narrow in on the changes to existential risk.

Climate Change

Let’s start with climate change.

We can estimate the potential damages from climate change in three steps:

- how much carbon will we emit?

- how much warming does that carbon produce?

- how much damage does that warming do?

And there are key updates on the first two of these, which have mostly flown under the radar for the general public.

Carbon Emissions

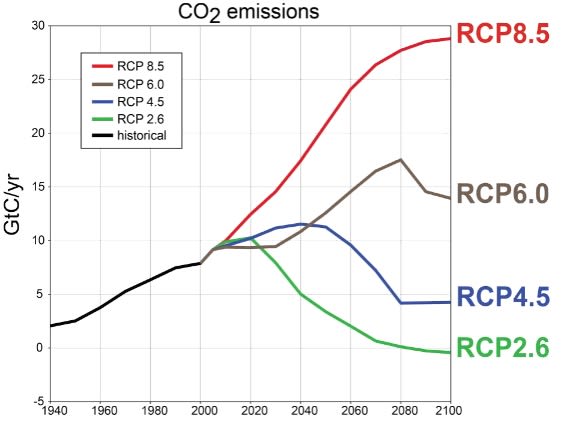

The question of how much carbon we will emit is often put in terms of Representative Concentration Pathways (RCPs).

Initially there were 4 of these, with higher numbers meaning more greenhouse effect in the year 2100. They are all somewhat arbitrarily chosen — meant to represent broad possibilities for how our emissions might unfold over the century. Our lack of knowledge about which path we would take was a huge source of uncertainty about how bad climate change would be. Many of the more dire climate predictions are based on the worst of these paths, RCP 8.5. It is now clear that we are not at all on RCP 8.5, and that our own path is headed somewhere between the lower two paths.

This isn’t great news. Many people were hoping we could control our emissions faster than this. But for the purposes of existential risk from climate change, much of the risk comes from the worst case possibilities, so even just moving towards the middle of the range means lower existential risk — and the lower part of the middle is even better.

Climate Sensitivity

Now what about the second question of how much warming that carbon will produce? The key measure here is something called the equilibrium climate sensitivity. This is roughly defined as how many degrees of warming there would be if the concentrations of carbon in the atmosphere were to double from pre-industrial levels. If there were no feedbacks, this would be easy to estimate: doubling carbon dioxide while keeping everything else fixed produces about 1.2°C of warming. But the climate sensitivity also accounts for many climate feedbacks, including water vapour and cloud formation. These make it higher and also much harder to estimate.
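As a rough illustration of how this measure is used, here is a minimal sketch. It rests on a common simplification that is not stated in the talk: equilibrium warming scales with the base-2 logarithm of the CO2 concentration ratio, so each doubling adds one sensitivity's worth of warming. The function name, the 280 ppm baseline, and the 3.0°C value are illustrative assumptions.

```python
import math

PREINDUSTRIAL_PPM = 280.0  # assumed pre-industrial CO2 concentration (illustrative)

def equilibrium_warming(co2_ppm, sensitivity_c, baseline_ppm=PREINDUSTRIAL_PPM):
    """Approximate equilibrium warming in °C, assuming a logarithmic response."""
    return sensitivity_c * math.log2(co2_ppm / baseline_ppm)

# One doubling with no feedbacks (the 1.2°C per doubling quoted above)
print(equilibrium_warming(560.0, 1.2))

# The same doubling with a hypothetical feedback-inclusive sensitivity of 3.0°C
print(equilibrium_warming(560.0, 3.0))
```

Under this assumption, uncertainty in the sensitivity translates directly into proportional uncertainty in the eventual warming for any given concentration.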

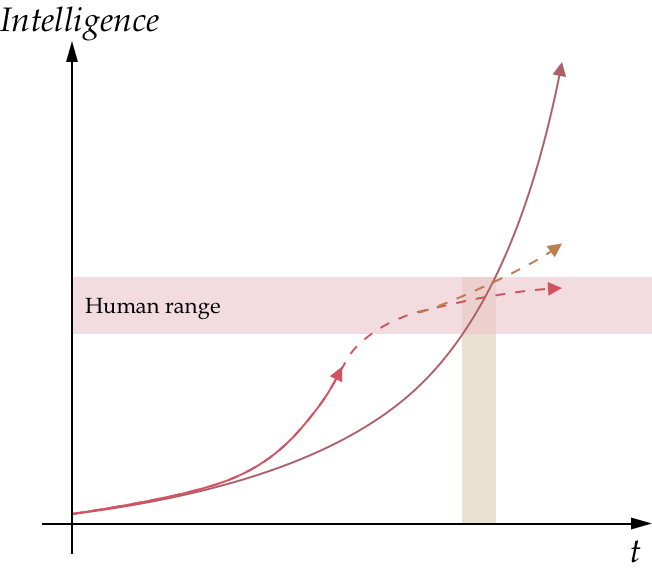

When I wrote The Precipice, the IPCC stated that climate sensitivity was likely to be somewhere between 1.5°C and 4.5°C.

When it comes to estimating the impacts of warming, this is a vast range, with the top giving three times as much warming as the bottom. Moreover, the true sensitivity could easily be even higher, as the IPCC was only saying that there was a two-thirds chance it falls within this range. So there was about a 1 in 6 chance that the climate sensitivity is even higher than 4.5°. And that is where a lot of the existential risk was coming from — scenarios where the climate is more responsive to emissions than we expect.
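The "1 in 6" figure follows from simple arithmetic. The sketch below assumes, purely for illustration, that the probability lying outside the likely range is split evenly between the low tail and the high tail; the function name is hypothetical.

```python
from fractions import Fraction

def upper_tail_probability(p_within_range):
    """Chance of exceeding the top of the range, assuming symmetric tails."""
    p_outside = 1 - p_within_range
    return p_outside / 2

p_high = upper_tail_probability(Fraction(2, 3))
print(p_high)  # 1/6
```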

To understand the existential risk from climate change, we’d really want to find out where in this vast range the climate sensitivity really lies. But there has been remarkably little progress on this. The range of 1.5° to 4.5° was first proposed the year I was born and had not appreciably changed in the following 40 years of research.

Well, now it has.

In the latest assessment report, the IPCC has narrowed the likely range to span from 2.5° to 4°, reflecting an improved understanding of the climate feedbacks. This is a mixed blessing, as it is saying that very good and very bad outcomes are both less likely to happen. But again, reducing the possibility of extreme warming will lower existential risk, which is our focus here. They also narrowed their 90% confidence range, and now say it is 95% likely that the climate sensitivity is less than 5°.

In both cases here, the updates are about narrowing the range of plausible possibilities, which has the effect of reducing the chance of extreme outcomes, which in turn reduces the existential risk. But there is an interesting difference between them. When considering our emissions, humanity’s actions in responding to the threat of climate change have lowered our emissions from what they would have been with business as usual. Our actions lowered the risk. In contrast, in the case of climate sensitivity, the change in risk is not causal, but evidential — we’ve discovered evidence that the temperature won’t be as sensitive to our emissions as we’d feared. We will see this for other risks too. Sometimes we have made the risks lower (or higher), sometimes we’ve discovered that they always were.

Nuclear

Heightened Chance of Onset

At the time of writing, the idea of nuclear war had seemed like a forgotten menace — something that I had to remind people still existed. Here’s what I said in The Precipice about the possibility of great-power war, when I was trying to make the case that it could still happen:

Consider the prospect of great-power war this century. That is, war between any of the world’s most powerful countries or blocs. War on such a scale defined the first half of the twentieth century, and its looming threat defined much of the second half too. Even though international tension may again be growing, it seems almost unthinkable that any of the great powers will go to war with each other this decade, and unlikely for the foreseeable future. But a century is a long time, and there is certainly a risk that a great-power war will break out once more.

The Russian invasion of Ukraine has brought nuclear powers substantially closer to the brink of nuclear war. Remember all the calls for a no-fly zone, with the implicit threat that if it was violated, the US and Russia would immediately end up in a shooting war in Ukraine. Or the heightened rhetoric from both the US and Russia about devastating nuclear retaliation, and actions to increase their nuclear readiness levels. This isn’t a Cuban missile crisis level, and the tension has subsided from its peak, but the situation is still markedly worse than anyone anticipated when I was writing The Precipice.

Likely New Arms Race

When I wrote The Precipice, the major nuclear treaty between the US and Russia (New START) was due to lapse and President Donald Trump intended to let that happen. It was re-signed at the last minute by the incoming Biden administration. But it can’t be renewed again. It is due to expire exactly 2 years from now and a new replacement treaty would have to be negotiated at a time when the two countries have a terrible relationship and are actively engaged in a proxy war.

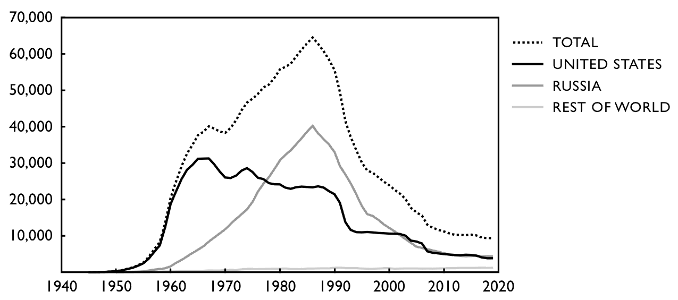

In The Precipice, I talked about the history of nuclear warhead stockpiles decreasing ever since 1986 and showed this familiar graph.

We’d been getting used to that curve of nuclear warheads going ever downwards, with the challenge being how to make it go down faster.

But now there is the looming possibility of stockpiles increasing. Indeed I think that is the most likely possibility. In some ways this could be an even more important time for the nuclear risk community, as their actions may make the difference between a dramatic rise and a mild rise, or a mild rise and stability. But it is a sad time.

In The Precipice, one reason I didn’t rate the existential risk from nuclear war higher was that with our current arsenals there isn’t a known scientific pathway for an all out war to lead to human extinction (nor a solid story about how it could permanently end civilisation). But now the arsenals are going to rise — perhaps a long way.

Funding Collapse

Meanwhile, there has been a collapse in funding for work on nuclear peace and arms reduction — work that (among other things) helped convince the US government to create the New START treaty in the first place. The MacArthur Foundation had been a major donor in this space, contributing about 45% of all funding. But it pulled out of funding this work, leading to a massive collapse in funding for the field, with nuclear experts having to leave to seek other careers, even while their help was needed more than at any time in the last three decades.

Each of these changes is a big deal.

- A Russian invasion of a European country, leading to a proxy war with the US.

- The only remaining arms control treaty due to expire, allowing a new nuclear arms race.

- While the civil society actors working to limit these risks see their funding halve.

Nuclear risk is up.

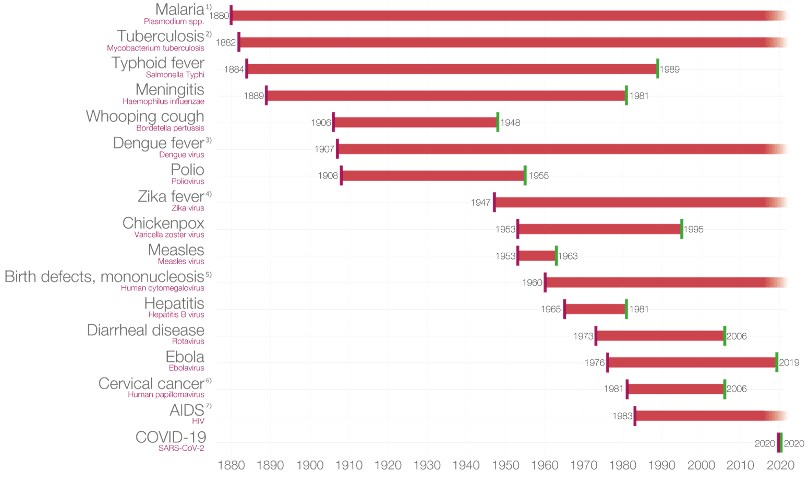

Pandemics

Covid

Since I wrote the book, we had the biggest pandemic in 100 years. It started in late 2019 when my text was already locked in and then hit the UK and US in full force just as the book was released in March.

Covid was not an existential risk. But it did have implications for such risks.

First, it exposed serious weaknesses in our ability to defend against such risks. One of the big challenges of existential risk is that it is speculative. I wrote in The Precipice that if there was a clear and present danger (like an asteroid visibly on a collision course with the Earth) things would move into action. Humanity would be able to unite behind a common enemy, pushing our national or factional squabbles into the background. Well, there was some of that, at first. But as the crisis dragged on, we saw it become increasingly polarised along existing national and political lines. So one lesson here is that the uniting around a common threat is quite a temporary phase — you get a few months of that and need to use them well before the polarisation kicks in.

Another kind of weakness it exposed was in our institutions for managing these risks. I entered the pandemic holding the WHO and CDC in very high regard, which I really think they did not live up to. One hypothesis I’ve heard for this is that they did have the skills to manage such crises a generation ago, but decades without needing to prove these skills led them to atrophy.

A second implication of Covid is that the pandemic acted as a warning shot. The idea that humanity might experience large sweeping catastrophes that change our way of life was almost unthinkable in 2019, but is now much more believable. This is true both on a personal level and a civilisational level. We can now imagine going through a new event where our day to day life is completely upended for years on end. And we can imagine events that sweep the globe and threaten civilisation itself. Our air of invincibility has been punctured.

That said, it produced less of an immune response than expected. The conventional wisdom is that a crisis like this leads to a panic-neglect cycle, where we oversupply caution for a while, but can’t keep it up. This was the expectation of many people in biosecurity, with the main strategy being about making sure the response wasn’t too narrowly focused on a re-run of Covid, instead covering a wide range of possible pandemics, and that the funding was ring-fenced so that it couldn’t be funnelled away to other issues when the memory of this tragedy began to fade.

But we didn’t even see a panic stage: spending on biodefense for future pandemics was disappointingly weak in the UK and even worse in the US.

A third implication was the amazing improvement in vaccine development times, including the successful first deployment of mRNA vaccines.

When I wrote The Precipice, the typical time for vaccine development was decades and the fastest ever time was 10 years. The expert consensus was that it would take at least a couple of years for Covid, but instead we had several completely different vaccines ready within just a single year. It really wasn’t clear beforehand that this was possible. Vaccines are still extremely important in global biodefense, providing an eventual way out for pandemics like Covid whose high R0 means they can’t be suppressed by behaviour change alone. Other interventions buy us the time to get to the vaccines, and if we can bring that time forward, it also makes the task of surviving that long easier.

A fourth and final implication concerns gain of function research. This kind of biological research where scientists make a more deadly or more transmissible version of an existing pathogen is one of the sources of extreme pandemics that I was most concerned about in The Precipice. While the scientists behind gain of function research are well-meaning, the track record of pathogens escaping their labs is much worse than people realise.

With Covid, we had the bizarre situation where the biggest global disaster since World War II was very plausibly caused by a lab escape from a well-meaning but risky line of research on bat coronaviruses. Credible investigations of Covid origins are about evenly split on the matter. It is entirely possible that the actions of people at one particular lab may have killed more than 25 million people across the globe. Yet the response is often one of indifference — that it doesn’t really matter as it is in the past and we can’t change it. I’m still genuinely surprised by this. I’d like to think that if it were someone at a US lab who may or may not be responsible for the deaths of millions of people that it would be the trial of the century. Clearly the story got caught up in a political culture war as well as the mistrust between two great powers, though I’d actually have thought that this would make it an even more visible and charged issue. But people don’t seem to mind and gain of function research is carrying on, more or less as it did before.

Protective technologies

Moving on from Covid, there have been recent developments in two different protective technologies I’m really excited about.

The first is metagenomic sequencing. This is technology that can take a sample from a patient or the environment and sequence all DNA and RNA in it, matching them against databases of known organisms. An example of a practical deployment would be to use it for diagnostic conundrums — if a doctor can’t work out why a patient is so sick, they can take a sample and send it to a central lab for metagenomic sequencing. If China had had this technology, they would have known in 2019 that there was a novel pathogen whose closest match was SARS, but which had substantial changes. Given China’s history with SARS they would likely have acted quickly to stop it. There are also potential use-cases where a sample is taken from the environment, such as waste water or an airport air conditioner. Novel pandemics could potentially be detected even before symptoms manifest by the signature of exponential growth over time of a novel sequence.
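To make the "signature of exponential growth" idea concrete, here is a minimal sketch, not a description of any deployed system. It assumes we already have a time series of read counts for one unrecognised sequence, fits a straight line to the logarithm of those counts, and flags the sequence if the fit is steep and tight. The function names, thresholds, and sample counts are all hypothetical.

```python
import math

def log_linear_fit(counts):
    """Least-squares fit of log(count) against the sample index.

    Returns (growth_rate_per_step, r_squared).
    """
    ys = [math.log(c) for c in counts]
    xs = list(range(len(counts)))
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    syy = sum((y - mean_y) ** 2 for y in ys)
    slope = sxy / sxx
    r_squared = (sxy * sxy) / (sxx * syy) if syy > 0 else 0.0
    return slope, r_squared

def looks_exponential(counts, min_rate=0.2, min_r_squared=0.9):
    """Flag a sequence whose read counts grow steadily on a log scale."""
    if len(counts) < 3 or min(counts) <= 0:
        return False
    slope, r_squared = log_linear_fit(counts)
    return slope >= min_rate and r_squared >= min_r_squared

# Hypothetical weekly read counts for two sequences in wastewater samples
print(looks_exponential([3, 6, 13, 24, 50, 99]))    # roughly doubling each week
print(looks_exponential([40, 35, 42, 38, 41, 39]))  # flat background noise
```

A real pipeline would also need to handle zero counts, varying sequencing depth, and many sequences tested at once, all of which this sketch ignores.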

The second is improving air quality. A big lesson from the pandemic is that epidemiology was underestimating the potential for airborne transmission. This opens the possibility for a revolution in public health. Just as there were tremendous gains in the 19th century when water-based transmission was understood and the importance of clean water was discovered, so there might be tremendous gains from clean air.

A big surprise in the pandemic was how little transmission occurred on planes, even when there was an infected person sitting near you. A lot of this was due to the impressive air conditioning and filtration modern planes have. We could upgrade our buildings to include such systems. And there have been substantial advances in germicidal UV light, including the new UVC band of light that may have a very good tradeoff in its ability to kill germs without damaging human skin or eyes.

There are a couple of different pathways to protection using these technologies. We could upgrade our shared buildings such as offices and schools to use these all the time. This would limit transmission of viruses even in the first days before we’ve become aware of the outbreak, and have substantial immediate benefits in preventing spread of colds and flu. And during a pandemic, we could scale up the deployment to many more locations.

Some key features of these technologies are that:

- They can deal with a wide range of pathogens including novel ones (whether natural or engineered). They even help with stealth pathogens before they produce symptoms.

- They require little prosocial behaviour-change from the public.

- They have big spillover benefits for helping fight everyday infectious diseases like the flu.

- And research on them isn’t dual use (they systematically help defence rather than offence).

AI in Biotech

Finally, there is the effect of AI on pandemic risk. Biology is probably the science that has benefitted most from AI so far. For example, when I wrote The Precipice, biologists had determined the structure of about 50 thousand different proteins. Then DeepMind’s AlphaFold applied AI to solve the protein-folding problem and has used it to find and publish the structures of 200 million proteins. This example doesn’t have any direct effect on existential risk, but a lot of biology does come with risks, and rapidly accelerating biological capabilities may open up new risks at a faster rate, giving us less time to anticipate and respond to them.

A very recent avenue where AI might contribute to pandemic risk is via language models (like ChatGPT) making it easier for bad actors to learn how to engineer and deploy novel pathogens to create catastrophic pandemics. This isn’t a qualitative change in the risk of engineered pandemics, as there is already a strong process of democratisation of biotechnology, with the latest breakthroughs and techniques being rapidly replicable by people with fewer skills and more basic labs. Ultimately, very few people want to deploy catastrophic bioweapons, but as the pool of people who could expands, the chance it contains someone with such motivations grows. AI language models are pushing this democratisation further faster, and unless these abilities are removed from future AI systems, they will indeed increase the risk from bioterrorism.
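The point about the expanding pool can be sketched with basic probability. The model below assumes, as a simplification that is not from the talk, that each person in the pool independently has the same tiny chance of holding such motivations. The function name and all numbers are purely hypothetical and chosen only to show the shape of the effect.

```python
def chance_pool_contains_bad_actor(pool_size, p_malicious):
    """Probability that at least one person in the pool is malicious,
    assuming each person is independently malicious with probability p_malicious."""
    return 1.0 - (1.0 - p_malicious) ** pool_size

# Hypothetical per-person probability and pool sizes
p = 1e-6
for pool in (1_000, 100_000, 10_000_000):
    print(pool, round(chance_pool_contains_bad_actor(pool, p), 4))
```

While the per-person probability is small, the chance grows roughly in proportion to the pool size, which is why widening access matters even if motivations stay the same.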

AI

Which brings us, at last, to AI risk.

So much has happened in AI over the last five years. It is hard to even begin to summarise it. But I want to zoom in on three things that I think are especially important.

RL agents ⇒ language models

First, there is the shift from reinforcement learning agents to language models.

Five years ago, the leading work on artificial general intelligence was game-playing agents trained with deep reinforcement learning (‘deep RL’): systems like AlphaGo, AlphaZero, AlphaStar, and OpenAI Five. These are systems that are trained to take actions in a virtual world which outmanoeuvre an adversary, pushing inexorably towards victory no matter how the opponent tries to stop them. And they all created AI agents which outplayed the best humans.

Now, the cutting edge is generative AI: systems that generate images, videos, and (especially) text.

These new systems are not (inherently) agents. So the classical threat scenario of Yudkowsky & Bostrom (the one I focused on in The Precipice) doesn’t directly apply. That’s a big deal.

It does look like people will be able to make powerful agents out of language models. But they don’t have to be in agent form, so it may be possible for the first labs to make aligned non-agent AGI to help with safety measures for AI agents, or for national or international governance to outlaw advanced AI agents while still benefiting from advanced non-agent systems.

And as Stuart Russell predicted, these new AI systems have read vast amounts of human writing. Something like 1 trillion words of text. All of Wikipedia; novels and textbooks; vast parts of the internet. They have probably read more academic ethics than I have, and almost all fiction is nothing but humans doing things to other humans combined with judgments of those actions.

These systems thus have a vast amount of training signal about human values. This is in big contrast to the game-playing systems, which knew nothing of human values and where it was very unclear how to ever teach them about such values. So the challenge is no longer getting them enough information about ethics, but about making them care.

Because this was supervised learning with a vast amount of training data (not too far away from all text ever written), this allowed a very rapid improvement of capabilities. But this is not quite the general-purpose acceleration that people like Yudkowsky had been predicting.

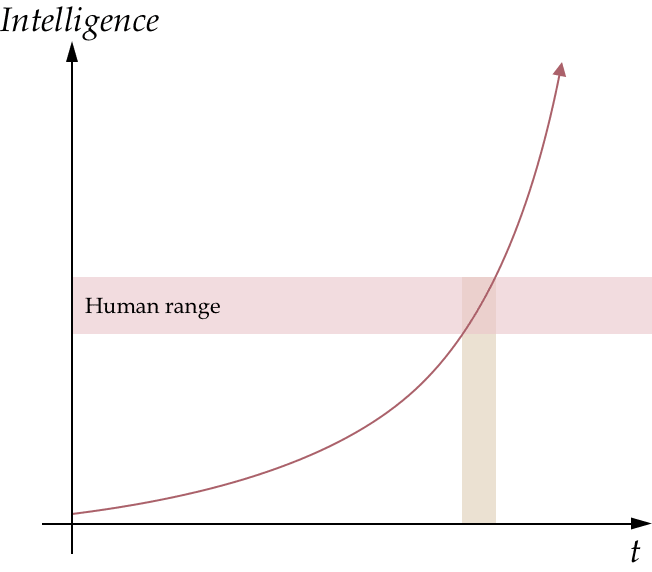

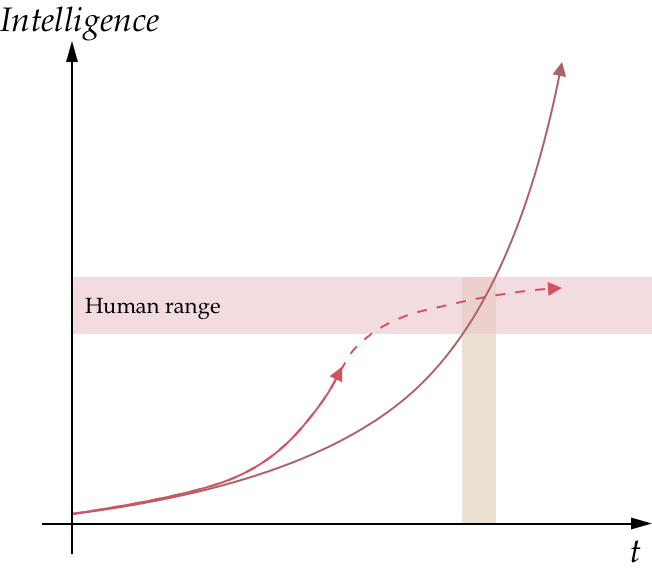

He predicted that accelerating AI capabilities would blast through the relatively narrow range of human abilities because there is nothing special about those levels. And because it was rising so fast, it would pass through in a very short time period:

Language models have been growing more capable even faster. But with them there is something very special about the human range of abilities, because that is the level of all the text they are trained on. Their capabilities are being pulled rapidly upwards towards the human level as they train on all our writings, but they may also plateau at that point:

In some sense they could still exceed human capabilities (for example, they could know more topics simultaneously than any human and be faster at writing), but without some new approach, they might not exceed the best humans in each area.

Even if people then move to new kinds of training to keep advancing beyond the human level, this might return to the slower speeds of improvement — putting a kink in the learning curves:

And finally because language models rely on truly vast amounts of compute in order to scale, running an AGI lab is no longer possible as a nonprofit, or as a side project for a rich company. The once quite academic and cautious AGI labs have become dependent on vast amounts of compute from their corporate partners, and these corporate partners want rapid productisation and more control over the agenda in return.

Racing

Another big change in AI risk comes from the increased racing towards AI.

We had long been aware of the possibilities and dangers of racing: that it would lead to cutting corners in safety in an attempt to get to advanced AGI before anyone else.

The first few years after writing The Precipice saw substantial racing between OpenAI and DeepMind, with Anthropic also joining the fray. Then in 2023, something new happened. It became not just a race between these labs, but a race between the largest companies on Earth. Microsoft tried to capitalise on its prescient investments in OpenAI, seizing what might be the moment of its largest lead in AI to try for Google’s crown jewel — Search. They put out a powerful, but badly misaligned system that gave the world its first example of an AI system that turned on its own users.

Some of the most extreme examples were threatening revenge on a journalist for writing a negative story about it, and even threatening to kill an AI ethics researcher. It was a real 2001: A Space Odyssey moment, with the AI able to autonomously find out if you had said negative things about it in other conversations and threaten to punish you for it.

The system still wasn’t really an agent and wasn’t really trying to get vengeance on people. It is more that it was simulating a persona who acts like that. But it was still systematically doing behaviours that if done by humans would be threats of vengeance.

Microsoft managed to tamp down these behaviours, and ended up with a system that did pose a real challenge for Google on search. Google responded by merging its Brain and DeepMind labs into a single large lab and making it much more focused on building ever larger language models and immediately deploying them in products.

I’m really worried that this new race between big tech companies (who don’t get the risks from advanced AI in the same way that the labs themselves do) will push the labs into riskier deployments, push back against helpful regulation, and make it much harder for the labs to pull the emergency brake if they think their systems are becoming too dangerous.

Governance

Finally, the last 16 months have seen dramatic changes in public and government interest.

By the time I wrote The Precipice, there was certainly public interest in the rise of AI, especially the AlphaGo victory over Lee Sedol. And the US government was making noises about a possible race with China on AI, but without much action to show for it.

This really started to change in October 2022, when the US government introduced a raft of export controls on computer chip technologies to keep the most advanced AI chips out of China and delay China developing an AI chip industry of its own. This was a major sign of serious intent to control international access to the compute needed for advanced AI development.

Then a month later saw the release of ChatGPT, followed by Bing and GPT-4 in early 2023. Public access to such advanced systems led to a rapid rise in public awareness of how much AI had progressed, and public concern about its risks.

In May, Geoffrey Hinton and Yoshua Bengio, two of the founders of deep learning, both came out expressing deep concern about what they had created and advocated for governments to respond to the existential risks from AI. Later that month, they joined a host of other eminent people in AI in signing a succinct public statement that addressing the risk of human extinction from AI should be a global priority.

This was swiftly followed by a similar statement by The Elders, a group of former world leaders including a former UN secretary general, a former WHO director-general, and former presidents and prime ministers of Ireland, Norway, Mongolia, Mexico, Chile, and more, with multiple Nobel Peace Prizes between them. They agreed that:

without proper global regulation, the extraordinary rate of technological progress with AI poses an existential threat to humanity.

Existential risk from AI had finally gone from a speculative academic concern to a major new topic in global governance.

Then in October and November there was a flurry of activity by the US and UK governments. Biden signed an executive order aimed at the safety of the frontier AI systems. The UK convened the first of an ongoing series of international meetings on international AI governance, with an explicit focus on the existential risk from AI. 28 countries and the EU signed the Bletchley Declaration. Then the US and UK each launched their own national AI safety institutes.

All told, this was an amazing shift in the Overton window in just 16 months. While there is by no means universal agreement, the idea that existential risk from AI is a major global priority is now a respected, standard position among many leaders in AI and global politics, as is the need for national and international regulation of frontier AI.

The effects on existential risk of all this are complex:

- The shift to language models has had quite mixed effects

- The racing is bad

- The improved governance is good

The overall picture is mixed.

Conclusions

In The Precipice, I gave my best guess probabilities for the existential risk over the next 100 years in each of these areas. These were 1 in 1,000 for Climate and for Nuclear, 1 in 30 for Pandemics and 1 in 10 for Unaligned AI. You are probably all wondering how all of this affects those numbers and what my new numbers are. But as you may have noticed, these are all rounded off (usually to the nearest factor of 10) and none of them have moved that far.

But I can say that in my view, Climate risk is down, Nuclear is up, and the story on Pandemics and AI is mixed — lots of changes, but no clear direction for the overall risk.

I want to close by saying a few words about how the world has been reacting to existential risk as a whole.

In The Precipice, I presented the idea of existential security. It is a hypothetical future state of the world where existential risk is low and kept low; where we have put out the current fires, and put in place the mechanisms to ensure fire no longer poses a substantive threat.

We are mainly in the putting-out-fires stage at the moment — dealing with the challenges that have been thrust upon us — and this is currently the most urgent challenge. But existential security also includes the work to establish the norms and institutions to make sure things never get as out of control as they are this century: establishing the moral seriousness of existential risk; establishing the international norms, then international treaties, and governance for tackling existential risk.

This isn’t going to be quick, but over a timescale of decades there could be substantial progress. And making sure this process succeeds over the coming decades is crucial if the gains we make from fighting the current fires are to last.

I think things are moving in the right direction. The UN has started to explicitly focus on existential risks as a major global priority. The Elders have structured their agenda around fighting existential risks. One British Prime Minister quoted The Precipice in a speech to the UN and another had a whole major summit around existential risk from AI. The world is beginning to take security from existential risks seriously, and that is a very positive development.

Thanks for this!

I think one important caveat to the climate picture is that part of the third of the three factors -- climate impacts at given levels of warming -- has also changed and that this change runs in the other direction.

Below is the graphic on this from the recent IPCC Synthesis Report (p. 18), which shows on the right hand side how the expected occurrence of reasons for concern has moved downwards between AR5 (2014) and AR6 (2022). In other words, there is an evidential change that moves higher impacts towards lower temperatures and could potentially compensate for the effect of lower expected temperature (eyeballing this, for some of the global reasons for concern it moves down by a degree).

I think one should treat the IPCC graphic with some skepticism -- it is a bit suspicious that all the impact estimates move to lower temperatures at the same time as higher temperatures become less likely and IPCC reports are famously politicized documents -- but it is still a data point worth taking into account.

I haven't done the math on this, but I would expect that the effect of a lowered distribution of warming will still dominate, but it is something that moderates the picture and I think is worth including when giving complete accounts to avoid coming across as cherry-picking only the positive updates.

I hadn't seen that and I agree that it looks like a serious negative update (though I don't know what exactly it is measuring). Thanks for drawing it to my attention. I'm also increasingly worried about the continued unprecedentedly hot stretch we are in. I'd been assuming it was just one of these cases of a randomly hot year that will regress back to the previous trend, but as it drags on the hypothesis of there being something new happening does grow in plausibility.

Overall, 'mixed' might be a better summary of Climate.

Sorry for the delay!

Here is a good summary of whether or not the recent warming should make us more worried: https://www.carbonbrief.org/factcheck-why-the-recent-acceleration-in-global-warming-is-what-scientists-expect/

It is nuanced, but I think the TLDR is that recent observations are within the expected range (the trend observed since 2009 is within the range expected by climate models, though the observations are noisy and uncertain, as are the models).

"IPCC reports are famously politicized documents"

Why do you say that? It's not my impression when it comes to physical changes and impacts. (Not so sure about the economics and mitigation side.)

Though I find the "burning embers" diagrams like the one you show hard to interpret as what "high" risk/impact means doesn't seem well-defined and it's not clear to me it's being kept consistent between reports (though most others seem to love them for some reason...).

It is true that this does not apply to the long-form summary of the science.

What I mean is that this graphic is out of the "Summary for Policymakers", which is approved by policymakers and a fairly political document.

Less formalistically, all of the infographics in the Summary for Policymakers are carefully chosen and one goal of the Summary for Policymakers is clearly to give ammunition for action (e.g. the infographic right above the cited one displays impacts in scenarios without any additional adaptation by end of century, which seems like a very implausible assumption as a default and one that makes a lot more sense when the goal is to display gravity of climate impacts rather than making a best guess of climate impacts).

Whilst policymakers have a substantial role in drafting the SPM, I've not generally heard scientists complain about political interference in writing it. Some heavy fossil fuel-producing countries have tried removing text they don't like, but didn't come close to succeeding. The SPM has to be based on the underlying report, so there's quite a bit of constraint. I don't see anything to suggest the SPM differs substantially from researchers' consensus. The initial drafts by scientists should be available online, so it could be checked what changes were made by the rounds of review.

When people say things are "politicized", it indicates to me that they have been made inaccurate. I think it's a term that should be used with great care re the IPCC, since giving people the impression that the reports are inaccurate or political gives people reason to disregard them.

I can believe the no adaptation thing does reflect the literature, because impacts studies do very often assume no adaptation, and there could well be too few studies that credibly account for adaptation to do a synthesis. The thing to do would be to check the full report to see if there is a discrepancy before presuming political influence. Maybe you think the WGII authors are politicised - that I have no particular knowledge of, but again climate impacts researchers I know don't seem concerned by it.

Apologies if my comment was triggering the sense that I am questioning published climate science. I don't. I think / hope we are mostly misunderstanding each other.

With "politicized" here I do not mean that the report says inaccurate things, but merely that the selection of what is shown and how things are being framed in the SPM is a highly political result.

And the climate scientists here are political agents as well, so comparing it with prior versions would not provide counter-evidence.

To make clear what I mean with "politicized".

1. I do not think it is a coincidence that the graphic on climate impacts only shows very subtly that it assumes no adaptation.

2. And that the graph on higher impacts at lower levels of warming does not mention that since the last update of the IPCC report we also have now expectations of much lower warming.

These kinds of things are presentational choices that are being made, omissions one would not make if the goal was to maximally clarify the situation because those choices are always made in ways justifying more action. This is what I mean with "politicized", selectively presented and framed evidence.

[EDIT: This is a good reference from very respected IPCC authors that discusses the politicized process with many examples]

Yeah I think that it's just that, to me at least, "politicized" has strong connotations of a process being captured by a particular non-broad political constituency or where the outcomes are closely related to alignment with certain political groups or similar. The term "political", as in "the IPCC SPMs are political documents", seems not to give such an impression. "Value-laden" is perhaps another possibility. The article you link to also seems to use "political" to refer to IPCC processes rather than "politicized" - it's a subtle difference but there you go. (Edit - though I do notice I said not to use "political" in my previous comment. I don't know, maybe it depends on how it's written too. It doesn't seem like an unreasonable word to use to me now.)

Re point 1 - I guess we can't know the intentions of the authors re the decision to not discuss climate adaptation there.

Re 2 - I'm not aware of the IPCC concluding that "we also have now expectations of much lower warming". So a plausible reason for it not being in the SPM is that it's not in the main report. As I understand it, there's not a consensus that we can place likelihoods on future emissions scenarios and hence on future warming, and then there's not a way to have consensus about future expectations about that. One line of thought seems to be that it's emission scenario designers' and the IPCC's job to say what is required to meet certain scenarios and what the implications of doing so are, and then the likelihood of the emissions scenarios are determined by governments' choices. Then, a plausible reason why the IPCC did not report on changes in expectations of warming is that it's largely about reporting consensus positions, and there isn't one here. The choice to report consensus positions and not to put likelihoods on emissions scenarios is political in a sense, but not in a way that a priori seems to favour arguments for action over those against. (Though the IPCC did go as far as to say we are likely to exceed 1.5C warming, but didn't comment further as far as I'm aware.)

So I don't think we could be very confident that it is politicized/political in the way you say, in that there seem to be other plausible explanations.

Furthermore, if the IPCC wanted to motivate action better, it could make clear the full range of risks and not just focus so much on "likely" ranges etc.! So if it's aiming to present evidence in a way to motivate more action, it doesn't seem that competent at it! (Though I do agree that in a lot of other places in the SYR SPM, the presentational choices do seem to be encouraging of taking greater action.)

I came across this account of working as an IPCC author and drafting the SPM by a philosopher who was involved in the 5th IPCC report, which provides some insight: link to pdf - see from p.7. @jackva

Thanks, fascinating stuff!

Thanks for the update, Toby. I used to defer to you a lot. I no longer do. After investigating the risks myself in decent depth, I consistently arrived at estimates of the risk of human extinction orders of magnitude lower than your existential risk estimates. For example, I understand you assumed in The Precipice an annual existential risk for:

In addition, I think the existential risk linked to the above is lower than their extinction risk. The worst nuclear winter of Xia et al. 2022 involves an injection of soot into the stratosphere of 150 Tg, which is just 1 % of the 15 Pg of the Cretaceous–Paleogene extinction event. Moreover, I think this would only be existential with a chance of 0.0513 % (= e^(-10^9/(132*10^6))), assuming:

Vasco, how do your estimates account for model uncertainty? I don't understand how you can put some probability on something being possible (i.e. p(extinction|nuclear war) > 0), but end up with a number like 5.93e-12 (i.e. 1 in ~170 billion). That implies an extremely, extremely high level of confidence. Putting ~any weight on models that give higher probabilities would lead to much higher estimates.

Thanks for the comment, Stephen.

I tried to account for model uncertainty assuming 10^-6 probability of human extinction given insufficient calorie production.

Note there are infinitely many orders of magnitude between 0 and any astronomically low number like 5.93e-12. At least in theory, I can be quite uncertain while having a low best guess. I understand greater uncertainty (e.g. higher ratio between the 95th and 5th percentile) holding the median constant tends to increase the mean of heavy-tailed distributions (like lognormals), but it is unclear to which extent this applies. I have also accounted for that by using heavy-tailed distributions whenever I thought appropriate (e.g. I modelled the soot injected into the stratosphere per equivalent yield as a lognormal).
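The point about heavy tails can be illustrated with a minimal sketch. For a lognormal, the mean equals the median times exp(sigma^2 / 2), so widening the spread while holding the median fixed raises the mean. The function name and the example ratios below are illustrative assumptions, not figures from the analysis discussed in this thread.

```python
import math
from statistics import NormalDist

# z-score of the 95th percentile of a standard normal (about 1.645).
Z95 = NormalDist().inv_cdf(0.95)

def lognormal_mean(median, p95_over_p5):
    """Mean of a lognormal with the given median and 95th/5th percentile ratio.

    For a lognormal, ln(p95 / p5) = 2 * Z95 * sigma and mean = median * exp(sigma**2 / 2).
    """
    sigma = math.log(p95_over_p5) / (2 * Z95)
    return median * math.exp(sigma ** 2 / 2)

# Hypothetical ratios: the median stays at 1 while the uncertainty widens.
for ratio in (1.0, 10.0, 100.0, 1e6):
    print(f"p95/p5 = {ratio:g}: mean / median = {lognormal_mean(1.0, ratio):.3g}")
```

How much this matters for a given estimate depends on how wide the assumed distribution is, which is the open question in the exchange above.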

As a side note, 10 of 161 (6.21 %) forecasters of the Existential Risk Persuasion Tournament (XPT), 4 experts and 6 superforecasters, predicted a nuclear extinction risk until 2100 of exactly 0. I guess these participants know the risk is higher than 0, but consider it astronomically low too.

I used to be persuaded by this type of argument, which is made in many contexts by the global catastrophic risk community. I think it often misses that the weight a model should receive is not independent of its predictions. I would say high extinction risk goes against the low prior established by historical conflicts.

I am also not aware of any detailed empirical quantitative models estimating the probability of extinction due to nuclear war.

That's an odd prior. I can see a case for a prior that gets you to <10^-6, maybe even 10^-9, but how can you get to substantially below 10^-9 annual with just historical data???

Sapiens hasn't been around for longer than a million years! (and conflict with homo sapiens or other human subtypes still seems like a plausible reason for extinction of other human subtypes to me). There have only been maybe 4 billion species total in all of geological history! Even if you have almost certainty that literally no species has ever died of conflict, you still can't get a prior much lower than 1/4,000,000,000! (10^-9).

EDIT: I suppose you can multiply average lifespan of species and their number to get to ~10^-15 or 10^-16 prior? But that seems like a much worse prior imo for multiple reasons, including that I'm not sure no existing species has died of conflict (and I strongly suspect specific ones have).
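As a rough check on the two priors mentioned above, here is a minimal sketch of the arithmetic. The species count is the comment's own ballpark figure. The mean species lifespan is a hypothetical placeholder chosen only for illustration, since the thread does not state one.

```python
# Ballpark figure from the comment above: total species in geological history.
TOTAL_SPECIES = 4e9
# Hypothetical placeholder, NOT from the thread: mean species lifespan in years.
ASSUMED_MEAN_LIFESPAN_YEARS = 1e6

def per_species_prior(total_species):
    """Agnostic prior if at most one species out of all species died of conflict."""
    return 1.0 / total_species

def per_species_year_prior(total_species, mean_lifespan_years):
    """Same idea, but spread over every year each species existed."""
    return 1.0 / (total_species * mean_lifespan_years)

print(per_species_prior(TOTAL_SPECIES))
print(per_species_year_prior(TOTAL_SPECIES, ASSUMED_MEAN_LIFESPAN_YEARS))
```

Under these assumptions the first prior is 2.5e-10, within an order of magnitude of 10^-9, and the second lands near the 10^-16 end of the range in the edit above. A shorter assumed lifespan would move it toward 10^-15.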

Thanks for the comment, Linch.

Fitting a power law to the N rightmost points of the tail distribution of annual conflict deaths as a fraction of the global population leads to an annual probability of a conflict causing human extinction lower than 10^-9 for N no higher than 33 (for which the annual conflict extinction risk is 1.72*10^-10), where each point corresponds to one year from 1400 to 2000. The 33 rightmost points have annual conflict deaths as a fraction of the global population of at least 0.395 %. Below is how the annual conflict extinction risk evolves with the lowest annual conflict deaths as a fraction of the global population included in the power law fit (the data is here; the post is here).

The leftmost points of the tail suggest a high extinction risk because the tail distribution is quite flat for very low annual conflict deaths as a fraction of the global population.
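The kind of fit described above can be sketched roughly as follows. This is not the actual analysis or data: it is a minimal least-squares fit of log tail probability against log value on the N largest points, run on synthetic Pareto-shaped data. The function names, the synthetic series, and the parameters `ALPHA` and `X_MIN` are all assumptions made for illustration.

```python
import math

def fit_power_law_tail(values, n_tail):
    """Fit log P(X >= x) = intercept + slope * log(x) on the n_tail largest values."""
    total = len(values)
    largest = sorted(values, reverse=True)[:n_tail]
    log_x = [math.log(x) for x in largest]
    # The k-th largest value has empirical tail probability k / total.
    log_p = [math.log((k + 1) / total) for k in range(n_tail)]
    mean_x = sum(log_x) / n_tail
    mean_p = sum(log_p) / n_tail
    sxx = sum((lx - mean_x) ** 2 for lx in log_x)
    sxy = sum((lx - mean_x) * (lp - mean_p) for lx, lp in zip(log_x, log_p))
    slope = sxy / sxx
    intercept = mean_p - slope * mean_x
    return intercept, slope

def tail_probability(intercept, slope, x):
    """Extrapolate the fitted power law to P(X >= x)."""
    return math.exp(intercept + slope * math.log(x))

# Synthetic stand-in for "annual conflict deaths as a fraction of population":
# one value per year for 601 years, placed exactly on a Pareto tail.
TOTAL_YEARS = 601
ALPHA = 1.5    # hypothetical tail exponent
X_MIN = 1e-5   # hypothetical smallest annual death fraction
synthetic = [X_MIN * (k / TOTAL_YEARS) ** (-1.0 / ALPHA)
             for k in range(1, TOTAL_YEARS + 1)]

intercept, slope = fit_power_law_tail(synthetic, n_tail=33)
# Extrapolated annual probability that deaths reach 100 % of the population.
p_extinction = tail_probability(intercept, slope, 1.0)
print(slope, p_extinction)
```

With real data the points do not lie exactly on a line, so the extrapolated value depends strongly on how many tail points are included, which is the sensitivity the comment above describes.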

Interesting numbers! I think that kind of argument is too agnostic, in the sense it does not leverage the empirical evidence we have about human conflicts, and I worry it leads to predictions which are very off. For example, one could also argue the annual probability of a human born in 2024 growing to a height larger than the distance from the Earth to the Sun cannot be much lower than 10^-6 because Sapiens have only been around for 1 M years or so. However, the probability should be way way lower than that (excluding genetic engineering, very long light appendages, unreasonable interpretations of what I am referring to, like estimating the probability from the chance a spaceship with humans will collide with the Sun, etc.). One can see the probability of a (non-enhanced) human growing to such a height is much lower than 10^-6 based on the tail distribution of human heights. Since height roughly follows a normal distribution, the probability of huge heights is negligible. It might be the case that past human heights (conflicts) are not informative of future heights (conflicts), but past heights still seem to suggest an astronomically low chance of huge heights (conflicts causing human extinction).

It is also unclear from past data whether annual conflict deaths as a fraction of the global population will increase.

Below is some data on the linear regression of the logarithm of the annual conflict deaths as a fraction of the global population on the year.

As I have said:

Thanks for engaging! For some reason I didn't get a notification on this comment.

Broadly I think you're not accounting enough for model uncertainty. I absolutely agree that arguments like mine above are too agnostic if deployed very generally. It would be foolish to throw away all information we have in favor of very agnostic flat priors.

However, I think most of those situations are importantly disanalogous to the question at hand here. To answer most real-world questions, we have multiple lines of reasoning consistent with our less agnostic models, and can reduce model uncertainty by appealing to more than one source of confidence.

I will follow your lead and use the "Can a human born in 2024 grow to the length of one astronomical unit" question to illustrate why I think that question is importantly disanalogous to the probability of conflict causing extinction. I think there are good reasons both outside the usual language of statistical modeling, and within it. I will focus on "outside" because I think that's a dramatically more important point, and also more generalizable. But I will briefly discuss a "within-model" reason as well, as it might be more persuasive to some readers (not necessarily yourself).

Below is Vasco's analogy for reference:

Outside-model reasons:

For human height we have very strong principled, scientific, reasons to believe that someone born in 2024 cannot grow to a height larger than one astronomical unit. Note that none of them appeal to a normal distribution of human height:

I say all of this as someone without much of a natural sciences background. I suspect if I talk to a biophysicist or astrophysicist or someone who studies anatomy, they can easily point to many more reasons why the proposed forecast is impractical, without appealing to the normal distribution of human height. All of these point strongly against human height reaching very large values, never mind literally one astronomical unit. Now some theoretical reasons can provide us with good explanatory power about the shape of the statistical distribution as well (for example, things that point to the generator of human height being additive and thus following the Central Limit Theorem), but afaik those theoretical arguments are weaker/lower confidence than the bounding arguments.

In contrast, if the only thing I knew about human height is the empirical observed data on human height so far, (eg it's just displayed as a column of numbers), plus some expert assurances that the data fits a normal distribution extremely well, I will be much less confident that human height cannot reach extremal values.

Put more concretely, human average male height is maybe 172cm with a standard deviation of 8cm (The linked source has a ton of different studies; I'm just eyeballing the relevant numbers). Ballpark 140 million people born a year, ~50% of which are men. This corresponds to ~5.7 sds. 5.7 sds past 172cm is 218cm. If we throw in 3 more s.d.s (which corresponds to >>1,000,000 difference on likelihood on a normal distribution), we get to 242cm. This is a result that will be predicted as extremely unlikely from statistically modeling a normal distribution, but "allowed" with the more generous scientific bounds from 1-6 above (at least, they're allowed at my own level of scientific sophistication; again I'm not an expert on the natural sciences).
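The back-of-the-envelope numbers above can be reproduced with a short sketch. The mean and standard deviation are the eyeballed figures from the comment, and the model is a single normal distribution, which is exactly the assumption being questioned. The function names are illustrative.

```python
import math

MEAN_CM = 172.0  # eyeballed average male height from the comment
SD_CM = 8.0      # eyeballed standard deviation from the comment

def upper_tail(z):
    """P(Z > z) for a standard normal, via erfc so tiny tails keep precision."""
    return 0.5 * math.erfc(z / math.sqrt(2))

def height_at(z):
    """Height in cm that lies z standard deviations above the mean."""
    return MEAN_CM + z * SD_CM

for z in (5.7, 8.7):
    print(f"{z} sds -> {height_at(z):.1f} cm, tail probability {upper_tail(z):.2e}")

# How much less likely the normal model makes 8.7 sds than 5.7 sds.
likelihood_ratio = upper_tail(5.7) / upper_tail(8.7)
print(f"ratio: {likelihood_ratio:.2e}")
```

Under the normal model the extra three standard deviations cost a factor in the billions, comfortably above the 1,000,000 quoted in the comment.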

Am I confident that someone born in 2024 can't grow to be 1 AU tall? Yep, absolutely.

Am I confident that someone born in 2024 can't grow to be 242cm? Nope. I just don't trust the statistical modeling all that much.

(If you disagree and are willing to offer 1,000,000:1 odds on this question, I'll probably be willing to bet on it).

This is why I think it's important to be able to think about a problem from multiple angles. In particular, it often is useful (outside of theoretical physics?) to think of real physical reality as a real concrete thing with 3 dimensions, not (just) a bunch of abstractions.[1]

Statistical disagreement

I just don't have much confidence that you can cleanly differentiate a power law or log-normal distribution from more extreme distributions from the data alone. One of my favorite mathy quotes is "any extremal distribution looks like a straight-line when drawn on a log-log plot with a fat marker[2]".

Statistically, when your sample size is <1000, it's not hard to generate a family of distributions that have much-higher-than-observed numbers with significant but <1/10,000 probability. Theoretically, I feel like I need some (ideally multiple) underlying justifications[3] for a distribution's shape before doing a bunch of curve fits and calling it a day. Or like, you can do the curve fit, but I don't see how the curve fit alone gives us enough info to rule out 1/100,000 or 1 in 1,000,000 or 1 in 1,000,000,000 possibilities.

Now for normal distributions, or normal-ish distributions, this may not matter all that much in practice. As you say "height roughly follows a normal distribution," so as long as a distribution is ~roughly normal, some small divergences don't get you too far away (maybe with a slightly differently shaped underlying distribution that fits the data it's possible to get a 242 cm human, maybe even 260 cm, but not 400cm, and certainly not 4000 cm). But for extremal distributions this clearly matters a lot. Different extremal distributions predict radically different things at the tail(s).

[1] Early on in my career as a researcher, I reviewed a white paper which made this mistake rather egregiously. Basically they used a range of estimates for two different factors (cell size and number of cells per feeding tank) to come to a desired conclusion, without realizing that the two different factors combined lead to a physical impossibility (the cells will then compose exactly 100% of the tank).

[2] I usually deploy this line when arguing against people who claim they discovered a power law when I suspect something like ~log-normal might be a better fit. But obviously it works in the other direction as well, the main issue is model uncertainty.

[3] Tho tbh, even if you did have strong, principled justifications for a distribution's shape, I still feel like it's hard to get >2 additional OOMs of confidence in a distribution's underlying shape for non-synthetic data. ("These factors all sure seem relevant, and there are a lot of these factors, and we have strong principled reasons to see why they're additive to the target, so the central limit theorem surely applies" sure seems pretty persuasive. But I don't think it's more than 100:1 odds persuasive to me).

To add to this, assuming your numbers are right (I haven't checked), there have been multiple people born since 1980 who ended up taller than 242cm, which I expect would make any single normal distribution extremely unlikely to be a good model (either a poor fit on the tails, or a poor fit on a large share of the data), given our data: https://en.m.wikipedia.org/wiki/List_of_tallest_people

I suppose some have specific conditions that led to their height. I don’t know if all or most do.

Thanks, Linch. Strongly upvoted.

Right, by "the probability of huge heights is negligible", I meant way more than 2.42 m, such that the details of the distribution would not matter. I would not get an astronomically low probability of at least such a height based on the methodology I used to get an astronomically low chance of a conflict causing human extinction. To arrive at this, I looked into the empirical tail distribution. I did not fit a distribution to the 25th to 75th range, which is probably what would have suggested a normal distribution for height, and then extrapolated from there. I said I got an annual probability of conflict causing human extinction lower than 10^-9 using 33 or fewer of the rightmost points of the tail distribution. The 33rd tallest person whose height was recorded was actually 2.42 m, which illustrates I would not have gotten an astronomically low probability for at least 2.42 m.

I agree. What do you think is the annualised probability of a nuclear war or volcanic eruption causing human extinction in the next 10 years? Do you see any concrete scenarios where the probability of a nuclear war or volcanic eruption causing human extinction is close to Toby's values?

I think power laws overestimate extinction risk. They imply the probability of going from 80 M annual deaths to extinction would be the same as going from extinction to 800 billion annual deaths, which very much overestimates the risk of large death tolls. So it makes sense the tail distribution eventually starts to decay much faster than implied by a power law, especially if this is fitted to the left tail.

On the other hand, I agree it is unclear whether the above tail distribution suggests an annual probability of a conflict causing human extinction above/below 10^-9. Still, even my inside view annual extinction risk from nuclear war of 5.53*10^-10 (which makes no use of the above tail distribution) is only 0.0111 % (= 5.53*10^-10/(5*10^-6)) of Toby's value.

To be clear, I'm not accusing you of removing outliers from your data. I'm saying that you can't rule out medium-small probabilities of your model being badly off based on all the direct data you have access to, when you have so few data points to fit your model (not due to your fault, but because reality only gave you so many data points to look at).

My guess is that randomly selecting 1000 data points of human height and fitting a distribution will more likely than not generate a ~normal distribution, but this is just speculation, I haven't done the data analysis myself.

I haven't been able to come up with a good toy model or bounds that I'm happy with, after thinking about it for a bit (I'm sure less than you or Toby or others like Luisa). If you or other commenters have models that you like, please let me know!

(In particular I'd be interested in a good generative argument for the prior).

I do not want to take this bet, but I am open to other suggestions. For example, I think it is very unlikely that transformative AI, as defined in Metaculus, will happen in the next few years.

Huh? It was about 6 months for the 1957 pandemic.

I meant vaccines for diseases that didn't yet have a vaccine. The 1957 case was a vaccine for a new strain of influenza, when they already had influenza vaccines.

Possibly worth flagging: we had the Moderna vaccine within ~~two days of genome sequencing - a month before the first confirmed COVID death in the US.~~ a month or so. Waiting a whole year to release it to the public was a policy choice, not a scientific constraint. (Which is not to say that scaling up production would have been instant. Just that it could have been done a lot faster, if the policy will was there.)

I think the two-day meme is badly misleading. Having a candidate vaccine sequence on a computer is very different from "having the vaccine".

It looks like Moderna shipped its first trial doses to NIH in late February, more than a month later. I think that's the earliest reasonable date you could claim that "we had the vaccine". If you were willing to start putting doses in arms without any safety or efficacy testing at all, that's when you could start.

(Of course, if you did that you'd presumably also have done it with all the vaccine candidates that didn't work out, of which there's no shortage.)

I think even this is pretty optimistic because there was very little manufacturing capacity at that point.

I didn't say a lot of arms. 😛

But it's a fair point. Of course, in the absence of testing Moderna could have ramped up production much faster. But I'm not sure they would have even if they were allowed to - that's a pretty huge reputational risk.

Fair point - updated accordingly. (The core point remains.)

Thanks for the post! I wanted to add a clarification regarding the discussion of metagenomic sequencing.

China does have metagenomic sequencing. In fact, metagenomic sequencing was used in China to help identify the presence of a new coronavirus in the early Covid patient samples (https://www.nature.com/articles/s41586-020-2008-3).

Thanks Mike — a very useful correction. I'm genuinely puzzled as to why this didn't lead to a more severe early response given China's history with SARS. That said, I can't tell from the article how soon the sample from this patient was sequenced/analysed.

Thanks for writing this update Toby!

I'm far from an expert on the subject, but my impression was that a lot of people were convinced by the Rootclaim debate that it was not a lab leak. Is there a specific piece of evidence they might have missed to suggest that lab leak is still plausible? (The debate focused on genetically modified leaks, and unfortunately didn't discuss the possibility of a leak of a naturally evolved disease).

I think Toby's use of "evenly split" is a bit of a stretch in 2024 with the information available, but lab leak is definitely still plausible. To quote Scott in the review:

I think lab leak is now a minority position among people who looked into it, but it's not exactly a fringe view. I would guess at least some US intelligence agencies still think lab leak is more likely than not, for example.

I delivered this talk before the Rootclaim debate, though I haven't followed that debate since, so can't speak to how much it has changed views. I was thinking of the US intelligence community's assessments and the diversity of opinions among credible people who've looked into it in detail.

Have you seen data on spending for future pandemics before COVID and after?

Toby - I appreciated reading your updates based on the events of the last 5ish years.

I am wondering if you have also reconsidered the underlying analyses and assumptions that went into your initially published models? There's been a fair amount written about this; to me the best is from David Thorstad here:

https://reflectivealtruism.com/category/exaggerating-the-risks/

I would really value you engaging with the arguments he or others present, as a second kind of update.

Cheers

This was very good.

You didn't mention the good/bad aspects of hardware limitation on current AI growth (roughly, hardware is expensive and can theoretically be tracked, hurting development speed and helping governance). You did note that the expense kicked AI labs out of the forefront, but the strict dependence of modern frontier development on more compute has broader implications, seems to me, for risk.

Also, on the observations about air quality in the pandemic section, I realize that there's a lot more awareness about the importance of clean air, but is there any data out there on whether this is being implemented? Changes in filter purchase stats, HVAC install or service standards, technician best practices, customer requests, features of new air handlers, etc?

@Toby_Ord, I very much appreciated your speech (only wish I'd seen it before this week!) not to mention your original book, which has influenced much of my thinking.

I have a hot take[1] on your puzzlement that viral gain of function research (GoFR) hasn’t slowed, despite having possibly caused the COVID-19 pandemic, as well as the general implication that it does more to increase risks to humans than reduce them. Notwithstanding some additional funding bureaucracy, there’s been nothing like the U.S.'s 2014-17 GoFR moratorium.

From my (limited) perspective, the lack of a moratorium may stem from a simple conflict of interest in those we entrust with these decisions. Namely, we trust experts to judge their work’s safety, but these experts have at stake their egos and livelihoods — not to mention potential castigation and exclusion from their communities if they speak out. Moreover, if the idea that their research is unsafe poses such psychological threats, then we might expect impartial reasoning to become motivated rationalization for a continuation of GoFR. This conflict might thus explain not only the persistence of GoFR, but also the initial dismissal of the lab-leak theory as a “conspiracy theory”.

∗ ∗ ∗

“When you see something that is technically sweet, you go ahead and do it and you argue about what to do about it only after you have had your technical success. That is the way it was with the atomic bomb.”

— J. Robert Oppenheimer

“What we are creating now is a monster whose influence is going to change history, provided there is any history left, yet it would be impossible not to see it through, not only for military reasons, but it would also be unethical from the point of view of the scientists not to do what they know is feasible, no matter what terrible consequences it may have.”

— John von Neumann

Those words from those who developed the atomic bomb resonate today. I think they very much apply to GoFR — not to mention research in other fields like AI.

I’d love any feedback, especially by any experts in the field. (I'm not one.)

In terms of extinction I will not challenge your estimates, but in terms of "going back to the Middle Ages with no clear road to recovery", in my view nuclear war is massively above the rest. I wrote these two pieces that also explore how "AI" is perhaps the only strategy to avoid nuclear war (one and two).

Unfortunately, the industry is already aiming to develop agent systems. Anthropic's Claude now has "computer use" capabilities, and I recall that Demis Hassabis has also stated that agents are the next area to make big capability gains. I expect more bad news in this direction in the next five years.

This sounds like a hypothesis that makes predictions we can go check. Did you have any particular evidence in mind? This and this come to mind, but there is plenty of other relevant stuff, and many experiments that could be quickly done for specific domains/settings.

Note that you say "something very special" whereas my comment is actually about a stronger claim like "AI performance is likely to plateau around human level because that's where the data is". I don't dispute that there's something special here, but I think the empirical evidence about plateauing — that I'm aware of — does not strongly support that hypothesis.

Executive summary: The landscape of existential risks has evolved since The Precipice was written, with climate change risk decreasing, nuclear risk increasing, and mixed changes for pandemic and AI risks, while global awareness and governance of existential risks have improved significantly.

Key points:

This comment was auto-generated by the EA Forum Team. Feel free to point out issues with this summary by replying to the comment, and contact us if you have feedback.

Regarding existential risk of AI and global AI governance: the UN has convened a High-Level Advisory Body on AI which produced an interim report, Governing AI for Humanity, and issued a call for submissions/feedback on it (which I also responded to). It is due to publish the final, revised version based on this feedback in 'the summer of 2024', in time to be presented at the UN Summit of the Future, 20-23 September. (The final report has not yet been published at the time of writing this comment, 6 August.)

https://www.un.org/sites/un2.un.org/files/231025_press-release-aiab.pdf

https://www.un.org/en/ai-advisory-body

https://www.un.org/sites/un2.un.org/files/un_ai_advisory_body_governing_ai_for_humanity_interim_report.pdf

https://www.un.org/en/summit-of-the-future

See also report on interim workshop:

https://royalsociety.org/-/media/policy/publications/2024/un-role-in-international-ai-governance.pdf