Knowing the shape of future (long-term) value seems important for deciding which interventions would most effectively increase it. For example, if future value is roughly binary, the increase in its value is directly proportional to the decrease in the likelihood/severity of the worst outcomes, in which case existential risk reduction seems particularly useful[1]. On the other hand, if value is roughly uniform, focussing on multiple types of trajectory changes would arguably make more sense[2].

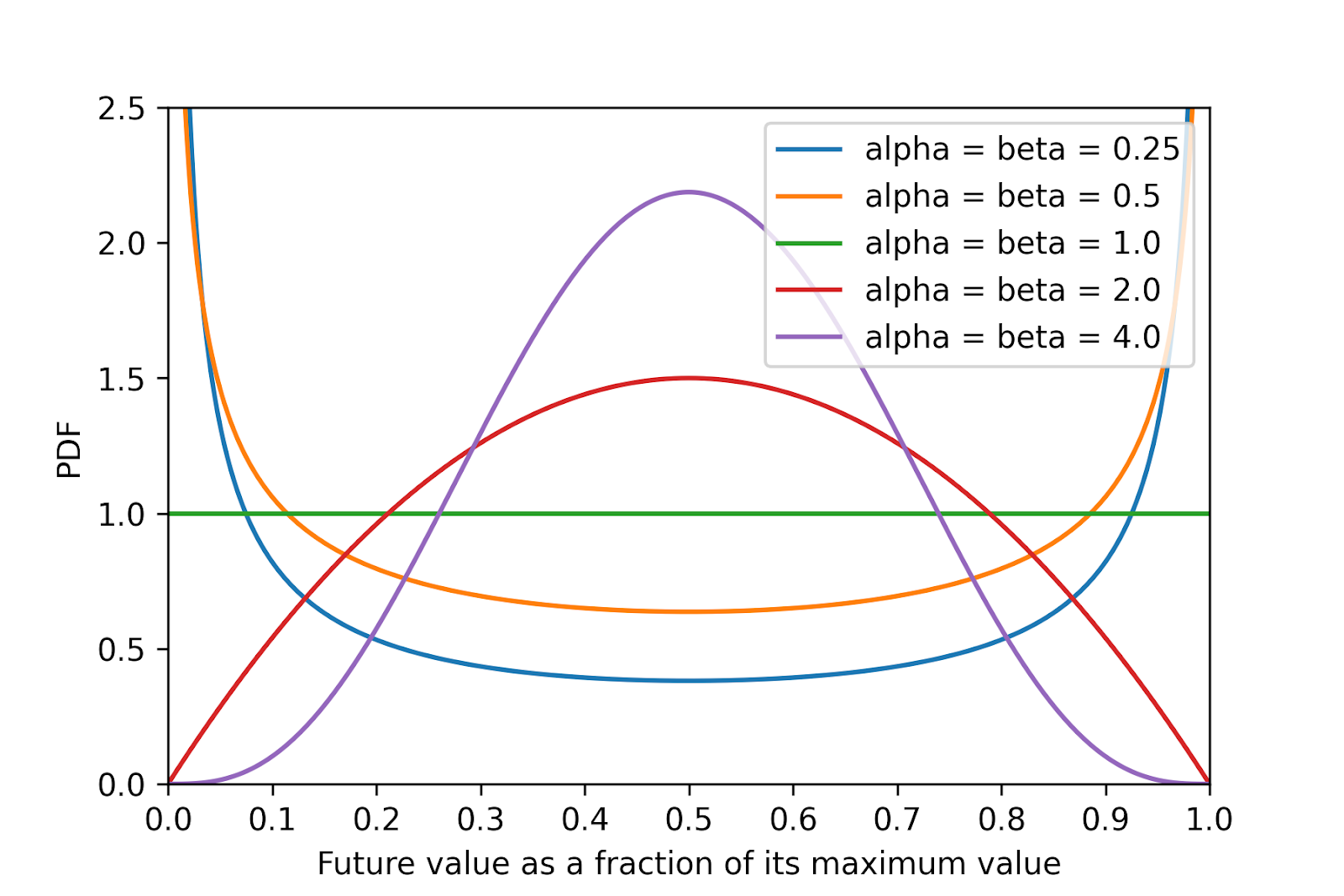

So I wonder what the shape of future value is. To illustrate the question, I have plotted in the figure the probability density function (PDF) of various beta distributions representing the future value as a fraction of its maximum value[3].

For simplicity, I have assumed future value cannot be negative. The mean is 0.5 for all distributions, which is Toby Ord’s guess for the total existential risk given in The Precipice[4], and implies the distribution parameters alpha and beta have the same value[5]. As this common value tends to 0, the probability mass concentrates near 0 and 1, so the future value becomes more binary.
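To make the last point concrete, here is a minimal sketch (not the Colab's actual calculations) using only Python's standard library. The parameter values 10, 1 and 0.1, the 0.1 cutoff for "near the extremes", and the helper names are all illustrative assumptions of mine. For a symmetric beta distribution with alpha = beta = a, the variance is 1 / (4 (2a + 1)), which approaches 0.25 (the variance of a fair coin flip between 0 and 1) as a tends to 0.

```python
import random


def symmetric_beta_variance(a: float) -> float:
    """Exact variance of Beta(a, a), which simplifies to 1 / (4 * (2a + 1))."""
    return 1.0 / (4.0 * (2.0 * a + 1.0))


def tail_fraction(a: float, n: int = 20000, cutoff: float = 0.1, seed: int = 0) -> float:
    """Monte Carlo estimate of the share of outcomes below `cutoff` or above 1 - `cutoff`.

    The cutoff is an arbitrary (assumed) threshold for "close to the worst or best outcome".
    """
    rng = random.Random(seed)  # fixed seed so the estimate is reproducible
    hits = 0
    for _ in range(n):
        x = rng.betavariate(a, a)  # alpha = beta = a, so the mean is 0.5
        if x < cutoff or x > 1.0 - cutoff:
            hits += 1
    return hits / n


# Illustrative parameter values (assumptions, not taken from the post's figure).
for a in (10.0, 1.0, 0.1):
    print(
        f"alpha = beta = {a}: variance = {symmetric_beta_variance(a):.4f}, "
        f"share of outcomes near 0 or 1 = {tail_fraction(a):.3f}"
    )
```

With alpha = beta = 1 the distribution is uniform, so about 20 % of outcomes fall within 0.1 of either extreme; smaller values push that share up, and larger values concentrate outcomes around 0.5.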

[1] Existential risk was originally defined in Bostrom 2002 as: “One where an adverse outcome would either annihilate Earth-originating intelligent life or permanently and drastically curtail its potential.”

[2] Although trajectory changes encompass existential risk reduction.

[3] The calculations are in this Colab.

[4] “If forced to guess, I’d say there is something like a one in two chance that humanity avoids every existential catastrophe and eventually fulfills its potential: achieving something close to the best future open to us.”

[5] According to Wikipedia, the expected value of a beta distribution is alpha/(alpha + beta), which equals 0.5 for alpha = beta.

That depends on what you mean by "existential risk" and "trajectory change". Consider a value system that says that future value is roughly binary, but that we would end up near the bottom of our maximum potential value if we failed to colonise space. Proponents of that view could find advocacy for space colonisation useful, and some might find it more natural to view that as a kind of trajectory change. Unfortunately it seems that there's no complete consensus on how to define these central terms. (For what it's worth, the linked article on trajectory change seems to define existential risk reduction as a kind of trajectory change.)

FWIW I take issue with that definition, as I just commented in the discussion of that wiki page here.