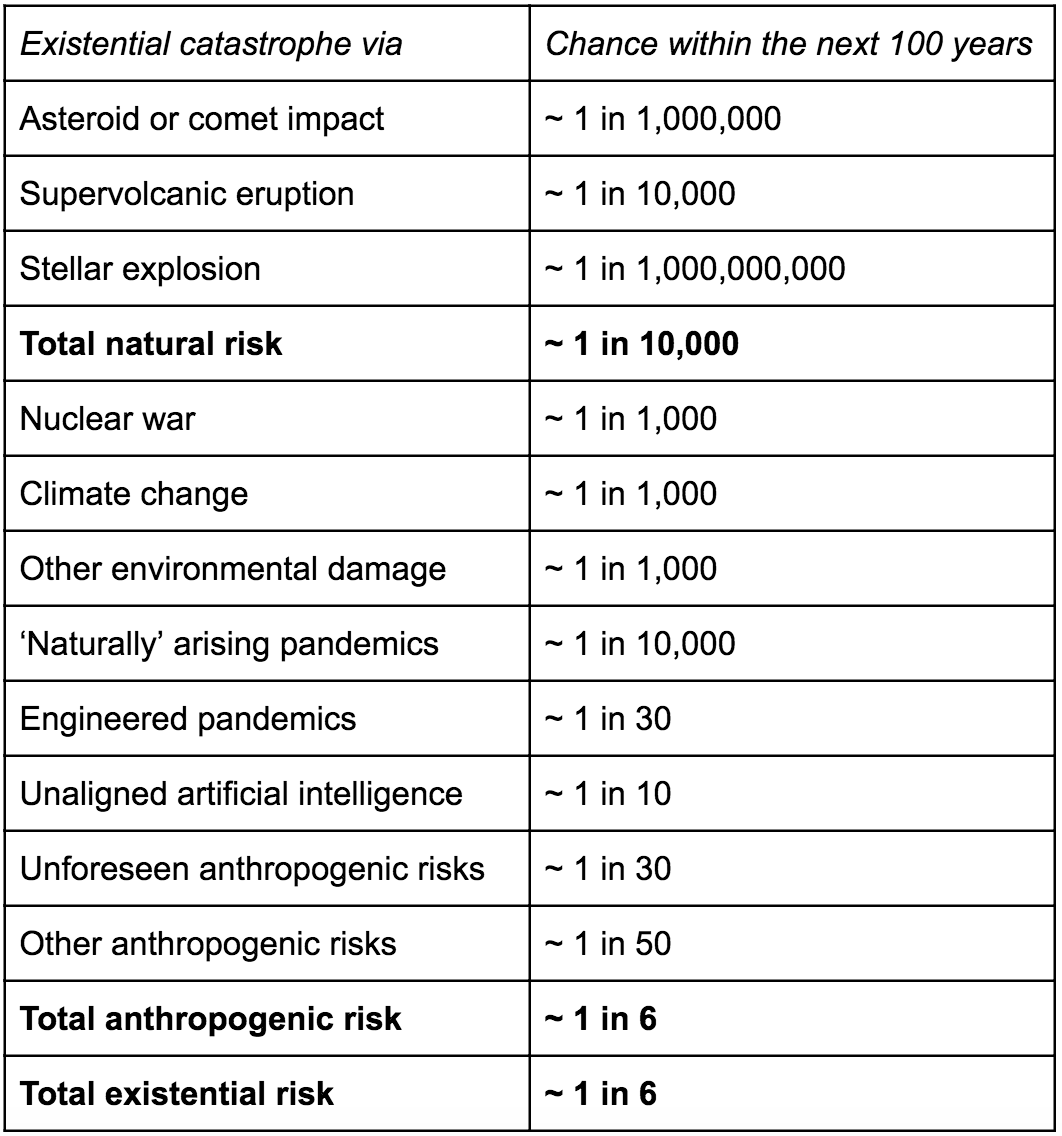

It's broadly agreed in the EA community that the development of unaligned AGI is the most pressing problem, with some saying that we could have AGI within the next 30 years or so. In The Precipice, Toby Ord estimates that the existential risk from unaligned AGI is 1 in 10 over the next century. On 80,000 Hours, 'positively shaping the development of artificial intelligence' is at the top of its list of highest priority areas.

Yet outside of EA, basically no one is worried about AI. If you talk to strangers about other potential existential risks like pandemics, nuclear war, or climate change, it makes sense to them. If you speak to a stranger about your worries about unaligned AI, they'll think you're insane (and that you watch too many sci-fi films).

On a quick scan of some mainstream news sites, it's hard to find much about existential risk and AI. There are bits here and there about how AI could be discriminatory, but mostly the focus is on useful things AI can do, e.g. 'How rangers are using AI to help protect India's tigers'. In fact (and this is after about 5 minutes of searching, so not a full-blown analysis), the overall sentiment seems to be generally positive. That is totally at odds with what you see in the EA community (I know there is acknowledgement of how AI could be really positive, but the discourse is mainly about how bad it could be). By contrast, if you search for nuclear war, pretty much every mainstream news site is talking about it. It's true we're at a slightly riskier time at the moment, but I reckon most EAs would still say the risk of unaligned AGI is higher than the risk of nuclear war, even given the current tensions.

So if it's such a big risk, why is no one talking about it?

Why is it not on the agenda of governments?

Learning about AI, I feel like I should be terrified, but when I speak to people who aren't in EA, I feel like my fears are overblown.

I genuinely want to hear people's perspectives on why it's not talked about, because without mainstream support for the idea that AI is a risk, I feel like it's going to be a lot harder to get to where we want to be.

I recently found a Swiss AI survey that indicates that many people do care about AI.

[This is only very weak evidence against your thesis, but might still interest you 🙂.]

Population – 1245 people

Opinion Leaders – 327 people [from the economy, public administration, science and education]

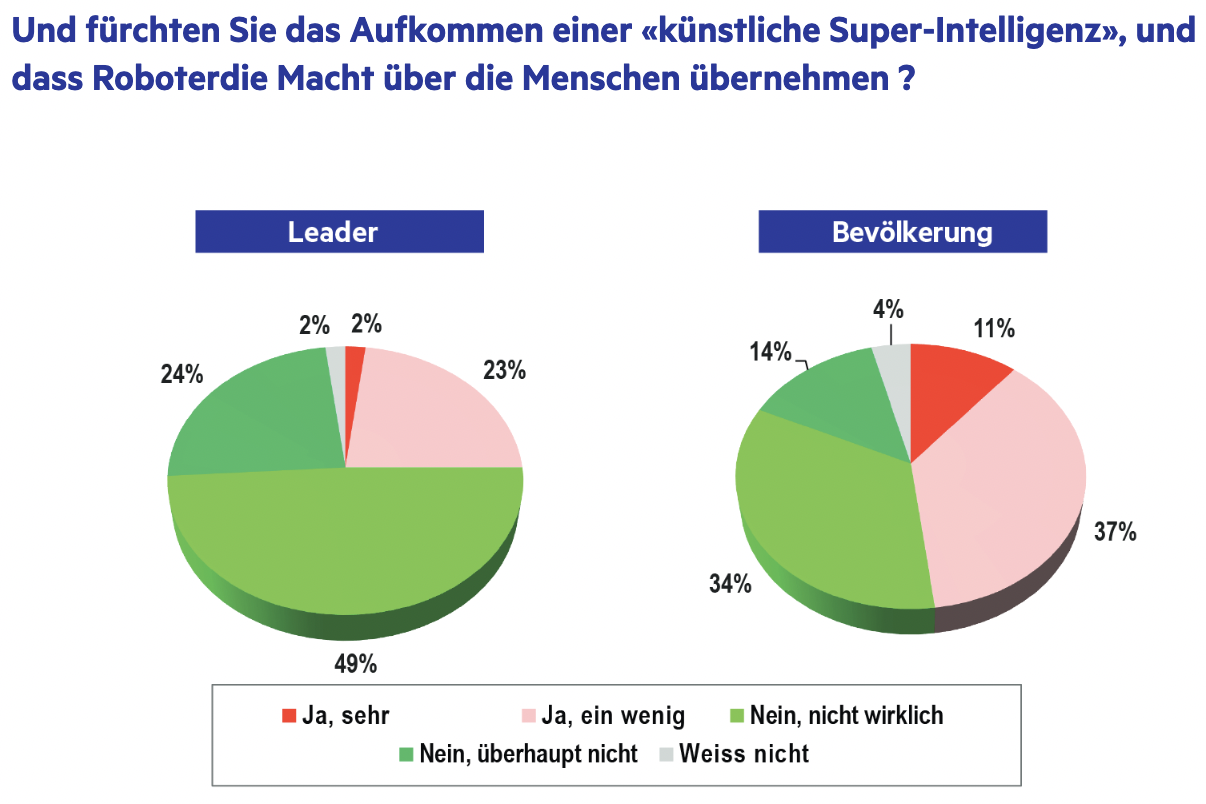

The question:

"Do you fear the emergence of an 'artificial super-intelligence', and that robots will take power over humans?"

From the general population, 11% responded "Yes, very", and 37% responded "Yes, a bit".

So roughly half of the respondents who expressed any sentiment were at least somewhat worried (11% + 37% = 48% of the general population).

The 'opinion leaders', however, are much less concerned: only 2% have a lot of fear and 23% have a bit of fear.

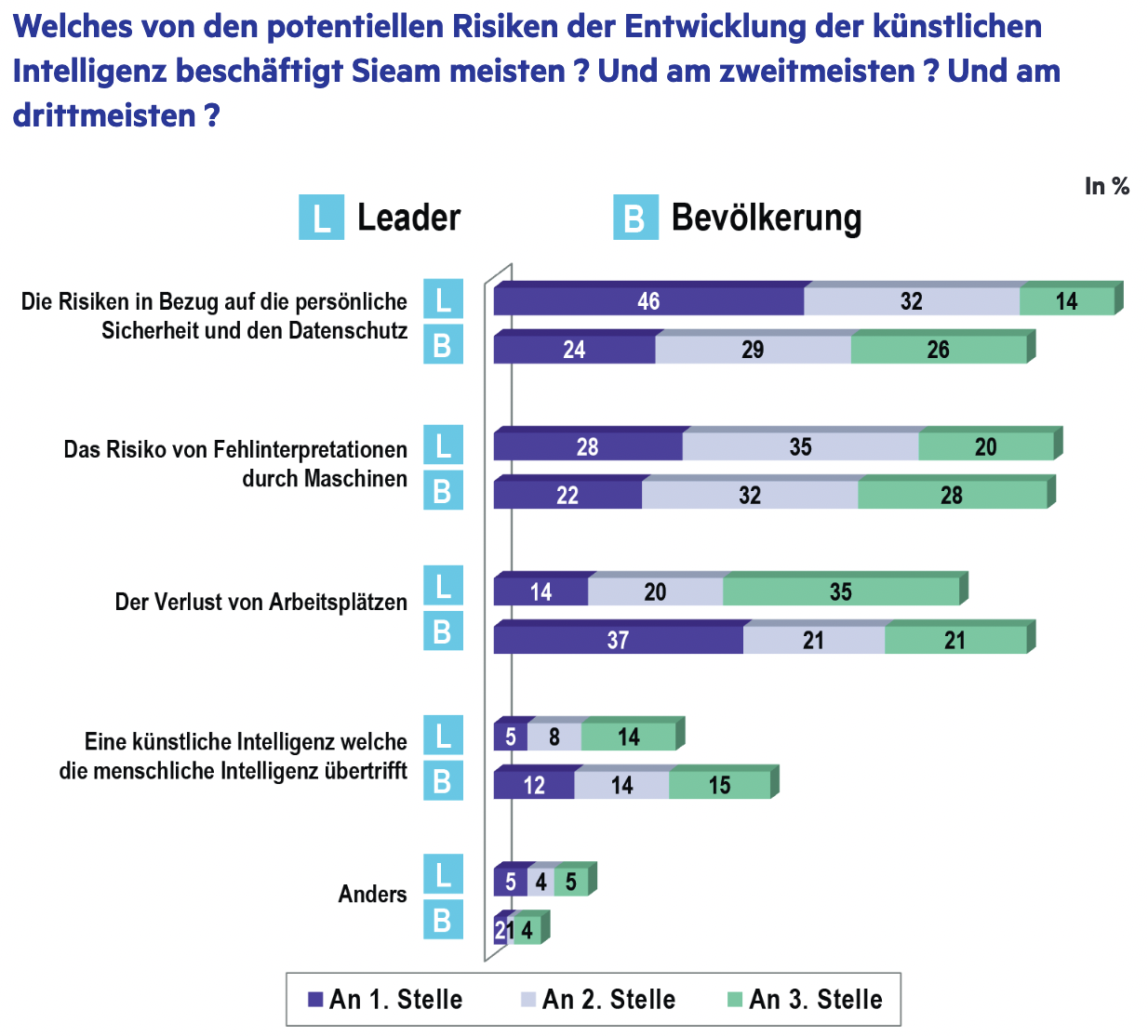

But the same study also found that only 41% of respondents from the general population placed AI becoming more intelligent than humans among their 'first 3 risks of concern', out of a choice of 5 risks.

Only for 12% of respondents was it the biggest concern. 'Opinion leaders' were again more optimistic – only 5% of them thought AI intelligence surpassing human intelligence was the biggest concern.

Option 1: T