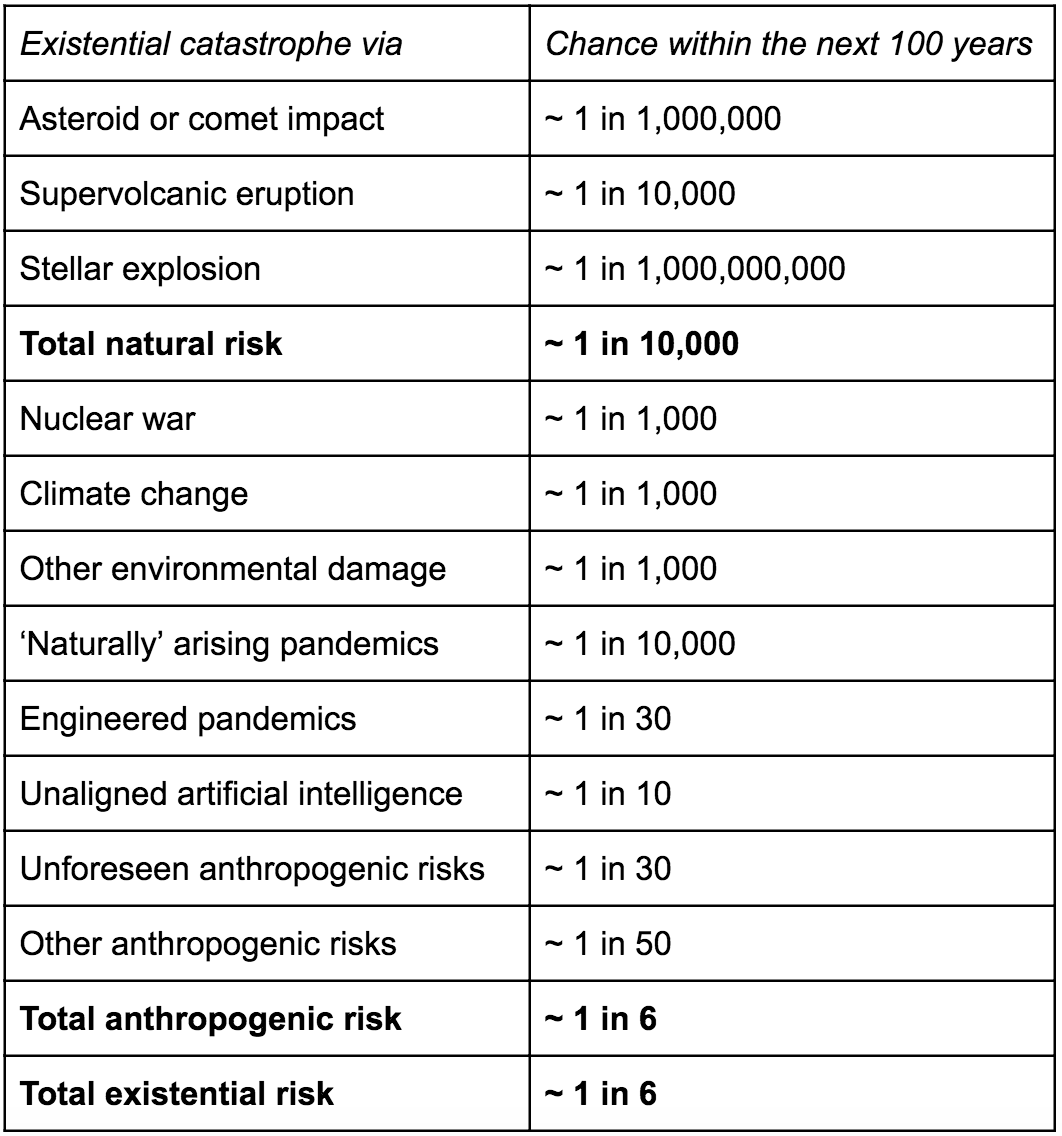

It's generally agreed in the EA community that the development of unaligned AGI is the most pressing problem, with some saying that we could have AGI within the next 30 years or so. In The Precipice, Toby Ord estimates the existential risk from unaligned AGI at 1 in 10 over the next century. On 80,000 Hours, 'positively shaping the development of artificial intelligence' tops the list of its highest-priority areas.

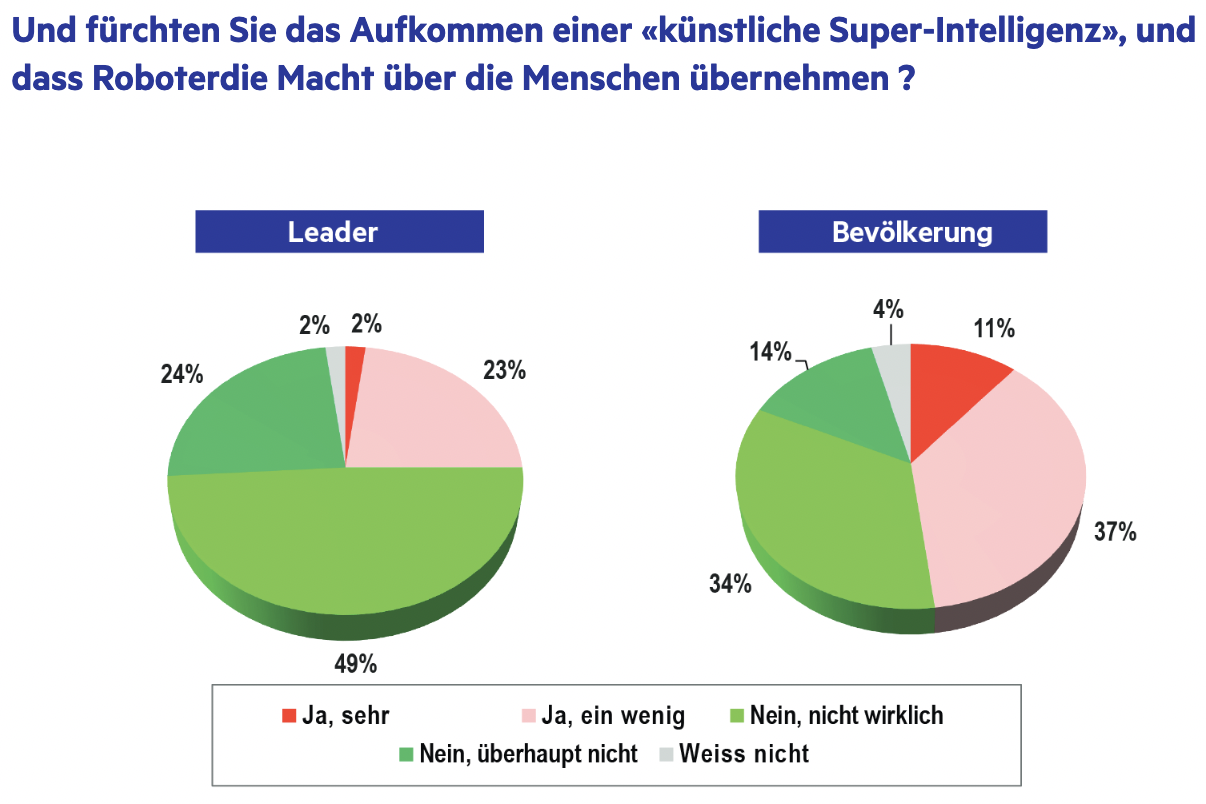

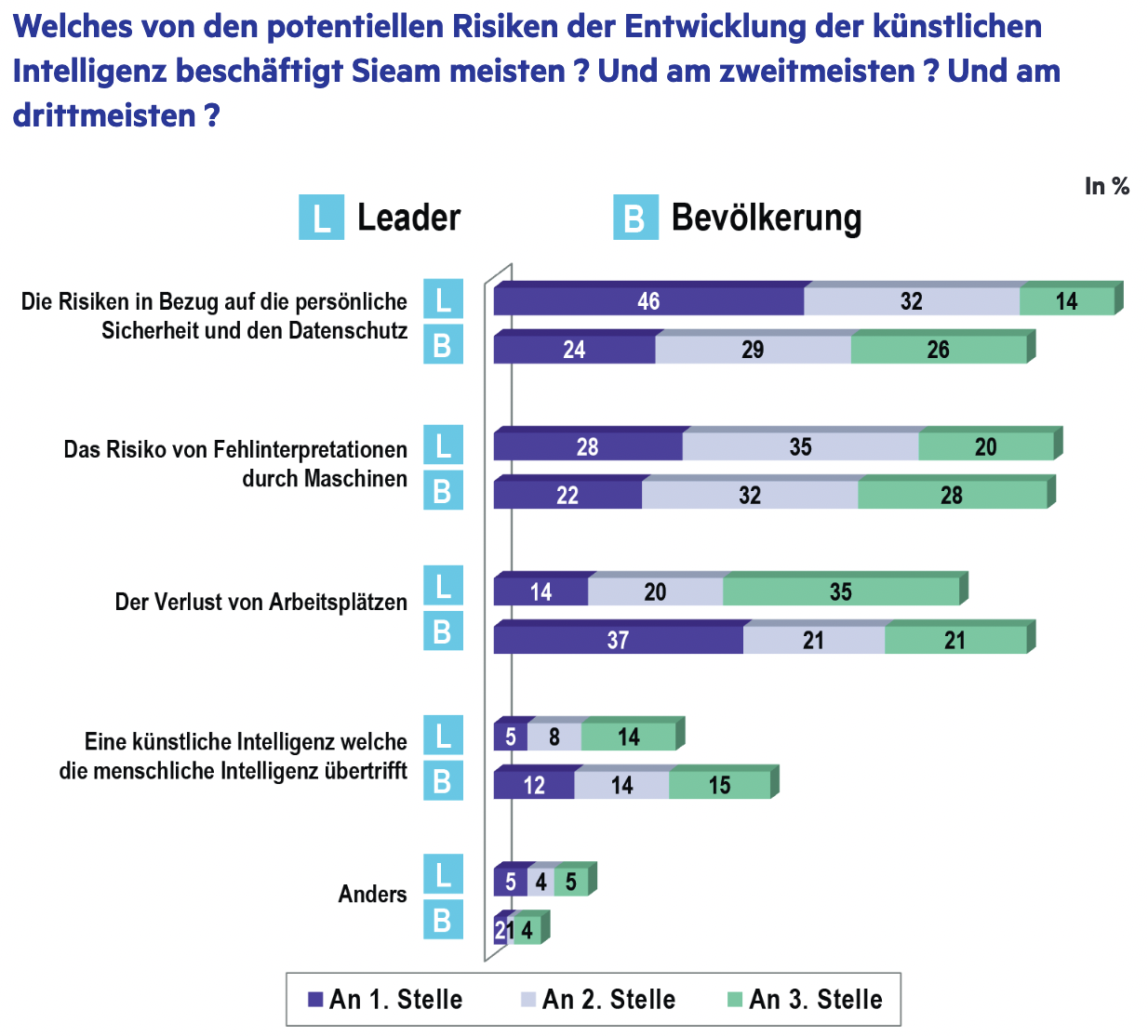

Yet outside of EA, basically no one is worried about AI. If you talk to strangers about other potential existential risks like pandemics, nuclear war, or climate change, it makes sense to them. If you tell a stranger about your worries about unaligned AI, they'll think you're insane (and that you watch too many sci-fi films).

On a quick scan of some mainstream news sites, it's hard to find much about existential risk and AI. There are bits here and there about how AI could be discriminatory, but mostly the focus is on useful things AI can do, e.g. 'How rangers are using AI to help protect India's tigers'. In fact (and this is after about 5 minutes of searching, so not a full-blown analysis), the overall sentiment seems generally positive. This is totally at odds with what you see in the EA community (I know there is acknowledgement of how AI could be really positive, but mainly the discourse is about how bad it could be). By contrast, if you search for nuclear war, pretty much every mainstream news site is talking about it. It's true we're at a slightly more risky time at the moment, but I reckon most EAs would still say the risk of unaligned AGI is higher than the risk of nuclear war, even given the current tensions.

So if it's such a big risk, why is no one talking about it?

Why is it not on the agenda of governments?

Learning about AI, I feel like I should be terrified, but when I speak to people who aren't in EA, I feel like my fears are overblown.

I genuinely want to hear people's perspectives on why it's not talked about, because without mainstream support of the idea that AI is a risk, I feel like it's going to be a lot harder to get to where we want to be.

A lot of it, I would guess, comes down to a lack of exposure to the reasonable version of the argument.

A key bottleneck would therefore be the media, as it was for climate change.

Trying to upload "media brain", here are reasons why you might not want to run a story on this topic (apologies if this seems dismissive; that is not the intent. I broadly sympathize with the thesis that AGI is at least a potential major cause area, but it may be helpful to channel where the dismissiveness comes from on a deeper level)...

- The thesis is associated with science fiction. Taking science fiction seriously as an adult is associated with escapism and a refusal to accept reality.

- It is seen as something only computer science "nerds", if you'll pardon the term, worry about. This makes it easy to dismiss the underlying concept as reflecting a blinkered vision of the world. The idea is that if only those people went outside and "touched grass", they would develop a different worldview - that the worldview is a product of being stuck inside a room looking at a computer screen all day.

- There is something inherently suspicious about CS people looking at the problem of human suffering and concluding that, more than helping people in tangible ways in the physical world, the single most important thing other people should be doing is to be more like them and to do more of the things they are already doing. Of course the paper clip optimizer is going to say that increasing the supply of paper clips is the most pressing problem in the universe. In fact, it's one reason why media people love to run stories about the media itself!

- In any case, there appears to be no theory of change along the lines of "if only we did this, that would take care of that". At least with climate change there was the basic idea of reducing carbon emissions. What is the "reducing carbon emissions" of AI x-risk? If there is nothing we can do that would make a difference, we might as well forget about it.

What might help overcome these issues?

To start with, you'd need some kind of translator, an idea launderer. Someone

Basically, a human equivalent of the BBC. Then have them produce documentaries on the topic; some might take off.

Thanks for this comment; it's exactly the type of thing I was looking for. The person who comes to mind for me is Louis Theroux. Although his documentaries are mainly about people rather than 'things', some of them grapple with issues of ethics or morality. I think it might be a bit of a stretch to imagine this actually happening, but I would say he does meet the criteria.