There's a huge amount of energy spent on how to get the most QALYs/$. And a good amount of energy spent on how to increase total $. And you might think that across those efforts, we are succeeding in maximizing total QALYs.

I think a third avenue is underinvestigated: marginally improving the effectiveness of ineffective capital. That's to say, improving outcomes, only somewhat, for the pool of money that is not at all EA-aligned.

This cash is not being spent optimally, and likely never will be. But the sheer volume could make up for the lack of efficacy.

Say you have the option to work for the foundation of one of two donors:

- Donor A only has an annual giving budget of $100,000, but will do with that money whatever you suggest. If you say “bed nets,” he says “how many?”

- Donor B has a much larger budget of $100,000,000, but has much stronger views, and in fact, only wants to donate domestically to the US.

Donor B feels overlooked to me, despite the fact that even within the US, even without access to any of the truly most effective charities, there are still lots of opportunities to do marginally better.

In practice, I note a conspicuous lack of EAs working for Donor B-like characters. There does not seem to be any kind of concerted effort to influence ineffective foundations.[1]

Most money is not EA money

I’ve often heard of the Seeing Eye Dog argument for the overwhelming importance of EA:

One seeing eye dog charity claims it costs ‘$42,000 or more’ to train the dog and provide instructions to the user. A cataract charity claims to be able to perform the procedure for $25.

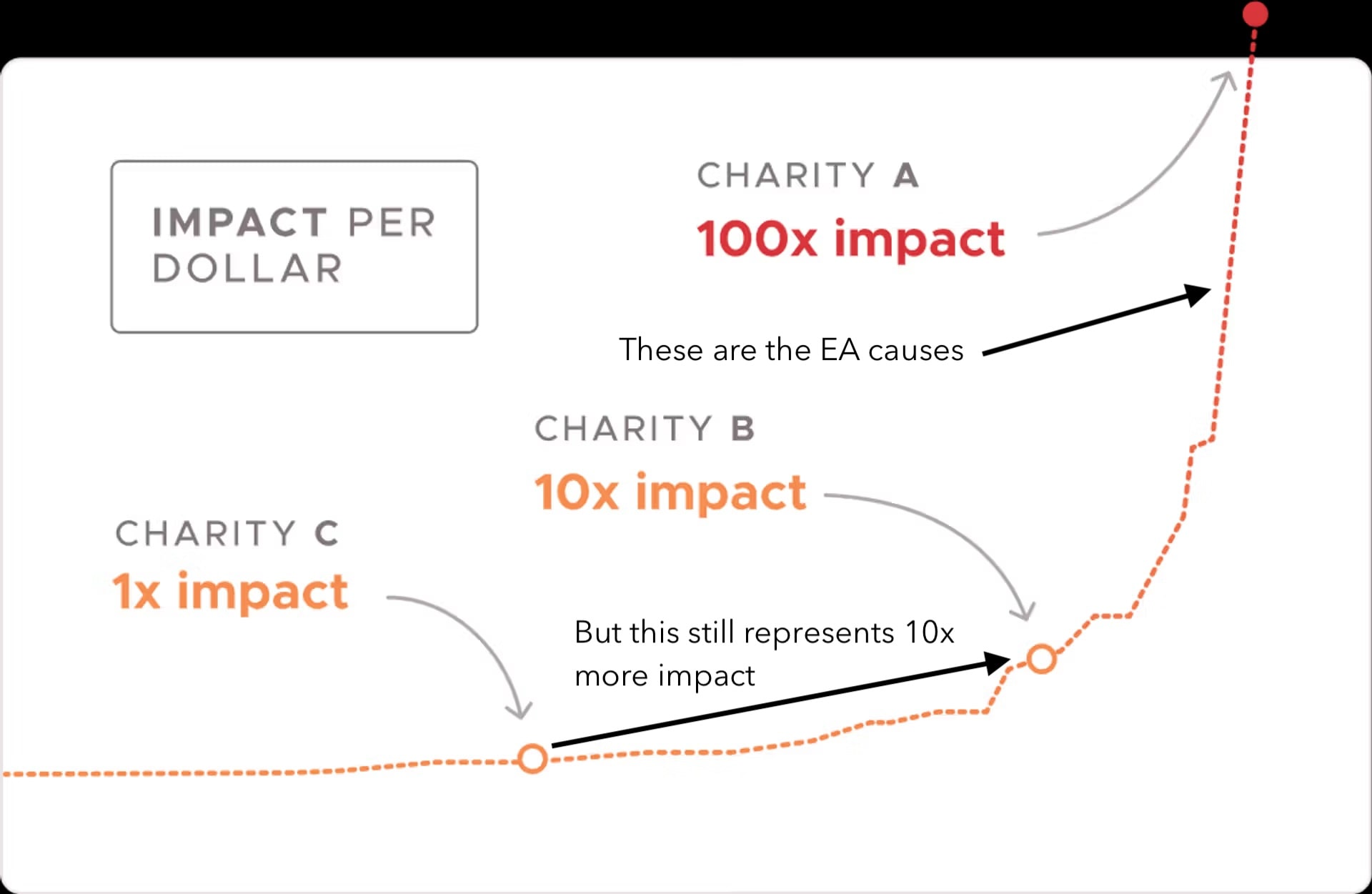

Less anecdotally, an 80k report highlights the power law in the effectiveness of global health interventions.

The power law of impact is a really strong argument for prioritizing QALYs/$, even at the cost of overall dollars. If the best interventions are literally 100x or even 1,000x as impactful as the median ones, that is going to be tough to make up for.

On the other hand, there is a really steep power law on the dollars side too! Some people have way more money than others. And most money in the world is not EA money.

Take the Azim Premji Foundation. It has a massive endowment of $21 billion, yet there are no mentions of it on the EA Forum.

APF was founded with an explicit focus on education in India, so their grant making is pretty restricted, and it might feel like getting them to have anything to do with EA would be a huge long shot. But there is a lot of room between "status quo" and "fully optimized", and this space feels neglected (to EAs), tractable and highly scalable.

Modified from Giving What We Can

As a simplified model, let's take these numbers literally, and assume that the APF currently operates at a mere 1x. Their geographic and cause restrictions might mean that they'll never go to 100x, but 10x could be entirely plausible. If the status quo is that $20b of APF giving is like $0.2b of GiveWell giving, there's an opportunity to 10x that impact, and generate a net $1.8b in GiveWell-equivalent impact.

Those units aren't entirely intuitive and the numbers are largely made up, but they get across the point that moving huge sums of money from Charity C to Charity B matters a huge amount, even if you never get to Charity A.
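To make the arithmetic in this simplified model explicit, here is a rough Python sketch. The multipliers (1x for the status quo, 10x for the improved case, 100x as the GiveWell benchmark) are the illustrative figures from the text, not measurements, and the function name is made up for this example.

```python
def givewell_equivalent(dollars: float, multiplier: float, benchmark: float = 100.0) -> float:
    """Convert giving at a given effectiveness multiplier into GiveWell-equivalent dollars.

    `benchmark` is the multiplier assumed for GiveWell-level giving (100x in the text).
    """
    return dollars * multiplier / benchmark


APF_GIVING = 20e9  # the post's rounded $20b figure

status_quo = givewell_equivalent(APF_GIVING, multiplier=1.0)   # APF at 1x
improved = givewell_equivalent(APF_GIVING, multiplier=10.0)    # APF at 10x
net_gain = improved - status_quo

print(f"Status quo: ${status_quo / 1e9:.1f}b GiveWell-equivalent")
print(f"Improved:   ${improved / 1e9:.1f}b GiveWell-equivalent")
print(f"Net gain:   ${net_gain / 1e9:.1f}b GiveWell-equivalent")
```

Changing `multiplier` or `benchmark` shows how sensitive the net gain is to the assumed gap between the status quo and the best available giving.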

Going further, there could be room to negotiate or expand the charity's entire charter. It would perhaps not be impossible to argue that Vitamin A supplementation should count as an education intervention since it can prevent blindness. And in fact, APF has already begun to branch out into health, and specifically on the nutritional status of young children. Nudging them in this direction even a year earlier, or doing the work they now want to do in a marginally more effective way (even without say, redirecting the funds to Niger), could be hugely impactful.

How much money is there?

GiveWell already moves hundreds of millions of dollars per year, and in some sense that's a lot, but it pales in comparison to the total pool of capital that feels, in principle, "available".

As of 2025, there were roughly 3,000 billionaires representing a total net worth of about $16T. That is a lot of money! They might not give it all to charities, but they will have to do something with it, and we should work hard to make sure that something is as good as it reasonably can be.

There are already lots of very motivated EAs trying to direct Dustin Moskovitz’s relatively modest $12b. There seem to be way fewer trying hard to direct even 1% of the budget of the other billionaires. There is only one mention of Amancio Ortega on the EA Forum, even though he is the 9th richest person with a net worth of $124b. And there is barely any mention of Bernard Arnault or Larry Ellison or Steve Ballmer.

These four alone represent over $600b in wealth that could at least be spent on marginally better causes. And in fact, they’ve already collectively spent billions on philanthropic causes. Marginally improving even a small portion of these donations could be huge.

Effective Everything?

EA-relevant organizations do around $1b/year in giving, but the world collectively does around $1t. There’s a tremendous neglected opportunity to improve the effectiveness of the world’s charities, even without making it all the way to truly optimal causes.

It feels, in some sense, against EA principles, but there should be lists of "the most effective charities, given that you can only give to X country" or "given that you're only interested in X cause". Beyond analysis, it feels like there is a huge amount of room for direct engagement, and for trying to work at some of the world's largest non-EA foundations.

DAFgiving360™ does not sound like an EA nonprofit. It works with Charles Schwab & Co., which is not a very EA organization. And yet they have done $44 billion in recommendations, will do a lot more in the future, and are hiring a senior manager for charitable consulting. This kind of job does not currently end up on the 80,000 Hours Job Board, but I believe it really ought to. And we ought to think harder about "marginal reform of legacy non-EA institutions" as an important skill set.

[1] An obvious explanation for the lack of visibility would be that these people don’t want to identify as EAs, because it would alienate "normie" donors. This is possible, but I’m still suspicious that I’ve literally never heard of anyone in EA taking this path to impact.

I agree that it could be useful but I don't think it's as neglected as you think.

Anecdotally I know quite a few people in your second category, people in less 'EA' branded areas/orgs (although a lot will have more impact). There are several orgs looking into advising donors that haven't heard/aren't interested in EA (Giving Green, Longview, Generation Pledge, Ellis Impact, Founders Pledge, etc).

I think some may not be seen in EA spaces as much because of PR concerns but I think the main reason is that they are focused on their target audiences or mainly just interact with others in the effective giving ecosystem.

Also it's not quite the forum, but I did link to a blog listing Azim Premji on this global health landscape post (not that you would ever be expected to know that).

While this is true, I think this comment somewhat misunderstands the point of the post, or at least doesn't engage with the most promising interpretation of it.

I work at Founders Pledge, and I do think it is true that the correct function of an org like FP is (speaking roughly) to move money that is not effectiveness-focused from "bad stuff" to "good stuff." Over the years, FP has had many conversations about the extent to which we want to encourage "better" giving as opposed to "best" giving. I think we've basically cohered on the view that focusing on "better" giving (within e.g. American education or arts giving or whatever) is a kind of value drift for FP that would reduce our impact over the medium- and long-term. We try to move money to the best stuff, not to better stuff.

But I still think this is a promising frame that deserves some dedicated attention.

Imagine two interventions, neither of which is cost-effective by "EA lights." Intervention A can save a life for $100,000. Intervention B can save a life for $1 million. Enormous amounts of money are spent on Intervention B, which is popular among normie donors for reasons that are unrelated to effectiveness. If you can shift $1 billion from Intervention B to Intervention A, you save 9,000 lives. Thus working to shift this money is cost-effective — competitive with GiveWell top charities in expectation — if it costs less than $45 million to do so (~$5,000 per life).

More generally, shifting money by ~0.1 GDx is cost-effective even if you're shifting money far below the cost-effectiveness bar as long as you have 100x leverage over the money you are shifting.
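The break-even arithmetic in this comment can be sketched in a few lines of Python. The costs per life and the ~$5,000 benchmark are the illustrative figures from the comment; the function names are hypothetical and exist only for this example.

```python
def extra_lives_from_shift(amount: float, cost_per_life_from: float, cost_per_life_to: float) -> float:
    """Additional lives saved by moving `amount` from one intervention to a cheaper one."""
    return amount / cost_per_life_to - amount / cost_per_life_from


def break_even_budget(extra_lives: float, benchmark_cost_per_life: float) -> float:
    """Most you could spend on the shifting effort while still matching the benchmark."""
    return extra_lives * benchmark_cost_per_life


shifted = 1_000_000_000        # $1 billion moved from Intervention B to Intervention A
cost_b = 1_000_000             # Intervention B: $1 million per life
cost_a = 100_000               # Intervention A: $100,000 per life
benchmark = 5_000              # rough GiveWell top-charity cost per life used in the comment

extra_lives = extra_lives_from_shift(shifted, cost_b, cost_a)
max_budget = break_even_budget(extra_lives, benchmark)

print(f"Extra lives saved: {extra_lives:,.0f}")
print(f"Break-even budget for the shifting effort: ${max_budget:,.0f}")
```

Under these assumptions, any effort that moves the $1 billion for less than the break-even budget beats the benchmark, which is the leverage point the comment is making.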

I don't think opportunities like this are super easy to find, but it seems plausible to me that the following avenues will contain promising options:

I agree that there is impact to be found here, but the framing in the main post seemed to not consider the effective giving ecosystem as it currently is.

I'm still saying that this area is neglected. I'm trying to give more context, rather than telling people to not work on it. In my own advising I've recommended a lot of people to consider these wider areas.

(Disclaimer: I'm not an expert, but I have a DAF).

DAFgiving360 until 2024 was known as Schwab Charitable. It's the 501(c)(3) that receives contributions to Schwab donor-advised funds. It's analogous to Fidelity Charitable and Vanguard Charitable. My understanding is that the "recommendations" referred to above are the recommendations of individual donors to DAFgiving360 about how to donate the funds they've contributed, not the other way around.

From what I've heard and from my own experience, DAFgiving360 et al. approve pretty much any request to grant to a 501(c)(3). (If DAFgiving360 started being more opinionated and rejecting a significant number of donation recommendations, people would shift new funds to another DAF provider.) And I haven't seen them do anything that would provide levers for influencing donations, such as advising clients on where to donate or publishing lists of recommended charities.

DAFgiving360 has a giving guide. It's very much "not EA," but I doubt it's especially influential. (I wasn't aware of its existence till today).

Ah okay, thanks for explaining this. That does meaningfully affect my understanding of this particular role.

Thanks for sharing this post. I think it deserves a lot more thought and discussion. When I've asked this question in the past I've been encouraged to not go down this rabbit hole because it's not effective, and I disagree.

I run a giving pledge and often encounter people who want to give with their heart. I never want to lead with the sentiment “The causes you care about aren’t an effective use of your resources.” That's just polarizing. So I'd rather meet them in the middle.

It makes me wonder if there’s a “loss leader” effect here: If people are encouraged to explore effectiveness in a cause area they care about (maybe a "normie" area like social justice or supporting victims of abuse), they might discover that either there’s not enough evidence of impact or that real change requires substantial research. That, in turn, could help them understand why effective organizations matter. It seems like an important part of the mindset shift.

I also notice organizations like GiveDirectly sometimes fund very visible causes, like emergency relief for widely publicized disasters (e.g., the LA wildfires). Clearly, that goes against the EA focus on highly-neglected problems. But perhaps it works as a kind of entry point: people who care about these high-profile issues can encounter GiveDirectly and then learn about other, highly effective but less-known opportunities. At least in the emergency relief space, we know GiveDirectly is doing effective work, which might help bridge that gap?

Here's what else I'd want to know more about:

Update: I found this questionnaire from GiveWell to be very helpful

Do-It-Yourself Charity Evaluation Questions

https://www.givewell.org/charity-evaluation-questions?utm_source=chatgpt.com

I really hope this is the sort of thing that can be enabled by the (relatively recent) shift to principles-first EA as a community outreach organising mode.

Previously, EA was a union of three(ish) cause areas sharing the same infrastructure, and getting someone "into EA" was basically about persuading them on one or more of the cause areas. I think this is a mistake.

I really think we have the opportunity to make EA more of an educational program on how to evaluate impact and optimise for effectiveness given the resources you have, as well as the provision of such resources insofar as they are copyable without marginal cost. And that means that this kind of stuff is included in the movement.

I couldn't agree more. I anticipate there is information value in including more moderate perspectives too. EA is a small bubble, and interacting with the rest of the world should help us to better understand how to communicate (especially with policy makers who have to consider the opinions of the electorate, a.k.a. "normal people").

I've signed up as a One for the World Community Ambassador to promote more effective giving at the 1% level, because I believe in EA's fringe!

Robin Hanson's post Marginal Charity seems relevant, even though it's a distinct idea.

You might like "A Model Estimating the Value of Research Influencing Funders" which makes a similar point, but quantitatively

Ah wow, yeah super relevant. Thanks for sharing!

In my view, there's a further consideration in favour of your suggestion: by making non-optimal charities/capital marginally more effective, we're spreading the "effectiveness meme".

By opening people's eyes to the magic of improving effectiveness, we're putting them on a track towards further effectiveness improvements. Once they see how they can marginally improve effectiveness, they might ask themselves "Why not go further, and improve effectiveness ever more" --- and this is one route into an EA-style mindset.

Broadly agree that applying EA principles towards other cause areas would be great, especially for areas that are already intuitively popular and have a lot of money behind them (eg climate change, education). One could imagine a consultancy or research org that specializes as the "GiveWell of X".

Influencing where other billionaires give also seems great. My understanding is that this is Longview's remit, but I don't think they've succeeded at moving giant amounts yet, and there's a lot of room for others to try similar things. It might be harder to advise billionaires who already have a foundation (eg Bill Gates), since their foundations see it as their role to decide where money should go; but doing work to catch the eye of newly minted billionaires might be a viable strategy, similar to how GiveWell found a Dustin.

I agree with the overall statement of this post. Regarding a "GiveWell of X" type of organization, I believe it would have to function quite differently, ideally only working on-demand instead of doing broadly aimed research, for the following 2 reasons:

This is not necessarily what you are saying, but I think local EA groups focusing on local outcomes is somewhat reasonable. It would possibly make the group feel like they had more purpose outside of discussion, could beta test and be a proving ground for people on a smaller scale, and could build reputation in the city the group is in.

I'm glad you shared this. I wrote something 18 months ago that was trying to get at a similar point, but then got distracted by delivering on contracts and applying for jobs, so never followed up on it.

Having spent most of my career working with and consulting for funders like 'Donor B', I couldn't agree more.

It also prompts me to reflect on a conversation I had at EAG this year, in which a prominent EA shared that they were considering applying to be CEO of a well-resourced non-EA animal welfare organisation. I'm not sure if they ended up applying, or how that went, but I suspect that this kind of thing might also help on the path to (marginally more) 'effective everything'.

Thank you for a very thoughtful forum post! I had an idea a few years ago that was kind of like this: That the United Nations should use the same concept as Founders Pledge to get funding. I was thinking that because they have so many collaborations, connections and volunteers, it would be quite easy for the UN to get great amounts of funding by letting people take the same kind of pledge as Founders Pledge use (giving X % to charity when you sell your business).

Agree with your assessment of the DAF360 job. I also generally agree with your overall points.

I've said (in like 5 comments now) that a very high impact opportunity is to influence grantmakers of large private foundations.

Great post! Thanks for writing it.

I believe many interventions in this area could be cost-effective. However, given the vastness of the space, each project’s cost-effectiveness and expected value should be carefully assessed and compared against the most effective charities in EA.

I wanted to underline this because this is also a quite “sweet” idea, where you spread EA ideas with the world, and due to the sweetness of the idea, sometimes even the EA community forgets about the fat-tailed nature of impact. The bar for effectiveness we have should be kept the same, and if the math doesn't math, we should stop doing this type of intervention.

I strongly agree! Improving the cost-effectiveness (and cost-efficiency) of non-EA resources seems underexplored in EA discussions. I'd argue this applies to talent, not just funding.

In mainstream fields like global development and climate change, there are many talented, impact-driven professionals who don't know EA or wouldn't join the EA community (perhaps disagreeing with cause-neutrality or the utilitarian foundations). Yet many of these professionals would be quite willing to put in a lot of effort and energy into high-impact projects if exposed to important agendas and projects they're well-positioned to tackle. There could be significant value in shaping agendas and channeling these professionals toward more impactful (not necessarily "most impactful" by EA standards) work within their existing domains.

I should note this point is less relevant/salient for AI Safety field-building, where there already seem to be more pathways for non-EA people and broader engagement beyond the EA-aligned community.

On an additional note: Rethink Priorities' A Model Estimating the Value of Research Influencing Funders report had a relevant point:

"Moving some funders from an overall lower cost effectiveness to a still relatively low or middling level of cost effectiveness can be highly competitive with, and, in some cases, more effective than working with highly cost-effective funders."

Discussed on the forum here