Hi, have you been rejected from all the 80K listed EA jobs you’ve applied for? It sucks, right? Welcome to the club. What might be comforting is that you (and I) are not alone. EA Job listings are extremely competitive, and in the classic EA career path, you just get rejected over and over. Many others have written about their rejection experience, here, here, and here.

Even if it is quite normal for very smart, hardworking, proactive, and highly motivated EAs to get rejected from high-impact positions, it still sucks.

It sucks because we sincerely want to make the world a radically better place. We’ve read everything, planned accordingly, gone through fellowships, rejected other options, and worked very hard just to get the following message:

"Thank you for your interest in [Insert EA Org Name]... we have decided to move forward with other candidates for this role... we're unfortunately unable to provide individual feedback...”

It’s also a hit to your self-esteem. People who get rejected from EA jobs are used to performing very well in the non-EA world. They get into extremely competitive programs in top universities, are at the top of their class, and are generally praised within their family and social circle for their performance. After getting used to this, receiving 20 rejections for something you deeply cared about and worked really hard for is a blow to your ego.

This may also lead to disillusionment with the overall EA community. We have been told that we can create an incredible impact. We’ve believed in it and done all we can. Now we are unemployed with very weird niche skills and knowledge that we can’t use elsewhere.

You also get frustrated with EA. How is the community not seeing my value? In all other places, I’m at the top, I’m selected in the most competitive positions, highly regarded, respected, and appreciated. How does EA not see how awesome I am?

With the combination of all the different feelings above, I’ve seen many lose hope of creating an incredible impact with their career, saying something like: “Well, I’ve tried it, and I can’t seem to be able to create an impact with my career.” They then slowly engage less and less with the community.

As a person who has also been rejected repeatedly, I feel the frustration. But I think the statement above is just wrong. You can create a massive impact with your career. Getting rejected from 30 positions is just very weak evidence for your “inability to create impact with your career”.

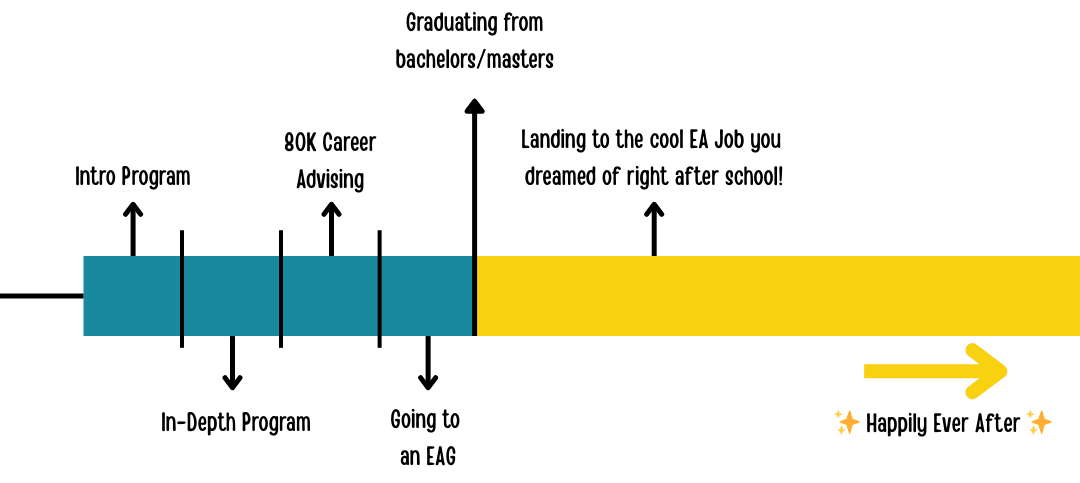

We generally see our path to impact like this:

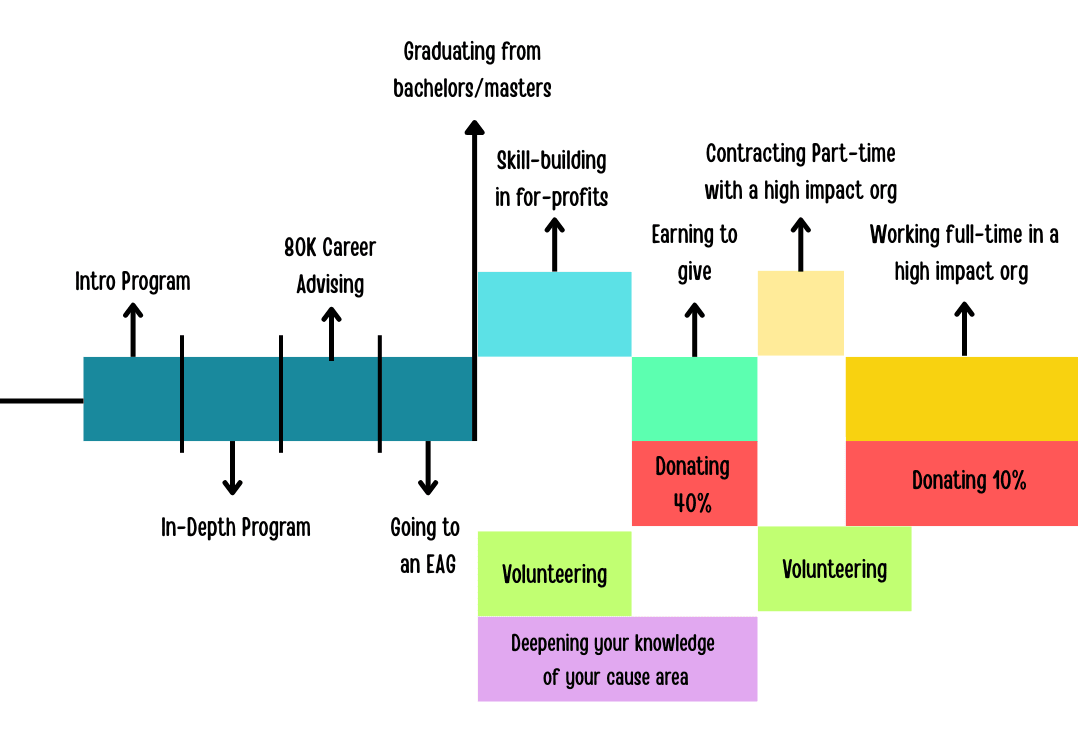

However, it is very unlikely, or even ODD, for this to happen. I think the path to impact for most people in the EA community will look more like this:

Most of our career paths will look like this since there are very few job listings, extreme competition, and a lack of funding.

One can have many reasons not to aim for a career to create good: not aligning with the core EA ideas, having other priorities etc. But abandoning this goal simply because of 30+ rejections is just a poor reason to give up.

You typically need 100-200+ applications to land a job. [1] These figures are for the job market as a whole; now add the EA space's competitiveness. Not finding a position is less about you and more about the number of jobs and early-career people in EA. This is normal. You are at the beginning of your impact journey, and there will be excellent opportunities for you in the near future.

Take Brendan, for example:

He discovered EA and realized that he was strongly aligned with its principles. However, he couldn’t find a position in EA in his journalism/comms field. So, for the next 3-4 years, he took different roles in both the public and private sectors for skill-building. During this time, he also stayed connected with the community by volunteering for high-impact organizations and writing a blog on effective giving. His skill-building and continued engagement paid off. He is now the Communications Lead at the School of Moral Ambition.

Or Richie:

After earning his PhD in evolutionary psychology, Richie was turned down for several roles in the EA space and realised he needed new skills to make a contribution. Realising there is a growing need for data science across most organisations, he retrained in data science and worked for three years as an AI consultant in for-profit companies. At EAGx Cambridge 2023, he met his now-boss Chris at an alternative proteins event, which led to a researcher role at Bryant Research, a social science think tank serving animal advocacy and alternative proteins. This year, he was promoted to director of research.

I think we are very lucky to have them. I’m happy that they didn’t give up on EA 5 years ago when they couldn’t find a position from the 80K job listings. Similarly, it would be a pity if we lost you. With more experience, you’ll be needed more. Yes, it is a bit frustrating to “wait” to have an impact, but in this context, we already couldn't find our cool ideal EA job, and 5 years will pass whether we want it or not. So why not optimize that and maximize the chance to have an impact in 5 years? Why abandon this project just because you lost some years? Yes, you won’t be doing high-impact work for 80,000 hours, but you can still use 10,000 hours (5 years) well for training and later do the most good you can with the remaining 70,000 hours.

A note on AI timelines

In the current stage of LLMs, one may reasonably have short timelines for AGI coming in the next 3-5 years, as given here, here, here, and here. It might not make sense to optimize skilling up for a couple of years because AGI will already be here, and most of the impact opportunity will be lost. So maybe if I can’t find a highly impactful job right now, I never will, because we’ll run out of time.

I believe there is significant evidence that AGI could be invented in the next few years. However, even the biggest proponents of this idea caveat that this scenario is around 50% likely, or much less. It still makes sense to allocate a lot of resources to this area due to the stakes and possible consequences. Yes, the efforts and careers spent on short timelines are unlikely to create any impact. But it still makes sense to work in the area because it is a good bet to take.

This is the case for all interventions. There’s no guarantee that any career will create any impact. Not every 4,000 dollars donated to GiveWell saves a life, and not all cage-free campaigns are successful. However, doing all of them makes sense because they are all “good bets to take”. Similarly, skilling up for longer timelines is a good bet to take.

Let's imagine a scenario where 5 years pass and we don’t have AGI in 2030. Even the loudest advocates of short timelines wouldn’t be so surprised:

We should, as a civilization, operate under the assumption that transformative AI will arrive 10-40 years from now, with a wide range for error in either direction. [2]- Scott Alexander

Either we hit AI that can cause transformative effects by ~2030: AI progress continues or even accelerates, and we probably enter a period of explosive change. Or progress will slow. I roughly think of these scenarios as 50:50 — though I can vary between 30% and 80% depending on the day. [3]- Benjamin Todd

And for this very likely scenario, we will definitely need intelligent, altruistic, and experienced EAs to take positions in high-impact organizations.

So you taking 5 years to skill up is a very good bet to take. Again it's not 100% certain and maybe you won’t have any impact, just like all other interventions in EA.

Time to go forward

I know it is very disappointing to get rejected over and over. It makes you feel like your value is not seen, and all your work was wasted, but that is not true. I know that you sincerely want to create an impact. I know that you worked so hard for it, and I’m thankful that you are in this community.

I also know that these rejections are not the end of your career.

You can still earn to give, found to give, take on EA projects, write in the forum, host events, give feedback, inspire friends and family to donate… and, more importantly, develop skills and experience in the outside world and bring them back to EA when we need them. Just like Brendan and Richie, we’ll be lucky to have you when we have you.

Let's get frustrated and angry with EA. Let's mourn that our cool EA career goals are currently not in reach... Then shake it off and continue on your journey.

You still have 70,000 hours

Don’t waste them

I would also guess that the overwhelming majority (>95%) of highly impactful jobs are not at explicitly EA-aligned organizations, just because only a minuscule fraction of all jobs are at EA orgs. It can be harder to identify highly impactful roles outside of these specific orgs, but it's worth trying to do this, especially if you've faced a lot of rejection from EA orgs.

Great post! I think once you've identified a pressing problem in the world, your highest goal should not be "get a full time job in that field." It should be "get exceptionally good at the skills for solving the problem." That's what actually matters. Insofar as you care about (AI risk, animal suffering, global health, ___), you should see a job as one of many instrumental ways to get good at solving the problem, not "getting good enough" as instrumental to getting a job. Which one is your north star? It's easier said than done, but getting so good they can't ignore you is still underrated advice, and can help you shift your focus to more medium term skill building trajectories.

As you describe in the post, that can take many forms short of "full time capital E capital A work right away." Here are some slides on this theme (and trying to concretize strategies) from a recent talk I gave if it's helpful for anyone. And the 80,000 Hours career guide has many career capital tips.

And on AI timelines, people should probably think more seriously about opportunity costs when weighing between specific options, but I agree it shouldn't be their only factor or close off longer term skill building priorities. We should maximize our impact across the distribution of plausible outcomes- if AGI comes tomorrow, I'm probably not in a position to help directly anyway.

Great post! This is good advice. Donating while building skills and volunteering still allows you to have tons of impact.

The graph represents well the career path I followed:

I think that's a good approach by default!

I think some of the frustration you describe comes from all-or-nothing thinking about having a positive impact. This type of thinking is probably more common among EA people, especially those with the goal of "doing the most good you possibly can".

As lilly mentioned, the majority of impactful jobs are probably not at EA-aligned organizations. Just because you're getting rejected by all of the jobs posted by 80,000 Hours doesn't mean you can't get an impactful job elsewhere.

Also, fierce competition and repeated rejection are not unique to EA jobs. Think of all the people trying to make it as actors, musicians, writers, or in basically any creative industry. Think of all the people trying to make a lot of money without the goal of earning to give. Even in less competitive industries, getting rejected by the first 30 employers seems pretty normal to me. Perhaps the only difference for EA-aligned organizations is that more of the applicants have perfectionistic tendencies.

I think there might be some missing links:

> In the current stage of LLMs, one may reasonably have short timelines for AGI coming in the next 3-5 years, as given here. Here, here, here, and here.

Thanks for flagging this. Someone else DMed about this, but it worked on my devices and a friend's. What device and browser are you using?

I'm using Chrome on Windows desktop.

Strangely enough, the links here work:

> Many others have written about their rejection experience, here, here, and here.

Forwarding this to the tech team - weird!

Still seeing this problem on iOS Safari.

Are you sure you were looking at the right "here, here and here"? I just checked the raw post HTML in the forum database and there are no links in that sentence:

Thank you for sharing your thoughts on this - I *also* have been rejected frequently from EA jobs and I quietly follow/read the discussions on this topic (of which, as you mention, there are many). I fully agree the ‘dream’ timeline is a fantasy but (and I fully appreciate the supportive and empathic sentiment) I would be genuinely surprised if anyone really had that fantasy, as not only in EA but in the job market in general such paths are virtually non-existent. Most careers are very, very squiggly! Not even the most structured, old-fashioned career ladders (lawyer, doctor, accountant) are as linear as that, and they haven’t been for a while.

My one observation is that the overall EA rejects space is so heavily focused on early career people (fair, as it’s the majority of applicants) that it misses what the intense rejection rate means for folks like me, who already have 9+ years of work experience (in non-EA jobs). I support the spirit and lesson of being rejected as just part of the process, and that there are many ways to stay engaged with EA. Although….

There is truth in the “go out in the world and come back”, but, honestly, for us mid-careers getting an EA aligned job can also be extremely hard or ~impossible, and things like earn to give would mean pivoting in very weird ways (for example, if you have been a fundraiser (or X role) for big/non-EA charities or a UN body for 14 years, suddenly transitioning out of a low paying industry and becoming a high-salary person in the corporate ladder is not easy).

You mention the extreme competitiveness - yes! It can get a bit demoralising, but it’s also fascinating how these things work - for someone early in a PhD who has been essentially headhunted by 80K Hours marketing (1) or some university EA group, someone with a lot of front line experience may seem like a “threat” as, in theory, they’d have a lot of that desirable career capital and skills; but for those of us mid-career with robust skills from the ‘normal’ world, all of the youngsters sharpened by attending EAGx since they were teens and reading all the longtermism books... they own the EA networks way more than us, and are the ones that feel like the real threat.

TBH I have not done any research (stalking) to verify who got the positions that I have personally been rejected from (I’m sure they are awesome people and doing a great job, which, as an EA heart, is what I want), but from observations of other orgs my gut feeling is that people who are more EA-native (but have less career capital from the ‘normal’ world) (2) outweigh those who were trained in the non-EA job market. (3)

I don’t mean to be a negative Nelly but I think it’s worth opening up this end of the discussion on how decoupling yourself from the EA world, at least professionally speaking, can happen, and it’s not just that you were demoralised by multiple rejections!

(1) I do not mean to be pejorative, 80K Hours marketing and comms are doing great at casting a wide net to inspire people to have impactful careers. This is great news and they should absolutely keep doing it!

(2) With this I mean, less traditional work experience in paid positions. I know a lot of EAs start very early while in college/university doing a ton of volunteering that absolutely counts.

(3) This is a personal take based on nothing but feels and casual monitoring of EA orgs news and ‘team’ pages

PS. Sorry for the weird formatting with the footnotes - having a device glitch!

NOTE: I was wrong, not LLM written at all, am retracting the content because I think it's probably unhelpful on reflection.

Just wanted to flag that I feel conflicted about this post. I really like the sentiment, the visualisations and the clear communication style. Thank you!

But it feels like it's written by an LLM and I don't like the feeling/vibes of that. I'm not sure whether it's right, though, for me to discriminate based on my "LLM-ness" vibes as those vibes are a bit hard to rationally justify. My vibes could also be flat wrong and there could actually be little to no LLM use here at all - my LLM vibes are often wrong (em-dash my own). It also might be just fine for people to write mostly using LLMs, especially if it helps them get their thoughts out there.

I've weak upvoted the post but I'm unsure what a good/fair/reasonable Karma-approach is here.

FWIW the piece didn't strike me as having the hallmarks of LLM-written prose, just as a counter-anecdote :)

Thanks for the comment! Which parts sounded the most like an LLM? I'm surprised because I only used AI (Grammarly) to catch grammar/spelling mistakes.

Hey man, sorry about that, I'm clearly wrong and have retracted because I think it's unhelpful and I'm off the mark. It's hard to say exactly why it felt that way (my bad). The only thing I can say for sure is the last couple of lines felt quite like a punchy LLM ending, and maybe the upbeat tone (which anyone could have). I think it probably doesn't actually have LLM hallmarks.

As someone who has been nothing but a failure my whole life, I can't relate to this narrative of being a top-achiever outside of EA. If that is the base expectation, I think I have essentially no hope.

I like and agree with this post a lot. I just want to push back on this part:

> You typically need 100-200+ applications to land a job.

These numbers are crazy. It may be that many people make so many applications, but they certainly shouldn't: with so many applications, you can't put in the effort needed to have a really good shot, and you're probably applying for many positions where you have next to no chance anyway. Not to mention that with so many rejections, I would be highly suspicious of whoever did end up hiring me (surely there's some bad reason why they didn't hire anyone else)!

So: better to apply where you have a real shot, make fewer high-quality applications, and end up in a position where you can take an offer from an employer you feel confident you want to work for. Ideally, you might even be able to negotiate.

Also: I haven't looked into these numbers but I suspect they might also be inflated by job-searching requirements in many unemployment insurance schemes.

Thanks for the great post, Güney!

Side note. The link to your LinkedIn profile on your EA Forum page is broken.

I find it helpful to think about the (expected) benefits and costs to decide whether to apply to jobs. One can estimate the benefits of completing the 1st stage from "probability of getting an offer conditional on completing the 1st stage"*"value in $ from getting an offer", and a lower (upper) bound for the cost from "value of my time in $/h"*"hours to complete the 1st stage (all the stages)"[1]. It is only worth applying if the benefits are larger than the lower bound for the cost. For example, if one thinks there is a 1 % chance of getting an offer conditional on completing the 1st stage, considers an offer to be worth 20 k$[2], values one's time at 20 $/h, and estimates it will take 1 h to complete the 1st stage, and 40 h to complete all the stages, the benefits would be 200 $ (= 0.01*20*10^3), and the cost between 20 $ (= 20*1) and 800 $ (= 20*40). So the benefits would be 0.25 (= 200/800) to 10 (= 200/20) times the cost, and therefore it seems worth applying.
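As a rough illustration of the estimate above, here is a minimal Python sketch. The function name `application_value` and its parameter names are my own, purely hypothetical, and the inputs are just the example figures from this comment (1 % chance, 20 k$ offer value, 20 $/h, 1 h for the 1st stage, 40 h for all stages).

```python
def application_value(p_offer, offer_value, hourly_value,
                      hours_first_stage, hours_all_stages):
    """Expected benefit of applying, with lower and upper bounds for the cost.

    All names here are illustrative; values are in $ and hours.
    """
    benefit = p_offer * offer_value                 # expected value of applying
    cost_low = hourly_value * hours_first_stage     # only the 1st stage is completed
    cost_high = hourly_value * hours_all_stages     # every stage is completed
    return {
        "benefit": benefit,
        "cost_low": cost_low,
        "cost_high": cost_high,
        "ratio_low": benefit / cost_high,           # benefit relative to the upper cost bound
        "ratio_high": benefit / cost_low,           # benefit relative to the lower cost bound
        "worth_applying": benefit > cost_low,       # the decision rule stated above
    }


# Example figures from the comment: 1 % chance, 20 k$ offer, 20 $/h, 1 h and 40 h.
result = application_value(0.01, 20_000, 20, 1, 40)
for key, value in result.items():
    print(f"{key}: {value}")
```

The decision rule only compares the benefit with the lower bound for the cost, so a low `ratio_low` does not by itself argue against applying; one can always stop after the 1st stage.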

One can estimate the probability of getting an offer from "offers for similar roles"/"applications for similar roles". If one has never got an offer for a similar role, and has progressed at most to the Nth last stage, the probability of getting an offer can be calculated from ("probability of progressing to the Nth last stage" = "number of times one has progressed to the Nth last stage applying for similar roles"/"applications for similar roles")*("probability of getting an offer conditional on completing the Nth last stage" = 1/"expected number of candidates in the Nth last stage"[3]).

[1] This is a lower bound because one would still need more time to complete subsequent stages.

[2] For example, 5 months until getting a new job with 2 k$/month more of net earnings relative to the current job.

[3] Even better, "probability of getting an offer conditional on completing the Nth last stage" = "expected value (E) of the reciprocal of the best guess distribution for the number of candidates in the Nth last stage", as E(1/X) is not equal to 1/E(X), but I do not think it is worth being this rigorous.
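To make the probability estimate and the E(1/X) point concrete, here is a small Python sketch using exact fractions. Every number in it is made up for illustration (2 final-stage appearances in 20 applications, and a final stage with either 2 or 6 candidates, equally likely), and the function name `p_offer` is hypothetical.

```python
from fractions import Fraction


def p_offer(times_reached_stage, applications, expected_candidates):
    """P(offer) = P(reaching the Nth last stage) * 1 / expected candidates there."""
    p_reach = Fraction(times_reached_stage, applications)
    return p_reach * Fraction(1, expected_candidates)


# Hypothetical track record: reached the last stage 2 times in 20 applications,
# and expects 4 candidates in that stage.
estimate = p_offer(2, 20, 4)
print("P(offer) estimate:", estimate)

# Hypothetical best-guess distribution for the number of candidates in that stage.
candidates = {2: Fraction(1, 2), 6: Fraction(1, 2)}

mean_candidates = sum(n * p for n, p in candidates.items())   # E(X)
reciprocal_of_mean = 1 / mean_candidates                      # 1/E(X)
mean_of_reciprocal = sum(p / n for n, p in candidates.items())  # E(1/X)

print("1/E(X):", reciprocal_of_mean)
print("E(1/X):", mean_of_reciprocal)
```

With these made-up numbers E(1/X) comes out larger than 1/E(X), so the simpler 1/"expected number of candidates" shortcut understates the chance somewhat. How much this matters depends on how spread out one's guess for the number of candidates is.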

I've been rejected by over 4000 companies from all around the world over the past 10 years. For many of these years, I attempted to find a job at EA or its family organizations. I also attempted multiple times through all these years to create robust plans and apply for EA grants (research plans and business plans where each took substantial time and effort to shape - as none of them was trivial or random - but they were original research directly relevant and personalized to EA's principles, organizational structure, and directives - months and months of preparation), and this especially was my greatest disappointment! I even got to a stage where I said some very hard truths directly to EA's CEO through email and to another person who evaluated grant proposals - explaining how the directives have deviated from the principles. How the principle of Effective Altruism became a brand and stopped being effective and altruistic.

Favoritism and systemic racism, which are covered by insensitive and in my view unethical typicalities such as responses like "try again next time", are societal issues that we have faced for millennia. EA is not to blame for their origins - but the people who are perpetuating them on accord of EA are! The contradiction is that EA exists to alleviate such issues. People are the source of decision and we are all to blame for the state that our society has been, is and will be. Recruitment is a mechanism which is gambling with people's lives and societal wellbeing by design - and as a sociotechnical function it sits at the very core of ALL the issues that EA attempts to address. That is why the Centre for Effective Altruism eventually became a money and job distribution mechanism - but on the path to finding the source, they seem to have lost their purpose.

Poverty is at the heart of all Crises we face globally. But it is a poverty of mind first.

Where I am going with this. There is a huge issue, that this essay barely touched. Still, I am thankful for bringing the topic up and being honest about it, as it gave me the strength to be honest about my truth as well. Business as usual won't solve the issue. Many of us don't have 70k hours, and for the majority of us, even 24 hours are unbearable!!! So I will use the word "pray" here - but without religious context. I pray this: May people who have the power to choose better, do so!

We all can contribute, stop excluding us! Poverty and unemployment are not a matter of financial incapacity. There is money for all of us! Structure your business plans properly!

Respectfully,

Basil.

Thanks for the post. I am also currently sending out applications and encouraging messages like this are highly appreciated right now.