TL;DR

- As some have already argued, the EA movement would be more effective in convincing people to take existential risks seriously by focusing on how these risks will kill them and everyone they know, rather than on how they need to care about future people

- Trying to prevent humanity from going extinct does not match people’s commonsense definition of altruism

- This mismatch causes EA to filter out two groups of people: 1) People who are motivated to prevent existential risks for reasons other than caring about future people; 2) Altruistically motivated people who want to help those less fortunate, but are repelled by EA’s focus on longtermism

- We need an existential risk prevention movement that people can join without having to rethink their moral ideas to include future people and we need an effective altruism movement that people can join without being told that the most altruistic endeavor is to try to minimize existential risks

Addressing existential risk is not an altruistic endeavor

In what is currently the fourth highest-voted EA forum post of all time, Scott Alexander proposes that EA could talk about existential risk without first bringing up the philosophical ideas of longtermism.

If you're under ~50, unaligned AI might kill you and everyone you know. Not your great-great-(...)-great-grandchildren in the year 30,000 AD. Not even your children. You and everyone you know. As a pitch to get people to care about something, this is a pretty strong one.

But right now, a lot of EA discussion about this goes through an argument that starts with "did you know you might want to assign your descendants in the year 30,000 AD exactly equal moral value to yourself? Did you know that maybe you should care about their problems exactly as much as you care about global warming and other problems happening today?"

Regardless of whether these statements are true, or whether you could eventually convince someone of them, they're not the most efficient way to make people concerned about something which will also, in the short term, kill them and everyone they know.

The same argument applies to other long-termist priorities, like biosecurity and nuclear weapons. Well-known ideas like "the hinge of history", "the most important century" and "the precipice" all point to the idea that existential risk is concentrated in the relatively near future - probably before 2100.

The average biosecurity project being funded by Long-Term Future Fund or FTX Future Fund is aimed at preventing pandemics in the next 10 or 30 years. The average nuclear containment project is aimed at preventing nuclear wars in the next 10 to 30 years. One reason all of these projects are good is that they will prevent humanity from being wiped out, leading to a flourishing long-term future. But another reason they're good is that if there's a pandemic or nuclear war 10 or 30 years from now, it might kill you and everyone you know.

I agree with Scott here. Based on the reaction on the forum, a lot of others do as well. So, let’s read that last sentence again: “if there's a pandemic or nuclear war 10 or 30 years from now, it might kill you and everyone you know”. Notice that this is not an altruistic concern – it is a concern of survival and well-being.

I mean, sure, you could make the case that not wanting the world to end is altruistic because you care about the billions of people currently living and the potential trillions of people who could exist in the future. But chances are, if you’re worried about the world ending, what’s actually driving you is a basic human desire for you and your loved ones to live and flourish.

I share the longtermists’ concerns about bio-risk, unaligned artificial intelligence, nuclear war, and other potential existential risks. I believe these are important cause areas. But if I’m being honest, I don’t worry about these risks because they could prevent trillions of unborn humans from existing. I don’t even really worry about them because they might kill millions or billions of people today. The main reason I worry about them is because I don’t want myself or the people I care about to be harmed.

Robert Wright asks “Concern for generations unborn is laudable and right, but is it really a pre-requisite for saving the world?” For the vast majority of people, the answer is obviously not. But for a movement called effective altruism, the answer is a resounding yes. Because an altruistic movement can’t be about its members wanting themselves and their loved ones to live and flourish – it has to, at least primarily, be about others.

Eli Lifland responds to Scott and others who question the use of the longtermist framework to talk about existential risk, arguing that, by his rough analyses, “without taking into account future people, x-risk interventions are approximately as cost-effective as (a) near-term interventions, such as global health and animal welfare and (b) global catastrophic risk (GCR) interventions, such as reducing risk of nuclear war”. Eli may be right about this but his whole post is predicated on the idea that preventing existential risks should be approached as an altruistic endeavor – which makes sense, because it’s a post on the Effective Altruism forum to be read by people interested in altruism.

But preventing existential risk isn’t only in the purview of altruists. However small or wide one’s circle of concern is, existential risk is of concern to them. Whether someone is totally selfish, cares only for their families, cares only for their communities, cares only for their country, or is as selfless as Peter Singer, existential risks matter to them. EAs might require expanding the circle of concern to future people to justify prioritizing these existential risks, but the rest of the world does not.

(One might argue that this is untrue, that most people outside of the EA movement clearly don’t seem to care or think much about existential risks. But this is a failure of reasoning, not of moral reasoning. There are many who don’t align themselves with effective altruists who highly prioritize addressing existential risks, including Elon Musk, Dominic Cummings, and Zvi Mowshowitz.)

The EA movement shoots itself in the foot by starting its pitches to reduce existential risks with philosophical arguments about why people should care about future people. We need more people taking these cause areas seriously and working on them, and the longtermist pitch is far from the most persuasive one. Bucketing the issue as an altruistic one also prevents what’s really needed to attract lots of talent: high levels of pay without any handwringing about how that’s not how altruism should be. Given the importance of these issues, we can’t afford to filter out everyone who rejects longtermist arguments, can’t be motivated by altruistic considerations for future people, or would only work on these problems as part of high-earning, high-status careers (aka the overwhelming majority of people).

The EA movement then proceeds to shoot itself in the other foot by keeping all the great and revolutionary ideas of the bed net era in the same movement as longtermism. When many who are interested in altruism see EA’s focus on longtermism, they simply don’t recognize it as an altruism-focused movement. This isn’t just because these ideas are new. Some of the critics who once thought that caring about a charity’s effectiveness somehow made the altruism defective eventually came around because ultimately the bed net era really was about how to do altruism better. The ideas of using research and mathematical tools to identify the most effective charities and only giving to them are controversial, but fundamentally they still fall under people’s intuitive definition of what altruism is. This is not the case with things like trying to solve the AI alignment problem or lobbying Congress to prioritize pandemic prevention, which fall well outside that definition.

The consequence of this is to filter out potential EAs who find longtermist ideas too bizarre to be part of the movement. I have met various effective altruists who care about fighting global poverty, and maybe care about improving animal welfare, but who are not sold on longtermism (and are sometimes hostile to portions of it, usually to concerns about AI). In their cases, their appreciation for what they consider to be the good parts of EA outweighed their skepticism of longtermism, and they became part of the movement. It would be very surprising if there weren’t others in a similar boat who, being somewhat more averse to longtermism and somewhat less appreciative of the rest of EA, find that the balance swings the other way and avoid the movement altogether.

Again, EA has always challenged people’s conceptions of how to do altruism, but the pushback to the bed nets era EA was about the concept of altruism being effective and EA’s claims that the question had a right answer. Working or donating to help poor people in the developing world already matched people’s conceptions of altruism very well. The challenge longtermism poses to people is about the concept of altruism itself and how it’s being extended to solve very different problems.

The “effective altruism” name

“As E.A. expanded, it required an umbrella nonprofit with paid staff. They brainstormed names with variants of the words “good” and “maximization,” and settled on the Centre for Effective Altruism.” – The New Yorker in their profile of Will MacAskill

So why did EA shoot itself in both feet like this? It’s not because anyone decided that it would be a great idea to have the same movement both fighting global poverty and preventing existential risk. It happened organically, as a result of how the EA movement evolved.

Will MacAskill lays out how the term “effective altruism” came about in this post. When it was first introduced in 2011, the soon-to-be-named-EA community was still largely the Giving What We Can community. With the founding of 80,000 Hours, the movement began taking its first steps “away from just charity and onto ethical life-optimisation more generally”. But this was still very much the bed nets era, where the focus was on helping people in extreme poverty. Longtermist ideas had not yet taken hold in the movement.

Effective altruism was, I believe, a very good name for the movement as it was then. The movement has undergone huge changes since then, and to reflect these changes it decided to… drop the long form and go by just the acronym? Here’s Matt Yglesias:

The Effective Altruism movement was born out of an effort to persuade people to be more charitable and to think more critically about the cost-effectiveness of different giving opportunities. Effective Altruism is a good name for those ideas. But movements are constellations of people and institutions, not abstract ideas — and over time, the people and institutions operating under the banner of Effective Altruism started getting involved in other things.

My sense is that the relevant people have generally come around to the view that this is confusing and use the acronym “EA,” like how AT&T no longer stands for “American Telephone and Telegraph.”

If Matt is right here, it means that many EAs already do agree that effective altruism is a bad name for what the movement is today. But if they’re trying to remedy it by just dropping to using the acronym EA, they’re not doing a very good job. (It’s also worth noting that the acronym EA means something very different to most people.)

So, what is the remedy? Well, this is an EA criticism post, so actually addressing it is someone else’s job. I can think of two ways to address this.

The first is that the effective altruism movement gets a new name that properly reflects the full scope of what it tries to do today. This doesn’t mean ditching the Effective Altruism name entirely. Think about what Google did in 2015 when it realized that its founding product’s brand name was no longer the right name for the company, given all the non-Google projects and products it was working on: it restructured itself to form a parent company called Alphabet Inc. It didn’t ditch the Google brand name; in fact, Google is still its own company, but it’s a subsidiary of Alphabet, which also owns other companies like DeepMind and Waymo. EA could do something similar.

The second option is that extinction risk prevention be its own movement outside of effective altruism. Think about how the rationality and EA movements coexist: the two have a lot of overlap but they are not the same thing and there are plenty of people who are in one but not the other. There’s also no good umbrella term that encompasses both. Why not have a third extinction risk prevention movement, one that has valuable connections to the other two, but includes people who aren’t interested in moral thought experiments or reading LessWrong, but who are motivated to save the world?

Once the two movements are distinguished, they can each shed the baggage that comes from their current conflation with each other. The effective altruism movement can be an altruism-focused movement that brings in people who want to help the less fortunate (humans or animals) to the best of their abilities, without anyone trying to convince them that protecting the far future is the most altruistic cause area. The extinction risk prevention movement can be a movement that tries to save the world, attracting top talent with money, status and a desire to have a heroic and important job, rather than narrowing itself to people who can be persuaded to care deeply about future people.

Restructuring a movement is hard. It’s messy, it involves a lot of grunt work, it can confuse things in the short term, and the benefits might be unclear. But consider that the EA movement is just 15 or so years old and that the longtermist view is based on the idea that humanity could still be in its infancy and we want to ensure there is still a very long way to go. Do we really want to trap the movement in a framework that no one chose because it’s too awkward and annoying to change it?

Concluding with a personal perspective

I’m a co-founder and organizer of the Effective Altruism chapter at Microsoft. Every year, during Give month, we try to promote the concept of effective altruism at the company. We’ve had various speakers over the years but the rough pitch goes something like this: “We have high-quality evidence to show that some charities are orders of magnitude more effective than others. If you want to do the most good with your money, you should find organizations that are seen and measured to be high impact by reputed organizations like GiveWell. This can and will save lives, something Peter Singer’s drowning child thought experiment shows is arguably a moral obligation.”

That’s where our pitches end for most people because we usually only have an hour to get these new ideas across. If I was to think about how to pitch longtermism afterwards, here’s my impression of how it would come across:

“Alright, so now that I’ve told you how we have a moral obligation to help those in extreme poverty and how EA is a movement that tries to find the best ways to do that, you should know that real effective altruists think global poverty is just a rounding error. We’ve done the math and realized that when you consider all future people, helping people in the third world (you know, the thing we just told you that you should do) is actually not a priority. What you really want to donate to is trying to prevent existential risks. We can’t really measure how impactful charities in this space are (you know, the other thing we just told you that you should do) but it’s so important that even if there’s a small chance it could help, the expected value is higher.”

(I’m being uncharitable to EA and longtermism here, but only to demonstrate how I think I will end up sounding if I pitch longtermism right after our talks introducing people to EA ideas for the first time.)

I love introducing people to the ideas of Peter Singer and how by donating carefully, we can make a difference in the world and save lives. And I introduce these ideas as effective altruism, because that’s what they are. But I sometimes have a fear in the back of my mind that some of the attendees who are intrigued by these ideas are later going to look up effective altruism, get the impression that the movement’s focus is just about existential risks these days, and feel duped. Since EA pitches don’t usually start with longtermist ideas, it can feel like a bait and switch.

I would love to introduce people to the ideas of existential risk separately. The longtermist cause areas are of huge importance, and I think there needs to be a lot of money and activism and work directed at solving these problems. Not because it’s altruistic, even if it is that too, but because we all care about our own well-being.

This means that smart societies and governments should take serious steps to mitigate the existential risks that threaten their citizens. Humanity’s fight against climate change has already shown that it is possible for scientists and activists to make tackling an existential risk a governmental priority, one that bears fruit. That level of widespread seriousness and urgency is needed to tackle the other existential risks that threaten us as well. It can’t just be an altruistic cause area.

Effective Altruists want to effectively help others. I think it makes perfect sense for this to be an umbrella movement that includes a range of different cause areas. (And literally saving the world is obviously a legitimate area of interest for altruists!)

Cause-specific movements are great, but they aren't a replacement for EA as a cause-neutral movement to effectively do good.

The claim isn't that the current framing of all these cause areas as effective altruism doesn't make any sense, but that it's confusing and sub-optimal. According to Matt Yglesias, there are already "relevant people" who agree strongly enough with this that they're trying to drop to just using the acronym EA - but I think that's a poor solution and I hadn't seen those concerns explained in full anywhere.

As multiple recent posts have said, EAs today try to sell the obviously important idea of preventing existential risk using counterintuitive ideas about caring about the far future, which most people won't buy. This is an example of how viewing these cause areas through just the lens of altruism can be damaging to those causes.

And then it damages the global poverty and animal welfare cause areas because many who might be interested in the EA ideas to do good better there get turned off by EA's intense focus on longtermism.

A phrase that I really like to describe longtermism is "altruistic rationality", which covers activities that are a subset of "effective altruism".

The point about global poverty and longtermism being very different causes is a good one, and the idea of these things being more separate is interesting.

That said, I disagree with the idea that working to prevent existential catastrophe within one's own lifetime is selfish rather than altruistic. I suppose it's possible someone could work on x-risk out of purely selfish motivations, but it doesn't make much sense to me.

From a social perspective, people who work on climate change are considered altruistic even if they are doomy on climate change. People who perform activism on behalf of marginalised groups are considered altruistic even if they're part of that marginalised group themselves and thus even more clearly acting in their own self-interest.

From a mathematical perspective, consider AI alignment. What are the chances of me making the difference between "world saved" and "world ends" if I go into this field? Let's call it around one in a million, as a back-of-the-envelope figure. (This assumes AI risk at 10% this century, the AI safety field reducing it by 10%, and my performing 1/10,000th of the field's total output.)

This is still sufficient to save 7,000 lives in expected value, so it seems a worthy bet. By contrast, what if, for some reason, misaligned AI would kill me and only me? Well, now I could devote my entire career to AI alignment and only reduce my chance of death by one micromort - by contrast, my Covid vaccine cost me three micromorts all by itself, and 20 minutes of moderate exercise gives a couple of micromorts back. Thus, working on AI alignment is a really dumb idea if I care only about my own life. I would have to go up to at least 1% (10,000x better odds) to even consider doing this for myself.
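For anyone who wants to check the back-of-the-envelope numbers, here is a minimal sketch of the arithmetic. The three probabilities are the ones stated above; the world population of 7 billion is an assumption inferred from the 7,000 figure, and all variable names are just illustrative.

```python
from fractions import Fraction

# Inputs taken from the comment above.
ai_risk_this_century = Fraction(1, 10)     # 10% chance of AI catastrophe
field_risk_reduction = Fraction(1, 10)     # field reduces that risk by 10%
my_share_of_field = Fraction(1, 10_000)    # my fraction of the field's output

# Assumption: roughly 7 billion people, inferred from the 7,000-lives figure.
world_population = 7_000_000_000

# Chance that my work makes the difference between "saved" and "ends".
p_decisive = ai_risk_this_century * field_risk_reduction * my_share_of_field

# Altruistic view: expected lives saved across everyone alive.
expected_lives_saved = p_decisive * world_population

# Selfish view: reduction in my own risk of death, in micromorts
# (one micromort is a one-in-a-million chance of death).
micromorts_for_me = p_decisive * 1_000_000

# How much better the odds would need to be to reach a 1% chance.
multiplier_to_one_percent = Fraction(1, 100) / p_decisive

print(f"Chance of being decisive: 1 in {1 / p_decisive}")
print(f"Expected lives saved: {expected_lives_saved}")
print(f"Personal risk reduction: {micromorts_for_me} micromort(s)")
print(f"Odds improvement needed for 1%: {multiplier_to_one_percent}x")
```

Changing any of the three inputs scales both results by the same factor, so the gap between the altruistic payoff and the selfish one stays the same size: it is just the number of people affected.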

You make a strong case that trying to convince people to work on existential risks for just their own sakes doesn't make much sense. But promoting a cause area isn't just about getting people to work on them but about getting the public and governments and institutions to take them seriously.

For instance, Will MacAskill talks about ideas like scanning the wastewater for new pathogens and using UVC to sterilize airborne pathogens. But he does this only after trying to sell the reader/listener on caring about the potential trillions of future people. I believe this is a very suboptimal approach: most people will support governments and institutions pursuing these, not for the benefits of future people, but because they're afraid of pathogens and pandemics themselves.

And even when it comes to people who want to work on existential risks, people have a more natural drive to try to save humanity that doesn't require them to buy the philosophical ideas of longtermism first. That is the drive we should leverage to get more people working on these cause areas. It seems to be working well for the fight against climate change after all.

Right, there is still a collective action/public goods/free rider problem. (But many people reflectively don’t think in these terms and use ‘team reasoning’ … and consider cooperating in the prisoner’s dilemma to be self interested rationality.)

Agree with this, with the caveat that the more selfish framing ("I don't want to die or my family to die") seems to be helpfully motivating to some productive AI alignment researchers.

The way I would put it is that, on reflection, it's only rational to work on x-risk for altruistic reasons rather than selfish ones. But if more selfish reasoning helps for day-to-day motivation even if it's irrational, this seems likely okay (see also Dark Arts of Rationality).

William_MacAskill's comment on the Scott Alexander post explains his rationale for leading with longtermism over x-risk. Pasting it below so people don't have to click.

It's a tough question, but leading with x-risk seems like it could turn off a lot of people who have (mostly rightly, up until recently) gotten into the habit of ignoring doomsayers. Longtermism comes at it from a more positive angle, which seems more inspiring to me (x-risk seems to me to be more about acting out of fear). Longtermism is just more interesting as an idea to me as well.

I agree that Effective Altruism and the existential risk prevention movement are not the same thing. Let me use this as an opportunity to trot out my Venn diagrams again. The point is that these communities and ideas overlap but don't necessarily imply each other - you don't have to agree to all of them because you agree with one of them, and there are good people doing good work in all the segments.

Cool diagram! I would suggest rephrasing the Longtermism description to say “We should focus directly on future generations.” As it is, it implies that people only work on animal welfare and global poverty because of moral positions, rather than concerns about tractability, etc.

Who do you think is in the 'Longterm+EA' and 'Xrisk+EA' buckets? As far as I know, even though they may have produced some pieces about those intersections, both Carl and Holden are in the center, and I doubt Will denies that humanity could go extinct or lose its potential either.

Longtermism + EA might include organizations primarily focused on the quality of the long-term future rather than its existence and scope (e.g., CLR, CRS, Sentience Institute), although the notion of existential risk construed broadly is a bit murky and potentially includes these (depending on how much of the reduction in quality threatens “humanity’s potential”)

Yep totally fair point, my examples were about pieces. However, note that the quote you pulled out referred to 'good work in the segments' (though this is quite a squirmy lawyerly point for me to make). Also, interestingly 2019-era Will was a bit more skeptical of xrisk - or at least wrote a piece exploring that view.

I'm a bit wary of naming specific people whose views I know personally but who haven't expressed them publicly, so I'll just give some orgs who mostly work in those two segments, if you don't mind:

If you believe that existential risk is the highest priority cause area by a significant margin - a belief that is only increasing in prominence - then the last thing you'd want to do is interfere with the EA -> existential risk pipeline.

That said, I'm hugely in favour of cause-specific movement building - it solves a lot of problems, such as picking up different people from the EA framing and makes it easier to spend more money running programs, just as you've said.

I don't believe the EA -> existential risk pipeline is the best pipeline to bring in people to work on existential risks. I actually think it's a very suboptimal one and that absent how EA history played out, no one would ever have answered the question of "What's the best way to get people to work on existential risks?" with anything resembling "Let's start them with the ideas of Peter Singer and then convince them that they should include future people in their circle of concern and do the math." Obviously this argument has worked well for longtermist EAs, but it's hard for me to believe that's a more effective approach than appealing to people's basic intuitions about why the world ending would be bad.

That said, I also do think closing this pipeline entirely would be quite bad. Sam Bankman-Fried, after all, seems to have come through that pipeline. But I think the EA <-> rationality pipeline is quite strong despite the two being different movements, and that the same would be true here for a separate existential risk prevention movement as well.

I don't know if it's the best pipeline, but a lot of people have come through this pipeline who were initially skeptical of existential risks. So empirically, it seems to be a more effective pipeline than people might think. I guess one of the advantages is that people only need to resonate with one of the main cause areas to initially get involved and they can shift cause areas over time and I think it's really important to have a pipeline like this.

Yes. I liked the OP, but think it might be better titled/framed as "X-risk should have its own distinct movement (separate from EA)", or "X-risk without EA/Longtermism". There are definitely still hugely active parts of the EA movement that aren't focused on Longtermism or X-risk (Global Poverty and Animal Welfare donations and orgs are still increasing/expanding, despite losing relative "market share").

I agree that in practice x-risk involves different types of work and people than e.g. global poverty or animal welfare. I also agree that there is a danger of x-risk / long-termism cannibalizing the rest of the movement, and this might easily lead to bad-on-net things like effectively trading large amounts of non-x-risk work for very little x-risk / long-termist work (because the x-risk people would have found their work anyway had x-risk been a smaller fraction of the movement, but as a consequence of x-risk preeminence a lot of other people are not sufficiently attracted to even start engaging with EA ideas).

However, I worry about something like intellectual honesty. Effective Altruism, both the term and the concept, are about effective forms of helping other people, and lots of people keep coming to the conclusion that preventing x-risks is one of the best ways of doing so. It seems almost intellectually dishonest to try to cut off or "hide" (in the weak sense of reducing the public salience of) the connection. One of the main strengths of EA is that it keeps pursuing that whole "impartial welfarist good" thing even if it leads to weird places, and I think EA should be open about the fact that it seems weird things follow from trying to do charity rigorously.

I think ideally this looks like global poverty, animal welfare, x-risk, and other cause areas all sitting under the EA umbrella, and engaged EAs in all of these areas being aware that the other causes are also things that people following EA principles have been drawn towards (and therefore prompted to weigh them against each other in their own decisions and cause prioritization). Of course this also requires that one cause area does not monopolize the EA image.

I agree with your concern about the combination seeming incongruous, but I think there are good ways to pitch this while tying them all into core EA ideas, e.g. something like:

I think you also overestimate the cultural congruence between non-x-risk causes like, for example, global poverty and animal welfare. These concerns span from hard-nosed veteran economists who think everything vegan is hippy nonsense and only people matter morally, to young non-technical vegans with concern for everything right down to worms. Grouping these causes together only looks normal because you're so used to EA.

(likewise, I expect low weirdness gradients between x-risk and non-x-risk causes, e.g. nuclear risk reduction policy and developing-country economic development, or GCBRs and neglected diseases)

Thanks for contributing to one of the most important meta-EA discussions going on right now (cf. this similar post)! I agree that there should be splinter movements that revolve around different purposes (e.g., x-risk reduction, effective giving) but I disagree that EA is no longer accurately described by 'effective altruism,' and so I disagree that EA should be renamed or that it should focus on "people who want to help the less fortunate (humans or animals) to the best of their abilities, without anyone trying to convince them that protecting the far future is the most altruistic cause area." I think this because

I also think that EA community builders are doing a decent job of creating alternative pipelines, similar to your proposal for creating an x-risk movement. For example,

There's still a lot more work to be done but I'm optimistic that we can create sub-movements without completely rebranding or shifting the EA movement away from longtermist causes!

Do you have any evidence that this is happening? Feeling duped just seems like a bit of a stretch here. Animal welfare and global health still make up a large part of the effectivealtruism.org home page. GiveWell still ranks their top charities and their funds raised more than doubled from 2020 to 2021.

My impression is that the movement does a pretty good job explaining that caring about the future doesn’t mean ignoring the present.

Assuming someone did feel duped, what is the range of results?

Kidding aside, the latter possibility seems like the least likely to me, and anyone in that bucket seems like a pretty bad candidate for EA in general.

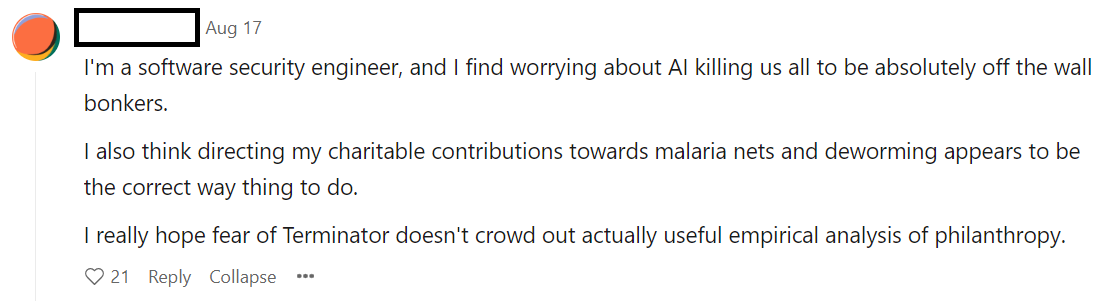

Anecdotally, yes. My partner who proofread my piece left this comment around what I wrote here: "Hit the nail on the head. This is literally how I experienced coming in via effective giving/global poverty calls to action. It wasn't long till I got bait-and-switched and told that this improvement I just made is actually pointless in the grand scheme of things. You might not get me on board with extinction prevention initiatives, but I'm happy about my charity contributions."

The comment I linked to explains well why many can come away with the impression that EA is just about longtermism these days.

My impression is that about half of EA is ppl complaining that ‘EA is just longtermism these days’ :)

LOL. I wonder how much of this is the red-teaming contest. While I see the value in it, the forum will be a lot more readable once that and the cause exploration contest are over.

I guess we can swap anecdotes. I came to EA for the GiveWell top charities, a bit after that Vox article was written. It took me several years to come around on the longtermism/x-risk stuff, but I never felt duped or bait-and-switched. Cause neutrality is a super important part of EA to me and I think that naturally leads to exploring the weirder/more unconventional ideas.

Using terms like dupe and bait and switch also implies that something has been taken away, which is clearly not the case. There is a lot of longtermist/x-risk content these days, but there is still plenty going on with donations and global poverty. More money than ever is being moved to GiveWell top charities (don't have the time to look it up, but I would be surprised if the same wasn't also true of EA animal welfare) and (from memory) the last EA survey showed a majority of EAs consider global health and wellbeing their top cause area.

I hadn't heard the "rounding error" comment before (and don't agree with it), but before I read the article, I was expecting that the author would have made that claim, and was a bit surprised he was just reporting having heard it from "multiple attendees" at EAG - no more context than that. The article gets more mileage out of that anonymous quote than really seems warranted - the whole thing left me with a bit of a clickbait-y/icky feeling. FWIW, the author also now says about it, "I was wrong, and I was wrong for a silly reason..."

In any case, I am glad your partner is happy with their charity contributions. If that's what they get out of EA, I wouldn't at all consider that being filtered out. Their donations are doing a lot of good! I think many come to EA and stop with that, and that's fine. Some, like me, may eventually come around on ideas they didn't initially find convincing. To me that seems like exactly how it should work.

There's a sampling bias problem here. The EAs who are in the movement, and the people EAs are likely to encounter, are the people who weren't filtered out of the movement. One could sample EAs, find a whole bunch of people who aren't into longtermism but weren't filtered out, and declare that the filter effect isn't a problem. But that wouldn't take into account all the people who were filtered out, because counting them is much harder.

In the absence of being able to do that, this is how I explained my reasoning about this:

Sorry for the delay. Yes this seems like the crux.

As you pointed out, there's not much evidence either way. Your intuitions tell you that there must be a lot of these people, but mine say the opposite. If someone likes the GiveWell recommendations, for example, but is averse to longtermism and less appreciative of the other aspects of EA, I don't see why they wouldn't just use GiveWell for their charity recommendations and ignore the rest, rather than avoiding the movement altogether. If these people are indeed "less appreciative of the rest of EA", they don't seem likely to contribute much to a hypothetical EA sans longtermism either.

Further, it seems to me that renaming/dividing up the community is a huge endeavor, with lots of costs. Not the kind of thing one should undertake without pretty good evidence that it is going to be worth it.

One last point, for those of us who have bought in to the longtermist/x-risk stuff, there is the added benefit that many people who come to EA for effective giving, etc. (including many of the movement's founders) eventually do come around on those ideas. If you aren't convinced, you probably see that as somewhere on the scale of negative to neutral.

All that said, I don't see why your chapter at Microsoft has to have Effective Altruism in the name. It could just as easily be called Effective Giving if that's what you'd like it to focus on. It could emphasize that many of the arguments/evidence for it come from EA, but EA is something broader.

I agree it'd be good to do rigorous analyses/estimations of what the costs vs benefits to global poverty and animal welfare causes are from being under the same movement as longtermism. If anyone wants to do this, I'd be happy to help brainstorm ideas on how it can be done.

I responded to the point about longtermism benefiting from its association with effective giving in another comment.

This post got me considering opening a Facebook group called "Against World Destruction Israel" or something like that (as a sibling to "Effective Altruism Israel")

Most of our material would be around prioritizing x-risks, because I think many people already care about x-risk, they're just focusing on the wrong ones.

As a secondary but still major point, "here are things you can do to help".

What do you (or others) think? (This is a rough idea after 2 minutes of thinking)

This sounds like a great idea. Maybe the answer to the pitching Longtermism or pitching x-risk question is both?

I wouldn't discuss longtermism at all. It's complicated, unintuitive, or more formally: a far inferential distance from a huge number of people compared to just x-risk. And most of the actionable conclusions are the same anyway, no?

That didn’t come off as clearly as I had hoped. What I meant was that maybe leading with x-risk will resonate for some and longtermism for others. It seems worth having separate groups that focus on each to appeal to both types of people.

I found this very interesting to read, not because I agree with everything that was said (some bits I do, some bits I don't) but because I think someone should be saying it.

I have had thoughts about the seeming divide between 'longtermism' vs 'shorttermism', when to me there seems to be a large overlap between the two, which kind of goes in line with what you mentioned: x-risk can occur within this lifetime, therefore you do not need to be convinced about longtermism to care about it. Even if future lives had no value (I'm not making that argument), and you only take into account current lives, preventing x-risk has a massive value because that is still nearly 8 billion people we are talking about!

Therefore I like the point that x-risk does not need to be altruistic. But it also very much can be, therefore having it separate from effective altruism is not needed:

1. I want to save as many people as possible therefore stopping extinction is good - altruism, and it is a good idea to do this as effectively as possible

2. I want to save myself from extinction - not altruism, but can lead to the same end therefore there can be a high benefit of promoting it this way to more general audiences

So I do not think that 'effective altruism' is the wrong name, as I think everyone I've come across in EA so far has been in the first category. EA is also broader, including things like animal welfare and global health and poverty and various other areas to improve the lives of sentient beings (and I think this should be talked about more). I think EA is a good name for all of those things.

But if the goal is to reduce x-risk in any way possible, working with people who fall into the second category, who want to save themselves and their loved ones, is good. If we want large shifts in global policy and people generally to act a certain way, things need to be communicated to a general audience, and people should be encouraged to work on high impact things even if they are 'not aligned'.

GREAT post! Such a fantastic and thorough explanation of a truly troubling issue! Thank you for this.

We definitely need to distinguish what I call the various "flavors" of EA. And we have many options for how to organize this.

Personally, I'm torn because, on one hand, I want to bring everyone together still under an umbrella movement, with several "branches" within it. However, I agree that, as you note, this situation feels much more like the differences between the rationality community and the EA community: "the two have a lot of overlap but they are not the same thing and there are plenty of people who are in one but not the other. There’s also no good umbrella term that encompasses both." The EA movement and the extinction risk prevention movement are absolutely different.

And anecdotally, I really want to note that the people who are emphatically in one camp but not the other are very different people. So while I often want to bring them together harmoniously in a centralized community, I've honestly noticed that the two groups don't relate as well and sometimes even argue more than they collaborate. (Again, just anecdotal evidence here — nothing concrete, like data.) It's kind of like the people who represent these movements don't exactly speak the same language and don't exactly always share the same perspectives, values, worldviews, or philosophies. And that's OK! Haha, it's actually really necessary I think (and quite beautiful, in its own way).

I love the parallel you've drawn between the rationality community and the EA community. It's the perfect example: people have found their homes among 2 different forums and different global events and different organizations. People in the two communities have shared views and can reasonably expect people within to be familiar with the ideas, writings, and terms from within the group. (For example, someone in EA can reasonably expect someone else who claims any relation to the movement/community to know about 80000 Hours and have at least skimmed the "key ideas" article. Whereas people in the rationality community would reasonably expect everyone within it to have familiarity with HPMOR. But it's not necessarily expected that these expectations would carry across communities and among both movements.)

You've also pointed out amazing examples of how the motivations behind people's involvement vary greatly. And that's one of the strongest arguments for distinguishing communities; there are distinct subsets of core values. So, again, people don't always relate to each other across the wide variety of diverse cause areas and philosophies. And that's OK :)

Let's give people a proper home — somewhere they feel like they truly belong and aren't constantly questioning if they belong. Anecdotally I've seen so many of my friends struggle to witness the shifts in focus across EA to go towards X risks like AI. My friends can't relate to it; they feel like it's a depressing way to view this movement and dedicate their careers. So much so that we often stop identifying with the community/movement depending on how it's framed and contextualized. (If I were in a social setting where one person [Anne] who knows about EA was explaining it to someone [Kyle] who had never heard of it and that person [Kyle] then asked me if I am "an EA", then I might vary my response, depending on the presentation that the first person [Anne] provided. If the provided explanation was really heavy handed with an X-risk/AI focus, I'd probably have a hard time explaining that I work for an EA org that is actually completely unrelated... Or I might just say something like "I love the people and the ideas" haha)

I'm extra passionate about this because I have been preparing a forum post called either "flavors of EA" or "branches of EA" that would propose this same set of ideas! But you've done such a great job painting a picture of the root issues. I really hope this post and its ideas gain traction. (I'm not gonna stop talking about it until it does haha) Thanks ParthThaya

Thanks for the kind words! Your observation that "people who are emphatically in one camp but not the other are very different people" matches my beliefs here as well. It seems intuitively evident to me that most of the people who want to help the less fortunate aren't going to be attracted to, and often will be repelled by, a movement that focuses heavily on longtermism. And that most of the people who want to solve big existential problems aren't going to be interested in EA ideas or concepts (I'll use Elon Musk and Dominic Cummings as my examples here again).

To avoid the feeling of a bait and switch, I think one solution is to introduce existential risk in the initial pitch. For example, when introducing my student group Effective Altruism at Georgia Tech, I tend to say something like: "Effective Altruism at Georgia Tech is a student group which aims to empower students to pursue careers tackling the world's most pressing problems, such as global poverty, animal welfare, or existential risk from climate change, future pandemics, or advanced AI." It's totally fine to mention existential risk – students still seem pretty interested and happy to sign up for our mailing list.

I think this is a great opening pitch!

Not every personality type is well-equipped to work in AI alignment, so I strongly feel the pitch should be about finding the field that best suits you and where you specifically can find your greatest impact, regardless of whether that ends up being in a longtermist career or global health/poverty or earning to give. As to what charity to give to, whether it would be better to donate to a GiveWell charity or to AI alignment research, I personally am not sure. I lean more toward the GiveWell charities, but I'm fairly new to the EA community and still forming my opinions....