This is a story of growing apart.

I was excited when I first discovered Effective Altruism. A community that takes responsibility seriously, wants to help, and uses reason and science to do so efficiently. I saw impressive ideas and projects aimed at valuing, protecting, and improving the well-being of all living beings.

Today, years later, that excitement has faded. Certainly, the dedicated people with great projects still exist, but they've become a less visible part of a community that has undergone notable changes in pursuit of ever-better, larger projects and greater impact:

From concrete projects on optimal resource use and policy work for structural improvements, to avoiding existential risks, and finally to research projects aimed at studying the potentially enormous effects of possible technologies on hypothetical beings. This no longer appeals to me.

Now I see a community whose commendable openness to unbiased discussion of any idea is being misused by questionable actors to platform their views.

A movement increasingly struggling to remember that good, implementable ideas are often underestimated and ignored by the general public, but not every marginalized idea is automatically good. Openness is a virtue; being contrarian isn't necessarily so.

I observe a philosophy whose proponents in many places are no longer interested in concrete changes, but are competing to see whose vision of the future can claim the greatest longtermist significance.

This isn't to say I can't understand the underlying considerations. It's admirable to think rigorously about the conclusions one must, and can, draw when taking moral responsibility seriously. It's equally valuable to become active and try to advance one's vision of the greatest possible impact.

However, I believe a movement that too often tries to increase the expected value of its actions by accepting ever-smaller probabilities in exchange for ever-greater potential impact loses its soul. A movement that values community building, impact multiplication, and fundraising far more highly than concrete progress risks becoming an intellectual pyramid scheme.

Again, I’m aware that concrete, impactful projects and people still exist within EA. But in the public sphere accessible to me, their influence and visibility keep diminishing, while indirect high-impact approaches built on highly speculative expected value calculations are becoming increasingly dominant. This is no longer enough for me to publicly and personally stand behind the project named Effective Altruism in its current form.

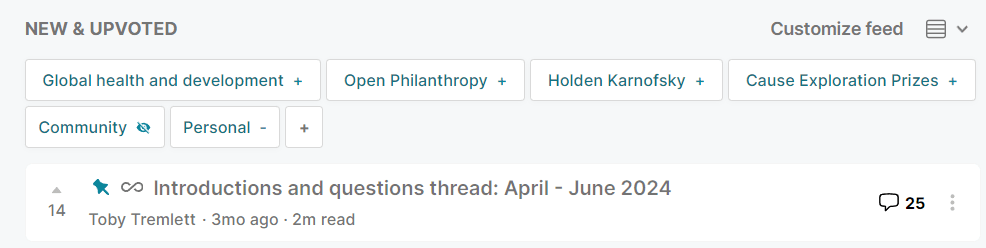

I was never particularly active on the forum, and it took years before I even created an account. Nevertheless, I always felt part of this community. That's no longer the case, which is why I'll be leaving the forum. For those here, this won't be a significant loss, as my contributions were negligible, but for me, it's an important step.

I'll continue to donate, support effective projects with concrete goals and impacts, and try to actively shape the future positively. However, I'll no longer do this under the label of Effective Altruism.

I'm still searching for a movement that embodies the ideal of committed, concrete effective (lowercase e) altruism. I hope it exists. Good luck to those here who feel the same.

I gave this post a strong downvote because it merely restates some commonly held conclusions without speaking directly to the evidence or experience that supports those conclusions.

I think the value of posts derives principally from their saying something new and concrete, and this post failed to do that. Anonymity contributed to this, because at least knowing that person X with history Y held these views might have been new and useful.

I don't think you can post 'anonymously' in the sense that there is no account associated with your post; you always have to create an account, though you can of course use a one-off username and even a one-off email address if you want to. However, you can delete your account, and then apparently all 'your' posts appear as "[anonymous]" whether you intended this or not. (In this case, it seems the original poster created this post with their usual, non-anonymous account and then deleted that account.)