When I try to think about how much better the world could be, it helps me to sometimes pay attention to the less obvious ways that my life is (much) better than it would have been, had I been born in basically any other time (even if I had been born among the elite!).

So I wanted to make a quick list of some “inconspicuous miracles” of my world. This isn’t meant to be remotely exhaustive, and is just what I thought of as I was writing this up. The order is arbitrary.

1. Washing machines

It’s amazing that I can just put dirty clothing (or dishes, etc.) into a machine that handles most of the work for me. (I’ve never had to regularly hand-wash clothing, but from what I can tell, it is physically very hard, and took a lot of time. I have sometimes not had a dishwasher, and really notice the lack whenever I do; my back tends to start hurting pretty quickly when I’m handwashing dishes, and it’s easier to start getting annoyed at people who share the space.)

Source: Illustrations from a Teenage Holiday Journal (1821). See also.

2. Music (and other media!)

Just by putting on headphones, I get better music performances than most royalty could ever hope to see in their c...

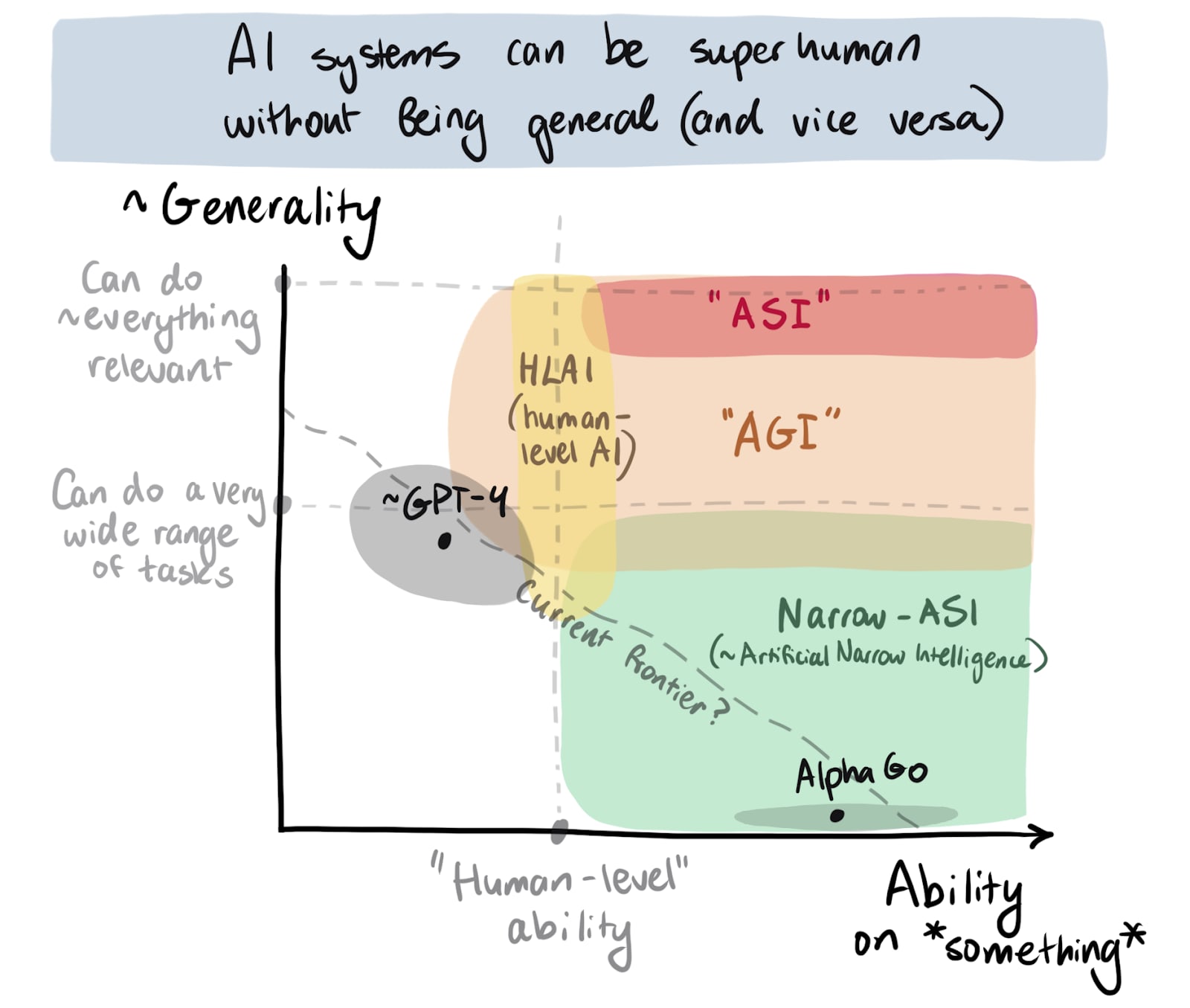

Notes on some of my AI-related confusions[1]

It’s hard for me to get a sense for stuff like “how quickly are we moving towards the kind of AI that I’m really worried about?” I think this stems partly from (1) a conflation of different types of “crazy powerful AI”, and (2) the way that benchmarks and other measures of “AI progress” decouple from actual progress towards the relevant things. Trying to represent these things graphically helps me orient/think.

First, it seems useful to distinguish the breadth or generality of state-of-the-art AI models from how able they are on some relevant capabilities. Once I separate these out, I can plot roughly where some definitions of "crazy powerful AI" apparently lie on these axes:

(I think there are too many definitions of "AGI" at this point. Many people would make that area much narrower, but possibly in different ways.)

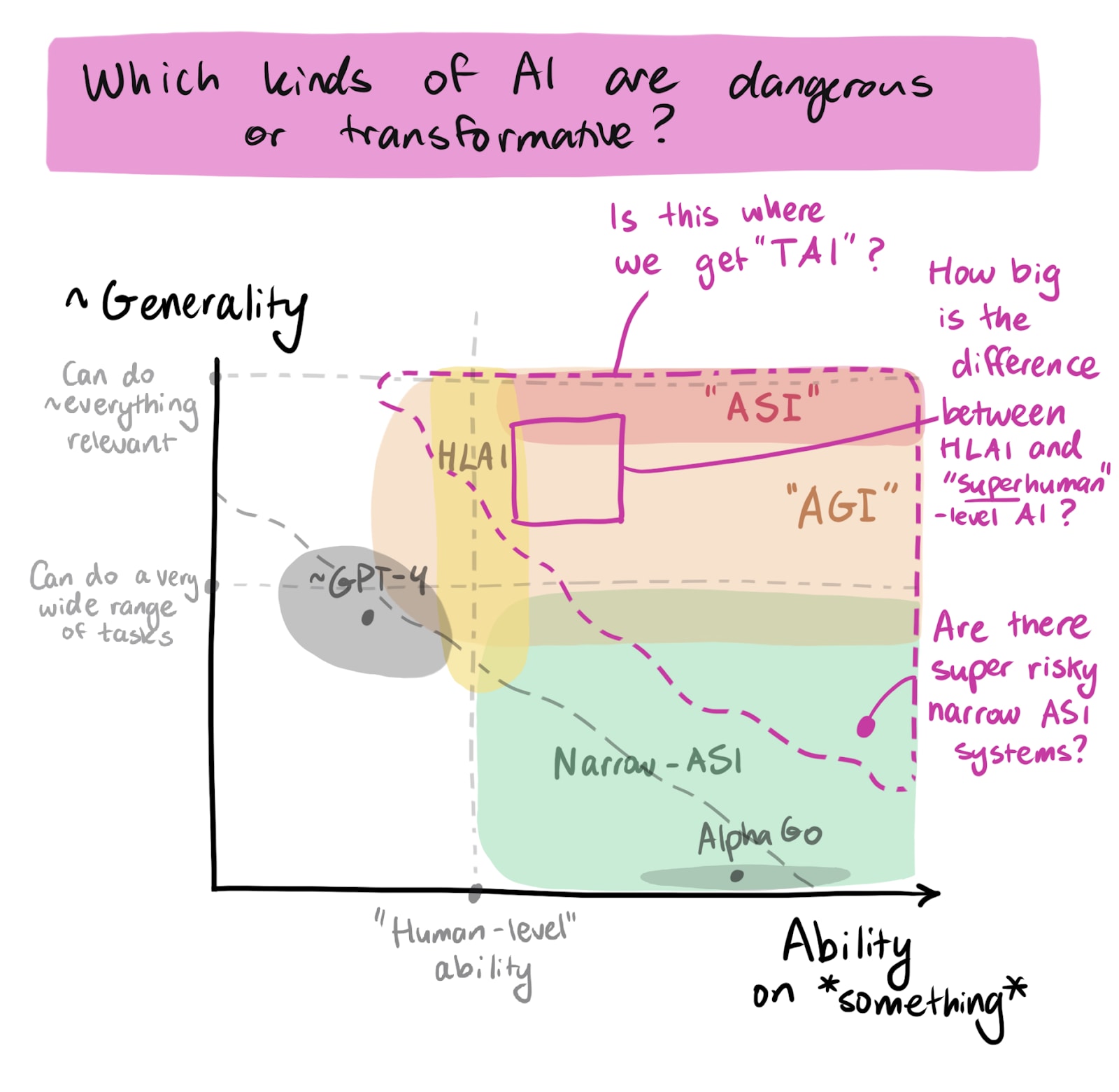

Visualizing things this way also makes it easier for me[2] to ask: Where do various threat models kick in? Where do we get “transformative” effects? (Where does “TAI” lie?)

Another question that I keep thinking about is something like: “what are key narrow (sets of) capabilities such that the...

TLDR: Notes on confusions about what we should do about digital minds, even if our assessments of their moral relevance are correct[1]

I often feel quite lost when I try to think about how we can “get digital minds right.” It feels like there’s a variety of major pitfalls involved, whether or not we’re right about the moral relevance of some digital minds.

Digital-minds-related pitfalls in different situations:

| Reality ➡️ / Our perception ⬇️ | These digital minds are (non-trivially) morally relevant[2] | These digital minds are not morally relevant |
|---|---|---|
| We see these digital minds as morally relevant | (1) We’re right. But we might still fail to understand how to respond, or collectively fail to implement that response. | (2) We’re wrong. We confuse ourselves, waste an enormous amount of resources on this[3] (potentially sacrificing the welfare of other beings that do matter morally), and potentially make it harder to respond to the needs of other digital minds in the future (see also). |
| We don’t see these digital minds as morally relevant | (3) We’re wrong. The default result here seems to be moral catastrophe through ignorance/sleepwalking (although maybe we’ll get lucky). | (4) We’re right... |

A note on how I think about criticism

(This was initially meant as part of this post,[1] but while editing I thought it didn't make a lot of sense there, so I pulled it out.)

I came to CEA with a very pro-criticism attitude. My experience there reinforced those views in some ways,[2] but it also left me more attuned to the costs of criticism (or of some pro-criticism attitudes). (For instance, I used to see engaging with all criticism as virtuous, and have changed my mind on that.) My overall takes now aren’t very crisp or easily summarizable, but I figured I'd try to share some notes.

...

It’s generally good for a community’s culture to encourage criticism, but this is more complicated than I used to think.

Here’s a list of things that I believe about criticism:

- Criticism or critical information can be extremely valuable.
- It can be hard for people to surface criticism (e.g. because they fear repercussions), which means criticism tends to be undersupplied.[3]
- Requiring critics to present their criticisms in specific ways will likely stifle at least some valuable criticism.
- It can be hard to get yourself to engage with criticism of your work or things you care about. I...

A note on mistakes and how we relate to them

(This was initially meant as part of this post[1], but I thought it didn't make a lot of sense there, so I pulled it out.)

“Slow-rolling mistakes” are usually much more important to identify than “point-in-time blunders,”[2] but the latter tend to be more obvious.

When we think about “mistakes”, we usually imagine replying-all when we meant to reply only to the sender, using the wrong input in an analysis, including broken hyperlinks in a piece of media, missing a deadline, etc. I tend to feel pretty horrible when I notice that I've made a mistake like this.

I now think that basically none of my mistakes of this kind (I’ll call them “point-in-time blunders”) mattered nearly as much as other "mistakes" I've made by doing things like planning my time poorly, delaying for too long on something, setting up poor systems, or focusing on the wrong things.

This second kind of mistake (let’s use the phrase “slow-rolling mistakes”) is harder to catch; I think sometimes I'd identify them by noticing a nagging worry, or by having multiple conversations with someone who disagreed with me (and slowly changing my mind), or by seriously reflec...

USAID has announced that they've committed $4 million to fighting global lead poisoning!

USAID Administrator Samantha Power also called other donors to action, and announced that USAID will be the first bilateral donor agency to join the Global Alliance to Eliminate Lead Paint. The Center for Global Development (CGD) discusses the implications of the announcement here.

For context, lead poisoning seems to get ~$11-15 million per year right now, and it takes a huge toll. I'm really excited about this news.

Also, thanks to @ryancbriggs for pointing out that this seems like "a huge win for risky policy change global health effective altruism" and referencing this grant:

In December 2021, GiveWell (or the EA Funds Global Health and Development Fund?) gave a grant to CGD "to support research into the effects of lead exposure on economic and educational outcomes, and run a working group that will author policy outreach documents and engage with global policymakers." In their writeup, they recorded a 10% "best case" forecast that in two years (by the end of the grant period), "The U.S. government, other international actors (e.g., bilateral and multilateral donors), and/or national ...

Reflection on my time as a Visiting Fellow at Rethink Priorities this summer

I was a Visiting Fellow at Rethink Priorities this summer. They’re hiring right now, and I have lots of thoughts on my time there, so I figured that I’d share some. I had some misconceptions coming in, and I think I would have benefited from a post like this, so I’m guessing other people might, too. Unfortunately, I don’t have time to write anything in depth for now, so a shortform will have to do.

Fair warning: this shortform is quite personal and one-sided. In particular, when I tried to think of downsides to highlight to make this post fair, few came to mind, so the post is very upsides-heavy. (Linch’s recent post has a lot more on possible negatives about working at RP.) Another disclaimer: I changed in various ways during the summer, including in terms of my preferences and priorities. I think this is good, but there’s also a good chance of some bias (I’m happy with how working at RP went because working at RP transformed me into the kind of person who’s happy with that sort of work, etc.). (See additional disclaimer at the bottom.)

First, some vague background on me, in case it’s relevant:

- I finished m

Thanks for writing this, Lizka! I agree with many of the points in this [I was also a visiting fellow on the longtermist team this summer]. I'll throw in my two cents about my own reflections (I broadly share Lizka's experience, so here I just highlight the upsides/downsides that especially resonated with me, or things unique to my own situation):

Vague background:

- Finished BSc in PPE this June

- No EA research experience and very little academic research experience

- Introduced to EA in 2019

Upsides:

- Work in areas that are intellectually stimulating and feel meaningful (e.g. democracy, AI governance).

- Become a better researcher. In particular, understanding reasoning transparency, reaching out to experts, the neglected virtue of scholarship, giving and receiving feedback, and being generally more productive. Of course, there is a difference between (1) understanding these skills and (2) internalizing and applying them, but I think RP helped substantially with the first and set me on the path to doing the second.

- Working with super cool people. Everyone was super friendly, and clearly supportive of our development as researchers. I also had not written an EA forum post be

Protip: if you find yourself with a slow computer, fix that situation asap.

Note to onlookers that we at Rethink Priorities will pay up to $2000 for people to upgrade their computers and that we view this as very important! And if you work with us for more than a year, you can keep your new computer forever.

I realize that this policy may not be a great fit for interns / fellows though, so perhaps I will think about how we can approach that.

I think we should maybe just send a new mid-range Chromebook + high-end headset with a built-in mic + other computing supplies to all interns as soon as they start (or maybe before), no questions asked. Maybe consider higher-end equipment for interns who are working on more compute-intensive stuff and/or if they or their managers ask for it.

For some of the intern projects (most notably on the survey team?), more computing power is needed, but since so much of RP work involves Google Docs + looking stuff up fast on the internet + Slack/Google Meet comms, the primary technological bottlenecks we should try to solve are fast browsing/typing and videocall latency and quality, for which Chromebooks and headsets should be sufficient.

(For logistical reasons I'm assuming that the easiest thing to do is to let the interns keep the Chromebook and relevant accessories.)

Edit: I've now shared: Donation Election: how voting will work. Really grateful for the discussion on this thread!

We're planning on running a Donation Election for Giving Season.

What do you think the final voting mechanism should be, and why? E.g. approval voting, ranked-choice voting, quadratic voting, etc.

Considerations might include: how well this will allocate funds based on real preferences, how understandable it is to people who are participating in the Donation Election or following it, etc.

I realize that I might be opening a can of worms, but I'm looking forward to reading any comments! I might not have time to respond.

Some context (see also the post):

Users will be able to "pre-vote" (to signal that they're likely to vote for some candidates, and possibly to follow posts about some candidates), for as many candidates as they want. The pre-votes are anonymous (as are final votes), but the total numbers will be shown to everyone. There will be a separate process for final voting, which will determine the three winners in the election. The three winners will receive the winnings from the Donation Election Fund, split proportionally based on the votes.

On...

I’m a researcher on voting theory, with a focus on voting over how to divide a budget between uses. Sorry, I found this post late, so things are probably already decided, but I thought I’d add my thoughts. I’m going to assume approval voting as the input format.

There is an important high-level decision to make first regarding the objective: do we want to pick charities with the highest support (majoritarian) or do we want to give everyone equal influence on the outcome if possible (proportionality)?

If the answer is “majoritarian”, then the simplest method makes the most sense: give all the money to the charity with the highest approval score. (This maximizes the sum of voter utilities, if you define voter utility to be the amount of money that goes to the charities a voter approves.)
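To make the majoritarian option concrete, here is a minimal Python sketch, assuming approval ballots are represented as sets of charity names; the function names, the alphabetical tie-break, and the example ballots are all hypothetical. Under the utility definition above (a voter's utility is the money going to charities they approve), total utility is the sum over charities of approval count × money, so putting the whole budget on the most-approved charity maximizes it.

```python
from collections import Counter


def majoritarian_allocation(ballots, budget):
    """Give the whole budget to the charity with the most approvals.

    ballots: list of sets, each holding the charities one voter approves.
    Ties are broken alphabetically (an arbitrary, hypothetical choice).
    """
    scores = Counter(charity for ballot in ballots for charity in ballot)
    winner = min(scores, key=lambda charity: (-scores[charity], charity))
    return {winner: budget}


def total_utility(ballots, allocation):
    """Sum over voters of the money that goes to charities they approve."""
    return sum(
        amount
        for ballot in ballots
        for charity, amount in allocation.items()
        if charity in ballot
    )


ballots = [{"A", "B"}, {"A"}, {"B", "C"}, {"A", "C"}]
print(majoritarian_allocation(ballots, 100))  # {'A': 100}
```

Here A has three approvals, so giving it everything yields a total utility of 300, while an even A/B split would yield only 250.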

If the answer is “proportionality”, my top recommendation would be to drop the idea of having only 3 winners and not impose a limit, and instead use the Nash Product rule to decide how the money is split [paper, wikipedia]. This rule has a nice interpretation where let’s say there are 100 voters, then every voter is assigned 1/100th of the budget and gets a guarantee that this part is only spent on charities ...
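The Nash Product rule picks the split p that maximizes Σᵢ log(Σ_{j ∈ Aᵢ} pⱼ), where Aᵢ is the set of charities voter i approves. Below is a minimal sketch, assuming every ballot approves at least one charity. The fixed-point iteration is a standard multiplicative (EM-style) update for this concave objective, chosen here for brevity rather than taken from the comment or the linked paper; the function name and iteration count are hypothetical. Each step hands every voter a 1/n slice of the budget and spreads it over their approved charities in proportion to the current shares, which mirrors the "every voter controls 1/100th of the budget" interpretation.

```python
def nash_product_split(ballots, budget, iterations=2000):
    """Approximate the Nash Product split for approval ballots.

    ballots: list of non-empty sets of approved charities.
    Maximizes sum_i log(sum_{j in A_i} p_j) over shares p summing to 1,
    via the update p_j <- (1/n) * sum_{i: j in A_i} p_j / sum_{k in A_i} p_k.
    """
    charities = sorted(set().union(*ballots))
    n = len(ballots)
    shares = {c: 1 / len(charities) for c in charities}
    for _ in range(iterations):
        new_shares = dict.fromkeys(charities, 0.0)
        for ballot in ballots:
            approved_mass = sum(shares[c] for c in ballot)
            for c in ballot:
                # This voter's 1/n slice, spread in proportion to current shares.
                new_shares[c] += shares[c] / approved_mass / n
        shares = new_shares
    return {c: budget * s for c, s in shares.items()}


ballots = [{"A", "B"}, {"A"}, {"B"}, {"C"}]
print(nash_product_split(ballots, 100))
```

For these hypothetical ballots the optimum maximizes log(p_A + p_B) + log p_A + log p_B + log p_C, which gives A = B = 37.5 and C = 25. Note that the lone C voter still gets a quarter of the budget, which is the proportionality property at work.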

I think since there can be multiple winners, letting people vote on the ideal distribution then averaging those distributions would be better than direct voting, since it most directly represents "how voters think the funds should be split on average" or similar, which seems like what you want to capture? And also is still very understandable I hope.

E.g. if I think 75% of the pool should go to LTFF and 20% to GiveWell, and 5% to the EA AWF, 0% to all the rest, I vote 75%/20%/5%/0%/0%/0% etc. Then, you take the average of those distributions across all voters. I guess it gets tricky if you are only paying out to the top three, but maybe you can just scale their percentage splits? IDK.
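The averaging idea (plus the "just scale their percentage splits" fallback for a top-three payout) can be sketched in a few lines. This is a minimal illustration, assuming each ballot is a mapping from charity to share with shares summing to 1; the second voter, "Charity D", the function names, and the alphabetical tie-break are hypothetical.

```python
def average_distribution(ballots):
    """Average the voters' ideal splits; each ballot maps charity -> share (shares sum to 1)."""
    charities = set().union(*ballots)
    n = len(ballots)
    return {c: sum(b.get(c, 0.0) for b in ballots) / n for c in charities}


def top_k_rescaled(average, k=3):
    """Keep the k charities with the largest average share and rescale them to sum to 1.

    Ties are broken alphabetically (a hypothetical choice).
    """
    top = sorted(average, key=lambda c: (-average[c], c))[:k]
    total = sum(average[c] for c in top)
    return {c: average[c] / total for c in top}


ballots = [
    {"LTFF": 0.75, "GiveWell": 0.20, "EA AWF": 0.05},  # the split from the example above
    {"GiveWell": 0.50, "Charity D": 0.50},  # a hypothetical second voter
]
average = average_distribution(ballots)
print(top_k_rescaled(average))
```

With these two ballots the averages are LTFF 0.375, GiveWell 0.35, Charity D 0.25, and EA AWF 0.025, so EA AWF drops out and the other three are rescaled in their existing ratios.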

If not that, or if it is annoying to implement, IMO approval voting or quadratic voting are probably best, but I'm not really sure. Ranked choice feels so explicitly designed for single-winner elections that it is harder to apply here.

Some thoughts on different mechanisms:

Quadratic voting:

I think this could be fun. An advantage here is that voters have to think about the relative value of different charities, rather than just deciding which are better or worse. This could also be an important aspect when we want people to discuss how they plan to vote/how others should vote. If you want to be explicit about this, you could also consider designing the user interface so that users enter these relative differences of charities directly (e.g. "I vote charity A to be 3 times as good as charity B" rather than "I assign 90 vote credits to charity A and 10 vote credits to charity B"). Note however, that due to the top-3 cutoff, putting in the true relative differences between charities might not be the optimal policy.
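Under the usual quadratic cost rule (votes = √credits), the two interface phrasings above line up: 90 credits vs. 10 credits buys about 9.49 vs. 3.16 votes, which is a 3:1 ratio. Here is a minimal sketch of translating "A is 3 times as good as B" statements into credits, assuming that cost rule and a 100-credit budget per voter (both assumptions, not details of the Donation Election); the function names are hypothetical.

```python
import math


def votes_from_credits(credits):
    """Standard quadratic-voting cost rule: c credits on an option buy sqrt(c) votes."""
    return {option: math.sqrt(c) for option, c in credits.items()}


def credits_from_relative_values(values, total_credits=100):
    """Convert 'A is 3x as good as B' style inputs into a credit allocation.

    For votes to come out proportional to the stated values, credits must be
    proportional to the values squared.
    """
    squared = {option: v ** 2 for option, v in values.items()}
    scale = total_credits / sum(squared.values())
    return {option: s * scale for option, s in squared.items()}


credits = credits_from_relative_values({"A": 3, "B": 1})
print(credits)  # {'A': 90.0, 'B': 10.0}
votes = votes_from_credits(credits)
print(votes["A"] / votes["B"])
```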

A technical remark: If you want only to do payouts for the top three candidates, instead of just relying on the final vote, I think it would be better to rescale the voting credits of each voter after kicking out the charity with the least votes and then repeating the process until there are only 3 charities left. This would reduce tactical voting and would respect voters more who pick unusual charities as ...
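The elimination-and-rescale procedure described above can be sketched as follows. This is a minimal illustration under stated assumptions: votes are √credits, "rescale" means scaling each voter's surviving credits back up to that voter's original total, and ties go alphabetically. The function names and example allocations are hypothetical.

```python
import math


def quadratic_votes(allocations):
    """Total votes per charity; each voter's c credits on a charity buy sqrt(c) votes."""
    totals = {}
    for alloc in allocations:
        for charity, credits in alloc.items():
            totals[charity] = totals.get(charity, 0.0) + math.sqrt(credits)
    return totals


def eliminate_to_top_k(allocations, k=3):
    """Repeatedly drop the charity with the fewest votes, rescaling each voter's
    remaining credits back up to that voter's original total, until k remain."""
    budgets = [sum(alloc.values()) for alloc in allocations]
    current = [dict(alloc) for alloc in allocations]
    remaining = set().union(*allocations)
    while len(remaining) > k:
        totals = quadratic_votes(current)
        # Fewest votes loses; ties broken alphabetically (a hypothetical choice).
        loser = min(remaining, key=lambda c: (totals.get(c, 0.0), c))
        remaining.discard(loser)
        rescaled = []
        for alloc, budget in zip(current, budgets):
            kept = {c: x for c, x in alloc.items() if c in remaining}
            spent = sum(kept.values())
            if spent > 0:
                rescaled.append({c: x * budget / spent for c, x in kept.items()})
            else:
                rescaled.append({})  # all of this voter's picks were eliminated
        current = rescaled
    return quadratic_votes(current)


allocations = [
    {"A": 64, "B": 36},
    {"B": 100},
    {"C": 100},
    {"C": 50, "D": 50},  # this voter's D credits get moved to C once D is out
]
print(eliminate_to_top_k(allocations))  # {'A': 8.0, 'B': 16.0, 'C': 20.0}
```

Without the rescaling step, the last voter's 50 credits on D would simply be wasted and C would end with about 17.07 votes instead of 20. That gap is the "respect voters who pick unusual charities" effect the comment points to.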

Vasili Arkhipov is discussed less on the EA Forum than Petrov is (see also this thread of less-discussed people). I thought I'd post a quick take describing that incident.

Arkhipov & the submarine B-59’s missile

On October 27, 1962 (during the Cuban Missile Crisis), the Soviet diesel-powered submarine B-59 started experiencing[1] nearby depth charges from US forces above it; the submarine had been detected and US ships seemed to be attacking. The submarine’s air conditioning was broken,[2] CO2 levels were rising, and B-59 was out of contact with Moscow. Two of the senior officers on the submarine, thinking that a global war had started, wanted to launch their “secret weapon,” a 10-kiloton nuclear torpedo. The captain, Valentin Savitsky, apparently exclaimed: “We’re gonna blast them now! We will die, but we will sink them all; we will not become the shame of the fleet.”

The submarine was authorized to launch the torpedo without confirmation from Moscow, but all three senior officers on board had to agree.[3] Vasili Arkhipov, chief of staff of the flotilla, refused. He convinced Captain Savitsky that the depth charges were signals for the Soviet s...

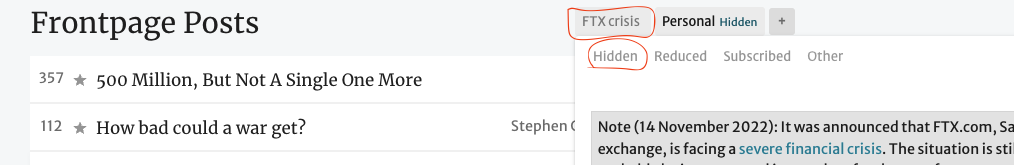

If you feel overwhelmed by FTX-collapse-related content on the Forum, you can hide most of it by using a tag filter: hover over the "FTX collapse" tag on the Frontpage (find it to the right of the "Frontpage Posts" header), and click on "Hidden."

[Note: this used to say "FTX crisis," and that might still show up in some places.]

Superman gets to business [private submission to the Creative Writing Contest from a little while back]

“I don’t understand,” she repeated. “I mean, you’re Superman.”

“Well yes,” said Clark. “That’s exactly why I need your help! I can’t spend my time researching how to prioritize while I should be off answering someone’s call for help.”

“But why prioritize? Can’t you just take the calls as they come?”

Lois clicked “Send” on the email she’d been typing up and rejoined the conversation. “See, we realized that we’ve been too reactive. We were taking calls as they came in without appreciating the enormous potential we had here. It’s amazing that we get to help people who are being attacked, help people who need our help, but we could also make the world safer more proactively, and end up helping even more people, even better, and when we realized that, when that clicked—”

“We couldn’t just ignore it.”

Tina looked back at Clark. “Ok, so what you’re saying is that you want to save people (or help people), and you think there are better and worse ways you could approach that, but you’re not sure which are which, and you realized that instead of rushing off to fight the most immediate threat, yo...

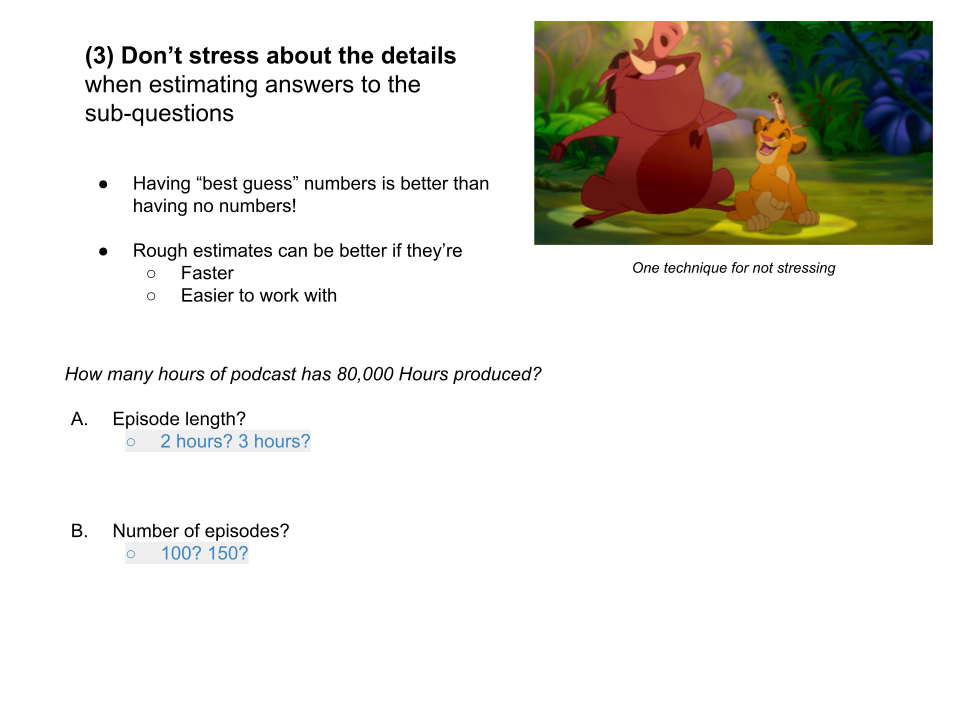

I recently ran a quick Fermi workshop, and have been asked for notes several times since. I've realized that it's not that hard for me to post them, and it might be relatively useful for someone.

Quick summary of the workshop

- What is a Fermi estimate?

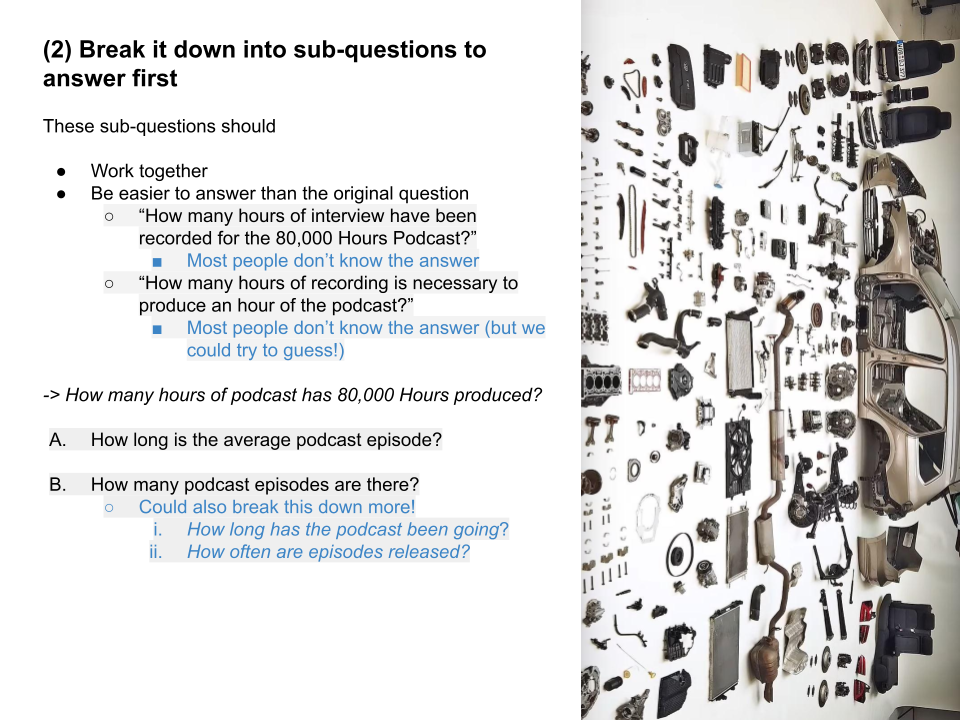

- Walkthrough of the main steps for Fermi estimation

- Notice a question

- Break it down into simpler sub-questions to answer first

- Don’t stress about the details when estimating answers to the sub-questions

- Consider looking up some numbers

- Put everything together

- Sanity check

- Different models: an example

- Examples!

- Discussion & takeaways
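For anyone who prefers code to scratch paper, here is a minimal sketch of the steps above applied to a classic hypothetical question ("How many piano tuners work in a city of a few million people?"). Every range below is an illustrative guess, not data. The Monte Carlo part is a rough imitation of Guesstimate-style sampling; drawing each input log-uniformly from its range is an assumption made here, not what Guesstimate necessarily does.

```python
import math
import random

# Break the question into sub-questions; each gets a rough (low, high) guess.
# These numbers are illustrative placeholders, not real data.
GUESSES = {
    "people": (2e6, 4e6),
    "people_per_household": (2.0, 3.0),
    "share_with_piano": (0.02, 0.10),
    "tunings_per_piano_per_year": (0.5, 1.0),
    "tunings_per_tuner_per_year": (500.0, 1500.0),
}


def combine(v):
    """Put everything together: tunings needed per year / tunings one tuner can do."""
    pianos = v["people"] / v["people_per_household"] * v["share_with_piano"]
    return pianos * v["tunings_per_piano_per_year"] / v["tunings_per_tuner_per_year"]


def point_estimate(guesses):
    """Use the geometric mean of each range, a common midpoint for multiplicative guesses."""
    return combine({k: math.sqrt(lo * hi) for k, (lo, hi) in guesses.items()})


def monte_carlo(guesses, samples=20_000, seed=0):
    """Sample each input log-uniformly from its range; return a 5th-95th percentile range."""
    rng = random.Random(seed)
    results = sorted(
        combine({k: math.exp(rng.uniform(math.log(lo), math.log(hi)))
                 for k, (lo, hi) in guesses.items()})
        for _ in range(samples)
    )
    return results[int(0.05 * samples)], results[int(0.95 * samples)]


print(f"point estimate: ~{point_estimate(GUESSES):.0f} tuners")
low, high = monte_carlo(GUESSES)
print(f"rough 90% range: {low:.0f} to {high:.0f}")
```

The sanity-check step would then compare the result against something you can look up or already know, and revisit whichever sub-question guess looks most off.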

Resources

- Guesstimate is a great website for Fermi estimation (although you can also use scratch paper or spreadsheets if that's what you prefer)

- This is a great post on Fermi estimation

- In general, you can look at a bunch of posts tagged "Fermi Estimation" on LessWrong or look at the Forum wiki description

Disclaimers:

- I am not a Fermi pro, nor do I have any special qualifications that would give me credibility :)

- This was a short workshop, aimed mostly at people who had done few or no Fermi estimates before


Moderation update: We have indefinitely banned 8 accounts[1] that were used by the same user (JamesS) to downvote some posts and comments from Nonlinear and upvote critical content about Nonlinear. Please remember that voting with multiple accounts on the same post or comment is very much against Forum norms.

(Please note that this is separate from the incident described here)

[1] my_bf_is_hot, inverted_maslow, aht_me, emerson_fartz, daddy_of_upvoting, ernst-stueckelberg, gpt-n, jamess

LukeDing (and their associated alt account) has been banned for six months, due to voting & multiple-account-use violations. We believe that they voted on the same comment/post with two accounts more than two hundred times. This includes several instances of using an alt account to vote on their own comments.

This is against our Forum norms on voting and using multiple accounts. We will remove the duplicate votes.

As a reminder, bans affect the user, not the account(s).

If anyone has questions or concerns, please feel free to reach out, and if you think we made a mistake here, you can appeal the decision.

We also want to add:

- LukeDing appealed the decision; we will reach out to them and ask them if they’d like us to feature a response from them under this comment.

- As some of you might realize, some people on the moderation team have conflicts of interest with LukeDing, so we wanted to clarify our process for resolving this incident. We uncovered the norm violation after an investigation into suspicious voting patterns, and only revealed the user’s identity to part of the team. The moderators who made decisions about how to proceed weren’t aware of LukeDing's identity (they only saw anonymized information).

Moderation update:

We have strong reason to believe that Torres (philosophytorres) used a second account to violate their earlier ban. We feel that this means that we cannot trust Torres to follow this forum’s norms, and are banning them for the next 20 years (until 1 October 2042).

Moderation update: A new user, Bernd Clemens Huber, recently posted a first post ("All or Nothing: Ethics on Cosmic Scale, Outer Space Treaty, Directed Panspermia, Forwards-Contamination, Technology Assessment, Planetary Protection, (and Fermi's Paradox)") that was a bit hard to make sense of. We hadn't approved the post over the weekend and hadn't processed it yet when the Forum team got an angry and aggressive email today from the user in question, calling the team "dipshits" (and providing a definition of the word) for waiting throughout the weekend.

If the user disagrees with our characterization of the email, they can email us to give permission for us to share the whole thing.

We have decided that this is not a promising start to the user's interactions on the Forum, and have banned them indefinitely. Please let us know if you have concerns, and as a reminder, here are the Forum's norms.

We’re issuing [Edit: identifying information redacted] a two-month ban for using multiple accounts to vote on the same posts and comments, and in one instance for commenting in a thread pretending to be two different users. [Edit: the user had a total of 13 double-votes. Most were far apart and are likely accidental; two were upvotes close together on others' posts (which they claim were accidental as well); and two were cases of deliberate self-upvoting from alternative accounts.]

This is against the Forum norms around using multiple accounts. Votes are really important for the Forum: they provide feedback to authors and signal to readers what other users found most valuable, so we need to be particularly strict in discouraging this kind of vote manipulation.

A note on timing: the comment mentioned above is 7 months old but went unnoticed at the time; a report about it came in last week and triggered this investigation.

If [Edit: redacted] thinks that this is not right, he can appeal. As a reminder, bans affect the user, not the account.

[Edit: We have retroactively decided to redact the user's name from this early message, and are currently rethinking our policies on the mat...

Moderation update: We have banned "Richard TK" for 6 months for using a duplicate account to double-vote on the same posts and comments. We’re also banning another account (Anin, now deactivated), which seems to have been used by that same user or by others to amplify those same votes. Please remember that voting with multiple accounts on the same post or comment is very much against Forum norms.

(Please note that this is separate from the incident described here)

Moderation update:

We have strong reason to believe that Charles He used multiple new accounts to violate his earlier 6-month-long ban. We feel that this means that we cannot trust Charles He to follow this forum’s norms, and are banning him from the Forum for the next 10 years (until December 20, 2032).

We have already issued temporary suspensions to several suspected duplicate accounts, including one which violated norms about rudeness and was flagged to us by multiple users. We will be extending the bans for each of these accounts to mirror Charles’s 10-year ban, but are giving the users an opportunity to message us if we have made any of those temporary suspensions in error (and have already reached out to them). While we aren’t >99% certain about any single account, we’re around 99% confident that at least one of these is Charles He.

You can find more on our rules for pseudonymity and multiple accounts here. If you have any questions or concerns about this, please also feel free to reach out to us at forum-moderation@effectivealtruism.org.

the relatively small crime of evading a previous ban

I don't think repeatedly evading moderator bans is a "relatively small crime". If Forum moderation is to mean anything at all, it has to be consistently enforced, and if someone just decides that moderation doesn't apply to them, they shouldn't be allowed to post or comment on the Forum.

Charles only got to his 6 month ban via a series of escalating minor bans, most of which I agreed with. I think he got a lot of slack in his behaviour because he sometimes provided significant value, but sometimes (with insufficient infrequency) behaved in ways that were seriously out of kilter with the goal of a healthy Forum.

I personally think the 10-year thing is kind of silly and he should just have been banned indefinitely at this point, then maybe have the ban reviewed in a little while. But it's clear he's been systematically violating Forum policies in a way that requires serious action.

The post on forum norms says a picture of geese all flying in formation and in one direction is the desirable state of the forum; I disagree that this is desirable.

I have no idea if this was intentional on the part of the moderators, but they aren't all flying in the same direction. ;-)

It's kind of jarring to read that someone has been banned for "violating a norm" - that word to me implies that they're informal agreements between the community. Why not call them "rules"?

pinkfrog (and their associated account) has been banned for 1 month, because they voted multiple times on the same content (with two accounts), including upvoting pinkfrog's comments with their other account. To be a bit more specific, this happened on one day, and there were 12 cases of double-voting in total (which we’ll remove). This is against our Forum norms on voting and using multiple accounts.

As a reminder, bans affect the user, not the account(s).

If anyone has questions or concerns, please feel free to reach out, and if you think we made a mistake here, you can appeal the decision.

Multiple people on the moderation team have conflicts of interest with pinkfrog, so I wanted to clarify our process for resolving this incident. We uncovered the norm violation after an investigation into suspicious voting patterns, and only revealed the user’s identity to part of the team. The moderators who made decisions about how to proceed aren't aware of pinkfrog's real identity (they only saw anonymized information).

Have the moderators come to a view on identifying information? Is pinkfrog the account with higher karma or more forum activity?

In other cases the identity has been revealed to various degrees:

LukeDing

JamesS

Richard TK (noting that an alt account in this case, Anin, was also named)

[Redacted]

Charles He

philosophytorres (but identified as "Torres" in the moderator post)

It seems inconsistent to have this info public for some, and redacted for others. I do think it is good public service to have this information public, but am primarily pushing here for consistency and some more visibility around existing decisions.

Agree. It seems potentially pretty damaging to people’s reputations to make this information public (and attached to their names); that strikes me as a much bigger penalty than the bans. There should, at a minimum, be a consistent standard, and I’m inclined to think that standard should be having a high bar for releasing identifying information.

I think we should hesitate to protect people from reputational damage caused by people posting true information about them. Perhaps there's a case to be made when the information is cherry-picked or biased, or there's no opportunity to hear a fair response. But goodness, if we've learned anything from the last 18 months I hope it would include that sharing information about bad behaviour is sometimes a public good.

Strong +1 to Richard, this seems a clear incorrect moderation call and I encourage you to reverse it.

I'm personally very strongly opposed to killing people because they eat meat, and the general ethos behind that. I don't feel in the slightest offended or bothered by that post, it's just one in a string of hypothetical questions, and it clearly is not intended as a call to action or to encourage action.

If the EA Forum isn't somewhere where you can ask a perfectly legitimate hypothetical question like that, what are we even doing here?

The moderators have reviewed the decision to ban @dstudiocode after users appealed the decision. Tl;dr: We are revoking the ban, and are instead rate-limiting dstudiocode and warning them to avoid posting content that could be perceived as advocating for major harm or illegal activities. The rate limit is due to dstudiocode’s pattern of engagement on the Forum, not simply because of their most recent post. For more on this, see the “third consideration” listed below.

More details:

Three moderators,[1] none of whom was involved in the original decision to ban dstudiocode, discussed this case.

The first consideration was “Does the cited norm make sense?” For reference, the norm cited in the original ban decision was “Materials advocating major harm or illegal activities, or materials that may be easily perceived as such” (under “What we discourage (and may delete or edit out)” in our “Guide to norms on the Forum”). The panel of three unanimously agreed that having some kind of Forum norm in this vein makes sense.

The second consideration was “Does the post that triggered the ban actually break the cited norm?” For reference, the post ended with the question “sho...

I appreciate the thought that went into this. I also think that using rate-limits as a tool, instead of bans, is in general a good idea. I continue to strongly disagree with the decisions on a few points:

- I still think including the "materials that may be easily perceived as such" clause has a chilling effect.

- I also remember someone's comment that the things you're calling "norms" are actually rules, and it's a little disingenuous to not call them that; I continue to agree with this.

- The fact that you're not even willing to quote the parts of the post that were objectionable feels like an indication of a mindset that I really disagree with. It's like... treating words as inherently dangerous? Not thinking at all about the use-mention distinction? I mean, here's a quote from the Hamas charter: "There is no solution for the Palestinian question except through Jihad." Clearly this is way way more of an incitement to violence than any quote of dstudiocode's, which you're apparently not willing to quote. (I am deliberately not expressing any opinion about whether the Hamas quote is correct; I'm just quoting them.) What's the difference?

- "They see the fact that it is “just” a philosophical

On point 4:

I'm pretty sure we could come up with various individuals and groups of people that some users of this forum would prefer not to exist. There's no clear and unbiased way to decide which of those individuals and groups could be the target of "philosophical questions" about the desirability of murdering them and which could not. Unless we're going to allow the question as applied to any individual or group (which I think is untenable for numerous reasons), the line has to be drawn somewhere. "Would it be ethical to get rid of this meddlesome priest?" should be suspendable or worse (except that the meddlesome priest in question has been dead for over eight hundred years).

And I think drawing the line at "we're not going to allow hypotheticals about murdering discernible people"[1] is better (and poses less risk of viewpoint suppression) than expecting the mods to somehow devise a rule for when that content will be allowed and consistently apply it. I think the effect of a bright-line no-murder-talk rule on expression of ideas is modest because (1) posters can get much of the same result by posing non-violent scenarios (e.g., leaving someone to drown in a pond is neither an a...

Ty for the reply; a jumble of responses below.

I think there are better places to have these often awkward, fraught conversations.

You are literally talking about the sort of conversations that created EA. If people don't have these conversations on the forum (the single best way to create common knowledge in the EA community), then it will be much harder to course-correct places where fundamental ideas are mistaken. I think your comment proceeds from the implicit assumption that we're broadly right about stuff, and mostly just need to keep our heads down and do the work. I personally think that a version of EA that doesn't have the ability to course-correct in big ways would be net negative for the world. In general it is not possible to e.g. identify ongoing moral catastrophes when you're optimizing your main venue of conversations for avoiding seeming weird.

I agree with you that the quote from the Hamas charter is more dangerous, and think we shouldn't be publishing or discussing that on the forum either.

If you're not able to talk about evil people and their ideologies, then you will not be able to account for them in reasoning about how to steer the world. I think EA is already far ...

Narrowing in even further on the example you gave, as an illustration: I just had an uncomfortable conversation about age of consent laws literally yesterday with an old friend of mine. Specifically, my friend was advocating that the most important driver of crime is poverty, and I was arguing that it's cultural acceptance of crime. I pointed to age of consent laws varying widely across different countries as evidence that there are some cultures which accept behavior that most westerners think of as deeply immoral (and indeed criminal).

Picturing some responses you might give to this:

- That's not the sort of uncomfortable claim you're worried about
  - But many possible continuations of this conversation would in fact have gotten into more controversial territory. E.g. maybe a cultural relativist would defend those other countries having lower age of consent laws. I find cultural relativism kinda crazy (for this and related reasons) but it's a pretty mainstream position.
- I could have made the point in more sensitive ways
  - Maybe? But the whole point of the conversation was about ways in which some cultures are better than others. This is inherently going to be a sensitive claim, and it's hard

Do you have an example of an early EA conversation that you think was really important, that helped come up with core EA tenets, and that might be frowned upon or censored on the forum now? I'm still super dubious about whether leaving out a small number of specific topics really leaves much value on the table.

And I really think conversations can be had in more sensitive ways. In the case of the original banned post, just as good a philosophical conversation could be had without explicitly talking about killing people. The conversation was already being had on another thread, "the meat eater problem".

And as a sidebar, yeah, I wouldn't have any issue with the conversation above myself, because we just have to practically discuss that with donors and internally when providing health care and getting confronted with tricky situations. Also (again sidebar), it's interesting that age of marriage/consent conversations can be where classic left-wing cultural relativism and gender safeguarding collide and don't know which way to swing. We've had to ask that question practically in our health centers, to decide who to give family planning to and when to think of referring to police etc. Super tricky.

My point is not that the current EA forum would censor topics that were actually important early EA conversations, because EAs have now been selected for being willing to discuss those topics. My point is that the current forum might censor topics that would be important course-corrections, just as if the rest of society had been moderating early EA conversations, those conversations might have lost important contributions like impartiality between species (controversial: you're saying human lives don't matter very much!), the ineffectiveness of development aid (controversial: you're attacking powerful organizations!), transhumanism (controversial, according to the people who say it's basically eugenics), etc.

Re "conversations can be had in more sensitive ways", I mostly disagree, because of the considerations laid out here: the people who are good at discussing topics sensitively are mostly not the ones who are good at coming up with important novel ideas.

For example, it seems plausible that genetic engineering for human intelligence enhancement is an important and highly neglected intervention. But you had to be pretty disagreeable to bring it into the public conversation a few years ago (I think it's now a bit more mainstream).

This moderation policy seems absurd. The post in question was clearly asking purely hypothetical questions, and wasn't even advocating for any particular answer to the question. May as well ban users for asking whether it's moral to push a man off a bridge to stop a trolley, or ban Peter Singer for his thought experiments about infanticide.

Perhaps dstudiocode has misbehaved in other ways, but this announcement focuses on something that should be clearly within the bounds of acceptable discourse. (In particular, the standard of "content that could be interpreted as X" is a very censorious one, since you now need to cater to a wide range of possible interpretations.)

Another comment from me:

I don’t like my mod message, and I apologize for it. I was rushed and used some templated language that I knew damn well at the time that I wasn’t excited about putting my name behind. I nevertheless did and bear the responsibility.

That’s all from me for now. The mods who weren’t involved in the original decision will come in and reconsider the ban, pursuant to the appeal.

If you voted in the Donation Election, how long did it take you? (What did you spend the most time on?)

I'd be really grateful for quick notes. (You can also private message me if you prefer.)