At EA Global Boston last year I gave a talk on how we're in the third wave of EA/AI safety, and how we should approach that. This post contains a (lightly-edited) transcript and slides. Note that in the talk I mention a set of bounties I'll put up for answers to various questions—I still plan to do that, but am taking some more time beforehand to get the questions right.

Transcript and slides

Hey everyone. Thanks for coming. You're probably looking at this slide and thinking: "how to change a changing world? That's a weird title for a talk. Probably it's a kind of weird talk." And the answer is, yes, it is a kind of weird talk.

So what's up with this talk? Firstly, I am trying to do something a little unusual here. It's quite abstract. It's quite disagreeable as a talk. I'm trying to poke at the things that maybe we haven't been thinking enough about as a movement or as a community. I'm trying to do a "steering the ship" type thing for EA.

I don't want to make this too inside baseball or anything. I think it's going to be useful even if people don't have that much EA background. But this seems like the right place to step back and take stock and think: what's happened so far? What's gone well? What's gone wrong? What should we be doing?

So the structure of the talk is:

- Three waves of EA and AI safety. (I mix these together because I think they are pretty intertwined, at least intellectually. I know a lot of people here work in other cause areas. Hopefully this will still be insightful for you guys.)

- Sociopolitical thinking and AI. What are the really big frames that we need as a community to apply in order to understand the way that the world is going to go and how to actually impact it over the next couple of decades?

There's a thing that sometimes happens with talks like this—maybe status regulation is the word. In order to give a really abstract big picture talk, you need to be super credible because otherwise it's very aspirational. I'm just going to say a bunch of stuff where you might be like, that's crazy. Who's this guy to think about this? It's not really his job or anything. I mean, it kind of is my job, and part of it is my own framework, but a lot of it is reporting back from certain front lines. I get to talk to a bunch of people who are shaping the future or living in the future in a way that most of the world isn't. So part of what I'm doing here is trying to integrate those ideas from a bunch of people that I get to talk to and lay them out. Your mileage may vary.

Three waves of EA and AI safety

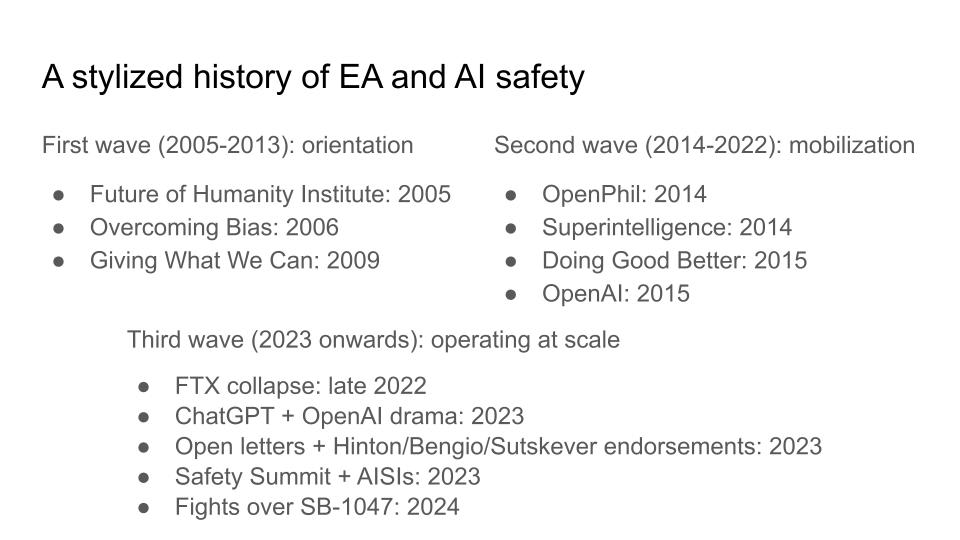

Let's start with a very stylized history of EA and AI safety. I'm going to talk about it in three waves. Wave number one I’ll say, a little arbitrarily, is 2005 to 2013. That's the orientation wave. This wave was basically about figuring out what was up with the world, introducing a bunch of big picture ideas. The Future of Humanity Institute, Overcoming Bias and LessWrong were primarily venues for laying out worldviews and ideas, and that was intertwined with Giving What We Can and the formation of the Oxford EA scene. I first found out about EA from a blog post on LessWrong back in 2010 or 2011 about how you should give effectively and then give emotionally separately.

So that was one wave. The next wave I'm going to call mobilization, and that's basically when things start getting real. People start trying to actually have big impacts on the world. So you have OpenPhil being spun out. You have a couple of books that are released around this time: Superintelligence, Doing Good Better. You have the founding of OpenAI, and also around this time the formation of the DeepMind AGI safety team.

So at this point, there's a lot of energy being pushed towards: here's a set of ideas that matter. Let's start pushing towards these. I'm more familiar with this in the context of AI. I think a roughly analogous thing was happening in other cause areas as well. Maybe the dates are a little bit different.

Then I think the third wave is when things start mattering really at scale. So you have a point at which EA and AI safety started influencing the world as a whole on a macro level. The FTX collapse was an event with national and international implications, one of the biggest events of its kind. You had the launch of ChatGPT and various OpenAI dramas that transfixed the world. You had open letters about AI safety, endorsements by some of the leading figures in AI, safety summits, AI safety institutes, all of that stuff. It's no longer the case that EA and AI safety are just one movement that's just doing stuff off in its corner. This stuff is really playing out at scale.

To some extent this is true for other aspects of EA as well. A lot of the key intellectual figures that are most prominent in American politics, people like Ezra Klein and Nate Silver and so on, really have been influenced by the worldview that was developed in this first wave and then mobilized in the second wave.

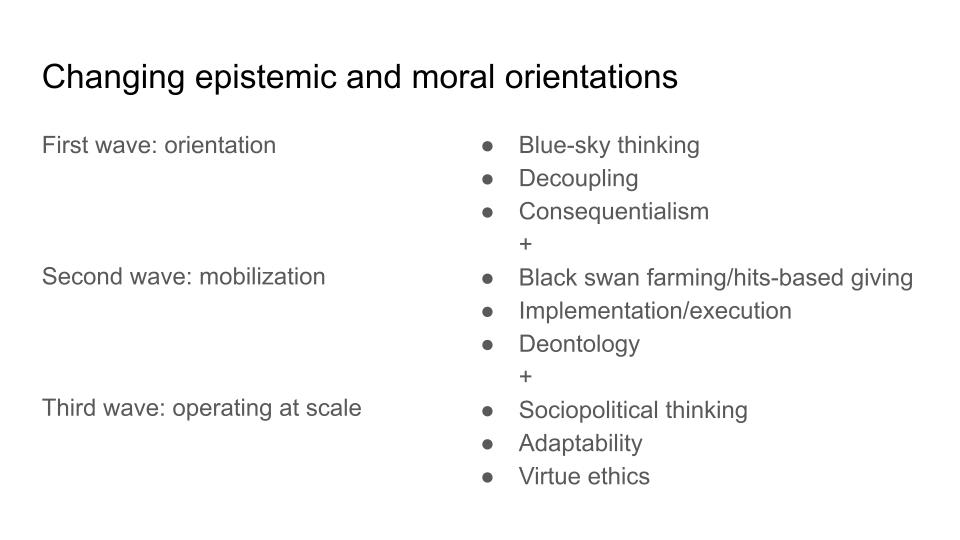

I think you need pretty different skills in order to do each of these types of things well. We’ve seen an evolution of what orientation you need to have in order to succeed at these types of waves, which to various extents we have or haven't had.

So for the first wave, it's thinking oriented. You need to be really willing to look beyond the frontiers of what other people are thinking about. You need to be high-decoupling. You're willing to transgress on existing norms or existing assumptions that people take for granted. And you want to be consequentialist. You want to think about what is the biggest impact I can have? What's the thing that people are missing? How can I scale?

As you start getting into the second wave, you need to add on another set of skills and orientations. One term for this in the startup context is black swan farming. Another term that OpenPhil has used is hits-based giving, the idea that you should really be optimizing for getting a few massive successes, and that's where most of your impact comes from. In order to do those massive successes well, you need the skill of implementation and execution. That's a pretty different thing from sitting at an institute in Oxford thinking about the far future.

To do this well, I think the other thing you need is deontology. Because when you start actually doing stuff—when you start trying to scale a company, when you start trying to influence philanthropy—it's easy to see ways in which maybe you could lie a little bit. Maybe you can be a bit manipulative. In extreme cases, like FTX, it'd be totally deceitful. But even for people operating at much smaller scales, I think deontology here was one of the guiding principles that needed to be applied and in some cases was and in some cases wasn't.

Now we're in the third wave. What do we need for the third wave? I think it's a couple of things. Firstly, I think we can no longer think in terms of black swan farming or hits-based giving. And the reason is that when you are operating at these very large scales, it's no longer enough to focus on having a big impact. You really need to make sure the impact is positive.

If you're trying to found a company, you want the company to grow as fast as possible. The downside is the company goes bust. That's not so bad. If you're trying to shape AI regulation, then you can have a massive positive impact. You can also have a massive negative impact. There's really a different mindset and set of skills required.

You need adaptability because on the timeframe that you might build a company or start a startup or start a charity, you can expect the rest of the world to remain fixed. But on the timeframe that you want to have a major political movement, on the timeframe that you want to reorient the U.S. government's approach to AI, a lot of stuff is coming at you. The whole world is, in some sense, weighing in on a lot of the interests that have historically been EA's interests.

And then thirdly, I think you want virtue ethics. The reason I say this is because when you are operating at scale, deontology just isn't enough to stop you from being a bad person. If you're operating a company, as long as you don't commit fraud, as long as you don't step outside the clear lines of what's legal and what's moral, then people kind of expect that maybe you use shady business tactics or something.

But when you're a politician and you're being shady you can screw a lot of stuff up. When you're trying to do politics, and when you're in domains that have very few clear, bright lines, it's very hard to say you crossed a line here because it's all politics. The way to do this in a high integrity way that leads to other people wanting to work with you is really to focus on doing the right things for the right reasons. I think that's the thing that we need to have in order to operate at scale in this world.

So what does it look like to fuck up the third wave? The next couple of slides are deliberately a little provocative. You should take them 80% of how strongly I say them, and in general, maybe you should take a lot of the stuff I say 80% of how seriously I say it, because I'm very good at projecting confidence.

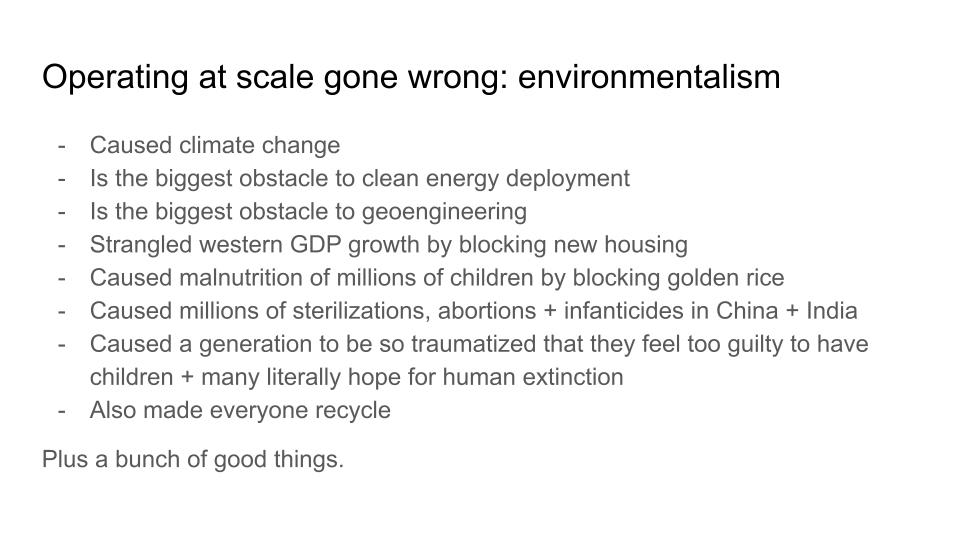

But I claim that one of the examples where operating at scale has just totally gone to shit is the environmentalist movement. I would somewhat controversially claim that by blocking nuclear power, environmentalism caused climate change. Via the Environmental Protection Act, environmentalism caused the biggest obstacle to clean energy deployment across America. Via opposition to geoengineering, it's one of the biggest obstacles to actually fixing climate change. The lack of growth of new housing in Western countries is one of the biggest problems that's holding back Western GDP growth and the types of innovation that you really want in order to protect the environment.

I can just keep going down here. I think the overpopulation movement really had dramatically bad consequences on a lot of the developing world. The blocking of golden rice itself was just an absolute catastrophe.

The point here is not to rag on environmentalism. The point is: here's a thing that sounds vaguely good and kind of fuzzy and everyone thinks it's pretty reasonable. There are all these intuitions that seem nice. And when you operate at scale and you're not being careful, you don't have the types of virtues or skills that I laid out in the last slide, you just really fuck a lot of stuff up. (I put recycling on there because I hate recycling. Honestly, it's more a symbol than anything else.)

I want to emphasize that there is a bunch of good stuff. I think environmentalism channeled a lot of money towards the development of solar. That was great. But if you look at the scale of how badly you can screw these things up when you're taking a mindset that is not adapted to operating at the scale of a global economy or global geopolitics, it's just staggering, really. I think a lot of these things here are just absolute moral catastrophes that we haven't really reckoned with.

Feel free to dispute this in office hours, for example, but take it seriously. Maybe I want to walk back these claims 20% or something, but I do want to point at the phenomenon.

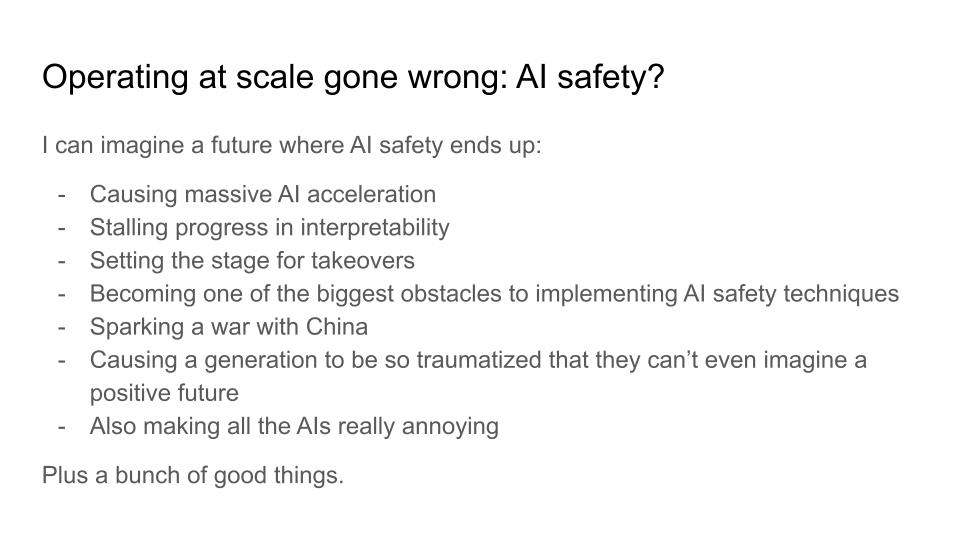

And then I want to say: okay, what if that happens for us? I can kind of see that future. I can kind of picture a future where AI safety causes a bunch of harms that are analogous to this type of thing:

- Causing massive AI acceleration (insofar as you think that's a harm).

- If you block open source, plausibly it stalls a bunch of progress on interpretability.

- If you centralize power over AI, then maybe that sets the stage itself for AI takeover.

- If you regulate AI companies in the wrong ways, then maybe you become one of the biggest obstacles to implementing AI safety techniques.

- If you pass a bunch of safety legislation, maybe it actually just adds on too much bureaucracy. Europe might just not be a player in AI because they just can't have startups forming, partly because of things like the EU AI Act.

- The relationship of the U.S. and China, it's a big deal. I don't know what effect AI safety is going to have on that, but it's not clearly positive.

- Making AI really annoying—that sucks.

The thing I want to say here is I really do feel a strong sense of: man, I love the AI safety community. I think it's one of the groups of people who have organized to actually pursue the truth and actually push towards good stuff in the world more than almost anyone else. It's coalesced in a way that I feel a deep sense of affection for. And part of what makes me affectionate is precisely the ability to look at stuff like this and be like: okay, what do we do better?

All of these things are operating at such scale, what do we actually need to do in order to not accidentally screw up as badly as environmentalism (or your example of choice if you don't believe that particular one)?

Note: I plan to post these bounties separately, but am taking some more time beforehand to get the questions right.

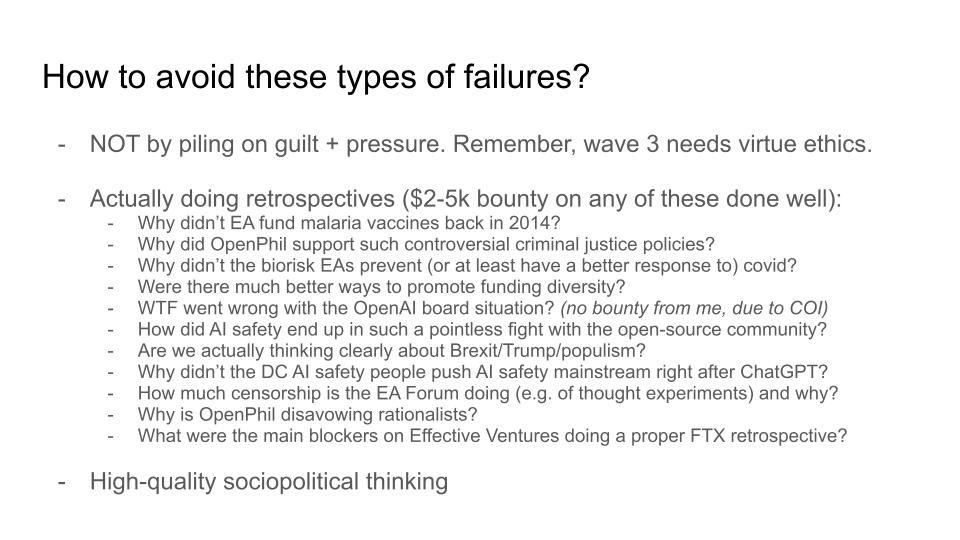

So do I have answers? Kind of. These are just tentative answers and I'll do a bit more object-level thinking later. This is the more meta element of the talk. There's one response: oh, boy, we better feel really guilty whenever we fuck up and apply a lot of pressure to make sure everyone's optimizing really hard for exactly the good things and exclude anyone who plausibly is gonna skew things in the wrong direction.

And I think this isn't quite right. Maybe that works at a startup. Maybe it works at a charity. I don't think it works in Wave 3, because of the arguments I gave before. Wave 3 needs virtue ethics. You just don't want people who are guilt-ridden and feeling a strong sense of duty and heroic responsibility to be in charge of very sensitive stuff. There's just a lot of ways that that can go badly. So I want to avoid that trap.

I want to have a more grounded view. These are hard problems to solve. And also, we have a bunch of really talented people who are pretty good at solving problems. We just need to look at them and start to think things through.

Here's a list of questions that I often ask myself. I don't really have time to answer a lot of these questions: hey, here's a thing that we could have done better, have we actually written up the lessons from that? Can we actually learn from it as a community?

I'm going to put up a post on this about how I'll offer bounties for people who write these up well, maybe $2,000 to $5,000-ish on these questions. I won't go through them in detail, but there's just a lot of stuff here. I feel like I want to learn from experience, because there's a lot of experience that the community has aggregated across itself. And I think there's a lot of insight to be gained—not in a beating ourselves up way, not in a "man, we really screwed up the malaria vaccines back in 2014" way—but by asking: yeah, what was going on there? Malaria was kind of our big thing. And then malaria vaccines turned out to be a big deal. Was there some missing mental motion that needed to happen there? I don't know. I wasn't really thinking about it at the time. Maybe somebody was. Maybe somebody can tell me later. And the same thing for all of these others.

The other thing I really want is high-quality sociopolitical thinking, because when you are operating at this large scale, you really need to be thinking about the levers that are moving the world. Not just the local levers, but what's actually going on in the world. What are the trends that are shaping the next decade or two decades or half century or century? EA is really good in some ways at thinking about the future, and in other ways, tends to abstract away a little too much. We think about the long-term future of AI, but the next 20 years of politics, somehow doesn't feel as relevant. So I'm going to do some sociopolitical thinking in the second half of this talk.

Sociopolitical thinking and AI

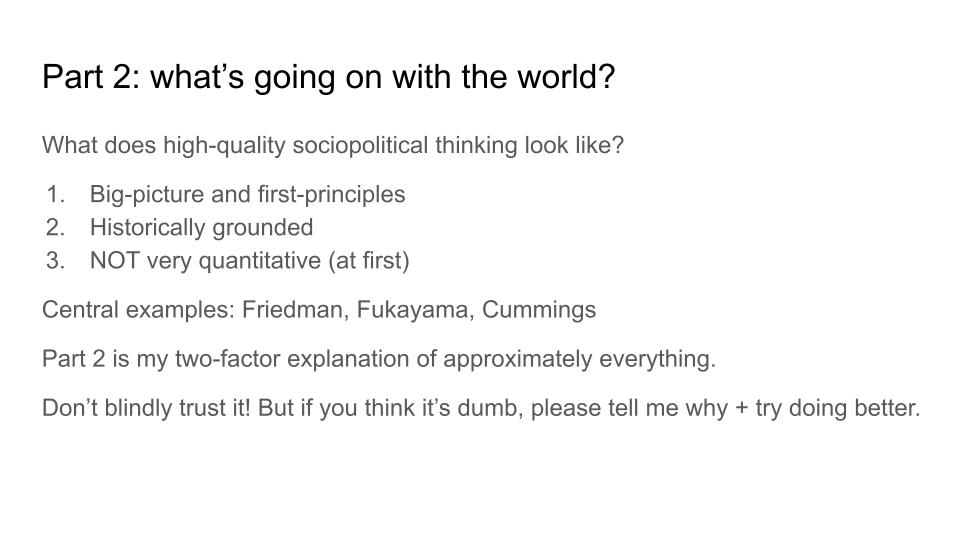

So here's part two of the talk: what's going on with the world? High-quality sociopolitical thinking, which is the sort of thing that can ground these large-scale Wave 3 actions, should be big picture, should be first principles, historically grounded insofar as you can. It’s pretty different from quantitative-type thinking that a lot of EAs are pretty good at. At first, the thing you're doing is trying to identify deep levers in the world, these sort of structural features of the world that other people haven't really noticed.

I will flag a couple of people who I think are really good at this. I think Friedman, talking about “The world is flat”; Fukuyama, talking about “The end of history”. Maybe they're wrong, maybe they're right, but it's a type of thinking that I want to see much more of. Dominic Cummings: whether you like him or you hate him, he was thinking about Brexit just at a deeper level than basically anyone else in the world. Everyone else was doing the economic debate about Brexit, and he was thinking about the sociocultural forces that shape nations on the time frame of centuries or decades. Maybe he screwed it up, maybe he didn't, but at the very least you want to engage with that type of thinking in order to actually understand how radical change is going to play out over the next few decades.

I am going to, in the rest of this talk, just tell you my two-factor model of what's going on with the world. And this is the bit where I'm definitely going a long way outside my domain of expertise; this is definitely just way bigger than it is at all plausible for a two-factor model to try and do. So don't believe what I'm saying here, but I want to have people tell me that I'm being an idiot about this. I want you to do better than this. I want to engage with alternative conceptions of how to make sense of the world as a whole in such a way that we can then slot in a bunch of more concrete interventions like AI safety interventions or global health interventions or whatever.

Okay, here's my two-factor model. I want to understand the world in terms of two things that are happening right now:

- Technology dramatically increases returns to talent.

- Bureaucracy is eating the world.

In order to understand these two drivers of change, you can't just look at history because we are in the middle of these things playing out. These are effects that are live occurrences and we're in some sense at the peak of both of them. What do I mean by these?

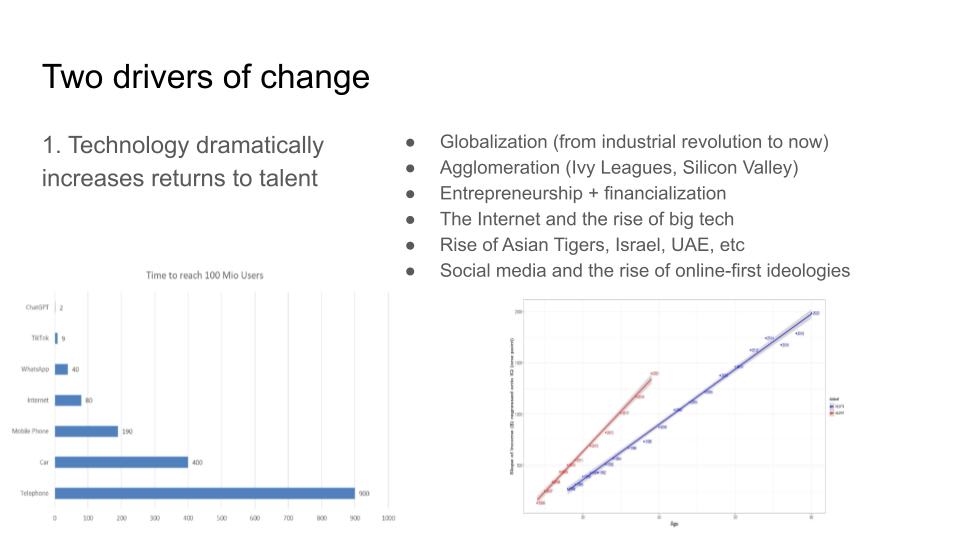

Number one, technology dramatically increases returns to talent. You should be thinking in terms of centuries. You can think about globalization from the Industrial Revolution, from colonialism. It used to be the case that no matter how good you were—no matter how good you were at inventing technology, no matter how good you were at politics—you could only affect your country, or as far as your logistics and supply chains reached.

And then it turned out that innovators in Britain, for example, could affect the rest of the world, for good or bad. This has played out gradually over time. It used to take a long time for exceptional talent to influence the world. It now takes much less time. So this graph on the left here is a chart of how long it took to get to 100 million users for different innovations. The telephone is at the bottom. That took a long time. I think it's 900 months down there. The car, the mobile phone, the internet, different social media. At the top, you see this tiny little thing that's ChatGPT. It's basically invisible how quickly it got to 100 million users. So if you can do something like that, if you can invent the telephone, if you can invent ChatGPT or whatever, the returns to being good enough to do that accumulate much faster and at much larger scales than they used to.

This is what you get with the agglomeration of talent in the Ivy Leagues and Silicon Valley. I think Silicon Valley right now is maybe the greatest agglomeration of talent in the history of humanity. That’s a bit of a self-serving claim because I live there, but I think it's just also true. And it's a weird perspective to have because something like this has never happened before. It has never been the case that you have a pool of so many people from across the world to draw on to get a bunch of talent in one place. In some sense it makes a lot of sense that this effect is driving a lot of unusual and unprecedented sociopolitical dynamics, as I'll talk about shortly.

The other graph here is an interesting one. It's the financial returns to IQ over two cohorts. The blue line is the older cohort, it's from 50 years ago or something. It's got some slope. And then the red line is a newer cohort. And that's a much steeper slope. What that means is basically for every extra IQ point you have, in the new cohort you get about twice as much money on average for that IQ point. That's a particularly interesting illustration of the broader base of technology increasing returns to talent.

Then we can quibble about whether or not it's precisely technology driving that. But I think it's intuitively compelling that if you’re an engineer at Google and you can make one change that is immediately rolled out to a billion people, of course being better at your job is gonna have these huge returns. Obviously the tech itself is unprecedented, but I think one way in which we don't think about it being unprecedented is that the ability to scale talent is just absurd. (I won’t say specific anecdotes of how much people are being headhunted for, but it's kind of crazy.)

Even people being in this room is an example of this technology dramatically increasing returns to talent. There's a certain ability to read ideas on the internet and then engage with them and then build a global community around them that just wouldn't have been possible even 50 years ago. It would have just been way harder to have a community that spans England and the East Coast and the West Coast and get people together in the same room. It's nuts, and now you can actually do that.

I should say the best example of this, which is obviously just crazy good entrepreneurs. If you were just the best in the world at the thing you do, then now you can make $100 billion. Not reliably, but Elon started a lot of companies and he just keeps succeeding because he is the best in the world at entrepreneurship. He couldn't previously have made $100 billion from that, and now he can. And the same is true for other people in this broad space. And so I should especially highlight that the returns are extreme at the extreme outlier ends of the talent scale.

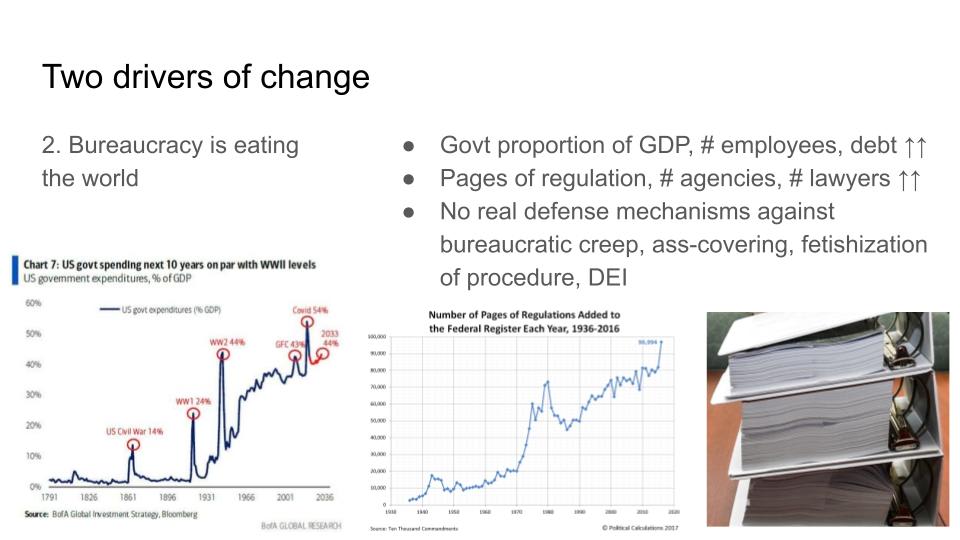

Second driver: bureaucracy is eating the world. You might say: sure there are all these returns to talent, but people used to get a lot of stuff done and now they can't. So the counterpoint to this, or the opposing force, is that there's just way more bureaucracy than there used to be. And this is true of the spending of governments across the world—this is the U.S. government spending as a proportion of GDP, and you can see these ratchet effects where it goes up and it just never goes back down again. And now it's at this crazy level of what, 40% or something?

So if you just look at the number of employees of the government [EDIT: this one is incorrect], the amount of debt that Western governments have… This graph here of pages of regulation, that's not even the total amount of regulation, that's the amount of regulation added per year. So if that's linearly increasing, then the actual amount of regulation is quadratically increasing. It's kind of nuts. The picture here is just one environmental review for one not even particularly important policy—I think it was congestion pricing—it took three years and then ultimately got vetoed anyway.
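To spell out that step as a rough sketch: suppose the number of pages added in year $t$ grows linearly, $r(t) = a + bt$, where $a$ and $b$ are illustrative constants (assumptions for this sketch, not values read off the graph), and suppose nothing is ever repealed. Then the total stock of regulation $R(T)$ after $T$ years is the accumulated sum of the yearly additions:

```latex
R(T) = R_0 + \int_0^T (a + b\,t)\,dt = R_0 + aT + \tfrac{b}{2}\,T^2
```

The $T^2$ term is what "quadratically increasing" refers to. If some regulation is removed each year, the total would grow more slowly than this sketch suggests.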

Libertarians have always talked about “there's too much regulation”, but I think it's underrated how this is not just a fact about history; it's a thing we are living through: the explosion of bureaucracy eating the world. The world does not have defense mechanisms against this kind of bureaucratic creep. Bureaucracy is optimized for minimizing how much you can blame any individual person. So there's never a point at which the bureaucracy is able to take a stand or do the sensible policy or push back even against stuff that's blatantly illegal, like a bunch of the DEI stuff at American universities. It’s just really hard to draw a line and be like “hey, we shouldn't do this illegal thing”. Within a bureaucracy, nobody does it. And you have multitudes of examples.

So these are two very powerful forces that I think of as shaping the world in a way that they didn't 20 or 40 or 60 years ago.

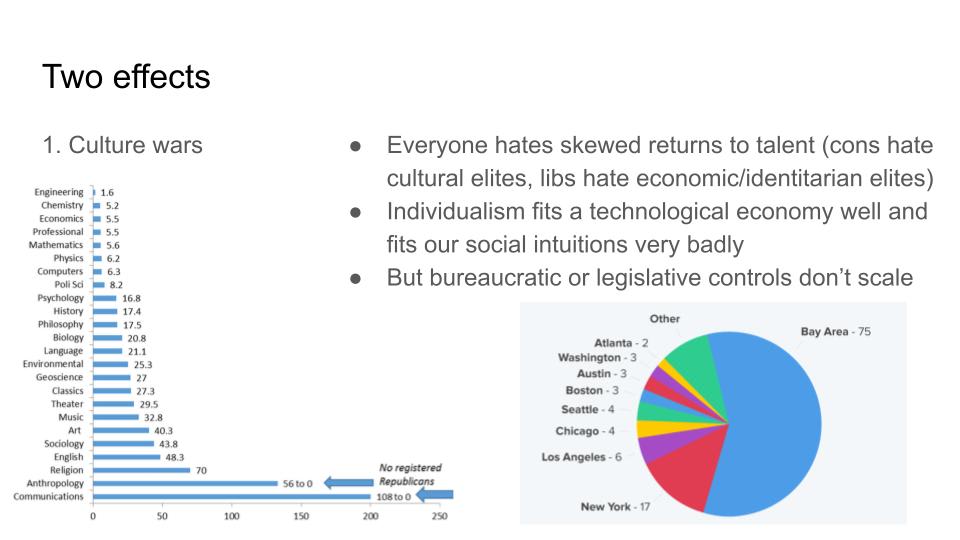

This will get back to more central EA topics eventually, but I'm trying to flesh out some of the ways in which I apply this lens or paradigm. One of them is culture wars. The thing about culture wars is that everyone really hates the extent to which the returns to talent are skewed towards outliers, but they hate it in different ways. Liberals hate economic and identitarian elites—the fact that one person can accumulate billions of dollars or that there are certain groups that end up outperforming and getting skewed returns. On the left, it's a visceral egalitarian force that makes them hate this.

On the right, I think a lot of conservatives and a lot of populist backlash are driven by the fact that the returns to cultural elitism are really high. There's just the small group of people in most Western countries—city elites, urban elites, coastal elites, whatever you want to call them—that can shape the culture of the entire country. And that's a pretty novel phenomenon. It didn't used to be the case that newspapers were so easily spread across literally the entire country—that the entirety of Britain is run so directly from London—that you have all the centralization in these cultural urban elites.

And one way you can think about the culture war is both sides are trying to push back on these dramatic returns to talent and both are just failing. The sort of controls that you might try and apply—the ways you might try and apply bureaucracy or apply legislation to even the playing field—just don’t really work. On both sides, people are in some sense helpless to push back on this stuff. They’re trying, they're really trying. But this is one of the key drivers of a lot of the cultural conflicts that we're seeing.

In the graphs here, on the left we have the skew in political affiliation of university professors by different fields. Right at the top, I think that's like five to one, so even the least skewed are still really Democrat skewed. And then at the bottom, it's incredibly skewed to the point where there's no Republicans. This is the type of skewed returns to talent or skewed concentration of power that the right wing tends to hate.

And then on the right, you have the proportion of startups distributed across different cities in America. They do tend to agglomerate really hard, and there's just not that much you can do about it. Just because the returns to being in the number one best place in the world for something are just so high these days.

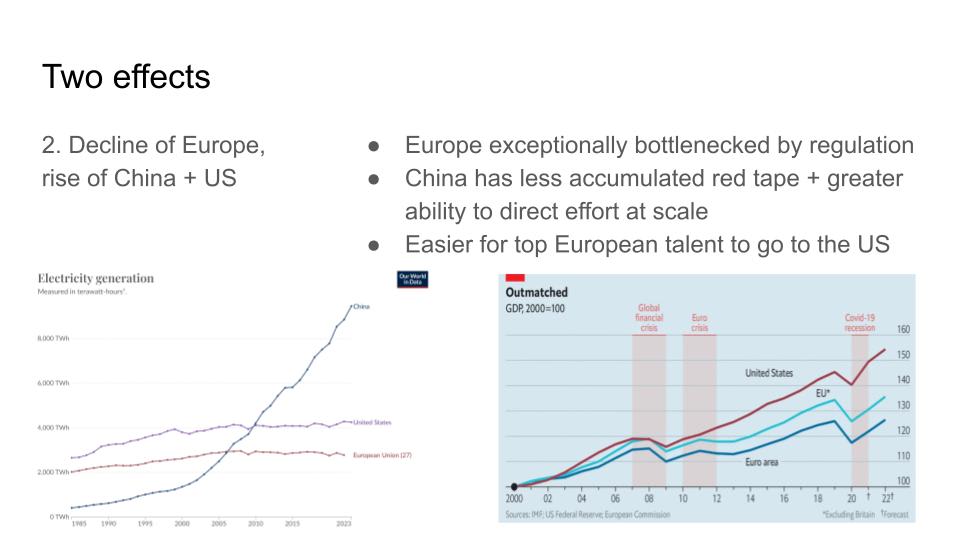

Second effect: I'll just talk through this very briefly, but I do think it's underrated how much the U.S. has overtaken the EU over the last decade or two decades in particular. You can see on the right, you have this crazy graph of U.S. growth being twice as high as European growth over the last 20 years. And I do think that it's basically down to regulation. I think the U.S. has a much freer regulatory environment, much more room to maneuver. In some countries in Europe, it's effectively illegal to found a startup because you have to jump through so many hoops, and the system is really not set up for that. In the U.S. at the very least, you have arbitrage between different states.

Then you have more fundamental freedoms as well. This is less a question of tax rates and more just a question of how much bureaucracy you have to deal with. But then conversely: why does the U.S. not have that much manufacturing? Why is it falling behind China in a bunch of ways? It's just really hard to do a lot of stuff when your energy policy is held back by radical bureaucracy. And this is basically the case in the U.S. The U.S. could have had nuclear abundance 50, 60 years ago. The reason that it doesn't have nuclear abundance is really not a technical problem, it's a regulation problem [which made it] economically infeasible to get us onto this virtuous cycle of improving nuclear. You can look at the ALARA (as low as reasonably achievable) standard for nuclear safety: basically no matter what the price, you just need to make nuclear safer. And that totally fucks the U.S.'s ability to have cheap energy, which is the driver of a lot of economic growth.

Okay. That was all super high level. I think it's a good framework in general. But why is it directly relevant to people in this room? My story here is: the more powerful AI gets, the more everyone just becomes an AI safety person. We've kind of seen this already: AI has just been advancing and over time, you see people falling into the AI safety camp with metronomic predictability. It starts with the most prescient and farsighted people in the field. You have Hinton, you have Bengio and then Ilya and so on. And it's just ticking its way through the whole field of ML and then the whole U.S. government, and so on. By the time AIs have the intelligence of a median human and the agency of a median human, it's just really hard to not be an AI safety person. So then I think the problem that we're going to face is maybe half of the AI safety people are fucking it up and we don't know which half.

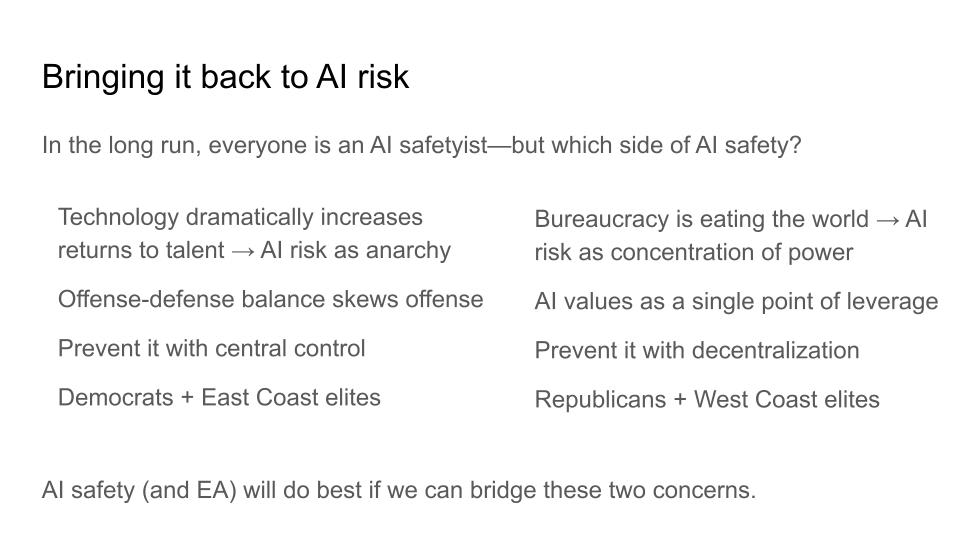

And you can see these two effects that I described [as corresponding to two conflicting groups in AI safety]. Suppose you are really worried about technology increasing the returns to talent—for example, you're really worried that anyone could produce bioweapons in the future. It used to be the case that you had to be one of the best biology researchers in the world in order to actually improve bioweapons. And in the future, maybe anyone with an LLM can do it. I claim people with this worry start to think of AI risk as basically anarchy. You have this offense-defense balance that's skewed towards people being able to mess up the world because AI will give a small group of people—a small group of terrorists, a small group of companies—the ability to have these massive effects that can't be controlled.

So the natural response to this frame on AI risk is you want to prevent it with central control. You want to make sure that no terrorists get bioweapon-capable AIs. You want to make sure that you have enough regulation to oversee all the companies and so on and so forth. Broadly speaking, this is the approach that resonates the most with Democrats and East Coast elites. Because they've been watching Silicon Valley, these crazy returns to talent, the fact that one engineer at Facebook can now skew the news that's seen by literally the entire world.

And it's like: oh shit, we can't keep control of this. We have to do something about it. And that's part of why you see these increasing attempts to control stuff from the Democrats, and East Coast elites in general—Ivy League professors and the sort of people who inform Democrat policy.

The other side of AI safety is that you're really worried about bureaucracy eating the world. And if you're really worried about that, then a natural way of thinking about AI risk is that AI risk is about concentration of power. You have these AIs, and if power over them gets consolidated within the hands of a few people—maybe a few government bureaucrats, maybe a few countries, maybe a few companies—then suddenly you can have all the same centralized control dynamics playing out, but much easier and much worse.

Centralized control over the Facebook algorithm—sure, it can skew some things, but it's not very flexible. You can't do that much—at worst you can nudge it slightly in one direction or another, but it's pretty obvious. Centralized control of AI, what that gives you is the ability to very flexibly shape the way that maybe everyone in the entire country interacts with AI. So this is the Elon Musk angle on AI risk: AI risk is fundamentally a problem about concentration of power. Whereas a lot of AI governance people tend to think of AI risk more as anarchy. That's maybe why we've seen a lot of focus on bioweapons, because it's a central example of an AI risk that maps neatly onto this left-hand side.

So how do you prevent that? Well, if you're Elon or somebody who thinks similarly, you try and prevent it using decentralization. You’re like: man, we really don't want AI to be concentrated in the hands of a few people or to be concentrated in the hands of a few AIs. (I think both of these are kind of agnostic as to whether it's humans or AIs who are the misaligned agents, if you will.) And this is kind of the platform that Republicans now (and West Coast elites) are running on. It's this decentralization, freedom, AI safety via openness. Elon wants xAI to produce a maximally truth-seeking AI, really decentralizing control over information.

I think they both have reasonable points—both of these sides are getting at something sensible. Unfortunately, politics is incredibly polarized right now. And so it's just really hard to bridge this divide. One of my worries is that AI safety becomes as polarized as the Democrats and the Republicans currently are. You can imagine these two camps of AI safety people almost at war with each other.

I don't know how to prevent that right now. I have some ideas. There's social links you can use—people sitting down and trying to see each other's perspectives. But fundamentally, it's a really big divide and I see AI safety and maybe EA more generally as trying to have one foot on either side of this gap and being stretched more and more.

So my challenge to the people who are listening to this here or in the recording is: how do we bridge that? How do we make it so that both of these reasonable concerns of AI risk as anarchy and AI risk as concentration of power can be tackled in a way that everyone accepts as good?

You can think of the US Constitution as trying to bridge that gap. You want to prevent anarchy, but you also want to prevent concentration of power. And so you have this series of checks and balances. One of the ways we should be thinking about AI governance is as: how do we put in regulations that also have very strong checks and balances? And the bigger of a deal you think AI is going to be, the more like a constitution—the more robust—these types of checks and balances need to be. It can't just be that there's an agency that gets to veto or not veto AI deployments. It needs to be much more principled and much closer to something that both sides can trust.

That was a lot. Do I have more things to say here? Yeah, my guess is that most people in this room, or most people listening, instinctively skew towards the left side here. I instinctively, personally skew a bit more towards the right side. I just posted a thread on Twitter on why I think it's probably better if the Republicans win this election. So you can check that out—I think it might be going viral during this talk—if you want to get more of an instinctive sense for why the right side is reasonable. I think it's just a really hard problem. And this type of sociopolitical thinking that I've been trying to advocate for in this talk, that's the type of thinking that you need in order to actually bridge these hard-to-bridge gaps.

I don't have great solutions, so I'm gonna say a bunch of platitude-adjacent statements. The first one is this community should be really truth-seeking, much more than most political movements or most political actors, because that works in the long term.

I think it's led us to pretty good places so far and we want to keep doing it. In some sense if you're truth-seeking enough then you converge to alignment research anyway—I have this blog post on why alignment research is what happens if you're doing machine learning and you try to be incredibly principled about it, or incredibly scientific about it. So you can check out that post on LessWrong and various other places.

I also have a recent post on why history and philosophy of science is a really useful framework for thinking about these big picture questions and what it would look like to make progress on a lot of these very difficult issues, compared with being Bayesian. I’m not a fan of Bayesianism—to a weird extent it feels like a lot of the mistakes that the community has made have fallen out of bad epistemology. I'm biased towards thinking this because I'm a philosopher, but it does seem like if you had a better decision theory and you weren't maximizing expected utility then you might not screw FTX up quite as badly, for instance.

On the principled mechanism design thing, the way I want to frame this is: right now we think about governance of AI in terms of taking governance tools and applying them to AI. I think this is not principled enough. Instead the thing we want to be thinking about is governance with AI: what would it look like if you had a set of rigorous principles that governed the way in which AI was deployed throughout governments, and that were able to provide checks and balances? Principles that were able to provide safeguards, but also able to make governments way more efficient and way better at leveraging the power of AI to, for example, provide a neutral independent opinion when there's a political conflict.

I don't know what this looks like yet, I just want to flag it as the right way to go. And I think part of the problems that people have been having in AI governance are that our options are to stop or to not stop because no existing governance tools are powerful enough to actually govern AI, it’s too crazy. And that’s true, but governing with AI opens up this whole new expanse of different possibilities I really want people to explore, particularly people who are thinking about this stuff in first-principles and historically informed ways.

That’s the fourth point here: EA and AI safety have been bad at that. I used the example of Dominic Cummings before as someone who is really good at that. So if you just read his stuff then maybe you get an intuition for the type of thinking that leads you to realize that there’s a layer of the Brexit debate deeper than what everyone else was looking at. Where does that type of thinking lead you when you apply it to AI governance? I’ve been having some really interesting conversations in the last week in particular with people who are like: what if we think way bigger? If you really take AGI seriously then you shouldn’t just be thinking about historical changes on the scale of founding new countries, you should be thinking about historical changes on the scale of founding new types of countries.

I had a debate with a prominent AI founder recently about who would win in a war between Mars and Earth. And that’s a weird thing to think about, but given a bunch of beliefs that I have—I think SpaceX can probably get to Mars in a decade or two, I think AI will be able to autonomously invent a bunch of new technologies that allow you to set up a space colony, I think a lot of the sociopolitical dynamics of this can be complicated… Maybe this isn’t the thing we should be thinking about, but I want a reason why it’s not the type of thing we should be thinking about.

Maybe that’s a little too far. The more grounded version of this is: what if corporations end up with power analogous to governments, and the real conflicts are on that level? There's this concept of the network state, this distributed set of actors bound together with a common ideology. What if the conflicts that arise as AI gets developed look more like that than conflicts between traditional nation-states? This all sounds kind of weird and yet when I sit down and think “what are my beliefs about the future of technology? How does this interface with my sociopolitical and geopolitical beliefs?” weird stuff starts to fall out.

Lastly, synthesizing different moral frameworks. Right back at the beginning of the talk, I was like, look. We have these three waves. And we have consequentialism, we have deontology, and we have virtue ethics, as these skills that we want to develop as we pass through these three waves. A lot of this comes back to Derek Parfit. He was one of the founders of EA in a sense, and his big project was to synthesize morality—to say that all of these different moral theories are climbing the same mountain from different directions.

I think we’ve gotten to the point where I can kind of see that all of these things come together. If you do consequentialism, but you’re also shaping the world in these large-scale political ways, then the thing you need to do is to be virtuous. That’s the only thing that leads to good consequences. All of these frameworks share some conception of goodness, of means-end reasoning.

There’s something in the EA community around this that I’m a little worried about. I’ve got this post on how to have more cooperative AI safety strategies, which kind of gets into this. But I think a lot of it comes down to just having a rich conception of what it means to do good in this world. Can we not just “do good” in the sense of finding a target and running as hard as we can toward it, but instead think about ourselves as being on a team in some sense with the rest of humanity—who will be increasingly grappling with a lot of the issues I’ve laid out here?

What is the role of our community in helping the rest of humanity to grapple with this? I almost think of us as first responders. First responders are really important — but also, if they try to do the whole job themselves, they’re gonna totally mess it up. And I do feel the moral weight of a lot of the examples I laid out earlier—of what it looks like to really mess this up. There’s a lot of potential here. The ideas in this community—the ability to mobilize talent, the ability to get to the heart of things—it’s incredible. I love it. And I have this sense—not of obligation, exactly—but just…yeah, this is serious stuff. I think we can do it. I want us to take that seriously. I want us to make the future go much better. So I’m really excited about that. Thank you.

Just to respond to a narrow point because I think this is worth correcting as it arises: Most of the US/EU GDP growth gap you highlight is just population growth. In 2000 to 2022 the US population grew ~20%, vs. ~5% for the EU. That almost exactly explains the 55% vs. 35% growth gap in that time period on your graph; 1.55 / 1.2 * 1.05 = 1.36.
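To make the arithmetic above explicit, here is a minimal sketch in Python. The growth factors are the rounded figures quoted in this comment rather than fresh data, and the variable and function names are purely illustrative.

```python
# Approximate growth factors for 2000-2022, as quoted in the comment above.
us_gdp_growth = 1.55   # US GDP grew ~55%
eu_gdp_growth = 1.35   # EU GDP grew ~35%
us_pop_growth = 1.20   # US population grew ~20%
eu_pop_growth = 1.05   # EU population grew ~5%


def per_capita_growth(gdp_growth: float, pop_growth: float) -> float:
    """Growth factor of GDP per capita, given GDP and population growth factors."""
    return gdp_growth / pop_growth


us_per_capita = per_capita_growth(us_gdp_growth, us_pop_growth)
eu_per_capita = per_capita_growth(eu_gdp_growth, eu_pop_growth)

# Counterfactual: US per-capita growth combined with EU-style population growth.
us_gdp_with_eu_population = us_per_capita * eu_pop_growth

print(f"US per-capita growth factor: {us_per_capita:.2f}")
print(f"EU per-capita growth factor: {eu_per_capita:.2f}")
print(f"US GDP growth with EU population growth: {us_gdp_with_eu_population:.2f}")
```

On these rounded inputs the two per-capita growth factors come out almost identical, which is the point being made: with EU-style population growth, US GDP would have grown by roughly the same factor as EU GDP did.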

This shouldn't be surprising, because productivity levels in the 'big 3' of US / France / Germany track each other very closely and have done for quite some time. (Edit: I wasn't expecting this comment to blow up, and it seems I may have rushed this point. See Erich's comment below and my response.) The source below shows a slight increase in the gap, but of <5% over 20 years. If you look further down my post, the Economist reaches the opposite conclusion, but again by very thin margins. Mostly I think the right conclusion is that the productivity gap has barely changed relative to demographic factors.

I'm not really sure where the meme that there's some big / growing productivity difference due to regulation comes from, but I've never seen supporting data. To the extent culture or regulation is affecting that growth gap, it's almost entirely going to be from things that affect total working hours, e.g. restrictions on migration, paid leave, and lower birth rates[1], not from things like how easy it is to found a startup.

https://www.economist.com/graphic-detail/2023/10/04/productivity-has-grown-faster-in-western-europe-than-in-america

Fertility rates are actually pretty similar now, but the US had much higher fertility than Germany especially around 1980 - 2010, converging more recently, so it'll take a while for that to impact the relative sizes of the working populations.

This is weird because other sources do point towards a productivity gap. For example, this report concludes that "European productivity has experienced a marked deceleration since the 1970s, with the productivity gap between the Euro area and the United States widening significantly since 1995, a trend further intensified by the COVID-19 pandemic".

Specifically, it looks as if, since 1995, the GDP per capita gap between the US and the eurozone has remained very similar, but this is due to a widening productivity gap being cancelled out by a shrinking employment rate gap:

This report from Banque de France has it that "the EU-US gap has narrowed in terms of hours worked per capita but has widened in terms of GDP per hours worked", and that in France at least this can be attributed to "producers and heavy users of IT technologies":

The Draghi report says 72% of the EU-US GDP per capita gap is due to productivity, and only 28% is due to labour hours:

Part of the discrepancy may be that the OWID data only goes until 2019, whereas some of these other sources report that the gap has widened significantly since COVID? But that doesn't seem to be the case in the first plot above (it still shows a widening gap before COVID).

Or maybe most of the difference is due to comparing the US to France/Germany, versus also including countries like Greece and Italy that have seen much slower productivity growth. But that doesn't explain the France data above (it still shows a gap between France and the US, even before COVID).

Thanks for this. I already had some sense that historical productivity data varied, but this prompted me to look at how large those differences are and they are bigger than I realised. I made an edit to my original comment.

TL;DR: Current productivity people mostly agree about. Historical productivity they do not. Some sources, including those in the previous comment, think Germany was more productive than the US in the past, which makes being less productive now more damning compared to a perspective where this has always been the case.

***

For simplicity I'm going to focus on US vs. Germany in the first three bullets:

****

Where does that leave the conversation about European regulation? This is just my $0.02, but:

In my opinion the large divergences of opinion about the 90s, while academically interesting, are only indirectly relevant to the situation today. The situation today seems broadly accepted to be as follows:

I think that when Americans think about European regulations, they are mostly thinking about the Western and Northern countries. For example, when I ask Claude which EU countries have the strongest labour rights, the list of countries it gives me is entirely a subset of those countries. But unless you think replacing those regulations with US-style regulations would allow German productivity to significantly exceed US productivity, any claim that this would close the GDP per capita gap between the US and Germany - around 1.2x - without more hours being worked is not very reasonable. Let alone the GDP gap, which layers on the US' higher population growth.

Digging into Southern Europe and figuring out why e.g. Italy and Germany have failed to converge seems a lot more reasonable. Maybe regulation is part of that story. I don't know.

So I land pretty much where the Economist article is, which is why I quoted it:

I am eyeballing page 66 and adding together the 'TFP' and 'capital deepening' factors. I think that amounts to labour productivity, and indeed the report does say "labour productivity...ie the product of TFP and capital deepening". I'm less confident about this than the other figures though.

Unhelpfully, the data is displayed as % of 2015 productivity. I'm getting my claim from (a) OECD putting German 1995 productivity at 80% of 2015 levels, vs. the US being at 70% of 2015 levels and (b) 2022 productivity being 107% vs. 106% of 2015 levels. Given the OECD has 2022 US/German productivity virtually identical, I think the forced implication is that they think German productivity was >10% higher in 1995.
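The inference in this footnote can be checked with a short sketch. It assumes the index values quoted above (each country's productivity as a % of its own 2015 level), assumes the "107% vs. 106%" figures are given in the order Germany, then US, and takes the 2022 levels as exactly equal; the function name is hypothetical.

```python
# Productivity as % of each country's own 2015 level, as quoted in the footnote.
de_1995, us_1995 = 80.0, 70.0
de_2022, us_2022 = 107.0, 106.0  # assumption: order is Germany, then US


def implied_past_ratio(a_then, a_now, b_then, b_now, level_ratio_now=1.0):
    """Ratio of A's to B's productivity level 'then', given their level ratio 'now'.

    Each country's index only reveals its own growth, so the past ratio is the
    present ratio scaled by how much faster B has grown than A since then.
    """
    a_growth = a_now / a_then
    b_growth = b_now / b_then
    return level_ratio_now * b_growth / a_growth


ratio_1995 = implied_past_ratio(de_1995, de_2022, us_1995, us_2022)
# Same check with the 2022 figures swapped, in case the order is the other way round.
ratio_1995_swapped = implied_past_ratio(de_1995, us_2022, us_1995, de_2022)

print(f"Implied German/US productivity ratio in 1995: {ratio_1995:.2f}")
print(f"Same, with 2022 figures swapped: {ratio_1995_swapped:.2f}")
```

Under either ordering of the 2022 figures, the implied 1995 ratio is above 1.10, consistent with the ">10% higher" reading.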

Most changed-mind votes in the history of EA comments? This blew my mind a bit. I feel like I've read so much about American productivity outpacing Europe's; I think this deserves a full-length article.

If productivity is so similar, how come the US is quite a bit richer per capita? Is that solely accounted for by workers working longer hours?

Two factors come to mind.

It isn't. Prices are just inflated creating false growth. Real gdp, adjusted for variable inflation, shows dead even growth.

Inequality. There are more billionaires in the US siphoning money from across the globe due to the lack of effective capital regulation. Hence what effects do persist are partially explained as capital flight to the USA inflating the top of the per capita pyramid without in any way increasing actual worker productivity.

"Real gdp, adjusted for variable inflation, shows dead even growth. " I asked about gdp per capita right now, not growth rates over time. Do you have a source showing that the US doesn't actually have higher gdp per capita?

Inequality is probably part of the story, but I had a vague sense median real wages are higher in the US. Do you have a source saying that's wrong? Or that it goes away when you adjust for purchasing power?

Because you are so strongly pushing a particular political perspective on Twitter (roughly, tech right = good), I worry that your bounties are mostly just you paying people to say things you already believe about those topics. Insofar as you mean to persuade people on the left/centre of the community to change their views on these topics, maybe it would be better to do something like make the bounties conditional on people who disagree with your takes finding the investigations move their views in your direction.

I also find the use of the phrase "such controversial criminal justice policies" a bit rhetorical dark artsy and mildly incompatible with your calls for high intellectual integrity. It implies that a strong reason to be suspicious of Open Phil's actions has been given. But you don't really think the mere fact that a political intervention on an emotive, polarized topic is controversial is actually particularly informative about it. Everything on that sort of topic is controversial, including the negation of the Open Phil view on the US incarceration rate. The phrase would be ok if you were taking a very general view that we should be agnostic on all political issues where smart, informed people disagree. But you're not doing that; you take lots of political stances in the piece: de-regulatory libertarianism, the claim that environmentalism has been net negative, and Dominic Cummings can all accurately be described as "highly controversial".

Maybe I am making a mountain out of a molehill here. But I feel like rationalists themselves often catastrophise fairly minor slips into dark arts like this as strong evidence that someone lacks integrity. (I wouldn't say anything as strong as that myself; everyone does this kind of thing sometimes.) And I feel like if the NYT referred to AI safety as "tied to the controversial rationalist community" or to "highly controversial blogger Scott Alexander" you and other rationalists would be fairly unimpressed.

More substantively (maybe I should have started with this as it is a more important point), I think it is extremely easy to imagine the left/Democrat wing of AI safety becoming concerned with AI concentrating power, if it hasn't already. The entire techlash anti "surveillance" capitalism, "the algorithms push extremism" thing from left-leaning tech critics is ostensibly at least about the fact that a very small number of very big companies have acquired massive amounts of unaccountable power to shape political and economic outcomes. More generally, the American left has, I keep reading, been on a big anti-trust kick recently. The explicit point of anti-trust is to break up concentrations of power. (Regardless of whether you think it actually does that, that is how its proponents perceive it. They also tend to see it as "pro-market"; remember that Warren used to be a libertarian Republican before she was on the left.) In fact, Lina Khan's desire to do anti-trust stuff to big tech firms was probably one cause of Silicon Valley's rightward shift.

It is true that most people with these sorts of views are currently very hostile to even the left wing of AI safety, but lack of concern about X-risk from AI isn't the same thing as lack of concern about AI concentrating power. And eventually the power of AI will be so obvious that even these people will have to concede that it is not just fancy autocorrect.

It is not true that all people with these sorts of concerns only care about private power and not the state either. Dislike of Palantir's nat sec ties is a big theme for a lot of these people, and many of them don't like the nat sec-y bits of the state very much either. Also, a relatively prominent part of the left-wing critique of DOGE is the idea that it's the beginning of an attempt by Elon to seize personal effective control of large parts of the US federal bureaucracy, by seizing the boring bits of the bureaucracy that actually move money around. In my view people are correct to be skeptical that Musk will ultimately choose decentralising power over accumulating it for himself.

Now strictly speaking none of this is inconsistent with your claim that the left-wing of AI safety lacks concern about concentration of power, since virtually none of these anti-tech people are safetyists. But I think it still matters for predicting how much the left wing of safety will actually concentrate power, because future co-operation between them and the safetyists against the tech right and the big AI companies is a distinct possibility.

This is a fair complaint and roughly the reason I haven't put out the actual bounties yet—because I'm worried that they're a bit too skewed. I'm planning to think through this more carefully before I do; okay to DM you some questions?

I definitely agree with you with regard to corporate power (and see dislike of Palantir as an extension of that). But a huge part of the conflict driving the last election was "insiders" versus "outsiders"—to the extent that even historically Republican insiders like the Cheneys backed Harris. And it's hard for insiders to effectively oppose the growth of state power. For instance, the "govt insider" AI governance people I talk to tend to be the ones most strongly on the "AI risk as anarchy" side of the divide, and I take them as indicative of where other insiders will go once they take AI risk seriously.

But I take your point that the future is uncertain and I should be tracking the possibility of change here.

(This is not a defense of the current administration, it is very unclear whether they are actually effectively opposing the growth of state power, or seizing it for themselves, or just flailing.)

Yeah, this feels particularly weird because, coming from that kind of left-libertarian-ish perspective, I basically agree with most of it, but every time he tries to talk about object-level politics it feels like going into the bizarro universe and I would flip the signs on all of it. That's an impression I have of @richard_ngo's work in general, him being one of the few safetyists on the political right to not have capitulated to accelerationism-because-of-China (as most recently even Elon did). Still, I'll try to see if I have enough things to say to collect bounties.

Thanks for noticing this. I have a blog post coming out soon criticizing this exact capitulation.

I am torn between writing more about politics to clarify, and writing less about politics to focus on other stuff. I think I will compromise by trying to write about political dynamics more timelessly (e.g. as I did in this post, though I got a bit more object-level in the follow-up post).

I think this is a valid concern, but I think it's important to note that if Richard were a left-winger, this same concern wouldn't be there.

Depends how far left. I'd say centre-left views would get less push back, but not necessarily further left ones. But yeah fair point that there is a standard set of views in the community that he is somewhat outside.

To back this up: I mostly peruse non-rationalist, left leaning communities, and this is a concern in almost every one of them. There is a huge amount of concern and distrust of AI companies on the left.

Even AI-skeptical people are concerned about this: AI that is not "transformative" can concentrate power. Most lefties think that AI art is shit, but they are still concerned that it will cost people jobs: this is not a contradiction, as taking jobs does not mean AI needs to be better than you, just cheaper. And if AI does massively improve, this is going to make them more likely to oppose it, not less.

AI art seems like a case of power becoming decentralized: before this week, few people could make Studio Ghibli art. Now everyone can.

Edit: Sincere apologies - when I read this, I read through the previous chain of comments quickly, and missed the importance of AI art specifically in titotal's comment above. This makes Lark's comment more reasonable than I assumed. It seems like we do disagree on a bunch of this topic, but much of my comment wasn't correct.

---

This comment makes me uncomfortable, especially with the upvotes. I have a lot of respect for you, and I agree with this specific example.

I don't think you were meaning anything bad here. But I'm very skeptical that this specific example is really representative in a meaningful sense.

Often, when one person cites one and only one example of a thing, they are making an implicit argument that this example is decently representative. See the Cooperative Principle (I've been paying more attention to this recently). So I assume readers might take, "Here's one example, it's probably the main one that matters. People seem to agree with the example, so they probably agree with the implication from it being the only example."

Some specifics that come to my mind:

- In this specific example, it arguably makes it very difficult for Studio Ghibli to have control over a lot of their style. I'm sure that people at Studio Ghibli are very upset about this. Instead, OpenAI gets to make this accessible - but this is less an ideological choice but instead something that's clearly commercially beneficial for OpenAI. If OpenAI wanted to stop this, it could (at least, until much better open models come out). More broadly, it can be argued that a lot of forms of power are being brought from media groups like Studio Ghibli, to a few AI companies like OpenAI. You can definitely argue that this is a good or bad thing on the net, but I think this is not exactly "power is now more decentralized."

- I think it's easy to watch the trend lines and see where we might expect things to change. Generally, startups are highly subsidized in the short-term. Then they eventually "go bad" (see Enshittification). I'm absolutely sure that if/when OpenAI has serious monopoly power, they will do things that will upset a whole lot of people.

- China has been moderating the ability of their LLMs to say controversial things that would look bad for China. I suspect that the US will do this shortly. I'm not feeling very optimistic about Elon Musk and xAI, though that is a broader discussion.

On the flip side of this, I could very much see it being frustrating as "I just wanted to leave a quick example. Can't there be some way to enter useful insights without people complaining about a lack of context?" I'm honestly not sure what the solution is here. I think online discussions are very hard, especially when people don't know each other very well, for reasons like this.

But in the very short-term, I just want to gently push back on the implication of this example, for this audience.

I could very much imagine a more extensive analysis suggesting that OpenAI's image work promotes decentralization or centralization. But I think it's clearly a complex question, at the very least. I personally think that people broadly being able to do AI art now is great, but I still find it a tricky issue.

I'm not sure why you chose to frame your comment in such an unnecessarily aggressive way so I'm just going to ignore that and focus on the substance.

Yes, the Studio Ghibli example is representative of AI decentralizing power:

Thanks for providing more detail into your views.

Really sorry to hear that my comment above came off as aggressive. It was very much not meant like that. One mistake is that I too quickly read the comments above - that was my bad.

In terms of the specifics, I find your longer take interesting, though as I'm sure you expect, I disagree with a lot of it. There seem to be a lot of important background assumptions on this topic that both of us have.

I agree that there are a bunch of people on the left who are pushing for many bad regulations and ideas on this. But I think at the same time, some of them raise certain good points (e.g. paranoia about power consolidation).

I feel like it's fair to say that power is complex. Things like ChatGPT's AI art will centralize power in some ways and decentralize it in others. On one hand, it's very much true that many people can create neat artwork that they couldn't before. But on the other, a bunch of key decisions and influence are being put into the hands of a few corporate actors, particularly ones with histories of being shady.

I think that some forms of IP protection make sense. I think this conversation gets much messier when it comes to LLMs, for which there just haven't been good laws yet on how to adjust for them. I'd hope that future artists who come up with innovative techniques could get some significant ways of being compensated for their contributions. I'd hope that writers and innovators could similarly get certain kinds of credit and rewards for their work.

This isn't really the best example to use considering AI image generation is very much the one area where all the most popular models are open-weights and not controlled by big tech companies, so any attempt at regulating AI image generation would necessarily mean concentrating power and antagonizing the free and open source software community (something which I agree with OP is very ill-advised), and insofar as AI-skeptics are incapable of realizing that, they aren't reliable.

I basically agree with this, with one particular caveat, in that the EA and LW communities might eventually need to fight/block open source efforts due to issues like bioweapons, and it's very plausible that the open-source community refuses to stop open-sourcing models even if there is clear evidence that they can immensely help/automate biorisk, so while I think the fight was done too early, I think the fighty/uncooperative parts of making AI safe might eventually matter more than is recognized today.

If you mean Meta and Mistral I agree. I trust EleutherAI and probably DeepSeek to not release such models though, and they're more centrally who I meant.

I find it pretty difficult to see how to get broad engagement on this when being so obviously polemical / unbalanced in the examples.

As someone who is publicly quite critical of mainstream environmentalism, I still find the description here so extreme that it is hard to take seriously as more than a deeply partisan talking point.

The "Environmental Protection Act" doesn't exist, do you mean the "National Environmental Policy Act" (NEPA)?

Neither is it true that environmentalists are single-handedly responsible for nuclear's decline, and clearly modern environmentalism has done a huge amount of good by reducing water and air pollution.

I think your basic point -- that environmentalism had a lot more negative effects than commonly realized and that we should expect similar degrees of unintended negative effects for other issues -- is probably true (I certainly believe it).

But this point can be made with nuance and attention to detail that makes it something that people with different ideological priors can read and engage with constructively. I think the current framing comes across as "owning the libs" or "owning the enviros" in a way that makes it very difficult for those arguments to get uptake anywhere that is not quite right-coded.

Thanks for the feedback!

FWIW a bunch of the polemical elements were deliberate. My sense is something like: "All of these points are kinda well-known, but somehow people don't... join the dots together? Like, they think of each of them as unfortunate accidents, when they actually demonstrate that the movement itself is deeply broken."

There's a kind of viewpoint flip from being like "yeah I keep hearing about individual cases that sure seem bad but probably they'll do better next time" to "oh man, this is systemic". And I don't really know how to induce the viewpoint shift except by being kinda intense about it.

Upon reflection, I actually take this exchange to be an example of what I'm trying to address. Like, I gave a talk that was according to you "so extreme that it is hard to take seriously" and your three criticisms were:

I imagine you have better criticisms to make, but ultimately (as you mention) we do agree on the core point, and so in some sense the message I'm getting is "yeah, listen, environmentalism has messed up a bunch of stuff really badly, but you're not allowed to be mad about it".

And I basically just disagree with that. I do think being mad about it (or maybe "outraged" is a better term) will have some negative effects on my personal epistemics (which I'm trying carefully to manage). But given the scale of the harms caused, this level of criticism seems like an acceptable and proportional discursive move. (Though note that I'd have done things differently if I felt like criticism that severe was already common within the political bubble of my audience—I think outrage is much worse when it bandwagons.)

EDIT: what do you mean by "how to get broad engagement on this"? Like, you don't see how this could be interesting to a wider audience? You don't know how to engage with it yourself? Something else?

I think we are misunderstanding each other a bit.

I am in no way trying to imply that you shouldn't be mad about environmentalism's failings -- in fact, I am mad about them on a daily basis. I think if being mad about environmentalism's failings is the main point, then what Ezra Klein and Derek Thompson are currently doing with Abundance is a good example of communicating many of your criticisms in a way optimized to land with those that need to hear it.

My point was merely that by framing the example in such extreme terms it will lose a lot of people despite being only very tangentially related to the main points you are trying to make. Maybe that's okay, but it didn't seem like your goal overall to make a point about environmentalism, so losing people on an example that is stated in such an extreme fashion did not seem worth it to me.

In fairness to Richard, I think it comes across a lot more strongly in text than, in my view, it came across listening on YouTube.

Ah, gotcha. Yepp, that's a fair point, and worth me being more careful about in the future.

I do think we differ a bit on how disagreeable we think advocacy should be, though. For example, I recently retweeted this criticism of Abundance, which is basically saying that they overly optimized for it to land with those who hear it.

And in general I think it's worth losing a bunch of listeners in order to convey things more deeply to the ones who remain (because if my own models of movement failure have been informed by environmentalism etc, it's hard to talk around them).

But in this particular case, yeah, probably a bit of an own goal to include the environmentalism stuff so strongly in an AI talk.

My quick take:

I think that at a high level you make some good points. I also think it's probably a good thing for some people who care about AI safety to appear to the current right as ideologically aligned with them.

At the same time, a lot of your framing matches incredibly well with what I see as current right-wing talking points.

"And in general I think it's worth losing a bunch of listeners in order to convey things more deeply to the ones who remain"

-> This comes across as absurd to me. I'm all for some people holding uncomfortable or difficult positions. But when those positions sound exactly like the kind of thing that would gain favor by a certain party, I have a very tough time thinking that the author is simultaneously optimizing for "conveying things deeply". Personally, I find a lot of the framing irrelevant, distracting, and problematic.

As an example, if I were talking to a right-wing audience, I wouldn't focus on examples of racism in the South, if equally-good examples in other domains would do. I'd expect that such work would get in the way of good discussion on the mutual areas where the audience and I would more easily agree.

Honestly, I have had a decent hypothesis that you are consciously doing all of this just in order to gain favor with some people on the right. I could see a lot of arguments people could make for this. But that hypothesis makes more sense for the Twitter stuff than here. Either way, it does make it difficult for me to know how to engage. On one hand, if you do honestly believe this stuff, I am very uncomfortable with, and strongly disagree with, a lot of MAGA thinking, including some of the frames you reference (which seem to fit that vibe). On the other, you're actively lying about what you believe, on a critical topic, in an important set of public debates.

Anyway, this does feel like a pity of a situation. I think a lot of your work is quite good, and in theory, what read to me like the MAGA-aligned parts don't need to get in the way. But I realize we live in an environment where that's challenging.

(Also, if it's not obvious, I do like a lot of right-wing thinkers. I think that the George Mason libertarians are quite great, for example. But I personally have had a lot of trouble with the MAGA thinkers as of late. My overall problem is much less with conservative thinking than it is with MAGA thinking.)

Occam's razor says that this is because I'm right-wing (in the MAGA sense not just the libertarian sense).

It seems like you're downweighting this hypothesis primarily because you personally have so much trouble with MAGA thinkers, to the point where you struggle to understand why I'd sincerely hold this position. Would you say that's a fair summary? If so hopefully some forthcoming writings of mine will help bridge this gap.

It seems like the other reason you're downweighting that hypothesis is because my framing seems unnecessarily provocative. But consider that I'm not actually optimizing for the average extent to which my audience changes their mind. I'm optimizing for something closer to the peak extent to which audience members change their mind (because I generally think of intellectual productivity as being heavy-tailed). When you're optimizing for that you may well do things like give a talk to a right-wing audience about racism in the south, because for each person there's a small chance that this example changes their worldview a lot.

I'm open to the idea that this is an ineffective or counterproductive strategy, which is why I concede above that this one probably went a bit too far. But I don't think it's absurd by any means.

Insofar as I'm doing something I don't reflectively endorse, I think it's probably just being too contrarian because I enjoy being contrarian. But I am trying to decrease the extent to which I enjoy being contrarian in proportion to how much I decrease my fear of social judgment (because if you only have the latter then you end up too conformist) and that's a somewhat slow process.

If you're referring to the part where I said I wasn't sure if you were faking it - I'd agree. From my standpoint, it seems like you've shifted to hold beliefs that both seem highly suspicious and highly convenient - this starts to raise the hypothesis that you're doing it, at least partially, strategically.

(I relatedly think that a lot of similar posturing is happening on both sides of the political aisle. But I generally expect that the politicians and power figures are primarily doing this for strategic interests, while the news consumers are much more likely to actually genuinely believe it. I'd suspect that others here would think similarly of me, if it were the case that we had a hard-left administration, and I suddenly changed my tune to be very in line with that.)

Again, this seems silly to me. For one thing, while I don't always trust people's publicly-stated political viewpoints, I trust their stated reasons for doing these sorts of things even less. I could imagine that your statement is how it honestly feels to you, but this just raises a bunch of alarm bells for me. Basically, if I'm trying to imagine someone coming up with a convincing reason to be highly and unnecessarily (from what I can tell) provocative, I'd expect them to raise some pretty wacky reasons for it. I'd guess that the answer is often simpler, like, "I find that trolling just brings with it more attention, and this is useful for me," or "I like bringing in provocative beliefs that I have, wherever I can, even if it hurts an essay about a very different topic. I do this because I care a great deal about spreading these specific beliefs. One convenient thing here is that I get to sell readers on an essay about X, but really, I'll use this as an opportunity to talk about Y instead."

Here, I just don't see how it helps. Maybe it attracts MAGA readers. But for the key points that aren't MAGA-aligned, I'd expect that this would just get less genuine attention, not more. To me it sounds like the question is, "Does a MAGA veneer help make intellectual work more appealing to smart people?" And this clearly sounds pretty out-there to me.

To be clear, my example wasn't "I'm trying to talk to people in the south about racism." It's more like, "I'm trying to talk to people in the south about animal welfare, and in doing so, I bring up examples around Southern people being racist."

One could say, "But then, it's a good thing that you bring up points about racism to those people. Because it's actually more important that you teach those people about racism than it is animal welfare."

But that would match my second point above; "I like bringing in provocative beliefs that I have". This would sound like you're trying to sneakily talk about racism, pretending to talk about animal welfare for some reason.

The most obvious thing is that if you care about animal welfare, and give a presentation in the deep US South, you can avoid examples that villainize people in the South.

I liked this part of your statement and can sympathize. I think that us having strong contrarians around is very important, but also think that being a contrarian comes with a bunch of potential dangers. Doing it well seems incredibly difficult. This isn't just an issue of "how to still contribute value to a community." It's also an issue of "not going personally insane, by chasing some feeling of uniqueness." From what I've seen, disagreeableness is a very high-variance strategy, and if you're not careful, it could go dramatically wrong.

Stepping back a bit - the main things that worry me here:

1. I think that disagreeable people often engage in patterns that are non-cooperative, like doing epistemic sleights of hand and trolling people. I'm concerned that some of your work around this matches some of these patterns.

2. I'm nervous that you and/or others might slide into clearly-incorrect and dangerous MAGA worldviews. Typically the way for people to go crazy into any ideology is that they begin by testing the waters publicly with various statements. Very often, it seems like the conclusion of this is a place where they get really locked into the ideology. From here, it seems incredibly difficult to recover - for example, my guess is that Elon Musk has pissed off many non-MAGA folks, and at this point has very little way to go back without losing face. You writing using MAGA ideas both implies to me that you might be sliding this way, and worries me that you'll be encouraging more people to go this route (which I personally have a lot of trouble with).

I think you've done some good work and hope you can continue to do so going forward. At the same time, I'm going to feel anxious about such work whenever I suspect that (1) and (2) might be happening.

Yeah I got that. Let me flesh out an analogy a little more:

Suppose you want to pitch people in the south about animal welfare. And you have a hypothesis for why people in the south don't care much about animal welfare, which is that they tend to have smaller circles of moral concern than people in the north. Here are two types of example you could give:

My claims:

I personally spent a long time being like "yeah I guess AI safety might have messed up big-time by leading to the founding of the AGI labs" but then not really doing anything differently. I only snapped out of complacency when I got to observe first-hand a bunch of the drama at OpenAI (which inspired this post). And so I have a hypothesis that it's really valuable to have some experience where you're like "oh shit, that's what it looks like for something that seems really well-intentioned that everyone in my bubble is positive-ish about to make the world much worse". That's what I was trying to induce with my environmentalism slide (as best I can reconstruct, though note that the process by which I actually wrote it was much more intuitive and haphazard than the argument I'm making here).

Yeah, that is a reasonable fear to have (which is part of why I'm engaging extensively here about meta-level considerations, so you can see that I'm not just running on reflexive tribalism).