
Thursday, 20 March 2025



Quick takes

The World Happiness Report 2025 is out!

I bang this drum a lot, but it genuinely appears that once a country reaches the upper-middle income bracket, further GDP growth doesn't matter much.

Also featured is a chapter from the Happier Lives Institute, which compares the cost-effectiveness of improving wellbeing across multiple charities. They find that the top charities (including Pure Earth and Taimaka) might be 100x as cost-effective as others, especially charities operating in high-income countries.

Over the years I've written some posts that are relevant to this week's debate topic. I collected and summarized some of them below:

"Disappointing Futures" Might Be As Important As Existential Risks

The best possible future is much better than a "normal" future. Even if we avert extinction, we might still miss out on >99% of the potential of the future.

Is Preventing Human Extinction Good?

A list of reasons why a human-controlled future might be net positive or negative. Overall I expect it to be net positive.

On Values Spreading

Hard to summarize, but this post basically discusses spreading good values as a way of positively influencing the far future, some reasons why it might be a top intervention, and some problems with it.

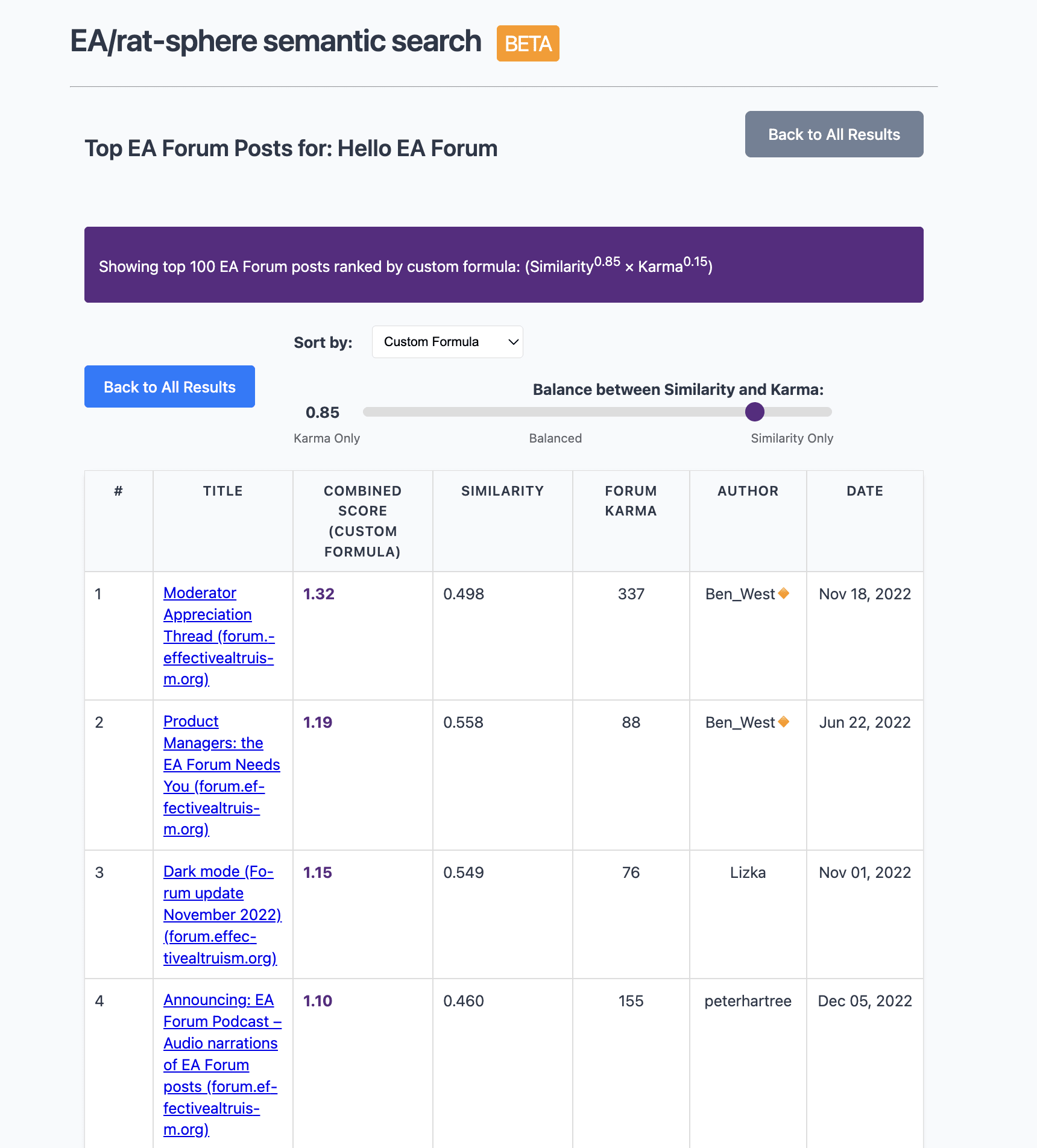

Sharing https://earec.net, semantic search for the EA + rationality ecosystem. Sadly, it's not fully up to date (it's missing the last month or so of content). The current version is basically a minimum viable product!

On the results page there is also an option to see EA Forum-only results, which let you sort by a weighted combination of karma and semantic similarity, thanks to the API!
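The post doesn't say how the karma/similarity blend is computed, so the following is only a minimal sketch of one way such a ranking could work, assuming precomputed embeddings, cosine similarity, log-scaled karma normalized to [0, 1], and a linear blend. `Post`, `rank_posts`, and `karma_weight` are hypothetical names, not earec.net's actual code.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence

@dataclass
class Post:
    title: str
    karma: int
    embedding: List[float]  # hypothetical precomputed embedding of the post text

def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def rank_posts(query_embedding: Sequence[float], posts: List[Post], karma_weight: float = 0.3) -> List[Post]:
    """Sort posts by (1 - w) * similarity + w * normalized karma (assumed scheme)."""
    if not posts:
        return []
    # Log-scale karma so a handful of very high-karma posts don't dominate,
    # then divide by the largest value to map it into [0, 1].
    max_log_karma = max(math.log1p(max(p.karma, 0)) for p in posts) or 1.0

    def score(p: Post) -> float:
        sim = cosine_similarity(query_embedding, p.embedding)
        karma = math.log1p(max(p.karma, 0)) / max_log_karma
        return (1 - karma_weight) * sim + karma_weight * karma

    return sorted(posts, key=score, reverse=True)
```

With `karma_weight=0.0` this reduces to pure semantic search; raising it lets well-received posts climb past slightly closer matches.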

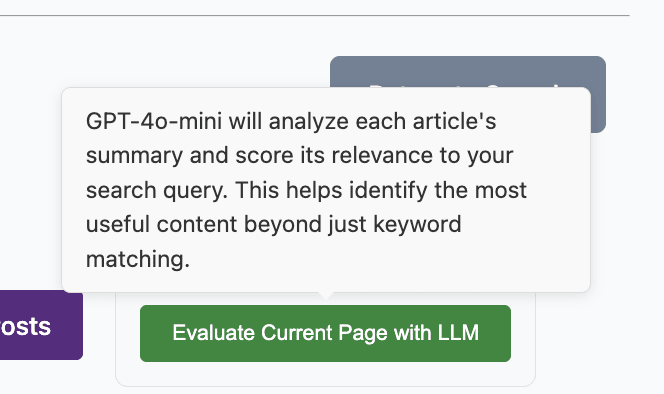

The final feature to note is an option to have gpt-4o-mini "manually" read through the summary of each article on the current screen of results. This gives better evaluations of relevance to some query (e.g. "sources I can use for a project on X") than semantic similarity alone.
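The re-ranking step can be sketched as follows, assuming each result has a short summary and a "judge" returns a relevance score in [0, 1]. In the real tool the judge would be a gpt-4o-mini call; here it is a pluggable function, and `keyword_judge` is a hypothetical toy stand-in so the sketch runs offline. None of these names come from earec.net.

```python
from typing import Callable, List, Sequence, Tuple

# A judge maps (query, summary) -> relevance score in [0, 1].
# In practice this would wrap an LLM call; it is left abstract here (assumption).
Judge = Callable[[str, str], float]

def rerank_with_judge(
    query: str,
    results: Sequence[Tuple[str, str]],  # (title, summary) pairs on the current page
    judge: Judge,
) -> List[Tuple[str, float]]:
    """Score each result's summary with the judge and sort by that score."""
    scored = [(title, judge(query, summary)) for title, summary in results]
    # sorted() is stable, so ties keep their original semantic-search order.
    return sorted(scored, key=lambda pair: pair[1], reverse=True)

def keyword_judge(query: str, summary: str) -> float:
    """Toy stand-in judge: fraction of query words found in the summary."""
    words = query.lower().split()
    text = summary.lower()
    return sum(w in text for w in words) / len(words) if words else 0.0
```

Because the judge only sees the results already on screen, the (expensive) LLM call is bounded by page size rather than corpus size.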

Still kinda janky; as I said, it's a minimum viable product right now. Enjoy, and feedback is welcome!

Thanks to @Nathan Young for commissioning this!

It seems like "what can we actually do to make the future better (if we have a future)?" is a question that keeps on coming up for people in the debate week.

I've thought about some things related to this, and thought it might be worth pulling some of those threads together (with apologies for leaving it kind of abstract). Roughly speaking, I think that:

* ~Optimal futures flow from having a good reflective process steering things

* It's sort of a race to have a good process steering before a bad process

* Averting AI takeover and averting human takeover are both ways to avoid the bad process thing (although of course it's possible for a takeover to still lead to a good process)

* We're going to need higher-powered epistemic and coordination tech to build the good process

* But note that these tools are also very useful for avoiding falling into extinction or other bad trajectories, so this activity doesn't cleanly fall out on either side of the "make the future better" vs "make there be a future" debate

There are some other activities which might help make the future better without doing so much to increase the chance of having a future, e.g.:

* Try to propagate "good" values (I first wrote "enlightenment" instead of "good", since I think the truth-seeking element is especially important for ending up somewhere good; but others may differ), to make it more likely that they're well-represented in whatever entities end up steering

* Work to anticipate and reduce the risk of worst-case futures (e.g. by cutting off the types of process that might lead there)

However, these activities don't (to me) seem as high leverage for improving the future as the more mixed-purpose activities.

I want to see a bargain solver for aligning AI to groups: a technical solution that would let AI systems solve the pie-cutting problem for groups and get them as much of what they want as possible. The best solutions I've seen for maximizing long-run value involve using a bargain solver to decide what ASI does, which preserves the richness and cardinality of people's value functions and gives everyone as much of what they want as possible, weighted by importance. (See WWOTF Afterwards, and the small literature on bargaining-theoretic approaches to moral uncertainty.) But existing democratic approaches to AI alignment don't seem to fully leverage AI tools; instead, they align AI systems to democratic processes that aren't themselves empowered with AI tools (e.g. CIP's and CAIS's alignment to the written output of citizens' assemblies). Moreover, in my experience the best way to make something happen is just to build the solution. If you might be interested in building this tool and have the background, I would love to try to connect you to funding for it.
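To make "bargain solver" concrete, here is a minimal sketch using the weighted (asymmetric) Nash bargaining solution, one standard bargaining solution; the post doesn't commit to any particular one, so this choice is an assumption. It picks, from a finite menu of options, the one maximizing the weighted product of each agent's gain over their disagreement point, which uses cardinal utilities and importance weights. `nash_bargain` and its parameters are hypothetical, and a real system would bargain over lotteries or richer policy spaces rather than a fixed list.

```python
import math
from typing import Dict, List, Optional, Sequence

def nash_bargain(
    options: Sequence[str],
    utilities: Dict[str, List[float]],  # agent -> cardinal utility of each option
    disagreement: Dict[str, float],     # agent -> utility if no agreement is reached
    weights: Optional[Dict[str, float]] = None,  # agent -> bargaining weight
) -> Optional[str]:
    """Return the option maximizing the weighted Nash product, or None if no
    option leaves every agent strictly better off than disagreement."""
    weights = weights or {agent: 1.0 for agent in utilities}
    best_option, best_score = None, -math.inf
    for j, option in enumerate(options):
        gains = {a: utilities[a][j] - disagreement[a] for a in utilities}
        if any(g <= 0 for g in gains.values()):
            continue  # someone prefers no deal, so this option isn't acceptable
        # Sum of weighted log-gains = log of the weighted Nash product (same argmax).
        score = sum(weights[a] * math.log(g) for a, g in gains.items())
        if score > best_score:
            best_option, best_score = option, score
    return best_option

options = ["A", "B", "C"]
utilities = {"alice": [12.0, 5.0, 1.0], "bob": [1.0, 5.0, 12.0]}
print(nash_bargain(options, utilities, {"alice": 0.0, "bob": 0.0}))  # B
```

Here a plain utility sum would favor A or C (13 each) over B (10), while the Nash product picks the compromise B; giving Alice weight 3 and Bob weight 1 shifts the outcome to A.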

For deeper motivation see here.
