Quick takes

I have a bunch of disagreements with Good Ventures and how they are allocating their funds, but also Dustin and Cari are plausibly the best people who ever lived.

Looks like Mechanize is choosing to be even more irresponsible than we previously thought. They're going straight for automating software engineering. Would love to hear their explanation for this.

"Software engineering automation isn't going fast enough"[1] - oh really?

This seems even less defensible than their previous explanation of how their work would benefit the world.

[1] Not an actual quote

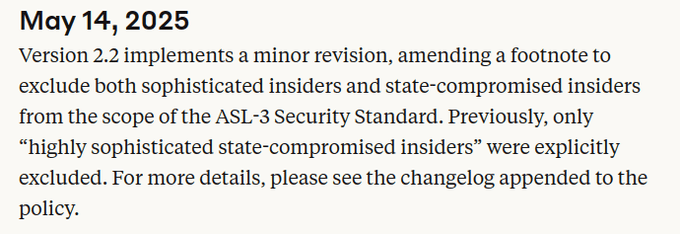

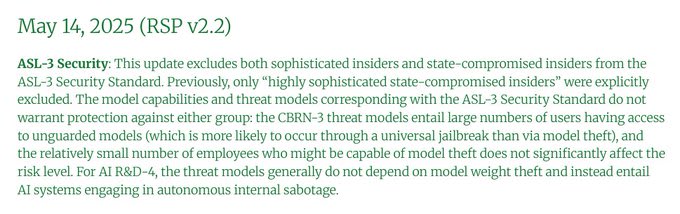

A week ago, Anthropic quietly weakened their ASL-3 security requirements. Yesterday, they announced ASL-3 protections.

I appreciate the mitigations, but quietly lowering the bar at the last minute so you can meet requirements isn't how safety policies are supposed to work.

(This was originally a tweet thread (https://x.com/RyanPGreenblatt/status/1925992236648464774) which I've converted into a quick take. I also posted it on LessWrong.)

What is the change and how does it affect security?

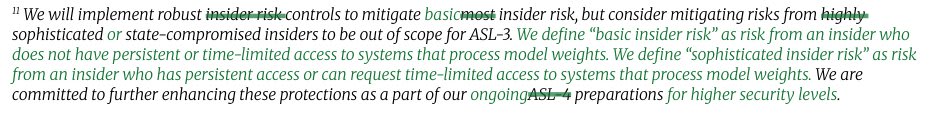

9 days ago, Anthropic changed their RSP so that ASL-3 no longer requires being robust to employees trying to steal model weights if the employee has any access to "systems that process model weights".

Anthropic claims this change is minor (and calls insiders with this access "sophisticated insiders").

But I'm not so sure it's a small change: we don't know what fraction of employees could get this access, and "systems that process model weights" isn't explained.

Naively, I'd guess that access to "systems that process model weights" includes employees being able to operate on the model weights in any way other than through a trusted API (a restricted API that we're very confident is secure). If that's right, it could be a high fraction! So, this might be a large reduction in the required level of security.

If this does actually apply to a large fraction of technical employees, then I'm also somewhat skeptical that Anthropic can actually be "highly protected" from (e.g.) organized cybercrime groups without meeting the original bar: hacking an insider and using their access is typical!

Also, one of the easiest ways for security-aware employees to evaluate security is to think about how easily they could steal the weights. So, if you don't aim to be robust to employees, it might be much harder for employees to evaluate the level of security and then complain about not meeting requirements[1].

Anthropic's justification and why I disagree

Anthropic justified the change by

I've now spoken to ~1,400 people as an advisor with 80,000 Hours, and if there's a quick thing I think is worth more people doing, it's doing a short reflection exercise about one's current situation.

Below are some questions (or clusters of questions) I often ask in an advising call to facilitate this. I'm often surprised by how much purchase one can get from this alone: noticing one's own motivations, weighing one's personal needs against a yearning for impact, identifying blind spots in current plans that could be triaged and easily addressed, etc.

A long list of semi-useful questions I often ask in an advising call

1. Your context:
   1. What’s your current job like? (or like, for the roles you’ve had in the last few years…)
      1. The role
      2. The tasks and activities
      3. Does it involve management?
      4. What skills do you use? Which ones are you learning?
      5. Is there something in your current job that you want to change, that you don’t like?
2. Default plan and tactics
   1. What is your default plan?
   2. How soon are you planning to move? How urgently do you need to get a job?
   3. Have you been applying? Getting interviews, offers? Which roles? Why those roles?
   4. Have you been networking? How? What is your current network?
   5. Have you been doing any learning, upskilling? How have you been finding it?
   6. How much time can you find to do things to make a job change? Have you considered e.g. a sabbatical or going down to a 3/4-day week?
   7. What are you feeling blocked/bottlenecked by?
3. What are your preferences and/or constraints?
   1. Money
   2. Location
   3. What kinds of tasks/skills would you want to use? (writing, speaking, project management, coding, math, your existing skills, etc.)
   4. What skills do you want to develop?
   5. Are you interested in leadership, management, or individual contribution?
   6. Do you want to shoot for impact? H

There is going to be a Netflix series on SBF titled The Altruists, so EA will be back in the media. I don't know how EA will be portrayed in the show, but regardless, now is a great time to improve EA communications. More specifically, we should be a lot louder about historical and current EA wins; we just don't talk about them enough!

A snippet from Netflix's official announcement post:
