It seems to me that betting so heavily on FTX and SBF was an avoidable failure. So what could we have done ex ante to avoid it?

You have to suggest things we could have actually done with the information we had. Some examples of information we had:

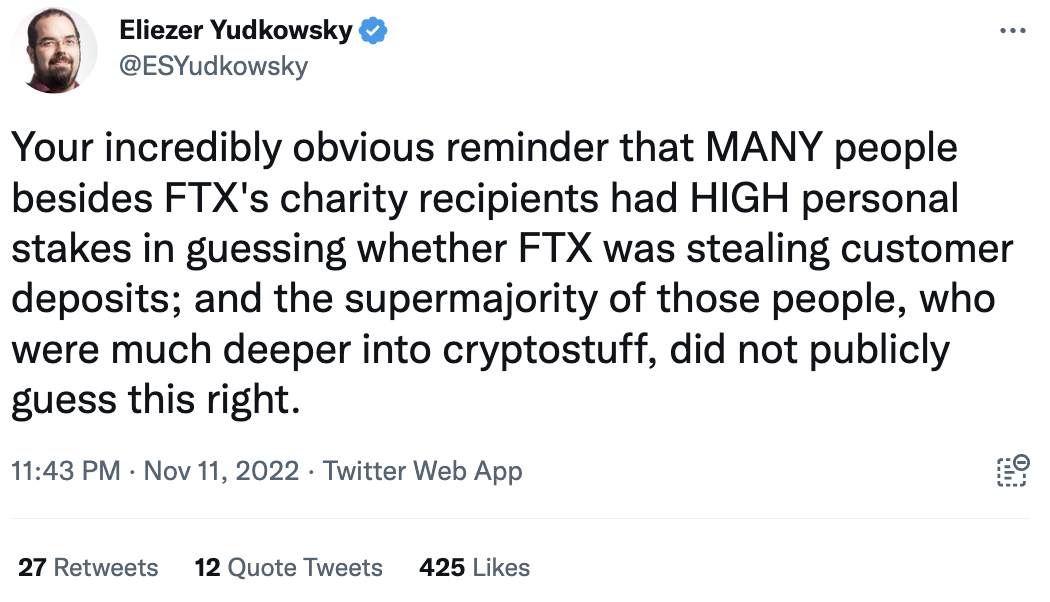

First, the best counterargument:

Then again, if we think we are better at spotting x-risks than these people, maybe this should make us update towards being worse at predicting things.

Also, I know there is a temptation to wait until the dust settles, but I don't think that's right. We are a community with useful information-gathering technology, and we are capable of discussing this here.

Things we knew at the time

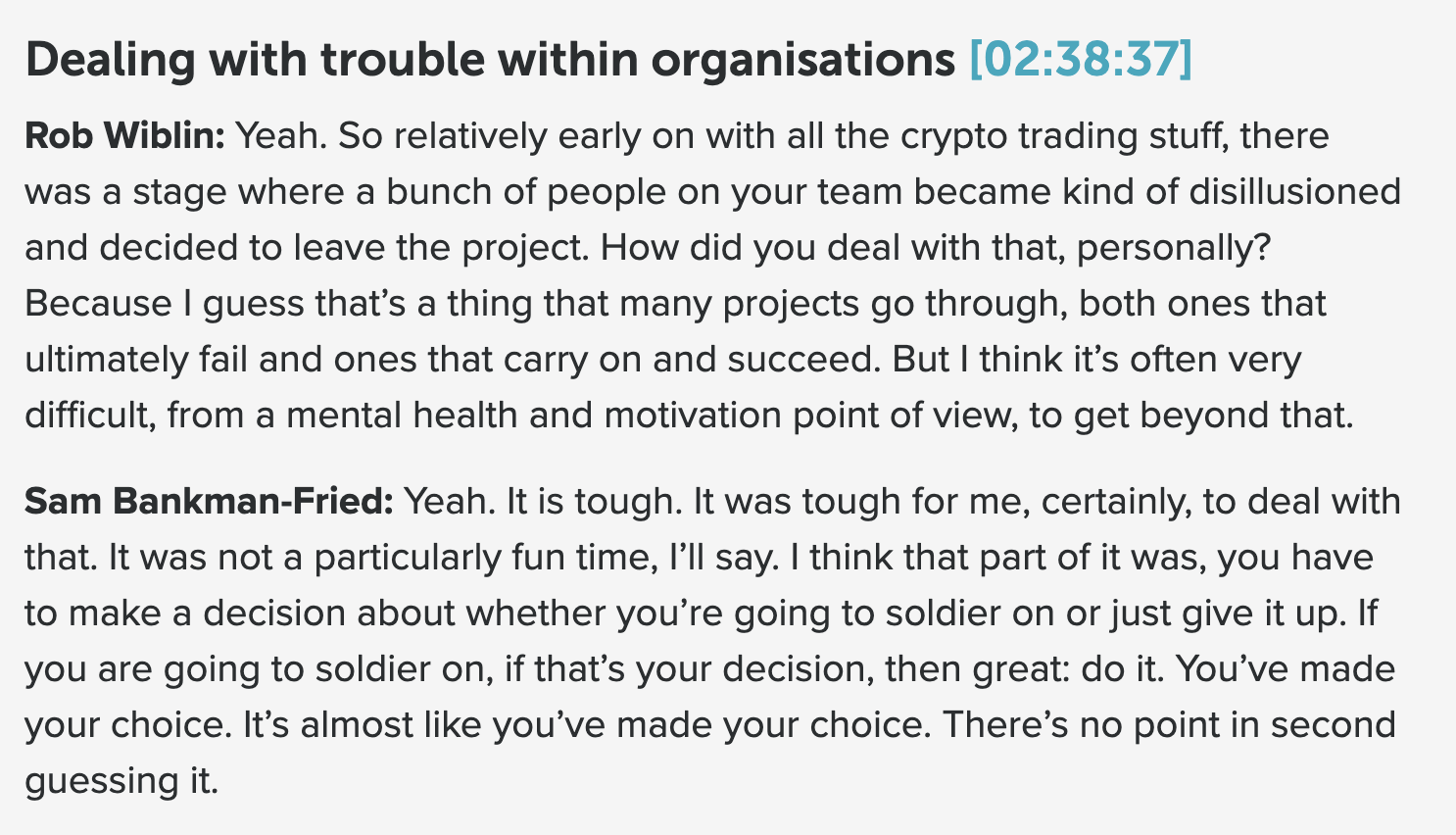

We knew that about half of Alameda's staff left at one time. I'm pretty sure many of them are EAs or know EAs, and they would have had some sense of this.

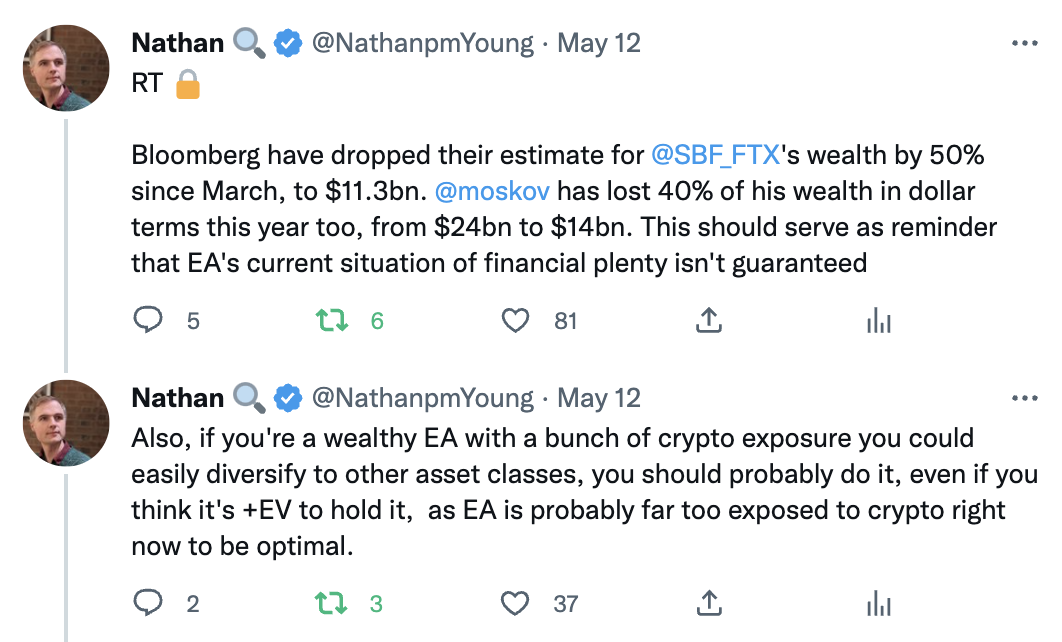

We knew that SBF's wealth was a very high proportion of effective altruism's total wealth. And we ought to have known that something that took him down would be catastrophic to us.

This was Charles Dillon's take; he tweets from a locked account and gave me permission to tweet it.

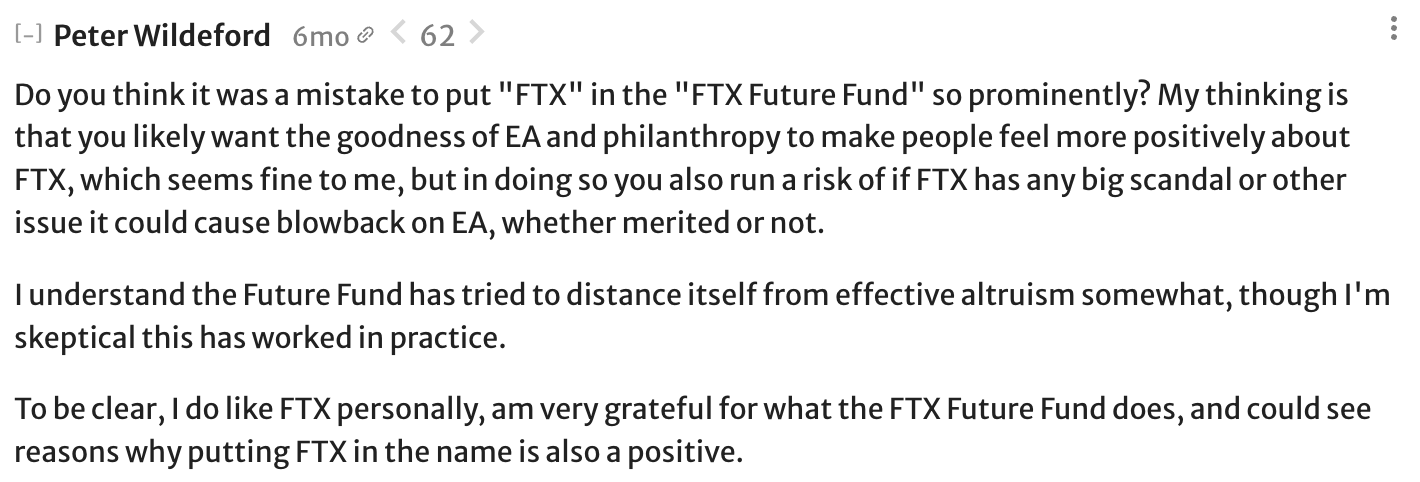

Peter Wildeford noted the possible reputational risk 6 months ago:

We knew that corruption is possible and that large institutions need to work hard to avoid being co-opted by bad actors.

Many people found crypto distasteful or felt that it could be a scam.

FTX's Chief Compliance Officer, Daniel S. Friedberg, had behaved fraudulently in the past. This is from August 2021:

In 2013, an audio recording surfaced that made mincemeat of UB’s original version of events. The recording of an early 2008 meeting with the principal cheater (Russ Hamilton) features Daniel S. Friedberg actively conspiring with the other principals in attendance to (a) publicly obfuscate the source of the cheating, (b) minimize the amount of restitution made to players, and (c) force shareholders to shoulder most of the bill.

A good policy change should be general enough to prevent Ben Delo-style catastrophes too (Delo being a criminal crypto EA billionaire from a few years back). Let's not overfit to this data point!

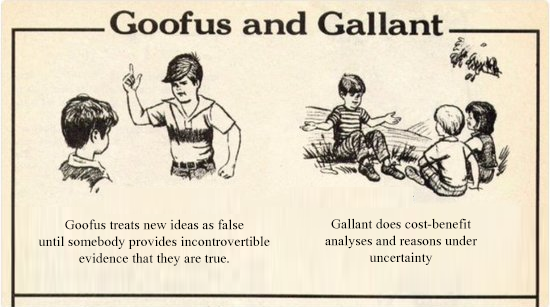

One takeaway I'm tossing about is adopting a much more skeptical posture towards the ultra-wealthy, by which I mean always having your guard up, even if they seem squeaky-clean (so far). With the ultra-wealthy/ultra-powerful, don't start from a position of trust and adjust downwards when you hear bad stories; do the opposite.

It can’t be right to cut all ties to the super-rich, for all the classic reasons (e.g. the feminist movement was sped up a lot by the funding which created the pill). Hundreds of thousands of people would die if we did this. Ambitious attempts to fix the world’s pressing problems depend on having resources.

But there are plenty of moderate options, too.

Here are three ways I think learning to mistrust the ultra-wealthy could help matters.

A general posture of skepticism towards power might have helped whenever someone raised qualms about FTX, or SBF himself, so that the warnings wouldn't have fallen on deaf ears and could have been pieced together over time.

It would also motivate infrastructure to protect EA against SBFs and Delos: for example, ensuring good governance and whistleblowing mechanisms, or asking billionaires to donate their wealth to separate entities now, in advance of grants being allocated, so we know they're for real / the commitment is good. (Each of these mechanisms might not be a good idea, I don't know; I just mean that the general category probably has useful things in it.)

It would also imply 'stop valorizing them'. If we hadn't got to the point where I have a sticker by my bed reading 'What Would SBF Do?' (from EAG SF 2022*), EAs like me could hold our heads higher (I should probably remove that). Other ways to valorize billionaires include inviting them to events, podcasts, and parties, working for them, and doing free PR for them. Let's think twice about that next time.

Not saying 'stop talking to Vitalik', but: the alliance with billionaires has been very cosy, and perhaps it should cool, or become uneasy.

The above is strongly expressed, but I'm mainly trying this view on for size.

*produced by a random attendee rather than the EAG team

My current understanding is that Ben Delo was convicted over a failure to implement a particular regulation that strikes me as not particularly tied to the goodness of humanity; I know very little about him one way or the other but do not currently have him in my "poor moral standing" column on the basis of what I've heard.

Obvious potential conflicts of interest are obvious: MIRI received OP funding at one point that was passed through by way of Ben Delo. I don't recall meeting or speaking with Ben Delo at any length and don't make any positive endorsement of him.