In my opinion, we have known for at least two years that the risk of AI catastrophe is too high and too close. At that point, it’s time to work on solutions (in my case, as leader of PauseAI US, using protests and lobbying to advocate an indefinite pause on frontier model development until it’s safe to proceed).

Not every policy proposal is as robust to timeline length as PauseAI. It can be totally worth it to make a quality timeline estimate, both to inform your own work and as a tool for outreach (like ai-2027.com). But most of these timeline updates simply are not decision-relevant if you have a strong intervention. If your intervention is so fragile and contingent that every little update to timeline forecasts matters, it’s probably too finicky to be working on in the first place.

I think people are psychologically drawn to discussing timelines all the time so that they can have the “right” answer and because it feels like a game, not because it really matters the day and the hour of… what are these timelines even leading up to anymore? They used to be to “AGI”, but (in my opinion) we’re basically already there. Point of no return? Some level of superintelligence? It’s telling that they are almost never measured in terms of actions we can take or opportunities for intervention.

Indeed, it’s not really the purpose of timelines to help us to act. I see people make bad updates on them all the time. I see people give up projects that have a chance of working but might not reach their peak returns until 2029, only to spend a few precious months looking for a faster project that is, not surprisingly, also worse (or else why weren’t they doing it already?) and probably even lower EV over the same time period! For some reason, people tend to think they have to have their work completed by the “end” of the (median) timeline or else it won’t count. They would do better to see their impact as the integral over whatever part of the project does fall within the median timeline estimate, and to take into account the worlds north of the median, where the timeline runs longer. I think these people would have done better knowing about 90% less of the timeline discourse than they did.
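To make the "integral" framing concrete, here is a minimal sketch of the comparison, assuming a simple model in which a project's work only counts in the years before some deadline, and the deadline year is uncertain. Every number, and the names `expected_impact`, `slow`, and `fast`, are hypothetical and chosen purely for illustration; they are not forecasts.

```python
# Illustrative sketch only: every number below is made up for the example.

def expected_impact(returns_by_year, p_deadline):
    """Expected cumulative impact when only work done before the deadline counts.

    returns_by_year: {year: impact the project delivers during that year}
    p_deadline:      {year: probability the deadline arrives at the start of that year}
    """
    total = 0.0
    for deadline, prob in p_deadline.items():
        # Sum the project's returns over the years that fall before this deadline.
        counted = sum(v for year, v in returns_by_year.items() if year < deadline)
        total += prob * counted
    return total

# Hypothetical timeline distribution: median at 2028, with a long right tail.
p_deadline = {2027: 0.25, 2028: 0.25, 2029: 0.20, 2031: 0.30}

# Hypothetical "slow" project: ramps up and reaches its peak in 2029.
slow = {2026: 1, 2027: 2, 2028: 4, 2029: 8, 2030: 8}

# Hypothetical "fast" replacement: a year spent searching, then flat, lower returns.
fast = {2026: 0, 2027: 2, 2028: 2, 2029: 2, 2030: 2}

ev_slow = expected_impact(slow, p_deadline)
ev_fast = expected_impact(fast, p_deadline)
print(f"slow: {ev_slow:.1f}, fast: {ev_fast:.1f}")
```

Under these made-up numbers the slow project comes out ahead both in expectation and in the world where the deadline lands exactly on the median, but the point is the shape of the calculation, not the figures: partial progress before the deadline still counts, and so do the worlds past the median.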

I don’t think AI timelines pay rent for all the oxygen they take up, and they can be super scary to new people who want to get involved, without really helping them act. Maybe I’m wrong and you find lots of action-relevant insights there. But if timeline updates frequently change your actions, your intervention may not be robust enough to the assumptions that go into the timeline, or to the timeline’s uncertainty, anyway. In which case, you should probably pursue a more robust intervention. Like, if you are changing your strategy every time a new model drops with new capabilities that advance timelines, you clearly need to take a step back and account for more and more powerful models in your intervention in the first place. Looks like you shouldn’t be playing it so close to the trend line. PauseAI, for example, is an ask that works under a wide variety of scenarios, including AI development going faster than we thought, because it is not contingent on the exact level of development we have reached since we passed GPT-4.

Be part of the solution. Pick a timeline-robust intervention. Talk less about timelines and more about calling your Representatives.

Thanks Holly. I agree that fixating on just trying to answer the "AI timelines" question won't be productive for most people. Though, we all need to come to terms with it somehow. I like your callout for "timeline-robust interventions". I think that's a very important point. Though I'm not sure that implies calling your representatives.

I disagree that "we know what we need to know". To me, the proper conversation about timelines isn't just "when AGI", but rather, "at what times will a number of things happen", including various stages of post-AGI technology, and AI's dynamics with the world as a whole. It incorporates questions like "what kinds of AIs will be present".

This allows us to make more prudent interventions: what technical AI safety and AI governance work you need depends on the nature of the AI that will be built. The AI we need to address isn't just orthogonality-thesis-driven paperclip maximizers.

Seeing the way AI is emerging, I think it's clear that some classic AI safety challenges are not as relevant anymore. For example, it seems to me that "value learning" is looking much easier than classic AI safety advocates thought. But versions of many classic AI safety challenges are still relevant. The same issue remains: if we can't verify that something vastly more intelligent than us is acting in our interests, then we are in peril.

I don't think it would be right for everyone to be occupied with such AI timeline and AI scenario questions, but I think they deserve very strong efforts. If you are trying to solve a problem, the most important thing to get right is what problem you're trying to solve. And what is the problem of AI safety? That depends on what kind of AI will be present in the world and what humans will be doing with it.

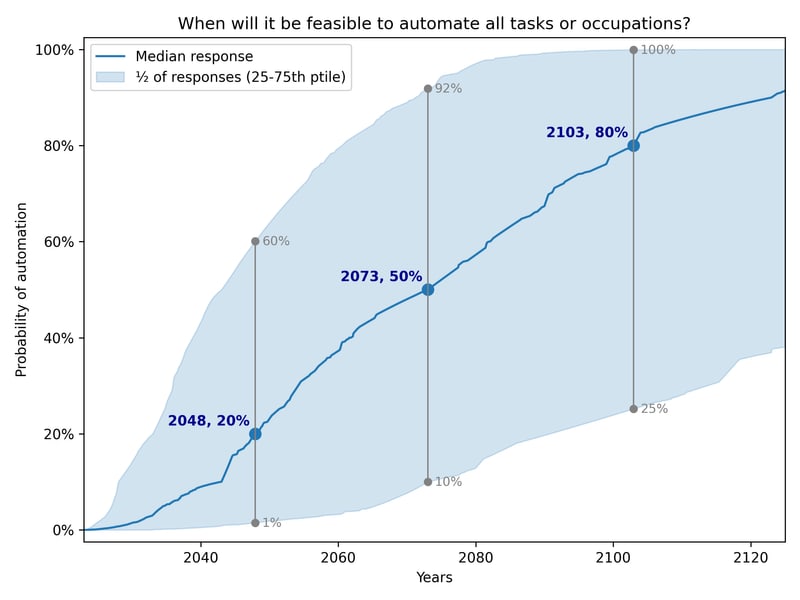

Yeah, I was mostly thinking about policy - if we're facing 90% unemployment, or existential risk, and need policy solutions, the difference between 5 and 7 years is immaterial. (There are important political differences, but the needed policies are identical.)