The Global Priorities Institute at Oxford University has shut down as of July. More information, a publication list and additional groups are on its website. Surprised this hasn't been brought up given how important GPI was in establishing EA as a legitimate academic research space. By my count, barring Trajan House, it now appears that EA has officially been annexed from Oxford. This feels like a significant change post-FTX - I see pros and cons to not being tied to one university. Thoughts?

By my count, barring Trajan House, it now appears that EA has officially been annexed from Oxford

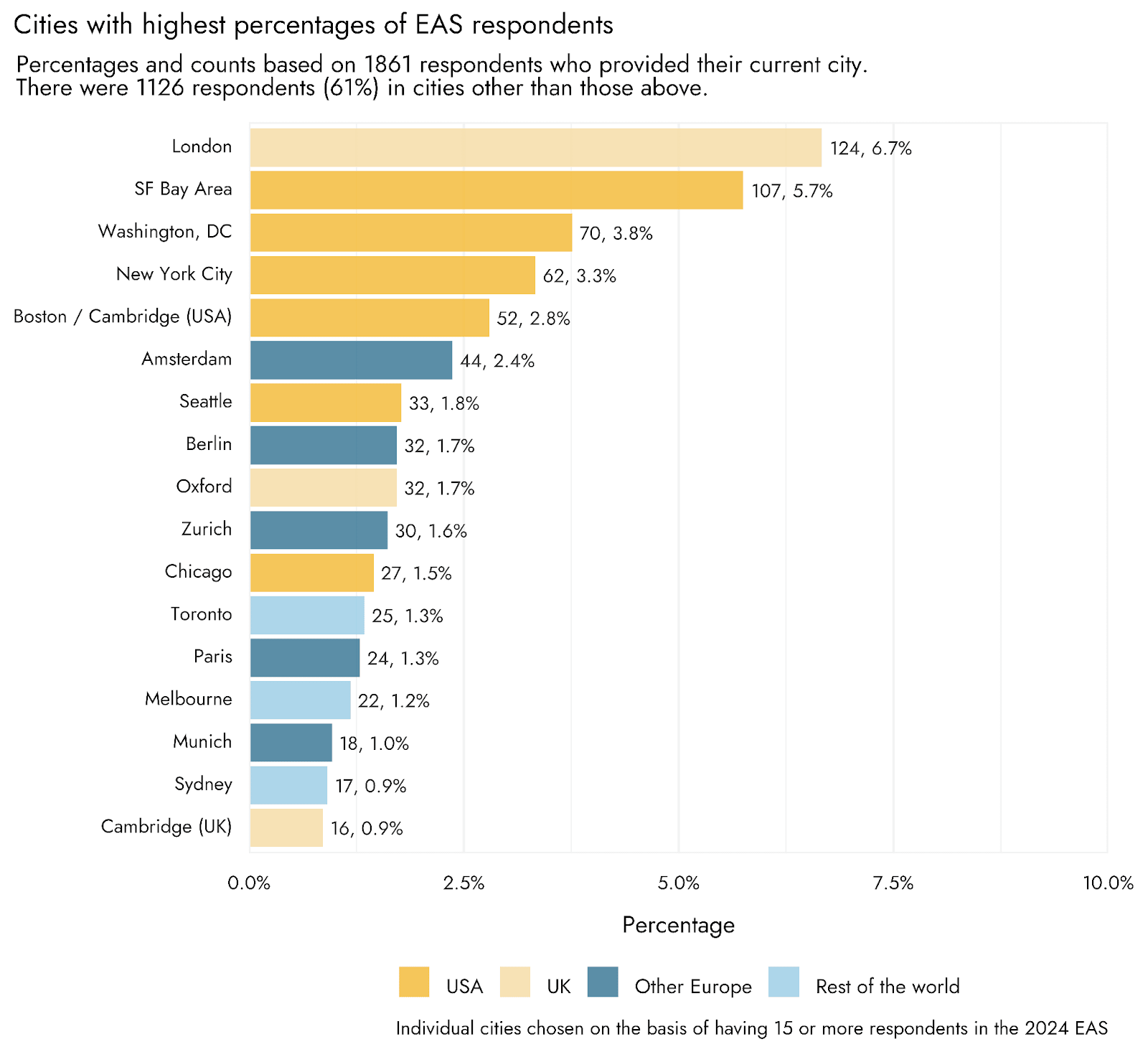

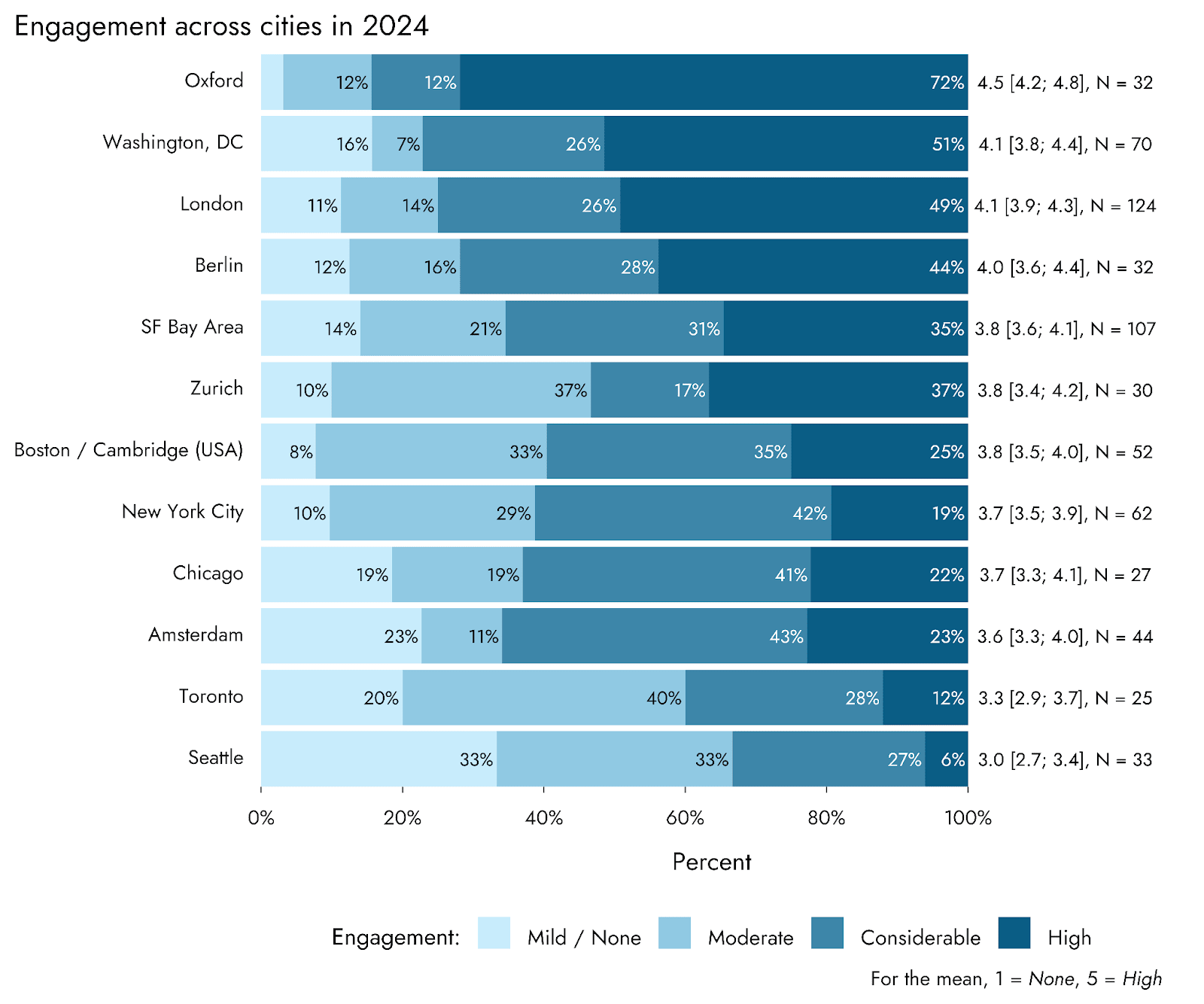

Do you mean Oxford University? That could be right (though a little strong, I'm sure it has its sympathisers). Noting that Oxford is still one of the cities (towns?) with the highest density of EAs in the world. People here are also very engaged (i.e. probably work in the space).

Forethought appears to have spun out and become a separate organization. Oxford Martin AIGI does have a handful of people who were affiliated with the former Future of Humanity Institute, but the OM AIGI itself is not directly affiliated with EA. Yes, the college chapter EA Oxford is still running, but I meant professional working groups (sorry if that wasn't clear).

If you would like to receive email updates about any future research endeavours that continue the mission of the Global Priorities Institute, you can also sign up here.

I signed up for the mailing list! Is there any other way to support research similar to GPI's work—for instance, would it make sense to donate to Forethought Foundation?

That's great, Eevee! Forethought has shifted to focusing on AGI (so yes, I'm sure they need support, but it isn't doing work like GPI, just to be clear). There is a list at the bottom of the page of other organizations, but none of them work directly on the philosophical approach to cause and resource prioritization - which is what made GPI so valuable to the movement. My best recommendation would be to broadly support groups like GiveWell, Giving What We Can and The Life You Can Save, since they do some level of research (really all groups do). You could also follow specific researchers' work and reach out directly to see if they need support.

EDIT: just confirmed that FHI shut down as of April 16, 2024

It sounds like the Future of Humanity Institute may be permanently shut down.

Background: FHI went on a hiring freeze/pause back in 2021, and the majority of staff left for other EA organizations (many with the spin-off of the Centre for the Governance of AI). Since then there has been no public communication regarding its future return, until now...

The Director, Nick Bostrom, updated the bio section on his website with the following commentary [bolding mine]:

"...Those were heady years. FHI was a unique place - extremely intellectually alive and creative - and remarkable progress was made. FHI was also quite fertile, spawning a number of academic offshoots, nonprofits, and foundations. It helped incubate the AI safety research field, the existential risk and rationalist communities, and the effective altruism movement. Ideas and concepts born within this small research center have since spread far and wide, and many of its alumni have gone on to important positions in other institutions.

Today, there is a much broader base of support for the kind of work that FHI was set up to enable, and it has basically served out its purpose. (The local faculty administrative bureaucracy has also become increasingly stifling.) I think those who were there during its heyday will remember it fondly. I feel privileged to have been a part of it and to have worked with the many remarkable individuals who flocked around it."

This language suggests that FHI has officially closed. Can anyone at Trajan/Oxford confirm?

Also curious if there is any project in place to conduct a post mortem on the impact FHI has had on the many different fields and movements? I think it's important to ensure that FHI is remembered as a significant nexus point for many influential ideas and people who may impact the long term.

In other news, Bostrom's new book "Deep Utopia" is available for pre-order (coming March 27th).

Further evidence: The 80,000 Hours website footer no longer mentions FHI. Until February 2023, the footer contained the following statement:

We're affiliated with the Future of Humanity Institute and the Global Priorities Institute at the University of Oxford.

By February 21, that statement was replaced with a paragraph simply stating that 80k is part of EV. The references to GPI, CEA and GWWC were also removed:

Yeah, it looks like the FHI website's news section hasn't been updated since 2021. Nor are there any publications since 2021.

Atlas Fellowship has announced it's shutting down its program - see the full letter on their site: https://www.atlasfellowship.org

Reasons listed for the decision are: 1) the funding landscape has changed, 2) the programs were less impactful than expected, and 3) some staff think they'll have more impact pursuing careers in AI safety.

The Effective Ventures Foundation UK’s Full Accounts for Fiscal Year 2022 have been released via the UK Companies House filings (August 30 2023 entry - it won't let me direct link the PDF).

- Important to note that as of June 2022 “EV UK is no longer the sole member of EV US and now operate as separate organizations but coordinate per an affiliation agreement (p11).”

- It’s noted that Open Philanthropy was, for the 2021/2022 fiscal year, the primary funder for the organization (p8).

- EVF (UK&US) had consolidated income of just over £138 million (as of June 2022). That’s a ~£95 million increase from 2021.

- Consolidated expenses for 2022 were ~ £79 million - an increase of £56 million from 2021 (still p8).

- By end of fiscal year consolidated net funds were just over £87 million of which £45.7 million were unrestricted.

- (p10) outlines EVF’s approach to risk management and mentions FTX collapse.

- A lot of boilerplate in this document, so you may want to skip ahead to page 26 for more specific breakdowns

- EVF made grants totaling ~£50 million (to institutions and 826 individuals), an almost £42 million increase in one year (p27)

- A list of grant breakdowns (p28); a lot of recognizable organizations listed, from AMF to BERI and ACE

- also a handful of orgs I do not recognize or vague groupings like “other EA organizations” for almost £3 million

- Expense details (p30): main programs are (1) Core Activities (2) 80,000 Hours (3) Forethought and (4) Grant-making

- Expenses totaled £79 million for 2022 (a £65 million increase from 2021) which seems like a huge jump for just one year

- further expense details are on (p31-33) and tentatively show a £23.3 million jump between 2021 and 2022 [but the table line items are NOT the same across 2021/2022 so it’s hard to tell - if anyone can break this down better please do in the comments]

- We may now have a more accurate number of £1.6 million spent on marketing for What We Owe The Future (which isn’t the $10 million I’ve heard floating around, but still seems like a lot for one book) (p31)

- staffing details mentioned (p35)

- cryptocurrencies on (p37)

- real estate (p39) <£15 million went to Wytham Abbey and <£1.5 million went to Lakeside (another relatively new EA property)

- More details on specific organizations under EVF's purview begin on p43, specifically beginning-of-fiscal-year balances, income, expenses and end-of-fiscal-year balances

- The only thing that stood out to me on this page - Forethought overspent by ~£2 million?? Was it the marketing?

- (p46) FHI/GPI also overspent (on office costs), but Forethought doesn’t list its reasons, only that it got a grant to cover it (from whom, Open Phil or a private donor?)

- Restricted funds analysis on p47

- p53 (last one!) notes DGB has earned <£100 in royalties for 2021/2022 and The Precipice has earned £37,293 for both years

- p53 mentions EVF UK received donations of < £1.5 million from the FTX Foundation AND that the CEO of FTX was on the Board

- p53 mentions the charity commission investigation, name change from CEA and separation of EVF UK from EVF US

* A reminder that both EVF UK and US annual reports can be found on their website

EVF US has also released their 2022 990 tax form

* Note the fiscal year 2021-2022 (June) preceded the FTX collapse and leadership changes.
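One quick way to sanity-check the year-over-year figures in the list above is to back out the FY2021 baseline that each bullet implies. This is only a rough sketch in Python: it uses the rounded numbers quoted in this post (in £ millions), not the accounts themselves, and the labels and the helper name `implied_prior_year` are my own.

```python
# Rounded figures in GBP millions, copied from the bullets in this post.
# These are approximations from the summary, NOT values read from the PDF.
reported = {
    "income": {"fy2022": 138, "increase": 95},
    "expenses (p8 bullet)": {"fy2022": 79, "increase": 56},
    "expenses (p30 bullet)": {"fy2022": 79, "increase": 65},
    "grants": {"fy2022": 50, "increase": 42},
}


def implied_prior_year(fy2022, increase):
    """Return the FY2021 figure implied by a FY2022 total and its stated increase."""
    return fy2022 - increase


# Back out the FY2021 baseline for every line item.
implied = {name: implied_prior_year(**vals) for name, vals in reported.items()}

for name, value in implied.items():
    print(f"{name}: implied FY2021 ~ GBP {value}m")
```

The two expense bullets imply different FY2021 baselines (about £23 million versus about £14 million), so one of the two stated increases is probably mistyped; the table on p8 of the accounts is the place to check.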

If I messed up any of these numbers please let me know and I'll update. Thanks!

I'm not an accountant, but some of the jump between FY 2021 and FY 2022 may have to do with how EVF US was treated in certain respects?

On grantmaking, it appears that almost all of those grants came out of restricted funds (p. 30 / 32nd page of PDF).

The reported staff compensation (p. 36 / 38th page of PDF) does suggest the existence of many more staff salaries in the higher reporting bands in 2022 than 2021. However, could EVF US being a subsidiary of EVF UK for the 2022 reports impact this?

I skimmed the 990 -- also for FY 2022, which ended in June 2022 -- less carefully, but there is more granularity on some smaller grants if you want it on pp. 33-59. There are also specific salaries for trustees and the five highest paid employees (p. 7). About $8MM in 211 crypto contributions (p. 63) -- a figure that suggests some degree of reliance on donations from the crypto industry (don't know if these are FTX-related or not).

In case you haven't seen it, CEA has redone their website. I like the new look, and the content makes it much easier to understand the scope of their work. Bravo to whoever worked on this!

Wytham Abbey soft-launched earlier this year with its own team, but has now formally been added to EV's list of projects and is accepting workshop applications: https://www.wythamabbey.org

EA in the wild: I'm having trouble adding a screenshot but I recently made an online purchase and at the bottom of the checkout page was a "give 1% of your purchase to a high-impact cause" - and it was featuring Giving What We Can's funds!

Always fun to see EA in unexpected places. :)

Yes it was!