The Centre for Exploratory Altruism Research (CEARCH) is an EA organization working on cause prioritization research as well as grantmaking and donor advisory. This project was commissioned by the leadership of the Meta Charity Funders (MCF) – also known as the Meta Charity Funding Circle (MCFC) – with the objective of identifying what is underfunded vs overfunded in EA meta. The views expressed in this report are CEARCH's and do not necessarily reflect the position of the MCF.

Generally, by meta we refer to projects whose theory of change is indirect, working through improving the EA movement's ability to do good – for example, via cause/intervention/charity prioritization (i.e. improving our knowledge of what is cost-effective); effective giving (i.e. increasing the pool of money donated in an impact-oriented way); or talent development (i.e. increasing the pool and ability of people willing and able to work in impactful careers).

The full report may be found here (link). Note that the public version of the report is partially redacted, to respect the confidentiality of certain grants, as well as the anonymity of the people whom we interviewed or surveyed.

Quantitative Findings

Detailed Findings

To access our detailed findings, refer to our spreadsheet (link).

Overall Meta Funding

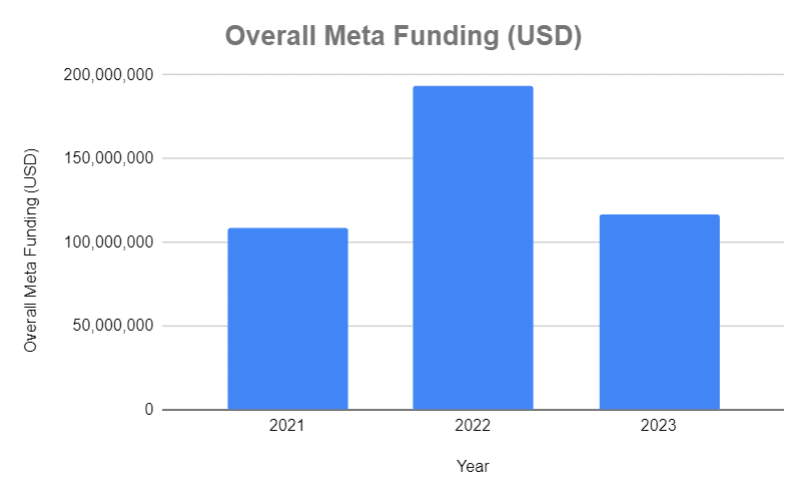

Aggregate EA meta funding saw rapid growth and equally rapid contraction over 2021 to 2023 – growing from 109 million in 2021 to 193 million in 2022, before shrinking back to 117 million in 2023. The analysis excludes FTX, as ongoing clawbacks mean that their funding has functioned less as grants and more as loans.

- Open Philanthropy (OP) is by far the biggest funder in the space, and changes in the meta funding landscape are largely driven by changes in OP's spending. For example, OP's global catastrophic risks (GCR) capacity building grants tripled from 2021 to 2022, before falling to twice the 2021 baseline in 2023.

- This finding is in line with Tyler Maule's previous analysis.
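As a quick arithmetic check on the aggregate figures above, the sketch below computes the year-on-year changes and the three-year total. The numbers come straight from the paragraph above (in millions, as reported); the variable and function names are purely illustrative.

```python
# Aggregate EA meta funding by year, in millions, as reported above (FTX excluded).
funding_by_year = {2021: 109, 2022: 193, 2023: 117}


def pct_change(old: float, new: float) -> float:
    """Percentage change going from `old` to `new`."""
    return (new - old) / old * 100


years = sorted(funding_by_year)
for prev, curr in zip(years, years[1:]):
    change = pct_change(funding_by_year[prev], funding_by_year[curr])
    print(f"{prev} -> {curr}: {change:+.0f}%")

total = sum(funding_by_year.values())
print(f"2021-2023 total: {total} million")
```

This gives roughly +77% from 2021 to 2022 and roughly -39% from 2022 to 2023, leaving 2023 only about 7% above 2021. The three-year total of 419 million is the pool that the cause-area and intervention breakdowns that follow divide up.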

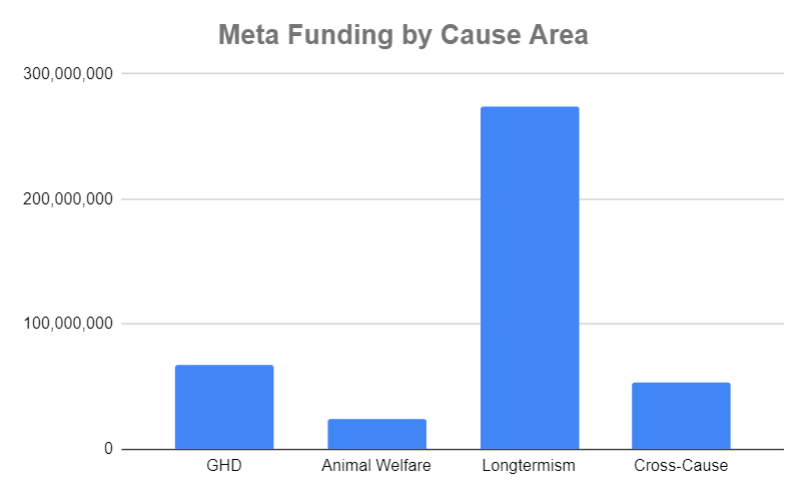

Meta Funding by Cause Area

The funding allocation by cause was, in descending order: (most funding) longtermism (e.g. AI, biosecurity, nuclear) (274 million) >> global health and development (GHD) (67 million) > cross-cause (53 million) > animal welfare (25 million) (least funding).
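To put the cause-area figures on a common scale, here is a minimal sketch converting them to shares of total meta funding. The amounts are the ones reported above (in millions); the dictionary keys are illustrative labels only.

```python
# Meta funding by cause area, 2021-2023 (millions), as reported above.
by_cause = {
    "longtermism": 274,
    "global health and development": 67,
    "cross-cause": 53,
    "animal welfare": 25,
}

total = sum(by_cause.values())  # matches the 419 million aggregate for 2021-2023
shares = {cause: amount / total * 100 for cause, amount in by_cause.items()}

# Print causes from most to least funded, with their percentage share.
for cause, share in sorted(shares.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{cause}: {share:.1f}%")
```

On these numbers, longtermism takes roughly 65% of meta funding, about four times GHD's roughly 16%, while animal welfare receives about 6%.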

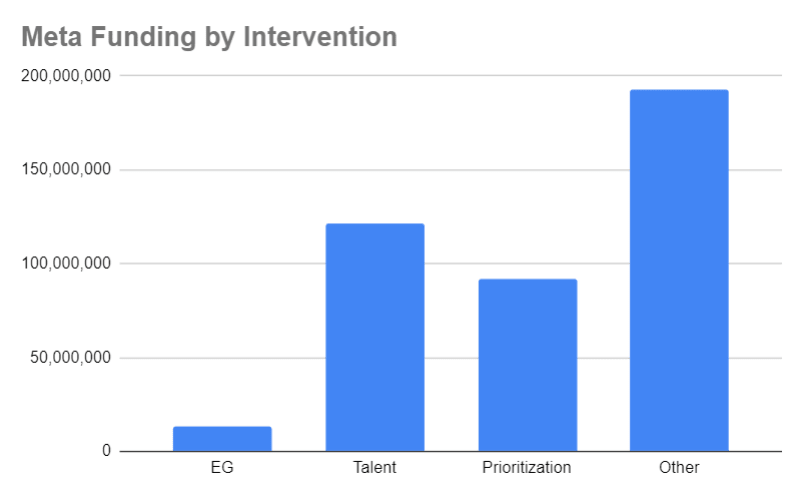

Meta Funding by Intervention

The funding allocation by intervention was, in descending order: (most funding) other/miscellaneous (e.g. general community building, including by national/local EA organizations; events; community infrastructure; co-working spaces; fellowships for community builders; production of EA-adjacent media content; translation projects; student outreach; and book purchases etc) (193 million) > talent (121 million) > prioritization (92 million) >> effective giving (13 million) (least funding). One note of caution – we believe our results overstate how well funded prioritization is, relative to the other three intervention types.

- We take into account what grantmakers spend internally on prioritization research, but for lack of time, we do not perform an equivalent analysis for non-grantmakers (i.e. imputing their budget to effective giving, talent, and other/miscellaneous).

- For simplicity, we classified all grantmakers (except GWWC) as engaging in prioritization, though some grantmakers (e.g. Founders Pledge, Longview) also do effective giving work.
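The three cuts of the data (by year, by cause, by intervention) should all partition the same pool of money. The sketch below checks that they reconcile and expresses the intervention figures as shares; all amounts are copied from the report text above, and the structure of the check is our own illustration.

```python
# Meta funding 2021-2023 (millions), broken down three ways as reported above.
by_year = {2021: 109, 2022: 193, 2023: 117}
by_cause = {"longtermism": 274, "GHD": 67, "cross-cause": 53, "animal welfare": 25}
by_intervention = {
    "other/miscellaneous": 193,
    "talent": 121,
    "prioritization": 92,
    "effective giving": 13,
}

# Each breakdown should sum to the same total.
totals = {
    "year": sum(by_year.values()),
    "cause": sum(by_cause.values()),
    "intervention": sum(by_intervention.values()),
}
assert len(set(totals.values())) == 1, f"breakdowns disagree: {totals}"

total = totals["intervention"]
for intervention, amount in by_intervention.items():
    print(f"{intervention}: {amount / total:.1%}")
```

All three cuts sum to 419 million, so the breakdowns are mutually consistent. Effective giving receives only about 3% of meta funding, and, given the caveat above, the roughly 22% shown for prioritization likely overstates its position relative to the other categories.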

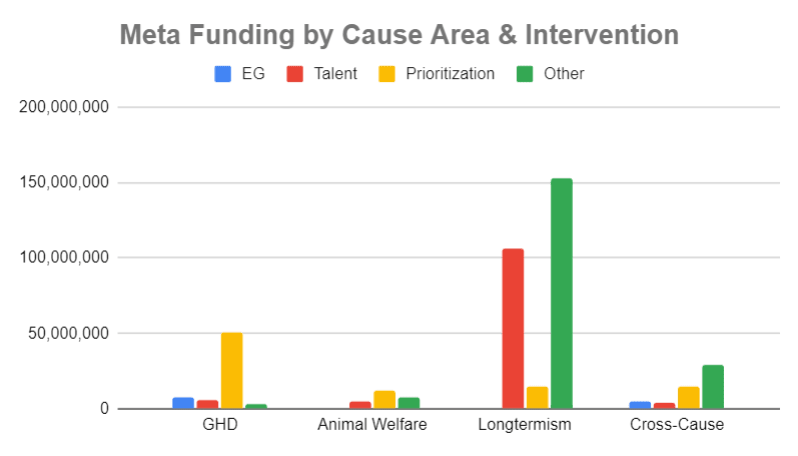

Meta Funding by Cause Area & Intervention

The funding allocation by cause/intervention subgroup was as follows:

- The areas with the most funding were: longtermist other/miscellaneous (153 million) > longtermist talent (106 million) > GHD prioritization (51 million).

- The areas with the least funding were: GHD other/miscellaneous (3 million) > animal welfare effective giving (0.5 million) > longtermist effective giving (0.1 million).

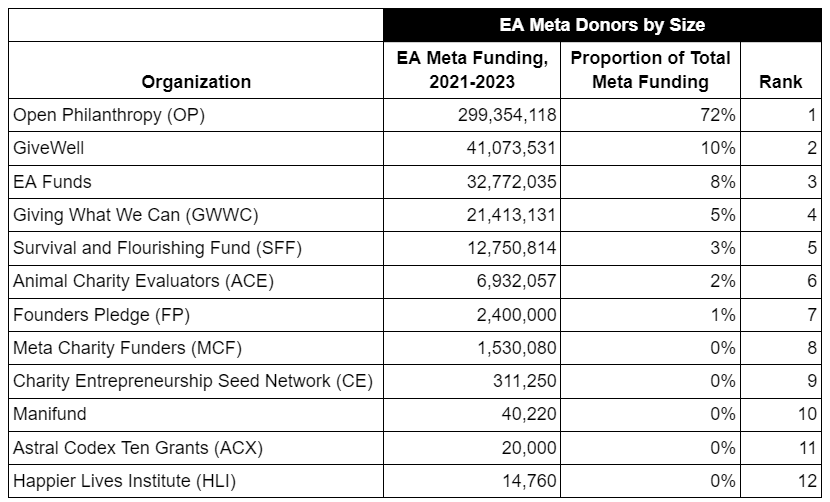

Meta Funding by Organization & Size Ranking

Open Philanthropy is by far the largest funder in the meta space, having given 299 million between 2021 and 2023; the next two biggest funders are GiveWell (41 million), which also receives OP funding, and EA Funds (which is also funded by OP to a very significant extent).
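For scale, the sketch below expresses the funder totals given above as shares of the 419 million aggregate. It is a naive calculation: it does not adjust for OP money that flows through GiveWell or EA Funds, and EA Funds is omitted because its total is not stated in this summary.

```python
# 2021-2023 totals (millions) stated above; EA Funds' total is not given here.
TOTAL_META_FUNDING = 419  # sum of the 2021, 2022 and 2023 aggregates
funder_totals = {"Open Philanthropy": 299, "GiveWell": 41}

for funder, amount in funder_totals.items():
    print(f"{funder}: {amount / TOTAL_META_FUNDING:.1%} of meta funding")
```

On this basis, OP alone accounts for roughly 71% of all meta funding over the period, and GiveWell for just under 10%.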

Meta Funding by Cause Area & Organization

- OP has allocated the lion's share of its meta funding towards longtermism.

- EA Funds, MCF, GWWC and CE have prioritized cross-cause meta.

- As expected, GiveWell, ACE and SFF focus on GHD, animal welfare and longtermist meta respectively.

- FP has supported GHD meta, as has HLI.

- ACX and Manifund have mainly (or exclusively) given to longtermist meta.

How Geography Affects Funding

The survey we conducted finds no strong evidence of funding being geographically biased towards the US/UK relative to the rest of the world; however, there is considerable reason to be sceptical of these findings:

- The small sample size means low statistical power, i.e. a low probability of rejecting the null hypothesis even if there is a real relationship between geography and funding success.

- Selection bias is likely in play. Organizations/individuals who have funding are systematically less likely to take the survey (since they are likely to be busy working on their funded projects – in contrast to organizations/individuals who did not get funding, who might find the survey an outlet for airing their dissatisfaction with funders). Hence, even if US/UK projects were in fact more likely to be funded, this might not show up in the data, because funded organizations/individuals self-select out of the survey pool.

- Applicants presumably decide to apply for funding based on their perceived chances of success. If US/UK organizations/individuals genuinely have a better chance of securing funding, and knew this fact, then even lower quality projects would choose to apply, thus lowering the average quality of US/UK applications in the pool. Hence, we end up comparing higher quality projects from the rest of the world to lower quality projects from the US/UK, and equal success in fundraising is not evidence that projects of identical quality from different parts of the world have genuinely equal access to funding.
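The first two concerns (low power and respondent selection) can be illustrated with a small simulation. This is a sketch under loudly hypothetical assumptions: the 60% vs 40% funding rates, the 20 respondents per region, the response rates, and the use of a pooled two-proportion z-test are all illustrative choices, not CEARCH's actual survey data or analysis. The third concern (applicants self-selecting on quality) is not modelled.

```python
import math
import random


def two_proportion_p_value(x1: int, n1: int, x2: int, n2: int) -> float:
    """Two-sided p-value for H0: p1 == p2 (pooled two-proportion z-test, normal approximation)."""
    p_pool = (x1 + x2) / (n1 + n2)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n1 + 1 / n2))
    if se == 0:
        return 1.0  # everyone funded or no one funded: no detectable difference
    z = (x1 / n1 - x2 / n2) / se
    return math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))


def simulated_power(p_us_uk: float, p_row: float, n_per_group: int,
                    alpha: float = 0.05, trials: int = 2000, seed: int = 0) -> float:
    """Fraction of simulated surveys in which the test rejects H0 at level alpha."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(trials):
        # Number of funded respondents in each region (Bernoulli draws summed).
        x1 = sum(rng.random() < p_us_uk for _ in range(n_per_group))
        x2 = sum(rng.random() < p_row for _ in range(n_per_group))
        if two_proportion_p_value(x1, n_per_group, x2, n_per_group) < alpha:
            rejections += 1
    return rejections / trials


def observed_funded_rate(true_rate: float, resp_funded: float, resp_unfunded: float) -> float:
    """Funded share among survey respondents when funded and unfunded
    applicants respond at different rates (resp_funded, resp_unfunded)."""
    funded = true_rate * resp_funded
    unfunded = (1 - true_rate) * resp_unfunded
    return funded / (funded + unfunded)


# Hypothetical scenario: a real 60% vs 40% gap in funding success.
print(simulated_power(0.6, 0.4, n_per_group=20))
us_uk = observed_funded_rate(0.6, resp_funded=0.1, resp_unfunded=0.5)
row = observed_funded_rate(0.4, resp_funded=0.1, resp_unfunded=0.5)
print(round(us_uk - row, 3))
```

With 20 respondents per region, the simulated power to detect a genuine 20-point gap is well below the conventional 80% target. If funded applicants also respond at a fifth of the rate of unfunded ones, the gap visible among respondents shrinks from 0.20 to about 0.11, making a real difference even harder to detect.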

Overall, we would not update much away from a prior that US/UK-based projects indeed find it easier to get funding due to proximity to grantmakers and donors in the US and UK. To put things in context, the biggest grantmakers on our list are generally based in SF or London (though this is a generalization, to the extent that their teams are global in nature).

Core vs Non-Core Funders

Overall, the EA meta grantmaking landscape is highly concentrated, with core funders (i.e. OP, EA Funds & SFF) making up 82% of total funding.
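Translating the 82% share into absolute terms, the sketch below applies it to the 419 million total for 2021-2023. Because the 82% figure is rounded, the resulting amounts are approximate.

```python
TOTAL_META_FUNDING = 419  # millions, 2021-2023
CORE_SHARE = 0.82         # OP + EA Funds + SFF, as reported (rounded)

core_funding = CORE_SHARE * TOTAL_META_FUNDING
other_funding = TOTAL_META_FUNDING - core_funding
print(f"core funders: ~{core_funding:.0f} million")
print(f"all other funders: ~{other_funding:.0f} million")
```

That is, roughly 344 million came from the three core funders, leaving only about 75 million for every other meta funder combined.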

Biggest EA Organizations

The funding landscape is also fairly skewed on the recipients' end, with the top 10 most important EA organizations receiving 31% of total funding.

Expenditure Categories

EA organizations tend to spend approximately 58% of their budget on salary, 8% on non-salary staff costs, and 35% on all other costs (these figures sum to 101% due to rounding).

Qualitative Findings

Outline

We interviewed numerous experts, including but not limited to staff employed by (or donors associated with) the following organizations: OP, EA Funds, MCF, GiveWell, ACE, SFF, FP, GWWC, CE, HLI and CEA. We also surveyed the EA community at large. We are grateful to everyone involved for their valuable insights and invaluable help.

Prioritizing Direct vs Meta

Crucial considerations include (a) the cost-effectiveness of the direct work you yourself would otherwise fund, relative to the cost-effectiveness of the direct work the average new EA donor funds; and (b) whether opportunities are getting better or worse.
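Consideration (a) can be made concrete with a one-line comparison. This is a minimal sketch assuming that meta spending works by moving new money into direct work (as in effective giving); the `multiplier` parameter and all example numbers are hypothetical, and consideration (b), whether opportunities are improving or worsening, is not modelled.

```python
def meta_vs_direct_ratio(multiplier: float, ce_new_donor: float, ce_own: float) -> float:
    """Good done per dollar given to meta, relative to a dollar given to your own direct pick.

    multiplier   -- hypothetical: dollars newly donated per dollar spent on meta
    ce_new_donor -- cost-effectiveness of the direct work the average new donor funds
    ce_own       -- cost-effectiveness of the direct work you would otherwise fund
    (both cost-effectiveness figures in the same units, e.g. good done per dollar)
    """
    return multiplier * ce_new_donor / ce_own


# Hypothetical: meta moves $5 per $1, but new donors pick work 30% as effective as yours.
print(meta_vs_direct_ratio(5, 0.3, 1.0))  # a ratio above 1 favours meta
# Hypothetical: meta moves $3 per $1, and new donors pick work 25% as effective as yours.
print(meta_vs_direct_ratio(3, 0.25, 1.0))  # a ratio below 1 favours direct work
```

The point of the sketch is that a large multiplier can be cancelled out if the new donors you recruit fund much less cost-effective work than you yourself would.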

Prioritizing Between Causes

Whether to prioritize animal welfare relative to GHD depends on welfare ranges and the effectiveness of top animal interventions, particularly advocacy, in light of counterfactual attribution of advocacy success and declining marginal returns. Whether to prioritize longtermism relative to GHD and animal welfare turns on philosophical considerations (e.g. the person-affecting view vs totalism) and epistemic considerations (e.g. theoretical reasoning vs empirical evidence).
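One way to see how the animal welfare considerations above interact is a simple multiplicative model. This is an illustrative sketch only, assuming the factors combine multiplicatively; the function name, parameters and example values are hypothetical and are not drawn from the report. Because every factor multiplies, halving any one of them (e.g. the welfare range, or the share of an advocacy win attributable to a given funder) halves the estimate, which is why these parameters dominate the comparison with GHD.

```python
def animal_advocacy_value_per_dollar(
    animals_affected_per_dollar: float,
    welfare_gain_per_animal: float,
    welfare_range: float,
    counterfactual_share: float,
    marginal_returns_factor: float,
) -> float:
    """Illustrative value per dollar of animal advocacy, in human-equivalent welfare units.

    welfare_range           -- an animal's capacity for welfare relative to a human's (0-1)
    counterfactual_share    -- share of an advocacy win attributable to this funding (0-1)
    marginal_returns_factor -- discount for diminishing returns on additional funding (0-1)
    """
    return (animals_affected_per_dollar * welfare_gain_per_animal * welfare_range
            * counterfactual_share * marginal_returns_factor)


# Hypothetical inputs, compared against a hypothetical GHD benchmark in the same units.
aw = animal_advocacy_value_per_dollar(10, 0.1, 0.3, 0.5, 0.8)
ghd_benchmark = 0.1  # hypothetical
print("animal welfare ahead" if aw > ghd_benchmark else "GHD ahead")
```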

Prioritizing Between Interventions

- Effective Giving: Giving What We Can does appear to generate more money than it spends. There are legitimate concerns that GWWC's current estimate of their own cost-effectiveness is overly optimistic, but a previous shallow appraisal by CEARCH identified no significant flaws, and we believe that GWWC's giving multiplier is directionally correct (i.e. positive).

- Effective giving and the talent pipeline are not necessarily mutually exclusive – GWWC is an important source of talent for EA, and it is counterproductive to reduce effective giving outreach efforts.

- There is a strong case to be made that EA's approach is backwards: we should be focusing effective giving (EG) outreach on high-income individuals in rich countries, particularly the US, yet it is these same people who end up doing much of the research and direct work (e.g. in AI), while the people who do EG in practice have much less intrinsic earning potential.

- Similarly, it is arguable that younger EAs should be encouraged to do earning-to-give/normal careers where they donate, since one has fewer useful skills and less experience while young, and money is the most useful thing one possesses then (especially in a context where children or mortgages are not a concern). In contrast, EAs can contribute more in direct work when older, when in possession of greater practical skills and experience. The exception to this rule would be EA charity entrepreneurship, where younger people can afford to bear more risk.


- Talent: The flipside to the above discussion is that our talent outreach might be worth refocusing, both geographically and in terms of age. Geographically, India may be a standout opportunity for getting talent to do research/direct work in a counterfactually cheap way. In terms of age prioritization, it is suboptimal that EA focuses more on outreach to university students or young professionals as opposed to mid-career people with greater expertise and experience.

- Prioritization: GHD cause prioritization may be a good investment if a funder has a higher risk tolerance/a greater willingness to accept a hits-based approach (e.g. health policy advocacy) compared to the average GHD funder.

- Community building: This may be a less valuable intervention unless community building organizations have clearer theories of change and externally validated cost-effectiveness analyses that show how their work produces impact and not just engagement.
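To make the effective giving point above concrete, a giving multiplier can be sketched as counterfactual donations moved per dollar of operating cost. This is a simplified illustration, not GWWC's own methodology; the function names and the numbers in the usage example are hypothetical. It shows why an organization can remain net-positive even if its own estimate is optimistic: the multiplier stays above 1 as long as the counterfactual fraction exceeds costs divided by attributed donations.

```python
def giving_multiplier(donations_attributed: float, counterfactual_fraction: float,
                      operating_costs: float) -> float:
    """Counterfactual dollars moved to effective charities per dollar spent.

    donations_attributed    -- donations the organization attributes to its work
    counterfactual_fraction -- share of those donations that would NOT have happened anyway (0-1)
    operating_costs         -- what the organization spends over the same period
    """
    if operating_costs <= 0:
        raise ValueError("operating_costs must be positive")
    return donations_attributed * counterfactual_fraction / operating_costs


def break_even_fraction(donations_attributed: float, operating_costs: float) -> float:
    """Counterfactual fraction at which the multiplier equals exactly 1."""
    return operating_costs / donations_attributed


# Hypothetical: 10 units of attributed donations for every 1 unit of cost.
print(giving_multiplier(10, 0.5, 1))  # optimistic counterfactual assumption
print(giving_multiplier(10, 0.2, 1))  # much more pessimistic assumption
print(break_even_fraction(10, 1))
```

In this hypothetical, even cutting the counterfactual fraction from 50% to 20% leaves the multiplier comfortably above 1; it only drops to break-even if just 10% of attributed donations are counterfactual.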

Open Philanthropy & EA Funds

- Open Philanthropy: (1) The GHW EA team seems likely to continue prioritizing effective giving; (2) the animal welfare team is currently prioritizing a mix of interventions; and (3) the global catastrophic risks (GCR) capacity building team will likely continue prioritizing AGI risk.

- There has been increasing criticism of OP, stemming from (a) cause level disagreements (neartermists vs longtermists, animal welfare vs GHD); (b) strategic disagreements (e.g. lack of leadership; and spending on GCR and EA community building doing little, or perhaps even accelerating AI risk); and (c) concerns over the dominance of OP warping the community to reduce accountability and impact.

- EA Funds: The EA Funds chair has clarified that the EA Infrastructure Fund (EAIF) would only really coordinate with OP, since OP is reliably around; only if the MCF were around for some time would EA Funds find it worth factoring into their plans.

- On the issue of EA Funds' performance, we found a convergence in opinion amongst both experts and the EA community at large. It was generally thought that (a) EA Funds leadership has not performed too well relative to the complex needs of the ecosystem; and (b) the EAIF in particular has not been operating smoothly. Our own assessment is that the criticism is not without merit, and overall, we are (a) concerned about EAIF's current ability to fully support an effective EA meta ecosystem; and (b) uncertain as to whether this will improve in the medium term. On the positive side, we believe improvement to be possible, and in some respects fairly straightforward (e.g. hiring additional staff to process EAIF grants).

Trends & Uncertainty

- Trends: Potential trends of note within the funding space include (a) more money being available on the longtermist side due to massive differences in willingness-to-pay between different parts of OP; (b) an increasing trend towards funding effective giving; and (c) much greater interest in funding field- and movement-building outside of EA (e.g. AI safety field-building).

- Uncertainty: The meta funding landscape faces high uncertainty in the short-to-medium term, particularly due to (a) turnover at OP (i.e. James Snowden and Claire Zabel moving out of their roles); (b) uncertainty at EA Funds, both in terms of what it wants to do and what funding it has; and (c) the short duration of time since FTX collapsed, with the consequences still unfolding – notably, clawbacks are still occurring.

Hey, Arden from 80,000 Hours here –

I haven't read the full report, but given the time sensitivity with commenting on forum posts, I wanted to quickly provide some information relevant to some of the 80k mentions in the qualitative comments, which were flagged to me.

Regarding whether we have public measures of our impact & what they show

It is indeed hard to measure how much our programmes counterfactually help move talent to high impact causes in a way that increases global welfare, but we do try to do this.

From the 2022 report the relevant section is here. Copying it in as there are a bunch of links.

Some elaboration:

Regarding the extent to which we are cause neutral & whether we've been misleading about this

We do strive to be cause neutral, in the sense that we try to prioritize working on the issues where we think we can have the highest marginal impact (rather than committing to a particular cause for other reasons).

For the past several years we've thought that the most pressing problem is AI safety, so have put much of our effort there. Some 80k programmes focus on it more than others (I reckon for some it's a majority), but it hasn't been true that as an org we “almost exclusively focus on AI risk” (a bit more on that here).

In other words, we're cause neutral, but not cause *agnostic* - we have a view about what's most pressing. (Of course we could be wrong or thinking about this badly, but I take that to be a different concern.)

The most prominent place where we describe our problem prioritization is our problem profiles page, which is one of our most popular pages. We describe our list of issues this way: "These areas are ranked roughly by our guess at the expected impact of an additional person working on them, assuming your ability to contribute to solving each is similar (though there’s a lot of variation in the impact of work within each issue as well)." (Here's also a past comment from me on a related issue.)

Regarding the concern about us harming talented EAs by causing them to choose bad early career jobs

To the extent that this has happened, this is quite serious – helping talented people have higher impact careers is our entire point! I think we will always sometimes fail to give good advice (given the diversity & complexity of people's situations & the world), but we do try to aggressively minimise negative impacts, and if people think any particular part of our advice is unhelpful, we'd like them to contact us about it! (I'm arden@80000hours.org & I can pass them on to the relevant people.)

We do also try to find evidence of negative impact, e.g. using our user survey, and it seems dramatically less common than the positive impact (see the stats above), though there are of course selection effects with that kind of method so one can't take that at face value!

Regarding our advice on working at AI companies and whether this increases AI risk

This is a good worry and we talk a lot about this internally! We wrote about this here.

Yeah, many of those things seem right to me.

I suspect the crux might be that I don't necessarily think it's a bad thing if "the casual reader of the website doesn't understand that 80k basically works on AGI". E.g. if 80k adds value to someone as they go through the career guide, even if they don't realise that "the organization strongly recommends AI over the rest, or that x-risk gets the lion's share of organizational resources", is there a problem?

I would be concerned if 80k was not adding value. E.g. I can imagine more salesy tactics that look like maki...