Summary

This document seeks to outline why I feel uneasy about high existential risk estimates from AGI (e.g., 80% doom by 2070). When I try to verbalize this, I view considerations like

- selection effects at the level of which arguments are discovered and distributed

- community epistemic problems, and

- increased uncertainty due to chains of reasoning with imperfect concepts

as real and important.

I still think that existential risk from AGI is important. But I don’t view it as certain or close to certain, and I think that something is going wrong when people see it as all but assured.

Discussion of weaknesses

I think that this document was important for me personally to write up. However, I also think that it has some significant weaknesses:

- There is some danger in verbalization leading to rationalization.

- It alternates controversial points with points that are dead obvious.

- It is to a large extent a reaction to my imperfectly digested understanding of a worldview pushed around the ESPR/CFAR/MIRI/LessWrong cluster from 2016-2019, which nobody might hold now.

In response to these weaknesses:

- I want to keep in mind that I do want to give weight to my gut feeling, and that I might want to update on a feeling of uneasiness rather than on its accompanying reasonings or rationalizations.

- Readers might want to keep in mind that parts of this post may look like a bravery debate. But on the other hand, I've seen that the points which people consider obvious and uncontroversial vary from person to person, so I don’t get the impression that there is that much I can do on my end for the effort that I’m willing to spend.

- Readers might want to keep in mind that actual AI safety people and AI safety proponents may hold more nuanced views, and that to a large extent I am arguing against a “Nuño of the past” view.

Despite these flaws, I think that this text was personally important for me to write up, and it might also have some utility to readers.

Uneasiness about chains of reasoning with imperfect concepts

Uneasiness about conjunctiveness

It’s not clear to me how conjunctive AI doom is. Proponents will argue that it is very disjunctive, that there are a lot of ways that things could go wrong. I’m not so sure.

In particular, when you see that a parsimonious decomposition (like Carlsmith’s) tends to generate lower estimates, you can conclude either:

- That the method is producing a biased result, and try to account for that, or

- That the topic under discussion is, in itself, conjunctive: that there are several steps that need to be satisfied. For example, “AI causing a big catastrophe” and “AI causing human extinction given that it has caused a large catastrophe” seem like two distinct steps that would need to be modelled separately.

I feel uneasy about only drawing the first conclusion and not the second. I think that the principled answer might be to split some probability between the two cases. Overall, though, I’d tend to think that AI risk is more conjunctive than it is disjunctive.
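As a rough illustration of what splitting probability between the two cases could look like, here is a minimal Python sketch. The function names are hypothetical, and every number is made up for illustration rather than taken from this post or from Carlsmith’s report. It multiplies the steps of a conjunctive chain, and then mixes that result with a higher "the method is biased" estimate according to one's credence in each reading.

```python
from math import prod


def conjunctive_estimate(step_probs):
    """Probability that every step of a chain holds.

    Each entry is assumed to be conditional on the previous steps holding.
    """
    return prod(step_probs)


def mix_estimates(weighted_estimates):
    """Combine estimates, weighting each by the credence given to its reading.

    weighted_estimates is a list of (credence, probability) pairs.
    """
    total = sum(credence for credence, _ in weighted_estimates)
    if abs(total - 1.0) > 1e-9:
        raise ValueError("credences must sum to 1")
    return sum(credence * p for credence, p in weighted_estimates)


# Hypothetical step probabilities for a three-step conjunctive story.
steps = [0.5, 0.4, 0.3]
p_conjunctive = conjunctive_estimate(steps)

# Hypothetical estimate under the reading that the decomposition
# method is biased downwards and the risk is really disjunctive.
p_if_method_biased = 0.5

# Hypothetical credences: 60% "the topic is conjunctive",
# 40% "the method is biased".
p_mixed = mix_estimates([(0.6, p_conjunctive), (0.4, p_if_method_biased)])

print(f"conjunctive: {p_conjunctive:.3f}, mixed: {p_mixed:.3f}")
```

The point of the sketch is only that accepting one reading wholesale and weighting both readings give different answers; the particular weights are exactly what is in dispute.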

I also feel uneasy about the social pressure in my particular social bubble. I think that the social pressure is for me to just accept Nate Soares’ argument here that Carlsmith’s method is biased, rather than to probabilistically incorporate it into my calculations. As in “oh, yes, people know that conjunctive chains of reasoning have been debunked, Nate Soares addressed that in a blogpost saying that they are biased”.

I don’t trust the concepts

My understanding is that MIRI and others’ work started in the 2000s. As such, their understanding of the shape that an AI would take doesn’t particularly resemble current deep learning approaches.

In particular, I think that many of the initial arguments that I most absorbed were motivated by something like an AIXI (Solomonoff induction + some decision theory). Or, alternatively, by imagining what a very buffed-up Eurisko would look like. This seems to me like a fruitful generative approach which can generate things that could go wrong, rather than demonstrating that something will go wrong, or pointing to failures that we know will happen.

As deep learning attains more and more success, I think that some of the old concerns port over. But I am not sure which ones, to what extent, and in which context. This leads me to reduce some of my probability. Some concerns that apply to a more formidable Eurisko but which may not apply by default to near-term AI systems:

- Alien values

- Maximalist desire for world domination

- Convergence to a utility function

- Very competent strategizing, of the “treacherous turn” variety

- Self-improvement

- etc.

Uneasiness about in-the-limit reasoning

One particular form of argument, or chain of reasoning, goes like:

- An arbitrarily intelligent/capable/powerful process would be of great danger to humanity. This implies that there is some point, either at arbitrary intelligence or before it, such that a very intelligent process would start to be and then definitely be a great danger to humanity.

- If the field of artificial intelligence continues improving, eventually we will get processes that are first as intelligent/capable/powerful as a single human mind, and then greatly exceed it.

- This would be dangerous

The thing is, I agree with that chain of reasoning. But I see it as applying in the limit, and I am much more doubtful about it being used to justify specific dangers in the near future. In particular, I think that dangers that may appear in the long run may manifest in a limited and less dangerous form earlier on.

I see various attempts to give models of AI timelines as approximate. In particular:

- Even if an approach is accurate at predicting when above-human level intelligence/power/capabilities would arise

- This doesn’t mean that the dangers of in-the-limit superintelligence would manifest at the same time

AGI, so what?

For a given operationalization of AGI, e.g., good enough to be forecasted on, I think that there is some possibility that we will reach such a level of capabilities, and yet that this will not be very impressive or world-changing, even if it would have looked like magic to previous generations. More specifically, it seems plausible that AI will continue to improve without soon reaching high shock levels which exceed humanity’s ability to adapt.

This would be similar to how the industrial revolution was transformative but not that transformative. One possible scenario for this might be a world where we have pretty advanced AI systems, but we have adapted to that, in the same way that we have adapted to electricity, the internet, recommender systems, or social media. Or, in other words, once I concede that AGI could be as transformative as the industrial revolution, I don't have to concede that it would be maximally transformative.

I don’t trust chains of reasoning with imperfect concepts

The concerns in this section, when combined, make me uneasy about chains of reasoning that rely on imperfect concepts. Those chains may be very conjunctive, and they may apply to the behaviour of an in-the-limit-superintelligent system, but they may not be as action-guiding for systems in our near to medium term future.

For an example of the type of problem that I am worried about, but in a different domain, consider Georgism, the idea of deriving all government revenues from a land value tax. From a recent blogpost by David Friedman: “since it is taxing something in perfectly inelastic supply, taxing it does not lead to any inefficient economic decisions. The site value does not depend on any decisions made by its owner, so a tax on it does not distort his decisions, unlike a tax on income or produced goods.”

Now, this reasoning appears to be sound. Many people have been persuaded by it. However, because the concepts are imperfect, there can still be flaws. One possible flaw might be that the land value would have to be measured, and that inefficiency might come from there. Another possible flaw was recently pointed out by David Friedman in the blogpost linked above, which I understand as follows: the land value tax rewards counterfactual improvement, and this leads to predictable inefficiencies because you want to be rewarding Shapley value instead, which is much more difficult to estimate.

I think that these issues are fairly severe when attempting to make predictions for events further out on the horizon, e.g., ten or thirty years away. The concepts shift like sand under your feet.

Uneasiness about selection effects at the level of arguments

I am uneasy about what I see as selection effects at the level of arguments. I think that there is a small but intelligent community of people who have spent significant time producing some convincing arguments about AGI, but no community which has spent the same amount of effort looking for arguments against.

Here is a neat blogpost by Phil Trammell on this topic.

Here are some excerpts from a casual discussion among Samotsvety Forecasting team members:

The selection effect story seems pretty broadly applicable to me. I'd guess most Christian apologists, Libertarians, Marxists, etc. etc. etc. have a genuine sense of dialectical superiority: "All of these common objections are rebutted in our FAQ, yet our opponents aren't even aware of these devastating objections to their position", etc. etc.

You could throw in bias in evaluation too, but straightforward selection would give this impression even to the fair-minded who happen to end up in this corner of idea space. There are many more 'full time' (e.g.) Christian apologists than anti-apologists, so the balance of argumentative resources (and so apparent balance of reason) will often look slanted.

This doesn't mean the view in question is wrong: back in my misspent youth there were similar resources re, arguing for evolution vs. creationists/ID (https://www.talkorigins.org/). But it does strongly undercut "but actually looking at the merits clearly favours my team" alone as this isn't truth tracking (more relevant would be 'cognitive epidemiology' steers: more informed people tend to gravitate to one side or another, proponents/opponents appear more epistemically able, etc.)

An example for me is Christian theology. In particular, consider Aquinas' five proofs of God (summarized in Wikipedia), or the various ontological arguments. Back in the day, it took me a bit to a) understand what exactly they are saying, and b) understand why they don't go through. The five ways in particular were written to reassure Dominican priests who might be doubting, and in their time they did work for that purpose, because the topic is complex and hard to grasp.

You should be worried about the 'Christian apologist' (or philosophy of religion, etc.) selection effect when those likely to discuss the view are selected for sympathy for it. Concretely, if on acquaintance with the case for AI risk your reflex is 'that's BS, there's no way this is more than 1/million', you probably aren't going to spend lots of time being a dissident in this 'field' versus going off to do something else.

This gets more worrying the more generally epistemically virtuous folks are 'bouncing off': e.g. neuroscientists who think relevant capabilities are beyond the ken of 'just add moar layers', ML Engineers who think progress in the field is more plodding than extraordinary, policy folks who think it will be basically safe by default etc. The point is this distorts the apparent balance of reason - maybe this is like Marxism, or NGDP targeting, or Georgism, or general semantics, perhaps many of which we will recognise were off on the wrong track.

(Or, if you prefer being strictly object-level, it means the strongest case for scepticism is unlikely to be promulgated. If you could pin folks bouncing off down to explain their scepticism, their arguments probably won't be that strong/have good rebuttals from the AI risk crowd. But if you could force them to spend years working on their arguments, maybe their case would be much more competitive with proponent SOTA).

It is general in the sense there is a spectrum from (e.g.) evolutionary biology to (e.g.) Timecube theory, but AI risk is somewhere in the range where it is a significant consideration.

It obviously isn't an infallible one: it would apply to early stage contrarian scientific theories and doesn't track whether or not they are ultimately vindicated. You rightly anticipated the base-rate-y reply I would make.

Garfinkel and Shah still think AI is a very big deal, and identifying them at the sceptical end indicates how far afield from 'elite common sense' (or similar) AI risk discussion is. Likewise I doubt that there being some incentives to be a dissident from this consensus means there isn't a general trend in selection for those more intuitively predisposed to AI concern.

There are some possible counterpoints to this, and other Samotsvety Forecasting team members made those, and that’s fine. But my individual impression is that the selection effects argument packs a whole lot of punch behind it.

One particular dynamic that I’ve seen some gung-ho AI risk people mention is that (paraphrasing): “New people each have their own unique snowflake reasons for rejecting their particular theory of how AI doom will develop. So I can convince each particular person, but only by talking to them individually about their objections.”

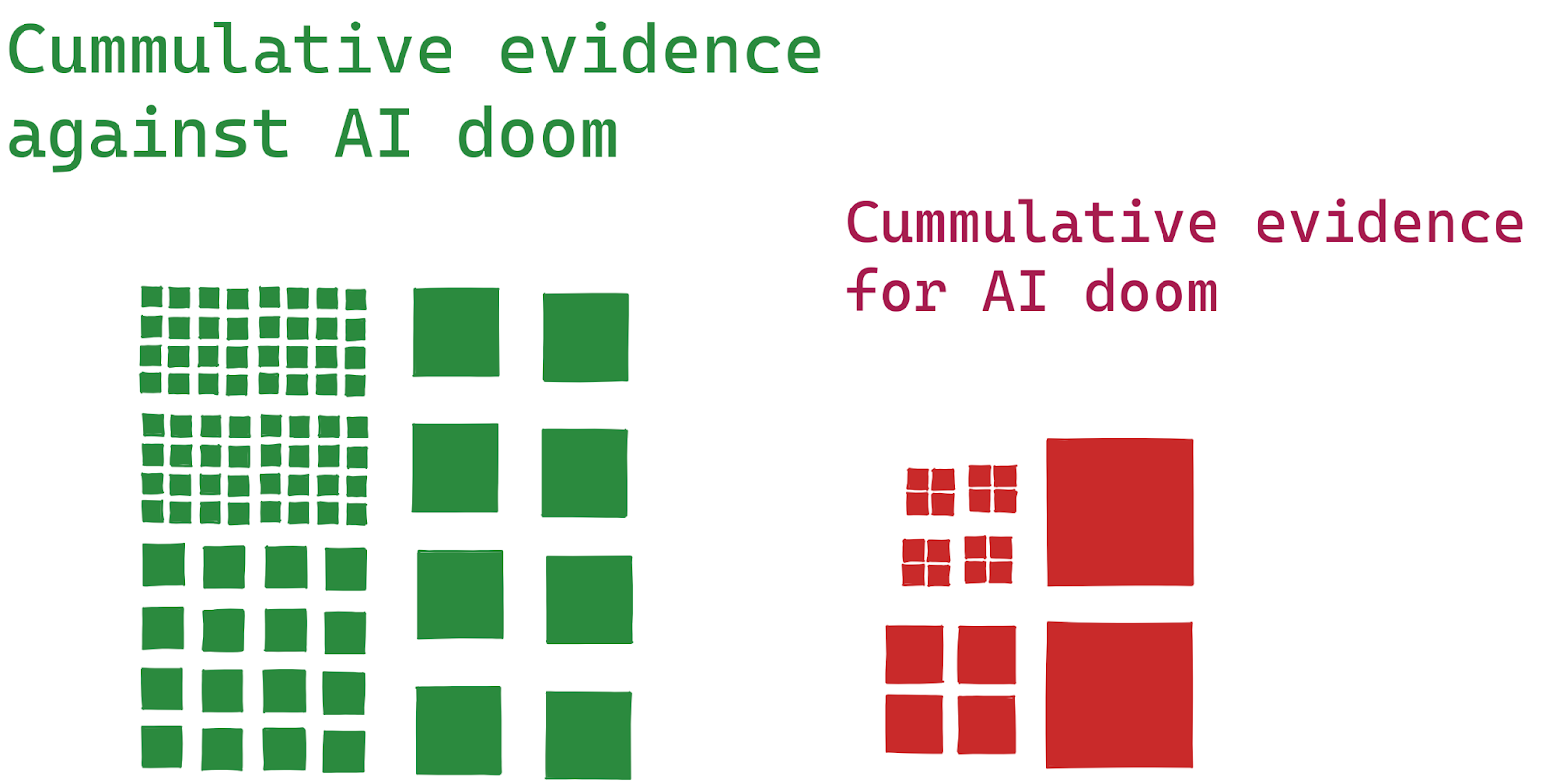

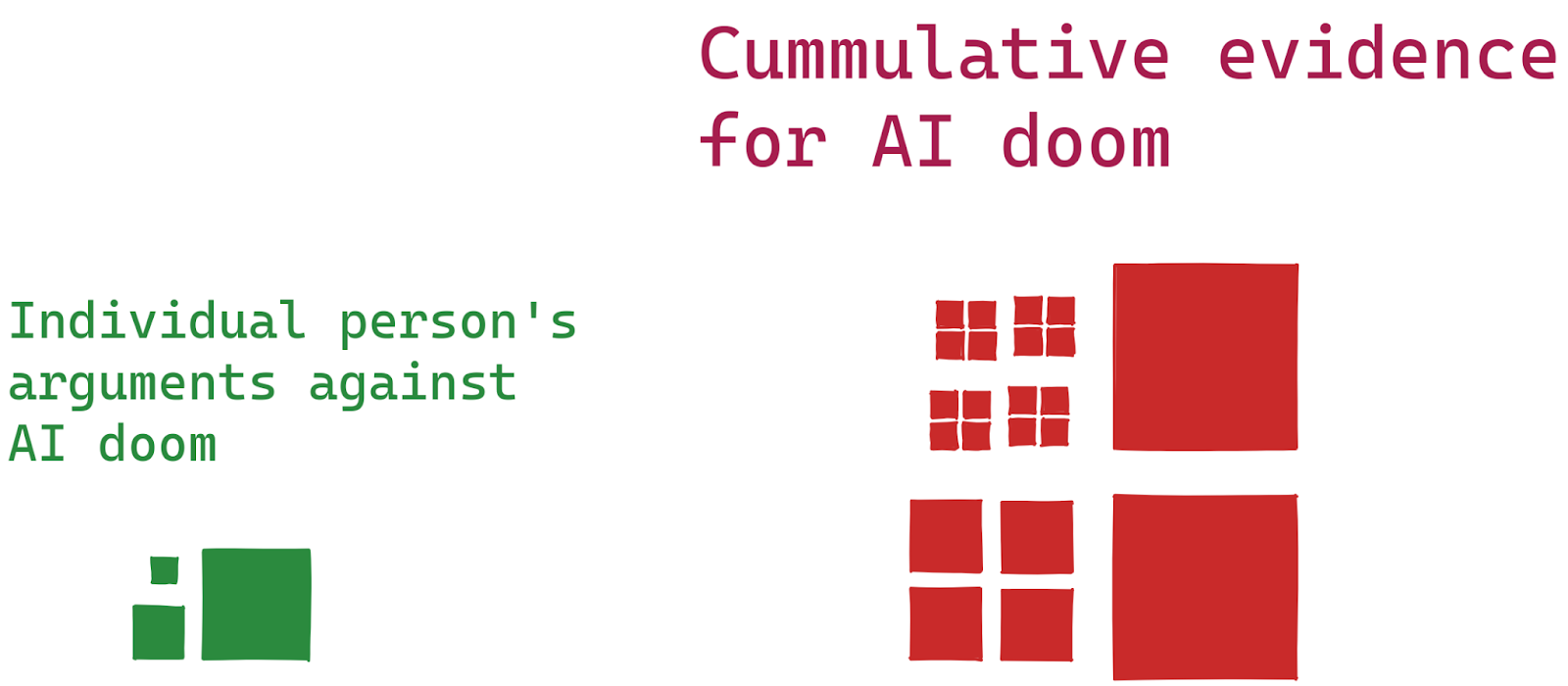

So, in illustration, the overall balance could look something like:

Whereas the individual matchup could look something like:

And so you would expect the natural belief dynamics stemming from that type of matchup.

What you would want to do is to have all the evidence for and against, and then weigh it.

I also think that there are selection effects around which evidence surfaces on each side, rather than only around which arguments people start out with.

It is interesting that when people move to the Bay area, this is often very “helpful” for them in terms of updating towards higher AI risk. I think that this is a sign that a bunch of social fuckery is going on. In particular, I think it might be the case that Bay area movement leaders identify arguments for shorter timelines and higher probability of x-risk with “the rational”, which produces strong social incentives to be persuaded and to come up with arguments in one direction.

More specifically, I think that “if I isolate people from their normal context, they are more likely to agree with my idiosyncratic beliefs” is a mechanism that works for many types of beliefs, not just true ones. And more generally, I think that “AI doom is near” and associated beliefs are a memeplex, and I am inclined to discount their specifics.

Miscellanea

Difference between in-argument reasoning and all-things-considered reasoning

I’d also tend to differentiate between the probability that an argument or a model gives, and the all-things-considered probability. For example, I might look at Ajeya’s timeline, and I might generate a probability by inputting my curves into its model. But then I would probably add additional uncertainty on top of that model.

My weak impression is that some of the most gung-ho people do not do this.

Methodological uncertainty

It’s unclear whether we can get good accuracy predicting dynamics that may happen across decades. I might be inclined to discount further based on that. One particular uncertainty that I worry about is that we can get “AI will be a big deal and be dangerous” right, but with that danger taking a different shape than what we expected.

For this reason, I am more sympathetic to tools other than forecasting for long-term decision-making, e.g., as outlined here.

Uncertainty about unknown unknowns

I think that unknown unknowns mostly delay AGI. E.g., covid, nuclear war, and many other things could lead to supply chain disruptions. There are unknown unknowns in the other direction, but the higher one's probability goes, the more unknown unknowns should shift one towards 50%.
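One simple way to picture that last claim is as a linear mixture between one's estimate and a maximally uncertain 50%. This is only a sketch under that assumption, not a method endorsed in this post; the function name and the 20% weight on unknown unknowns are hypothetical.

```python
def shrink_toward_half(p, unknown_weight):
    """Mix an estimate p with 50%, giving the 50% a weight of unknown_weight.

    The linear mixture is an illustrative assumption about how unknown
    unknowns enter, not an established rule.
    """
    if not (0.0 <= p <= 1.0 and 0.0 <= unknown_weight <= 1.0):
        raise ValueError("p and unknown_weight must lie in [0, 1]")
    return (1.0 - unknown_weight) * p + unknown_weight * 0.5


UNKNOWN_WEIGHT = 0.2  # hypothetical weight placed on "something unforeseen"

for p in (0.10, 0.50, 0.96):
    adjusted = shrink_toward_half(p, UNKNOWN_WEIGHT)
    print(f"{p:.2f} -> {adjusted:.3f} (shift {adjusted - p:+.3f})")
```

Under this mixture the size of the shift is `unknown_weight * abs(p - 0.5)`, so an estimate at 50% does not move at all, and the further an estimate sits from 50% the more it is pulled back.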

Updating on virtue

I think that updating on virtue is a legitimate move. By this I mean to notice how morally or epistemically virtuous someone is, to update based on that about whether their arguments are made in good faith or from a desire to control, and to assign them more or less weight accordingly.

I think that a bunch of people around the CFAR cluster that I was exposed to weren't particularly virtuous and were willing to go to great lengths to convince people that AI is important. In particular, I think that isolating people from the normal flow of their lives for extended periods has an unreasonable effectiveness at making them more pliable and receptive to new and weird ideas, whether they are right or wrong. I am a bit freaked out about the extent to which ESPR, a rationality camp for kids in which I participated, did that.

(Brief aside: An ESPR instructor points out that ESPR separated itself from CFAR after 2019, and has been trying to mitigate these factors. I do think that the difference is important, but this post isn't about ESPR in particular but about AI doom skepticism and so will not be taking particular care here.)

Here is a comment from a CFAR cofounder, who has since left the organization, taken from this Facebook comment thread (paragraph divisions added by me):

Question by bystander: Around 3 minutes, you mention that looking back, you don't think CFAR's real drive was _actually_ making people think better. Would be curious to hear you elaborate on what you think the real drive was.

Answer: I'm not going to go into it a ton here. It'll take a bit for me to articulate it in a way that really lands as true to me. But a clear-to-me piece is, CFAR always fetishized the end of the world. It had more to do with injecting people with that narrative and propping itself up as important.

We did a lot of moral worrying about what "better thinking" even means and whether we're helping our participants do that, and we tried to fulfill our moral duty by collecting information that was kind of related to that, but that information and worrying could never meaningfully touch questions like "Are these workshops worth doing at all?" We would ASK those questions periodically, but they had zero impact on CFAR's overall strategy.

The actual drive in the background was a lot more like "Keep running workshops that wow people" with an additional (usually consciously (!) hidden) thread about luring people into being scared about AI risk in a very particular way and possibly recruiting them to MIRI-type projects.

Even from the very beginning CFAR simply COULD NOT be honest about what it was doing or bring anything like a collaborative tone to its participants. We would infantilize them by deciding what they needed to hear and practice basically without talking to them about it or knowing hardly anything about their lives or inner struggles, and we'd organize the workshop and lectures to suppress their inclination to notice this and object.

That has nothing to do with grounding people in their inner knowing; it's exactly the opposite. But it's a great tool for feeling important and getting validation and coercing manipulable people into donating time and money to a Worthy Cause™ we'd specified ahead of time. Because we're the rational ones, right? 😛

The switch Anna pushed back in 2016 to CFAR being explicitly about xrisk was in fact a shift to more honesty; it just abysmally failed the is/ought distinction in my opinion. And, CFAR still couldn't quite make the leap to full honest transparency even then. ("Rationality for its own sake for the sake of existential risk" is doublespeak gibberish. Philosophical somersaults won't save the fact that the energy behind a statement like that is more about controlling others' impressions than it is about being goddamned honest about what the desire and intention really is.)

The dynamics at ESPR, a rationality camp I was involved with, were at times a bit more dysfunctional than that, particularly before 2019. For that reason, I am inclined to update downwards. I think that this is a personal update, and I don’t necessarily expect it to generalize.

I think that some of the same considerations that I have about ESPR might also hold for those who have interacted with people seeking to persuade, e.g., mainline CFAR workshops, 80,000 Hours career advising calls, ATLAS, or similar. But to be clear, I haven't interacted much with those other groups myself, and my sense is that CFAR, which organized the iterations of ESPR up to 2019, went off the guardrails but that these other organizations haven't.

Industry vs AI safety community

It’s unclear to me what the views of industry people are. In particular, the question seems a bit confused. I want to get at the independent impression that people get from working with state-of-the-art AI models. But industry people may already be influenced by AI safety community concerns, so it’s unclear how to isolate the independent impression. Doesn’t seem undoable, though.

Suggested decompositions

The above reasons for skepticism lead me to suggest the following decompositions for my forecasting group, Samotsvety, to use when forecasting AGI and its risks:

Very broad decomposition

I:

- Will AGI be a big deal?

- Conditional on it being “a big deal”, will it lead to problems?

- Will those problems be existential?

II:

- AI capabilities will continue advancing

- The advancement of AI capabilities will lead to social problems

- … and eventually to a great catastrophe

- … and eventually to human extinction

“Are we right about this stuff?” decomposition

- We are right about this AGI stuff

- This AGI stuff implies that AGI will be dangerous

- … and it will lead to human extinction

Inside the model/outside the model decomposition

I:

- Model/Decomposition X gives a probability

- Are the concepts in the decomposition robust enough to support chains of inference?

- What is the probability of existential risk if they aren’t?

II:

- Model/Decomposition X gives a probability

- Is model X roughly correct?

- Are the concepts in the decomposition robust enough to support chains of inference?

- Will the implicit assumptions that it is making pan out?

- What is the probability of existential risk if model X is not correct?
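The second version of this decomposition amounts to an application of the law of total probability, which can be sketched as follows. The function name is hypothetical and all numbers are placeholders for illustration; none of them are estimates from this post.

```python
def all_things_considered(p_inside, p_model_ok, p_outside):
    """Combine a model's output with what one believes if the model is wrong.

    p_inside:   probability that model X gives
    p_model_ok: probability that model X is roughly correct
    p_outside:  probability of the outcome if model X is not correct
    """
    for name, value in (("p_inside", p_inside),
                        ("p_model_ok", p_model_ok),
                        ("p_outside", p_outside)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must lie in [0, 1]")
    return p_model_ok * p_inside + (1.0 - p_model_ok) * p_outside


# Hypothetical inputs.
p_inside = 0.8            # what model X outputs
p_concepts_robust = 0.6   # concepts robust enough to support chains of inference
p_assumptions_hold = 0.5  # implicit assumptions pan out, given robust concepts
p_outside = 0.05          # fallback estimate if model X is not correct

# Treat "model X is roughly correct" as both sub-questions resolving positively.
p_model_ok = p_concepts_robust * p_assumptions_hold

p_final = all_things_considered(p_inside, p_model_ok, p_outside)
print(f"P(model ok) = {p_model_ok:.2f}, all-things-considered = {p_final:.3f}")
```

With placeholder inputs like these, the final number is driven much more by the fallback estimate and by how much one trusts the model than by the model's own output, which is why the last question in the list deserves as much attention as the first.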

Implications of skepticism

I view the above as moving me away from certainty that we will get AGI in the short term. For instance, I think that having 70 or 80%+ probabilities on AI catastrophe within our lifetimes is probably just incorrect, insofar as a probability can be incorrect.

Anecdotally, I recently met someone at an EA social event who a) was uncalibrated, e.g., on Open Philanthropy’s calibration tool, but b) assigned 96% to AGI doom by 2070. Pretty wild stuff.

Ultimately, I’m personally somewhere around 40% for "By 2070, will it become possible and financially feasible to build advanced-power-seeking AI systems?", and somewhere around 10% for doom. I don’t think that the difference matters all that much for practical purposes, but:

- I am marginally more concerned about unknown unknowns and other non-AI risks

- I would view interventions that increase civilizational robustness (e.g., bunkers) more favourably, because these are a bit more robust to unknown risks and could protect against a wider range of risks

- I don’t view AGI soon as particularly likely

- I view a stance which “aims to safeguard humanity through the 21st century” as more appealing than “Oh fuck AGI risk”

Conclusion

I’ve tried to outline some factors about why I feel uneasy with high existential risk estimates. I view the most important points as:

- Distrust of reasoning chains using fuzzy concepts

- Distrust of selection effects at the level of arguments

- Distrust of community dynamics

It’s not clear to me whether I have bound myself into a situation in which I can’t update from other people’s object-level arguments. I might well have, and it would lead to me playing in a perhaps-unnecessary hard mode.

If so, I could still update from e.g.:

- Trying to make predictions, and seeing which generators are more predictive of AI progress

- Investigations that I do myself, that lead me to acquire independent impressions, like playing with state-of-the-art models

- Deferring to people that I trust independently, e.g., Gwern

Lastly, I would loathe it if the same selection effects applied to this document: If I spent a few days putting this document together, it seems easy for the AI safety community to put a few cumulative weeks into arguing against it, just by virtue of being a community.

This is all.

Acknowledgements

I am grateful to the Samotsvety forecasters that have discussed this topic with me, and to Ozzie Gooen for comments and review. The above post doesn't necessarily represent the views of other people at the Quantified Uncertainty Research Institute, which nonetheless supports my research.

One of my main high-level hesitations with AI doom and futility arguments is something like this, from Katja Grace:

"Omnipotent" is the impression I get from a lot of the characterization of AGI.

Another recent specific example here.

Similarly, I've had the impression that specific AI takeover scenarios don't engage enough with the ways they could fail for the AI. Some are based prima...

[writing in my personal capacity, but asked an 80k colleague if it seemed fine for me to post this]

Thanks a lot for writing this - I agree with a lot of (most of?) what's here.

One thing I'm a bit unsure of is the extent to which these worries have implications for the beliefs of those of us who are hovering more around 5% x-risk this century from AI, and who are one step removed from the bay area epistemic and social environment you write about. My guess is that they don't have much implication for most of us, because (though what you say is way better articulated) some of this is already naturally getting into people's estimates.

e.g. in my case, basically I think a lot of what you're writing about is sort of why for my all-things-considered beliefs I partly "defer at a discount" to people who know a ton about AI and have high x-risk estimates. Like I take their arguments, find them pretty persuasive, end up at some lower but still middlingly high probability, and then just sort of downgrade everything because of worries like the ones you cite, which I think is part of why I end up near 5%.

This kind of thing does have the problematic effect probably of incentivising the bay area folks to have more and more extreme probabilities - so that, to the extent that they care, quasi-normies like me will end up with a higher probability - closer to the truth in their view - after deferring at a discount.

The 5% figure seems pretty common, and I think this might also be a symptom of risk inflation.

There is a huge degree of uncertainty around this topic. The factors involved in any prediction vary by many orders of magnitude, so it seems like we should expect the estimates to vary by orders of magnitude as well. So you might get some people saying the odds are 1 in 20, or 1 in 1000, or 1 in a million, and I don't see how any of those estimates can be ruled out as unreasonable. Yet I hardly see anyone giving estimates of 0.1% or 0.001%.

I think people are using 5% as a stand in for "can't rule it out". Like why did you settle at 1 in 20 instead of 1 in a thousand?

[context: I'm one of the advisors, and manage some of the others, but am describing my individual attitude below]

FWIW I don't think the balance you indicated is that tricky, and think that conceiving of what I'm doing when I speak to people as 'charismatic persuasion' would be a big mistake for me to make. I try to:

- Say things I think are true, and explain why I think them (both the internal logic and external evidence if it exists) and how confident I am.

- Ask people questions in a way which helps them clarify what they think is true, and which things they are more or less sure of.

- Make tradeoffs (e.g. between a location preference and a desire for a particular job) explicit to people who I think might be missing that they need to make one, but usually not then suggesting which tradeoff to make, but instead that they go and think about it/talk to other people affected by it.

- Encourage people to think through things for themselves, usually suggesting resources which will help them do that/give a useful perspective as well as just saying 'this seems worth you taking time to think about'.

- To the extent that I'm paying attention to how other people perceive me[

...

I think writing this sort of thing up is really good; thanks for this, Nuno. :)

It sounds like your social environment might be conflating four different claims:

- "I personally find Nate's arguments for disjunctiveness compelling, so I have relatively high p(doom)."

- "Nate's arguments have debunked the idea that AI risk is conjunctive, in the sense that he's given a completely ironclad argument for this that no remotely reasonable person could disagree with."

- "Nate and Eliezer have debunked the idea that multiple-stages-style reasoning is generically reliable (e.g., in the absence of very strong prior reasons to think that a class of scenarios is conjunctive / unlikely on priors)."

- "Nate has shown that it's unreasonable to treat anything as conjunctive, and that we can

...

That paints a pretty fucked up picture of early-CFAR's dynamics. I've heard a lot of conflicting stories about CFAR in this respect, usually quite vague (and there are nonzero ex-CFAR staffers who I just flatly don't trust to report things accurately). I'd be interested to hear from Anna or other early CFAR staff about whether this matches their impressions of how things went down. It unfortunately sounds to me like a pretty realistic way this sort of thing can play ...

Surely not. Neither of those make any arguments about AI, just about software generally. If you literally think those two are sufficient arguments for concluding "AI kills us with high probability" I don't see why you don't conclude "Powerpoint kills us with high probability".

By "general intelligence" I mean "whatever it is that lets human brains do astrophysics, category theory, etc. even though our brains evolved under literally zero selection pressure to solve astrophysics or category theory problems".

Human brains aren't perfectly general, and not all narrow AIs/animals are equally narrow. (E.g., AlphaZero is more general than AlphaGo.) But it sure is interesting that humans evolved cognitive abilities that unlock all of these sciences at once, with zero evolutionary fine-tuning of the brain aimed at equipping us for any of those sciences. Evolution just stumbled into a solution to other problems, that happened to generalize to billions of wildly novel tasks.

To get more concrete:

- AlphaGo is a very impressive reasoner, but its hypothesis space is limited to sequences of Go board states rather than sequences of states of the physical universe. Efficiently reasoning about the physical universe requires solving at least some problems (which might be solved by the AGI's programmer, and/or solved by the algorithm that finds the AGI in program-space; and some such problems may be solved by the AGI itself in the course of refining its thinking) that are diffe

...

Ok, so thinking about this, one trouble with answering your comment is that you have a self-consistent worldview which has contrary implications to some of the stuff I hold, but I feel that you are not giving answers with reference to stuff that I already hold, but rather to stuff that further references that worldview.

Let me know if this feels way off.

So I'm going to just pick one object-level argument and dig in to that:

Well, I think that the question is: increased p(doom) compared to what? E.g., what were your default expectations before the DL revolution?

- Compared to equivalent progress in a seed AI which has a utility function

- Deep learning seems like it has some advantages, e.g.: it is [doing the kinds of t

Sure! It would depend on what you mean by "an argument against AI risk":

- If you mean "What's the main argument that makes you more optimistic about AI outcomes?", I made a list of these in 2018.

- If you mean "What's the likeliest way you think it could turn out that aligning AGI is unnecessary in order to do a pivotal act / initiate an as-long-as-needed reflection?", I'd currently guess it's using strong narrow-AI systems to accelerate you to Drexlerian nanotechnology (which can then be used to build powerful things like "large numbers of fast-running human whole-brain emulations").

- If you mean "What's the likeliest way you think it could turn out that humanity's current trajectory is basically OK / no huge actions or trajectory changes are required?", I'd say that the likeliest scenario is one where AGI kills all humans, but this isn't a complete catastrophe for the future value of the reachable universe because the AGI turns out to be less like a paperclip maximizer and more like a weird sentient alien that wants to fill the universe with extremely-weird-but-awesome alien civilizations. This sort of scenario is discussed in Superintelligent AI is necessary for an amazing future, but

This article is... really bad.

It's mostly a summary of Yudkowsky/Bostrom ideas, but with a bunch of the ideas garbled and misunderstood.

Mitchell says that one of the core assumptions of AI risk arguments is "that any goal could be 'inserted' by humans into a superintelligent AI agent". But that's not true, and in fact a lot of the risk comes from the fact that we have no idea how to 'insert' a goal into an AGI system.

The paperclip maximizer hypothetical here is a misunderstanding of the original idea. (Though it's faithful to the version Bostrom gives in Superintelligence.) And the misunderstanding seems to have caused Mitchell to misunderstand a bunch of other things about the alignment problem. Picking one of many examples of just-plain-false claims:

"And importantly, in keeping with Bostrom’s orthogonality thesis, the machine has achieved superintelligence without having any of its own goals or values, instead waiting for goals to be inserted by humans."

The article also says that "research efforts on alignment are underway at universities around the world and at big AI companies such as Google, Meta and OpenAI". I assume Google here ...

Since we're talking about p(doom), this sounds like a claim that my job at MIRI is to generate arguments for worrying more about AGI, and we haven't hired anybody whose job it is to generate arguments for worrying less.

Well, I'm happy to be able to cite that thing I wrote with a long list of reasons to worry less about AGI risk!

(Link)

Thanks for winding back through the conversation so far, as you understood it; that helped me understand better where you're coming from.

Nuno said: "Idk, I can't help but notice that your title at MIRI is 'Research Communications', but there is nobody paid by the 'Machine Intelligence Skepticism Institute' to put forth claims that you are wrong."

I interpreted that as Nuno saying: MIRI is giving arguments for stuff, but I cited an allegation that CFAR is being dishonest, manipulative, and one-sided in their evaluation of AI risk arguments, and I note that MIRI is a one-sided doomer org that gives arguments for your side, while there's nobody paid to raise counter-points.

My response was a concrete example showing that MIRI isn't a one-sided doomer org that only gives arguments for doom. That isn't a proof that we're correct about this stuff, but it's a data point against "MIRI is a one-sided doomer org that only gives arguments for doom". And it's at least some evidence that we aren't doing the specific dishonest thing Nuno accused CFAR...

I think the blunt MIRI-statement you're wanting is here:

Just a brief comment to say that I definitely appreciated you writing this post up, as well as linking to Phil's blog post! I share many of these uncertainties, but have often just assumed I'm missing some important object-level knowledge, so it's nice to see this spelled out more explicitly by someone with more exposure to the relevant communities. Hope you get some engagement!

I know several people from outside EA (Ivy League, Ex-FANG, work in ML, startup, Bay Area) and they share the same "skepticism" (in quotes because it's not the right word, their view is more negative).

I suspect one aspect of the problem is the sort of "Timnit Gebru"-style yelling and also sneering, often from the leftist community that is opposed to EA more broadly (but much of this leftist sneering was nucleated by the Bay Area communities).

This gives proponents of AI-safety an easy target, funneling online discourse into a cul-de-sac of tribalism. I suspect this dynamic is deliberately cultivated on both sides, a system ultimately supported by a lot of crypto/tech wealth. This leads to where we are today, where someone like Bruce (not to mention many young people) gets confused.

I don't follow Timnit closely, but I'm fairly unconvinced by much of what I think you're referring to RE: "Timnit Gebru-style yelling / sneering", and I don't want to give the impression that my uncertainties are strongly influenced by this, or by AI-safety community pushback to those kinds of sneering. I'd be hesitant to agree that I share these views that you are attributing to me, since I don't really know what you are referring to RE: folks who share "the same skepticism" (but some more negative version).

When I talk about uncertainty, some of these are really just the things that Nuno is pointing out in this post. Concrete examples of what some of these uncertainties look like in practice for me personally include:

- I don't have a good inside view on timelines, but when EY says our probability of survival is ~0% this seems like an extraordinary claim that doesn't seem to be very well supported or argued for, and something I intuitively want to reject outright, but don't have the object level expertise to meaningfully do so. I don't know the extent to which EY's views are representative or highly influential in current AI safety efforts, and I can imagine a world where there's too

Thank you for this useful content and explaining your beliefs.

My comment is claiming a dynamic that is upstream of, and produces, the information environment you are in. This produces your "skepticism" or "uncertainty". To expand on this: without this dynamic, the facts and truth would be clearer and you would not be uncertain or feel the need to update your beliefs in response to a forum post.

My comment is not implying you are influenced by "Gebru-style" content directly. It is sort of implying the opposite/orthogonal. The fact you felt it necessary to distance yourself from Gebru several times in your comment, essentially because a comment mentioned her name, makes this very point itself.

Yes, I think it's good that there is basically consensus here on AGI doom being a serious problem; the argument seems to be one of degree. Even OP says p(AGI doom by 2070) ~ 10%.

Personally I have trouble understanding this post. Could you write simpler?

Here are my notes which might not be easier to understand, but they are shorter and capture the key ideas:

- Uneasiness about chains of reasoning with imperfect concepts

- Uneasy about conjunctiveness: It’s not clear how conjunctive AI doom is (AI doom being conjunctive would mean that Thing A and Thing B and Thing C all have to happen or be true in order for AI doom; this is opposed to being disjunctive where either A, or B, or C would be sufficient for AI Doom), and Nate Soares’s response to Carlsmith’s powerseeking AI report is not a silver bullet; there is social pressure in some places to just accept that Carlsmith’s report uses a biased methodology and to move on. But obviously there’s some element of conjunctiveness that has to be dealt with.

- Don’t trust the concepts: a lot of the early AI Risk discussion came before Deep Learning. Some of the concepts should port over to near-term-likely AI systems, but not all of them (e.g., Alien values, Maximalist desire for world domination)

- Uneasiness about in-the-limit reasoning: Many arguments go something like this: an arbitrarily intelligent AI will adopt instrumental power seeking tendencies and this will be very bad for humanity; progr

You might also enjoy this tweet summary.

The above is condescending/a bit too simple, but I thought it was funny, hope you get some use out of it.

I strongly agree on the dubious epistemics. A couple of corroborating experiences:

- When I looked at how people understood the orthogonality thesis, my impression was that somewhere from 20-80% of EAs believed that it showed that AI misalignment was extremely likely rather than (as, if anything, it actually shows) that it's not literally impossible.

- These seemingly included an 80k careers advisor who suggested it as a reason why I should be much more concerned about AI; Will MacAskill, who in WWOtF describes 'The scenario most closely associated with [the book Superintelligence being] one in which a single AI agent designs better and better versions of itself, quickly developing abilities far greater than the abilities of all of humanity combined. Almost certainly, its aims would not be the same as humanity’s aims'; and some or all of the authors of the paper 'Long term cost-effectiveness of resilient foods for global catastrophes compared to artificial general intelligence' (David Denkenberger, Anders Sandberg, Ross John Tieman, and Joshua M. Pearce) which states 'the goals of the intelligence are essentially arbitrary [48]', with the reference pointing to Bostrom's ortho

Hi! Wanted to follow up as the author of the 80k software engineering career review, as I don't think this gives an accurate impression. A few things to say:

- I try to have unusually high standards for explaining why I believe the things I write, so I really appreciate people pushing on issues like this.

- At the time, when you responded to <the Anthropic person>, you said "I think <the Anthropic person> is probably right" (although you added "I don't think it's a good idea to take this sort of claim on trust for important career prioritisation research").

- When I leave claims like this unsourced, it’s usually because I (and my editors) think they’re fairly weak claims, and/or they lack a clear source to reference. That is, the claim is effectively a piece of research based on general knowledge (e.g. I wouldn't source the claim "Biden is the President of the USA") and/or interviews with a range of experts, and the claim is weak or unimportant enough not to investigate further. (FWIW I think it’s likely I should have prioritised writing a longer footnote on why I believe this claim.)

The closest data is the three surveys of NeurIPS researchers, but thes

Cheers! You might want to follow up with 80,000 hours on the epistemics point, e.g., alexrjl would probably be interested in hearing about and then potentially addressing your complaints.

This seems like a pretty general argument for being sceptical of anything, including EA!

I agree. In particular, it's a pretty general argument to be more skeptical of something than its most gung-ho advocates. And it's stronger the more you think that these dynamics are going on. But, for example, physics subject to experimental verification pretty much voids this sort of objection.

I like this, and think it's healthy. I recommend talking to Quintin Pope for a smart person who has thought a lot about alignment, and came to the informed, inside-view conclusion that we have a 5% chance of doom (or just reading his posts or comments). He has updated me downwards on doom a lot.

Hopefully it gets you in a position where you're able to update more on evidence that I think is evidence, by getting you into a state where you have a better picture of what the best arguments against doom would be.

Is 5% low? 5% still strikes me as a "preventing this outcome should plausibly be civilization's #1 priority" level of risk.

I earlier gave some feedback on this, but more recently spent more time with it. I sent these comments to Nuno, and thought they could also be interesting to people here.

- I think it’s pretty strong and important (as in, an important topic).

- The first half in particular seems pretty dense. I could imagine some rewriting making it more understandable.

- Many of the key points seem more encompassing than just AI. “Selection effects”, “being in the Bay Area” / “community epistemic problems”. I think I’d wish these could be presented as separate posts then linked to

The conjunctive/disjunctive dichotomy seems to be a major crux when it comes to AI x-risk. How much do belief in human progress, belief in a just world, the Long Peace, or even deep-rooted-by-evolution (genetic) collective optimism (all things in the "memetic water supply") play into the belief that the default is not-doom? Even as an atheist, I think it's sometimes difficult (not least because it's depressing to think about) to fully appreciate that we are "beyond the reach of God".

Note: After talking with an instructor, I added a "brief aside" section pointing out that ESPR separated itself from CFAR in 2019 and has been trying to mitigate the factors I complain about since then. This is important for ESPR in terms of not having its public reputation destroyed, but doesn't really affect the central points in the post.

Appreciated this post! Have you considered crossposting this to Lesswrong? Seems like an important audience for this.

I completely agree with this position, but my take is different: Nuclear war risk is high all the time, and all geopolitical and climate risks can increase it. It is perhaps not existential for the species, but certainly it is for civilization. Given this, for me it is the top risk, and to some extent, all efforts for progress, political stabilization, climate risk mitigation are modestly important in themselves, and massively important to affect nuclear war risk.

Now, the problem with AI risk is that our understanding of why and how AI works is limit...

I do note one is not like the others here. Marxism is probably way more wrong than any of the other beliefs, and I feel like the inclusion of the others rather weakens the case here.

Alien values are guaranteed unless we explicitly impart non-alien ethics to AI, which we currently don't know how to do, and don't know (or can't agree) what that ethics should be like. Next two points are synonyms and are also basically synonyms to "alien values". The treacherous turn is indeed unlikely (link).

Self-improvement is given, the only question is where is the "ceiling" of this improveme...