Around the end of Feb 2024 I attended the Summit on Existential Risk and EAG: Bay Area (GCRs), during which I did 25+ one-on-ones about the needs and gaps in the EA-adjacent catastrophic risk landscape, and how they’ve changed.

The meetings were mostly with senior managers or researchers in the field who I think are worth listening to (unfortunately I can’t share names). Below is how I’d summarise the main themes in what was said.

If you have different impressions of the landscape, I’d be keen to hear them.

- There’s been a big increase in the number of people working on AI safety, partly driven by a reallocation of effort (e.g. Rethink Priorities starting an AI policy think tank), and partly driven by new people entering the field after its newfound prominence.

- Allocation in the landscape seems more efficient than in the past – it’s harder to identify especially neglected interventions, causes, money, or skill-sets. That means it’s become more important to choose based on your motivations. That said, here are a few ideas for neglected gaps:

- Within AI risk, it seems plausible the community is somewhat too focused on risks from misalignment rather than misuse or concentration of power.

- There’s currently very little work going into issues that arise even if AI is aligned, including the deployment problem, Will MacAskill’s “grand challenges” and Lukas Finnveden’s list of project ideas. If you put significant probability on alignment being solved, some of these could have high importance too; though most are at the stage where they can’t absorb a large number of people.

- Within these, digital sentience was the hottest topic, but to me it doesn’t obviously seem like the most pressing of these other issues. (Though doing field building for digital sentience is among the more shovel-ready of these ideas.)

- The concrete entrepreneurial idea that came up the most, and seemed most interesting to me, was founding orgs that use AI to improve epistemics / forecasting / decision-making (I have a draft post on this – comments welcome).

- Post-FTX, funding has become even more dramatically concentrated under Open Philanthropy, so finding new donors seems like a much bigger priority than in the past. (It seems plausible to me that $1bn in a foundation independent from OP could be worth several times that amount added to OP.)

- In addition, donors have less money than in the past, while the number of opportunities to fund things in AI safety has increased dramatically, which means marginal funding opportunities seem higher value than in the past (as a concrete example, nuclear security is getting almost no funding from the community, and perhaps only ~$30m of philanthropic funding in total).

- Both points mean efforts to start new foundations, fundraise and earn to give all seem more valuable compared to a couple of years ago.

- Many people mentioned comms as the biggest issue facing both AI safety and EA. EA has been losing its battle for messaging, and AI safety is in danger of losing its own too (with both a new powerful anti-regulation tech lobby and the more left-wing AI ethics scene branding it as sci-fi, doomer, cultish and in bed with labs).

- People might be neglecting measures that would help in very short timelines (e.g. transformative AI in under 3 years), though that might be because most people are unable to do much in these scenarios.

- Right now, directly talking about AI safety seems to get more people in the door than talking about EA, so some community building efforts have switched to that.

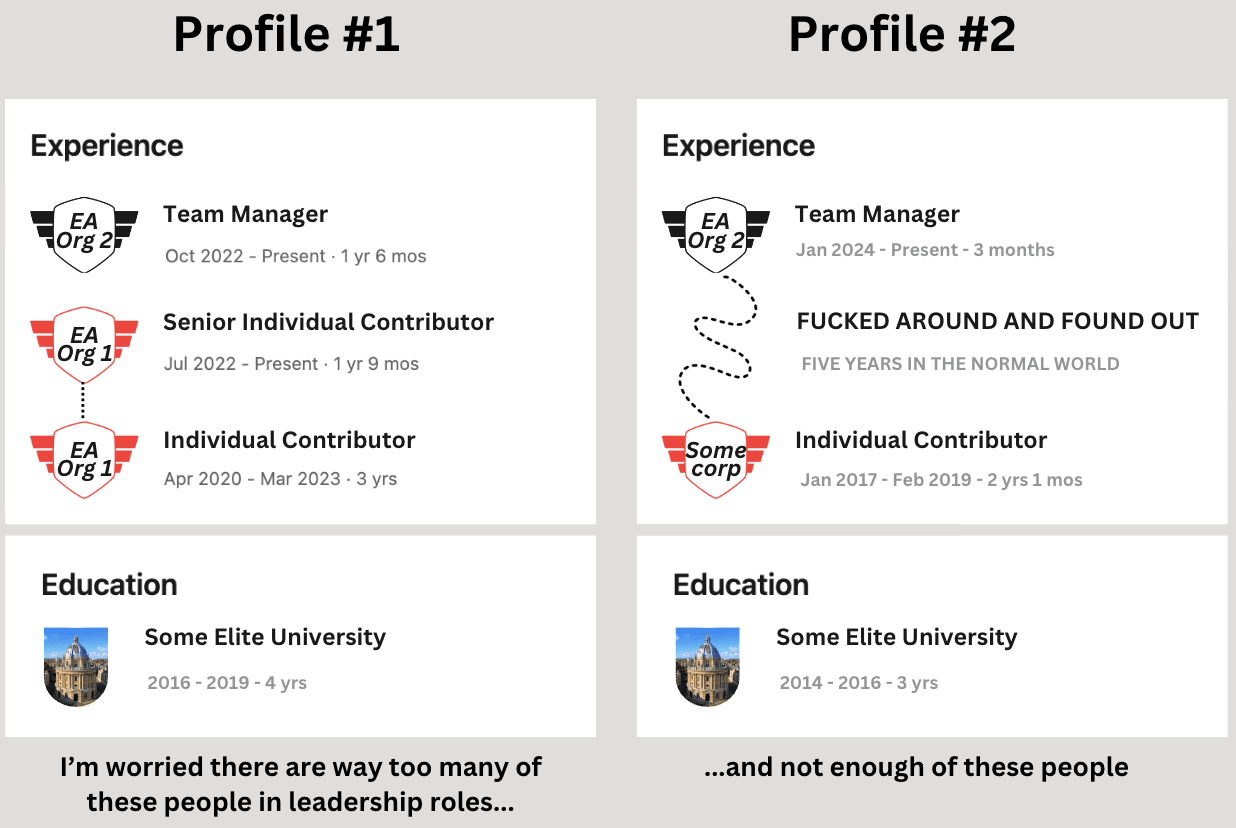

- There’s been a recent influx of junior people interested in AI safety, so it seems plausible the biggest bottleneck again lies with mentoring & management, rather than recruiting more junior people.

- Randomly: there seems to have been a trend of former leaders and managers switching back to object-level work.

I think the 80K profile notes (in a footnote) that their $1-10 billion guess includes many different kinds of government spending. I would guess it includes things like nonproliferation programs and fissile materials security, nuclear reactor safety, and probably the maintenance of parts of the nuclear weapons enterprise -- much of it at best tangentially related to preventing nuclear war.

So I think the number is a bit misleading (not unlike adding up AI ethics spending and AI capabilities spending and concluding that AI safety is not neglected). You can look at the single biggest grant under "nuclear issues" in the Peace and Security Funding Index (admittedly an imperfect database): it's the U.S. Overseas Private Investment Corporation (a former government funder) paying for spent nuclear fuel storage in Maryland...

A way to get at a better estimate of non-philanthropic spending might be to go through relevant parts of the State International Affairs Budget, the Bureau of Arms Control, Deterrence and Stability (ADS, formerly Arms Control, Verification, and Compliance), some DoD entities (like DTRA), and a small handful of others, add those up, and add some uncertainty around your estimates. You would get a much lower number (the Arms Control, Verification, and Compliance budget was only $31.2 million in FY 2013 according to Wikipedia -- I don't have time to dive into more recent numbers right now).

All of which is to say that I think Ben's observation that "nuclear security is getting almost no funding" is true in some sense both for funders focused on extreme risks (where Founders Pledge and Longview are the only ones) and for the field in general.