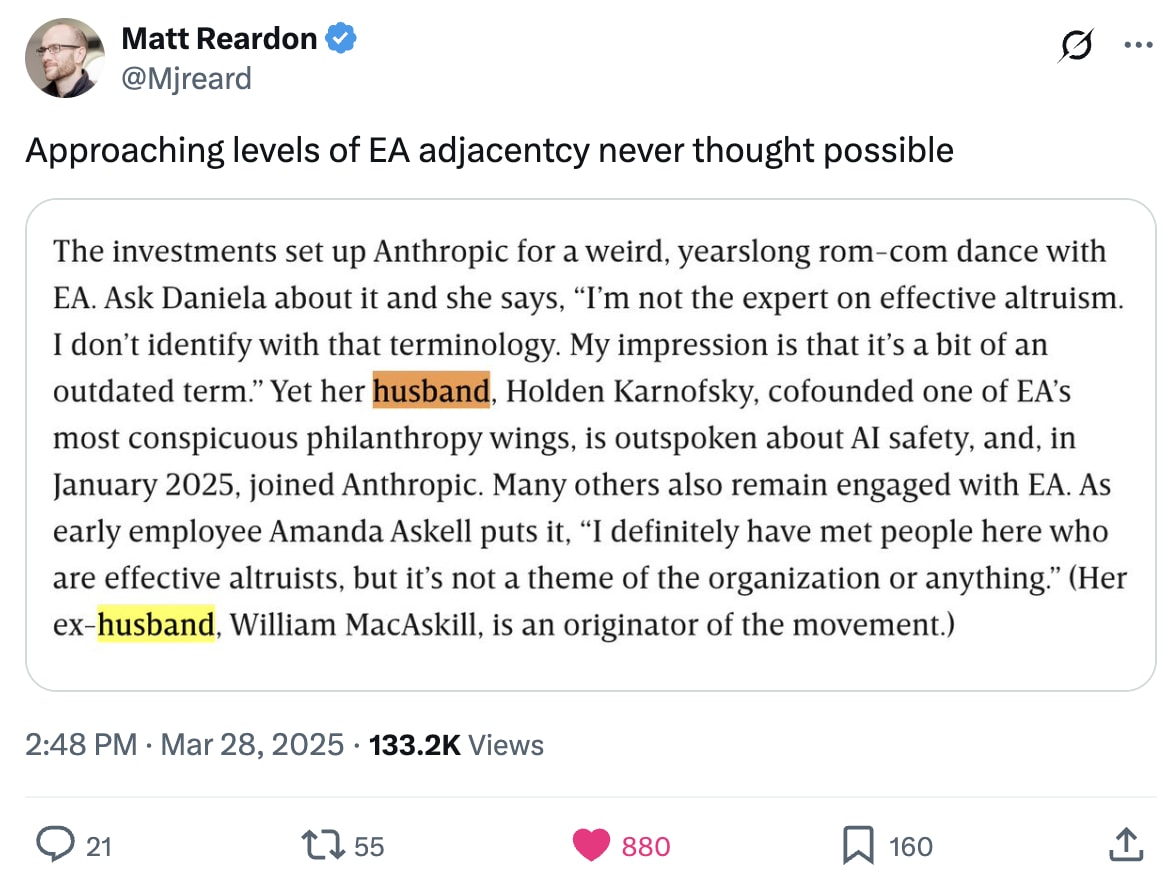

In a recent Wired article about Anthropic, there's a section where Anthropic's president, Daniela Amodei, and early employee Amanda Askell seem to suggest there's little connection between Anthropic and the effective altruism (EA) movement:

> Ask Daniela about it and she says, "I'm not the expert on effective altruism. I don't identify with that terminology. My impression is that it's a bit of an outdated term". Yet her husband, Holden Karnofsky, cofounded one of EA's most conspicuous philanthropy wings, is outspoken about AI safety, and, in January 2025, joined Anthropic. Many others also remain engaged with EA. As early employee Amanda Askell puts it, "I definitely have met people here who are effective altruists, but it's not a theme of the organization or anything". (Her ex-husband, William MacAskill, is an originator of the movement.)

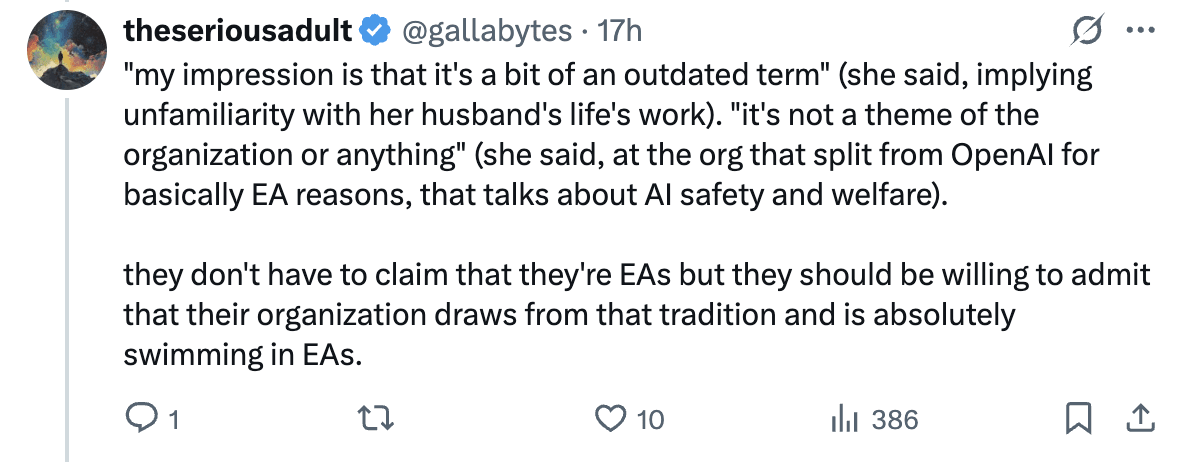

This led multiple people on Twitter to call out how bizarre this is:

In my eyes, there is a large and obvious connection between Anthropic and the EA community. In addition to the ties mentioned above:

- Dario, Anthropic’s CEO, was the 43rd signatory of the Giving What We Can pledge and wrote a guest post for the GiveWell blog. He also lived in a group house with Holden Karnofsky and Paul Christiano at a time when Paul and Dario were technical advisors to Open Philanthropy.

- Amanda Askell was the 67th signatory of the GWWC pledge.

- Many early and senior employees identify as effective altruists and/or previously worked for EA organisations.

- Anthropic has a "Long-Term Benefit Trust" which, in theory, can exercise significant control over the company. The current members are:
  - Zach Robinson - CEO of the Centre for Effective Altruism.
  - Neil Buddy Shah - CEO of the Clinton Health Access Initiative, former Managing Director at GiveWell, and speaker at multiple EA Global conferences.
  - Kanika Bahl - CEO of Evidence Action, a long-term grantee of GiveWell.

- Three of EA’s largest funders historically (Dustin Moskovitz, Sam Bankman-Fried and Jaan Tallinn) were early investors in Anthropic.

- Anthropic has hired a "model welfare lead" and seems to be the company most concerned about AI sentience, an issue that's rarely discussed outside EA circles.

- On the Future of Life podcast, Daniela said, "I think since we [Dario and her] were very, very small, we've always had this special bond around really wanting to make the world better or wanting to help people" and "he [Dario] was actually a very early GiveWell fan I think in 2007 or 2008."

- The Anthropic co-founders have apparently made a pledge to donate 80% of their Anthropic equity (mentioned in passing during a conversation between them here and discussed more here).

- Their first company value states, "We strive to make decisions that maximize positive outcomes for humanity in the long run."

It's perfectly fine if Daniela and Dario choose not to personally identify with EA (despite their many associations with it), and I'm not suggesting that Anthropic needs to brand itself as an EA organisation. But I think it’s dishonest to suggest there aren’t strong ties between Anthropic and the EA community. When asked, they could simply say something like, "yes, many people at Anthropic are motivated by EA principles."

It appears that Anthropic has made a communications decision to distance itself from the EA community, likely because of negative associations the EA brand has in some circles. It's not clear to me that this is even in their immediate self-interest. I think it’s a bad look to be so evasive about things that can be easily verified (as evidenced by the Twitter response).

This also personally makes me trust them less to act honestly in the future when the stakes are higher. Many people regard Anthropic as the most responsible frontier AI company. And it seems like something they genuinely care about—they invest a ton in AI safety, security and governance. Honest and straightforward communication seems important to maintain this trust.

I'm a bit confused about people suggesting this is defensible.

"I'm not the expert on effective altruism. I don't identify with that terminology. My impression is that it's a bit of an outdated term".

There are three statements here:

1. I'm not the expert on effective altruism - It's hard to see this as anything other than a lie. She's married to Holden Karnofsky and knows ALL about effective altruism. She would probably destroy me on a "Do you understand EA" quiz... I wonder how @Holden Karnofsky feels about this?

2. I don't identify with that terminology - Yes, true, at least now! Maybe she's still got some residual warmth for us deep in her heart?

3. My impression is that it's a bit of an outdated term - Her husband set up two of the biggest EA (or heavily EA-based) institutions, which are still going strong today. On what planet is it an "outdated" term? Perhaps on the planet where your main goal is growing and defending your company?

In addition to the clear associations in the OP, here is a passage from their 2017 wedding page, seemingly written by Daniela: "We are both excited about effective altruism: using evidence and reason to figure out how to benefit others as much as possible, and taking action on that basis. For gifts we’re asking for donations to charities recommended by GiveWell, an organization Holden co-founded."

If you want to distance yourself from EA, do it and be honest. If you'd rather not comment, don't comment. But don't obfuscate and lie by pretending you don't know about EA and downplaying the movement.

I'm all for giving people the benefit of the doubt, but there doesn't seem to be reasonable doubt here.

I don't love raising this, as it's largely speculation on my part, but there might still be an undercurrent of copium within the EA community among people who backed, or still back, Anthropic as the "best" of the AI acceleration bunch (which they quite possibly are) and want to hold that close after failing with OpenAI...

Exactly. Daniela and the senior leadership at one of the frontier AI labs are not the same as someone's random office colleague. There's a clear public interest angle here in terms of understanding the political and social affiliations of powerful and influential people, which is simply absent in the case you describe.