There has already been ample discussion of what norms and taboos should exist in the EA community, especially over the past ten months. Below, I'm sharing an incomplete list of actions and dynamics I would strongly encourage EAs and EA organizations to either strictly avoid or treat as warranting a serious—and possibly ongoing—risk analysis.

I believe there is a reasonable risk should EAs:

- Live with coworkers, especially when there is a power differential and especially when there is a direct report relationship

- Date coworkers, especially when there is a power differential and especially when there is a direct report relationship

- Promote[1] drug use among coworkers, including legal drugs, and including alcohol and stimulants

- Live with their funders/grantees, especially when substantial conflict-of-interest mechanisms are not active

- Date their funders/grantees, especially when substantial conflict-of-interest mechanisms are not active

- Date the partner of their funder/grantee, especially when substantial conflict-of-interest mechanisms are not active

- Retain someone as a full-time contractor or grant recipient for the long term, especially when it might not adhere to legal guidelines

- Offer employer-provided housing for more than a predefined and very short period of time, thereby making an employee’s housing dependent on their continued employment and allowing an employer access to an employee’s personal living space

Potentially more controversial, two aspects of the community I believe have substantial downsides that the community has insufficiently discussed or addressed:

- EA™ Group Houses and the branding of private, personal spaces as “EA”

- "Work trials" that require interruption of regular employment to complete, such that those currently employed full-time must leave their existing job to be considered for a prospective job

As I said, this list is far from complete, and I imagine people may disagree with portions of it. I’m hoping to stake this out as a position held by some EAs, and I’m hoping this post can serve as a prompt for further discussion and assessment.

[1] “Promote” is an ambiguous term here. I think this is true to life in that one person’s enthusiastic endorsement of a drug is another person’s peer pressure.

I'm concerned about EA falling into the standard "risk-averse bureaucracy" failure mode. Every time something visibly bad happens, the bureaucracy puts a bunch of safeguards in place. Over time the drag created by the safeguards does a lot of harm, but because the harm isn't as visible, the bureaucracy doesn't work as effectively to reduce it.

I would like to see Fermi estimates for some of these, including explicit estimates of less-visible downsides. For example, consider EA co-living, including for co-workers. If this were banned universally, my guess is that it would mean EAs paying many thousands of dollars extra per month in rent for housing and/or office space. It would probably lead to reduced motivation, increased loneliness, and wasted commute time among EAs. EA funding would become scarcer, likely triggering Goodharting among EAs who want to obtain funding, or leading to more people dying senselessly in the developing world.

A ban on co-living doesn't seem very cost-effective to me. It seems to me that expanding initiatives like Basefund would achieve something similar, but be far more cost-effective.

I consider power-dynamics safeguards (for example, making sure that anyone can quit their job and still have a place to stay) to be deontological. You won't easily change my mind with a cost-benefit analysis if the argument is something like "for the greater good, it's ok to make it very hard for some people to quit, because it will save EA money that can be used to save more lives".

This is similar to how it would be hard to convince me that stealing is a good idea, even if we could use the money to buy bed nets.

I can elaborate if you don't agree (or maybe you totally agree, in which case there's no need).

Also, I wouldn't lump all "risk-averse bureaucracy" into one group. Preventing the abuse of power dynamics is a specific instance that should get special treatment, imo.

A question here is something like "How do norms get removed?" What process gives people more freedom if we have restricted too much?

I agree that we should be wary of falling into the "risk-averse bureaucracy" failure mode, and I also think co-living for co-workers at a similar seniority level is fine (it is also normal outside EA). I also think there might be a good case for EA houses trying to have people from different orgs.

However, I'd like to point out that any Fermi estimates here would be fairly pointless. There are many different inputs you would need, and the reasonable range for each input is very wide, particularly with "potential reputational harm to EA from bad thing happening", which can range from nothing to FTX-level or far worse.

If it's pointless to do a Fermi estimate, that sounds a lot like saying it's pointless to try and figure out whether a ban is a good idea?

If that's the case, I favor letting people decide for themselves -- individuals understand their particular context, and can make a guess that's customized for their particular situation.

The most plausible version of this argument is not that someone will be a good housemate just because they are EA. It is that banning or discouraging EA co-living makes it more difficult for people to find any co-living arrangement.

It seems a little weird to me that most of the replies to this post are jumping to the practicalities/logistics of how we should/shouldn't implement official, explicit, community-wide bans on these risky behaviours.

I totally agree with OP that all the things listed above generally cause more harm than good. Most people in other cultures/communities would agree that they're the kind of thing that should be avoided, and most other people succeed in avoiding them without creating any explicit institution responsible for drawing a specific line between correct and incorrect behavior or implementing overt enforcement mechanisms.

If many in the community don't like these kinds of behaviours, we can all contribute to preventing them by judging things on a case-by-case basis and gently but firmly letting our peers know when we disapprove of their choices. If enough people softly disapprove of things like drug use or messy webs of romantic entanglement, this can go a long way towards reducing their prevalence. No need to draw bright lines in the sand or enshrine these norms in writing as exact rules.

A lot of these are phrased in ways that immediately sound like "oh, well of course you wouldn't do that", but the situations in which people end up doing them are generally much more ambiguous. When I think over my ~15 years in the workplace, I can find examples of essentially all of these, in my own life or a close coworker's, that I wouldn't classify as probably a bad idea:

In ~2016 one of my housemates who I'd been friends with for 5+ years recruited another of my housemates (who I'd been friends with for 10+ years) to join an EA-aligned company. The next year they recruited me as well. None of us reported to each other, but both of them were tenants of mine (my wife and I owned the house) and we were something like three of five people working for the company in Boston at the time.

I do think there was risk here, but I also think it was a pretty small one, and this would not have been a good reason for either me or my other housemate to say "sorry, not going to consider this job".

...

These comments are helpful, but I'm still having a difficult time zeroing in on a guiding heuristic here. And I feel mildly frustrated by the counterexamples in the same way I do when reading "well, they were always nice to me" comments on a post about a bad actor who deeply harmed someone, or hearing someone who routinely drives drunk say "well, I've never caused an accident." I think most (but not all) of my list falls into a category something like "fine or tolerable 9 times out of 10, but really bad, messy, or harmful that other 1 time, such that it may make those other 9 times less/not worth it." I'm not sure of the actual probabilities, and they definitely vary by bullet point.

In your case in particular, I'll note that a good chunk of your examples either directly involve Julia or involve you (the spouse of Julia, who I assume had Julia as a sounding board). This seems like a rare situation of being particularly well-positioned to deal with complicated situations. Arguably, if anyone can navigate complicated or risky situations well, it will be a community health professional. I'd assume something like 95% of people will be worse at handling a situation that goes south, and maybe >25% of people will be distinctly bad at it. So what norms should be established that factor in this potential? And how do we universalize in a way that makes the risk clear, promotes open risk analysis, and prevents situations that will get really bad should they get bad?

(own views only)

On a different tangent, I think you're treating "X with funder/grantee" relationships as symmetric. However, from my standpoint, the primary responsibility lies with the funder (and the organizations they represent) to manage their professional roles appropriately.

A grantmaker has a professional obligation to their employer and donors to avoid compromising their judgment and/or abusing their power; a grantee has no such professional obligations (Analogously, if Bob cheats on his wife with Candice, in Western liberal societies we generally understand that the bulk of the blame should fall on Bob, not Candice).

This is even more the case if you extend the professional limitations to partners-of-partners. Someone going on a date with me did not consent to have their future dating pool restricted by the community, especially if applied retroactively.

(Speaking for myself, in a personal capacity) When I see posts like this, I'm more than a bit confused about which funder/grantee relationships people are thinking about should be included.

Does it include:

- Actual active funder of an actual active grantee?

- eg Alice was the primary investigator of Bob's grant. Alice and Bob are housemates who share a two-person apartment. For some reason this was never flagged.

- A grantmaker in an organization which funded a grantee, but the grantmaker will never be involved in an actual grantmaking decision involving the grantee due to COI reasons?

- eg, Casey and Dylan are thinking of dating; Casey followed organizational protocol and marked themselves as abstaining from any grantmaking decisions involving Dylan's org.

- A grantmaker in an organization that funds the grantee, but the grantmaker will never be involved in an ac

...

See here, here, here, here, and here. The reported COIs are all financial rather than personal[1]; however, billionaires giving gifts to Supreme Court justices is, under most conditions, more concerning than grantmakers receiving gifts (from non-grantees). The primary worry for justices (at least in recent years) is money buying influence, whereas the primary worry for grantmakers (iiuc) is influence buying money.

Indeed, a common defense for non-disclosure appears to be "X billionaire is a personal friend of mine offering personal gifts," to demonstrate/claim it's not a financial/corporate transaction.

I think all of these have costs, and those costs should be recognized. But sometimes the benefits are worth it, banning the practice would make things worse, and the actually helpful thing is to come up with better solutions.

E.g.

> [don't have] "Work trials" that require interruption of regular employment to complete, such that those currently employed full-time must leave their existing job to be considered for a prospective job

The fact that people need to, at best, spend all their vacation days on work trials, and maybe take huge risks with their livelihood, is bad. A good friend of mine was badly burned on this, and I think less of the organization for it. OTOH, work trials are really informative. If we removed work trials, I expect that EA jobs would become even more of a club (because candidates would need references whom hirers personally know), jobs would be performed worse (because the hiring decision was made with less information), many people would get fired shortly after starting, and there would be more emphasis on unpaid work (as an alternate source of information).

No one is doing disruptive work trials to be mean, they're a solution to the fact that interviews aren't very informative. Could they be better implemented? Probably. Are there much better solutions out there? I hope so. But if you just take this option away I suspect things get worse.

To use a harder one: I agree co-workers dating has a lot of potential complications, and power differentials make it worse. But the counterfactual isn't necessarily "everyone gets jobs as good or better, with no negative consequences." It's things like this:

I have a friend who has provided hundreds of hours of labor towards EA causes, unpaid. The lack of payment isn't due to lack of value- worse projects in her subfield get funded all the time. But her partner works at a major grantmaker, and she's not very assertive, and so it never happens.

You can blame this on her being unassertive, but protecting unassertive people is a major point of these rules.

Some of these seem fine to me as norms, some of them seem bad. Some concrete cases:

Many startups start from someone's living room. LessWrong was built in the Event Horizon living room. This was great, I don't think it hurt anyone, and it also helped the organization survive through the pandemic, which I think was quite good.

I don't understand this. There exist many long-term contracting relationships that Lightcone engages in. Seems totally fine to me. Also, many people prefer to be grant recipients instead of employees, those come with totally different relationship dynamics.

I also find this kind of dicey, though at least in Lightcone's case I think it's definitely worth it, and I know of ...

Just to hopefully quickly clarify this point in particular: there is a legal distinction between full-time employees and contractors, and I think EA orgs somewhat frequently misclassify workers. It's totally possible Lightcone has long-term contractor or grant recipient relationships that are fully above board and happy for all involved; however, I know some organizations do not. This can be a cost-saving mechanism for the organization that comes at the expense of not just its adherence to employment law, but also its team's security (e.g., everything from loss of eligibility for benefits, to increased individual tax burden, to ineligibility for unemployment compensation).

To respond to the other points:

I'm glad this went well for LessWrong! Sometimes, however, people discover their beloved roommate is a very bad coworker and it leads to a major blowup. I think this should be treated similarly to family business ventures: you and your brother or you and your spouse might work phenomenally together professionally, or it might destroy your family and end your marriage.

Another important distinction here might be the difference between a stable living situation predating a new shared project vs. an existing organization's staff deciding to live together or pressuring new employees to live with other employees.

Either way, I think there is a risk that a discussion about an important campaign strategy turns into an argument about whose turn it is to wash the dishes.

...

I wouldn't support a ban on all these practices, but surely they are a "risk"?

What if you just broke up with a coworker in a small office? What if you live together, and they are the type of roommate who drives everyone up the wall by failing to do the dishes on time, etc.? What if the roommate/partner needs to be fired for non-performance, and now you have a tremendously awkward situation at home?

It's hard enough to execute at work on a high level, and see eye-to-eye with co-workers about strategy, workplace communication, and everything else. Add in romance and roommates (in a small office), and it obviously can lead to even more complicated and difficult scenarios.

Consider why it's the case that every few months there is a long article about some organization that, in the best case, looks like it was run with the professionalism and adult supervision of a frat house.

The OP is not titled "An incomplete list of activities that EA orgs should think about before doing"; it is "An incomplete list of activities that EA orgs Probably Shouldn't Do". I agree that most of the things listed in the OP seem reasonable to think about and take into account in a risk analysis, but I doubt the OP is actually leading people to do much more of that.

I would love a post that would go into more detail on "when each of these seems appropriate to me", which seems much more helpful to me.

I think "probably shouldn't" is fair. In most cases, these should be avoided. However, in scenarios with appropriate mitigations and/or thought given, they can be fine. You've given some examples of doing this.

In terms of the post, it has generated lots of good discussion about which of these norms the community might want to adopt or how they should be modified. Therefore, the post is valuable in its current form. It's a discussion starter IMO, not a list of things that should be cited in future.

Can we add "ask their employees to break the law" to this?

A gripe I have with EA is that it is not radical enough. The American civil rights movement of the 1950s and 1960s was very effective and altruistic, even though its members were arrested and its leaders were wiretapped by the FBI and assassinated in suspicious ways. Or consider the Stonewall riots.

More contemporarily, I think Uber is good for the world counterfactually. It's good that Nakamoto made Bitcoin. It's good that Snowden leaked the NSA stuff. (Probably; I'm less sure about the impact of these examples.)

Most crime is bad, and most altruistic crime is ineffective or counterproductive. But not all.

Edited to clarify that my experiences were all with the same organization.

Some personal examples:

I worked for an EA-adjacent organization and was repeatedly asked, and witnessed co-workers being asked, to use campaign donation data to solicit people for political and charitable donations. This is illegal[1]. My employer openly stated they knew it was illegal, but said that it was fine because "everyone does it and we need the money". I was also asked, and witnessed other people being told, to falsify financial reports to funders to make it look like we had spent grant money that, in various situations, we either: hadn't spent, didn't know whether we'd spent it or not, or may have spent on things that the grant hadn't been given for. Depending on how the specific sums of monies had actually been spent, that was anywhere from illegal to at least a massive breach of the contracts with our funders.

[1] https://www.fec.gov/updates/sale-or-use-contributor-information/

- Voluntary human challenge trials

- Run a real money prediction market for US citizens

- Random compliance stuff that startups don't always bother with: GDPR, purchased mailing lists, D&I training in California, ...

Here are some illegal (or gray-legal) things that I'd consider effectively altruistic though I predict no "EA" org will ever do:

- Produce medicine without a patent

- Pill-mill prescription-as-a-service for certain medications

- Embryo selection or human genome editing for intelligence

- Forge college degrees

- Sell organs

- Sex work earn-to-give

- Helping illegal immigration

Work trials (paid, obviously) are awesome for better hiring, especially if you're seeking good candidates who don't fulfill the traditional criteria (e.g. coming from an elite US/UK university). Many job seekers don't currently have a job.

Living with other EAs or your coworkers is mostly fine too, especially if you're in a normal living situation, like most EA group houses are.

These suggestions aren't great. I agree with the "Don't date" ones, but these were already argued for before.

In general, I think it is helpful in discussing work trials if people (including the OP) distinguished between three different things that are commonly called work trials:

I'm not sure which of these the OP was including in her claim. Presumably not the first one?

I wonder if there should be some set of standards that orgs can opt into, knowing that they will be audited for compliance? I am very sensitive to the tradeoffs here with being a small org that can move quickly, so maybe pledging not to do these things could be a higher level of certification for orgs large and secure enough to handle the overhead?

Thanks for getting the ball rolling on this discussion! This can often be a thankless task as even if people agree with 70-80% of what you wrote, unfortunately, their comments will naturally focus on the parts where they disagree with you.

On the other hand, parts of this seems to go too far. For example, would organising an after-work drinks count as "promoting drugs among coworkers, including alcohol"?

This isn't the only example where I'd like to see a bit more nuance and thought.

I think these would be great norms to promote within EA. Although I think in a start-up scenario, living with / dating co-workers at the same organisational power level is pretty fine.

I think you're right that there's some risk in these situations. But also: work is one of the main places where one is able to meet people, including potential romantic partners. Norms against dating co-workers therefore seem quite costly in lost romance, which I think is a big deal! I think it's probably worth having norms against the cases you single out as especially risky, but otherwise, I'd rather our norms be laissez-faire.

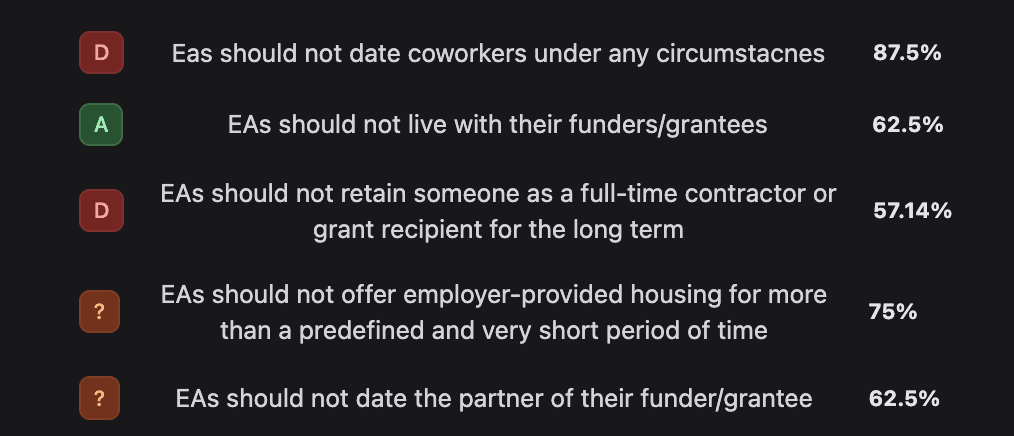

I made a poll. Now you can anonymously say which of these norms you agree and disagree with.

https://viewpoints.xyz/polls/ea-norms

The results screen is not working (I'm talking to the devs), but as an illustrative point, note how much ambiguity there already is on many of these points. These are my own votes, selectively chosen, so take them with a large grain of salt.

87% disagree with "EAs should not date coworkers"

62% think that not dating the partner of a funder/grantee is complicated.

I don't think the conversation is easy to have without a degree of anonymity. Few people are gonna publicly say they think that dating funders' partners is fine, but many people are at least unsure.

Note I'm not saying Rocky's list is wrong. I am saying we don't have a way for the less acceptable side to argue it.

Thank you for sharing this. I think that this is a great way to encourage more conversation about these practices/behaviors.

I think this pushes a set of norms as a fait accompli when many seem murkier to me. In particular, among the things you think are clearly a risk are several situations that I do not think orgs should have on their risk list unless there are other external reasons.

Can we wait a bit after the current complex discussion before pushing out lists of norms that are hard to disagree with publicly?

Edit: Thank you @Rockwell for starting the discussion. Apologies for being a bit grumpy above.

Hmm, if you have a five-person org, I feel like it straightforwardly is a risk factor if two of the employees date each other. This is true even if both of them are individual contributors, there are no power differentials, etc.

It matters much less (arguably ~0) at say Google's scale if a random data analyst working on Google Ads is dating a random software engineer working on Google Cloud. I'm not sure where the cutoff is, anything from 20 to 200 seems defensible to me.

You might believe that the ceteris paribus risk is not worth correcting for due to other values or pragmatics (professionalism norms against intruding on employees' personal lives; thinking that the costs of breaking up a relationship are greater than the benefits you gain from the reduced organizational risk[1], etc.). But it absolutely is a risk.

[1] For starters, I expect a reasonable fraction of those conversations to end with one of the employees quitting the comp ...

Perhaps we could change the wording from "Date coworkers" to something like "Date people you work with." After all, the former phrasing allows dating a vendor or a contractor, while prohibiting a random data analyst working on Google Ads from dating a random software engineer working on Google Cloud.

I think this is a bad idea. Suggesting someone 'get a diagnosis' is a terrible approach to health and medical advice. Giving someone your own prescribed medication is also a bad idea, and is exactly the kind of norm-crossing ickiness that should be reduced/eliminated. The version I would endorse is:

"Have you considered whether you might have ADHD? It might be a good idea to talk to a doctor about these issues you're having, as medication can be helpful here."

Wouldn't this be illegal? In which case, I think it's pretty clearly at least a risk.

If someone has been struggling to get their prescription and someone else lends them some for a few days, that is illegal. And if I was their boss and I thought this might be about to take place, I would walk out of the room.

Generally I agree that following the law is good, but I think legal norms around medication are way too strict and I wouldn't want to be the reason someone gets theirs.

This article is about both risks and norms. And I don't want anyone who helps someone out by lending their medication to be considered a norm-breaking EA, not least because that's quite a lot of people.

I'll just flag that this is a different situation to the one in your other comment, and I don't think it raises any ethical difficulties.

Thanks for re-emphasizing these norms.

For those who disagree with these norms, these sources help explain why they are important:

The sub-population that disagrees with these norms has substantial overlap with the sub-population that would most benefit from them.

I suspect that there is significant geographic concentration in the Bay Area as well, because of a combination of factors including housing prices pushing people into group homes, gender ratios, tech/startup culture, and the co-existence of the rationalist community.

I don't think the situation will get better, despite the attempt at norm setting, until the locus of EA money and power is less concentrated in the Bay Area.

I conditionally disagree with the "Work trials" point. I think work trials are a pretty positive innovation, one that EA organizations in particular use, and they enable employers to hire for a better fit than they could without them. This is good in the long run for both potential employees and the employer.

This is conditional on

I can anticipate some reasonable disagreement on this matter but it seems to me it's not so unreasonable to give these work trials even if they last long enough that it requires the candidate to take a few days of leave off work. Being paid for the trial itself should compensate for the inconvenience. More than a week of time seems like it should have even stronger justification and I wouldn't endorse that.

Although I understand this process would still require some inconvenience to the candidate and their current employer, on balance, if the candidate is well-paid, it seems like a reasonable trade-off to ask for, considering the benefit to the candidate and the employer for finding a good fit.

What does this mean? Is this referring to people living with other EAs and referring to it as an EA group house?

It's time to create separate brands for the professional and the social/personal aspects of EA.

The conflation of the two is at the source of a lot of the issues, and folks should be able to opt into one and not the other.

Thanks for writing up your views here! I think it might be quite valuable to have more open conversations about what norms there's consensus on and which ones there aren't, which this helps spark.

I fixed the results page of the poll and I think it's pretty interesting.

Poll: https://viewpoints.xyz/polls/ea-norms

Results: https://viewpoints.xyz/polls/ea-norms/results

Most EAs seem to think some of these risk behaviours are fine.

Likewise there is a lot of disagreement.

And there was a lot of uncertainty (the question mark option)

I'm glad you wrote this post. Mostly before reading this post, I wrote a draft for what I want my personal conflict of interest policy to be, especially with regard to personal and professional relationships. Changing community norms can be hard, but changing my norms might be as easy as leaving a persuasive comment! I'm open to feedback and suggestions here for anybody interested.

Great suggestions, genuinely.

Now, let's count off those who are already in such situations, many of whom are at the highest levels of EA, some who have been successfully navigating all this complexity for a decade. What are their secrets? Can there, maybe, be some lessons from them that can mitigate some of the ill-effects for those who in the future are still going to inevitably find themselves there?

I think I largely agree with this list, and want to make a related point, which is that I think it's better to start with an organizational culture that errs on the side of being too professional, since 1) I think it's easier to relax a professional culture over time than it is to go in the other direction and 2) the risks of being too professional generally seem smaller than the risks of being insufficiently professional.

While I broadly agree with Rocky's list I want to push back a little vs. your points:

Re your (2): I've found that small entities are in a constant struggle for survival, and must move fast and focus on the most important problems unique to their ability to make a difference in the world. Small-seeming requirements like "new hires have to find their own housing" can easily make the difference between being able to move quickly vs. slowly on some project that makes or breaks the company. I think for new entities the risks of incurring large costs before you have 'proven yourself' are quite high.

My experience also disagrees with your (1): As my company has grown, we have had many forces naturally pushing in the direction of "more professional": new hires tend to be much more worried about blame about doing things too quick-and-dirty rather than incurring costs on the business in order to do things the buttoned-up way; I've stepped in more often to accept a risk rather than to prevent one although I certainly do both!

(Side note: as a potential counterpoint to the above, I do note that Alameda/FTX was clearly well below professional standards at >200 employees - my assumption is that Sam/execs were constantly stepping in to keep the culture the way they wanted it. If I learned that somehow most of the 200 employees were pushing in the direction of less professionalism on their own, I would update to agree with you on (1).)

I don't want to speak for Lincoln, but it straightforwardly seems obvious to me that if you need non-Senegalese employees to do a stint in Senegal (already a pretty high ask for some people), providing housing (and other amenities you don't usually need to provide if your employees are working out of metropolitan areas in their home countries) is more likely to ease the transition. You might believe that the risks are so high that it's not worth the benefits, but the benefits are definitely real.

Typo? “I believe there is a reasonable risk should EAs:”

Do you mean “a reasonable risk if EAs” or “a reasonable risk that EAs should not…”

The wording is confusing to me