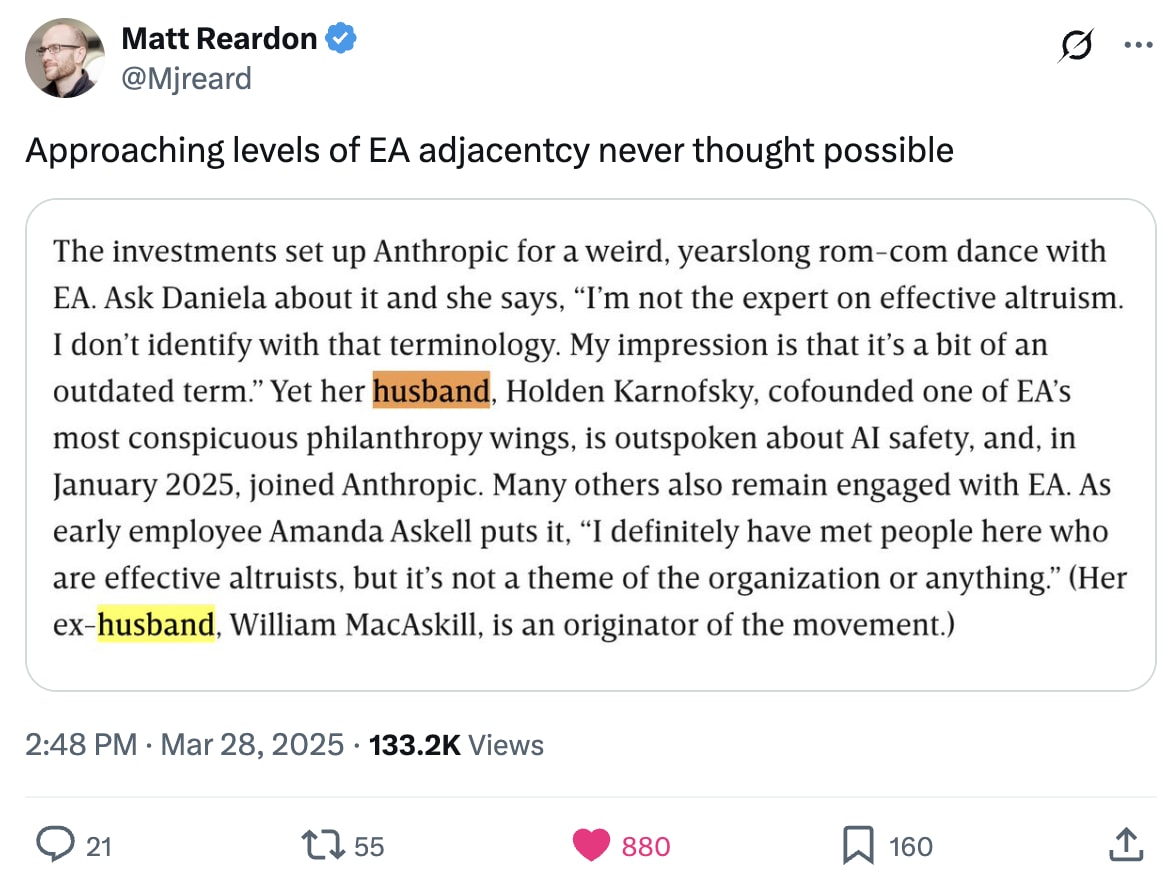

In a recent Wired article about Anthropic, there's a section where Anthropic's president, Daniela Amodei, and early employee Amanda Askell seem to suggest there's little connection between Anthropic and the EA movement:

Ask Daniela about it and she says, "I'm not the expert on effective altruism. I don't identify with that terminology. My impression is that it's a bit of an outdated term". Yet her husband, Holden Karnofsky, cofounded one of EA's most conspicuous philanthropy wings, is outspoken about AI safety, and, in January 2025, joined Anthropic. Many others also remain engaged with EA. As early employee Amanda Askell puts it, "I definitely have met people here who are effective altruists, but it's not a theme of the organization or anything". (Her ex-husband, William MacAskill, is an originator of the movement.)

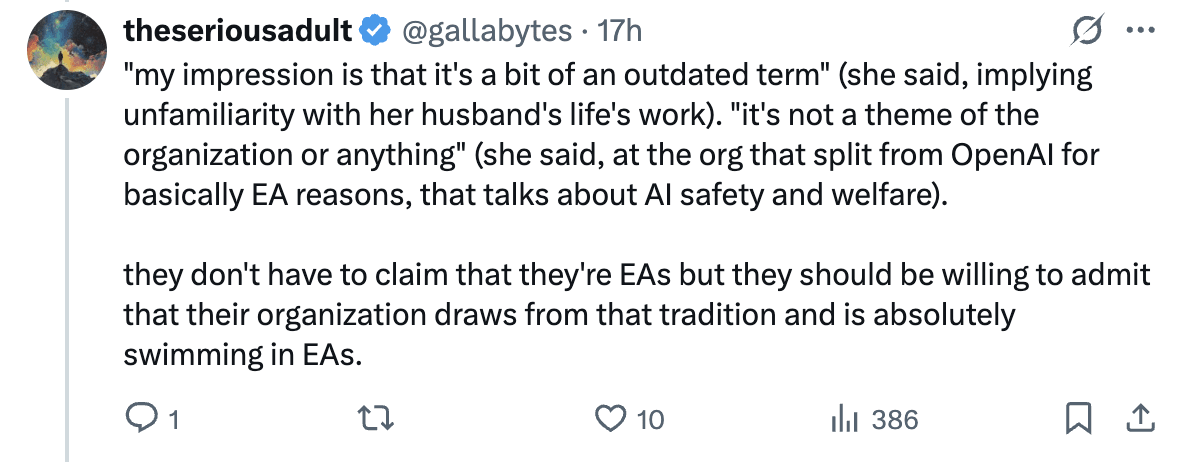

This led multiple people on Twitter to point out how bizarre these statements are.

In my eyes, there is a large and obvious connection between Anthropic and the EA community. In addition to the ties mentioned above:

- Dario, Anthropic’s CEO, was the 43rd signatory of the Giving What We Can pledge and wrote a guest post for the GiveWell blog. He also lived in a group house with Holden Karnofsky and Paul Christiano at a time when Paul and Dario were technical advisors to Open Philanthropy.

- Amanda Askell was the 67th signatory of the GWWC pledge.

- Many early and senior employees identify as effective altruists and/or previously worked for EA organisations.

- Anthropic has a "Long-Term Benefit Trust" which, in theory, can exercise significant control over the company. The current members are:

    - Zach Robinson - CEO of the Centre for Effective Altruism.

    - Neil Buddy Shah - CEO of the Clinton Health Access Initiative, former Managing Director at GiveWell and speaker at multiple EA Global conferences.

    - Kanika Bahl - CEO of Evidence Action, a long-term grantee of GiveWell.

- Three of EA’s largest funders historically (Dustin Moskovitz, Sam Bankman-Fried and Jaan Tallinn) were early investors in Anthropic.

- Anthropic has hired a "model welfare lead" and seems to be the company most concerned about AI sentience, an issue that's discussed little outside of EA circles.

- On the Future of Life podcast, Daniela said, "I think since we [Dario and her] were very, very small, we've always had this special bond around really wanting to make the world better or wanting to help people" and "he [Dario] was actually a very early GiveWell fan I think in 2007 or 2008."

- The Anthropic co-founders have apparently pledged to donate 80% of their Anthropic equity (mentioned in passing during a recorded conversation between them and discussed in more detail elsewhere).

- Their first company value states, "We strive to make decisions that maximize positive outcomes for humanity in the long run."

It's perfectly fine if Daniela and Dario choose not to personally identify with EA (despite having lots of associations) and I'm not suggesting that Anthropic needs to brand itself as an EA organisation. But I think it’s dishonest to suggest there aren’t strong ties between Anthropic and the EA community. When asked, they could simply say something like, "yes, many people at Anthropic are motivated by EA principles."

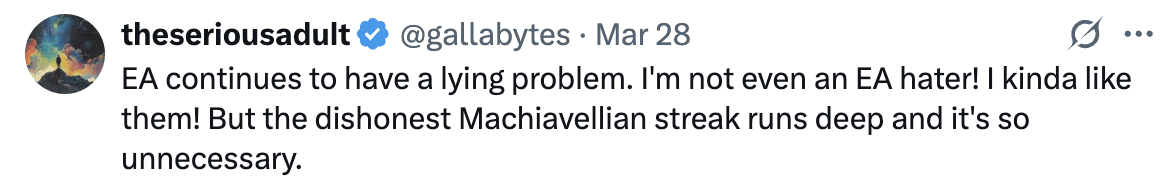

It appears that Anthropic has made a communications decision to distance itself from the EA community, likely because of negative associations the EA brand has in some circles. It's not clear to me that this is even in their immediate self-interest. I think it’s a bad look to be so evasive about things that can be easily verified (as evidenced by the Twitter response).

Personally, this also makes me trust them less to act honestly in the future when the stakes are higher. Many people regard Anthropic as the most responsible frontier AI company, and that reputation seems like something they genuinely care about: they invest a ton in AI safety, security and governance. Honest and straightforward communication seems important to maintaining this trust.

Now that you mention this, I think it's worth flagging the conflict of interest between EA and Anthropic that it poses. Although it's a little awkward to ascribe conflicts of interest to movements, I think a belief that ideological allies hold vast amounts of wealth in a specific company -- especially combined with a hope that such allies will use said wealth to further the movement's objectives -- qualifies.

There are a couple of layers to that. First, there's a concern that the financial entanglement with Anthropic could influence EA actors, such as by pulling punches on Anthropic, punching extra-hard on OpenAI, or shading policy proposals in Anthropic's favor. Relatedly, people may hesitate to criticize Anthropic (or make policy proposals hostile to it) because their actual or potential funders have Anthropic entanglements, whether or not the funders would actually act in a conflicted manner.

By analogy, I don't see EA as a credible source on the virtues and drawbacks of crypto or Asana. The difference is that neither crypto nor management software is an EA cause area, so those conflicts are less likely to impinge on core EA work than the conflict regarding Anthropic.

The next layer is that a reasonable observer would discount some EA actions and proposals based on the COI. To a somewhat informed member of the general public or a policymaker, I think establishing the financial COI creates a burden shift, under which EA bears an affirmative burden of establishing that its actions and proposals are free of taint. That's a hard burden to meet in a highly technical and fast-developing field. And some powerful entities (e.g., OpenAI) would be incentivized to hammer on the COI if people start listening to EA more.

I'm not sure how to mitigate this COI, although some sort of firewall between funders with Anthropic entanglements and grantmakers might help some.

(In this particular case, how Anthropic communicates about EA is more of a meta concern, and so I don't feel the COI in the same way I would if the concern about Anthropic were at the object level. Also, being composed of social animals, EA cares about its reputation for more than instrumental reasons, so to the extent that there is a pro-Anthropic COI, that concern for reputation may largely counteract it. However, I still think it's generally worth explicitly raising and considering the COI where Anthropic-related conduct is being considered.)