All posts

December 2022

Frontpage Posts

Quick takes

EA Forum discourse tracks actual stakes very poorly

Examples:

1. There have been many posts about EA spending lots of money, but to my knowledge no posts about the failure to hedge crypto exposure against the crypto crash of the last year, or the failure to hedge Meta/Asana stock, or EA’s failure to produce more billion-dollar start-ups. EA spending norms seem responsible for $1m–$30m of 2022 expenses, but failures to preserve/increase EA assets seem responsible for $1b–$30b of 2022 financial losses, a ~1000x difference.

2. People are demanding transparency about the purchase of Wytham Abbey (£15m), but they’re not discussing whether it was a good idea to invest $580m in Anthropic (HT to someone else for this example). The financial difference is ~30x, the potential impact difference seems much greater still.

Basically I think EA Forum discourse, Karma voting, and the inflation-adjusted overview of top posts completely fail to track the importance of the ideas presented there. Karma seems useful for deciding which comments to read, but otherwise its use seems fairly limited.

(Here's a related post.)

A Personal Apology

I think I’m significantly more involved than most people I know in tying the fate of effective altruism in general, and Rethink Priorities in particular, with that of FTX. This probably led to rather bad consequences ex post, and I’m very sorry for this.

I don’t think I’m meaningfully responsible for the biggest potential issue with FTX. I was not aware of the alleged highly unethical behavior (including severe mismanagement of consumer funds) at FTX. I also have not, to my knowledge, contributed meaningfully to the relevant reputational laundering or branding that led innocent external depositors to put money in FTX. The lack of influence there is not because I had any relevant special knowledge of FTX, but because I have primarily focused on building an audience within the effective altruism community, who are typically skeptical of cryptocurrency, and because I have actively avoided encouraging others to invest in cryptocurrency. I’m personally pretty skeptical about the alleged social value of pure financialization in general and cryptocurrency in particular, and also I’ve always thought of crypto as a substantially more risky asset than many retail investors are led to believe.[1]

However, I think I contributed both reputationally and operationally to tying FTX in with the effective altruism community. Most saliently, I’ve done the following:

1. I decided on my team’s strategic focus on longtermist megaprojects in large part due to anticipated investments in world-saving activities from FTX. Clearly this did not pan out.

2. In much of 2022, I strongly encouraged the rest of Rethink Priorities, including my manager, to seriously consider incorporating influencing the Future Fund into our organization’s Theory of Change.

1. Fortunately RP’s leadership was more generally skeptical of tying in our fate with cryptocurrency than I was, and took steps to minimize exposure.

3. I was a regranter for the Future Fund in a personal capacity (n

Consider radical changes without freaking out

As someone running an organization, I frequently entertain crazy alternatives, such as shutting down our summer fellowship to instead launch a school, moving the organization to a different continent, or shutting down the organization so the cofounders can go work in AI policy.

I think it's important for individuals and organizations to have the ability to entertain crazy alternatives because it makes it more likely that they escape local optima and find projects/ideas that are vastly more impactful.

Entertaining crazy alternatives can be mentally stressful: it can cause you or others in your organization to be concerned that their impact, social environment, job, or financial situation is insecure. This can be addressed by pointing out why these discussions are important, a clear mental distinction between brainstorming mode and decision-making, and a shared understanding that big changes will be made carefully.

Why considering radical changes seems important

The best projects are orders of magnitude more impactful than good ones. Moving from a local optimum to a global one often involves big changes, and the path isn't always very smooth. Killing your darlings can be painful. The most successful companies and projects typically have reinvented themselves multiple times until they settled on the activity that was most successful. Having a wide mental and organizational Overton window seems crucial for being able to make pivots that can increase your impact several-fold.

When I took on leadership at CLR, we still had several other projects, such as REG, which raised $15 million for EA charities at a cost of $500k. That might sound impressive, but in the greater scheme of things raising a few million wasn't very useful given that the best money-making opportunities could make a lot more per staff per year, and EA wasn't funding-constrained anymore. It took me way too long to realize this, and only my successor st

The whole/only real point of the effective altruism community is to do the most good.

If the continued existence of the community does the most good,

I desire to believe that the continued existence of the community does the most good;

If ending the community does the most good,

I desire to believe that ending the community does the most good;

Let me not become attached to beliefs I may not want.

November 2022

Frontpage Posts

Quick takes

I am a bit worried people are going to massively overcorrect on the FTX debacle in ways that don't really matter and impose needless costs in various ways. We should make sure to get a clear picture of what happened first and foremost.

Seems prima facie bad that orgs in the Effective Ventures umbrella ask employees, contractors and volunteers to sign NDAs without reassurance of whistleblower protections. Even if the content of the NDA is mundane, it will have a chilling effect.

I’ve been thinking hard about whether to publicly comment more on FTX in the near term. Much for the reasons Holden gives here, and for some of the reasons given here, I’ve decided against saying any more than I’ve already said for now.

I’m still in the process of understanding what happened, and processing the new information that comes in every day. I'm also still working through my views on how I and the EA community could and should respond.

I know this might be dissatisfying, and I’m really sorry about that, but I think it’s the right call, and will ultimately lead to a better and more helpful response.

The FTX crisis through the lens of Wikipedia pageviews.

(Relevant: comparing the amounts donated and defrauded by EAs)

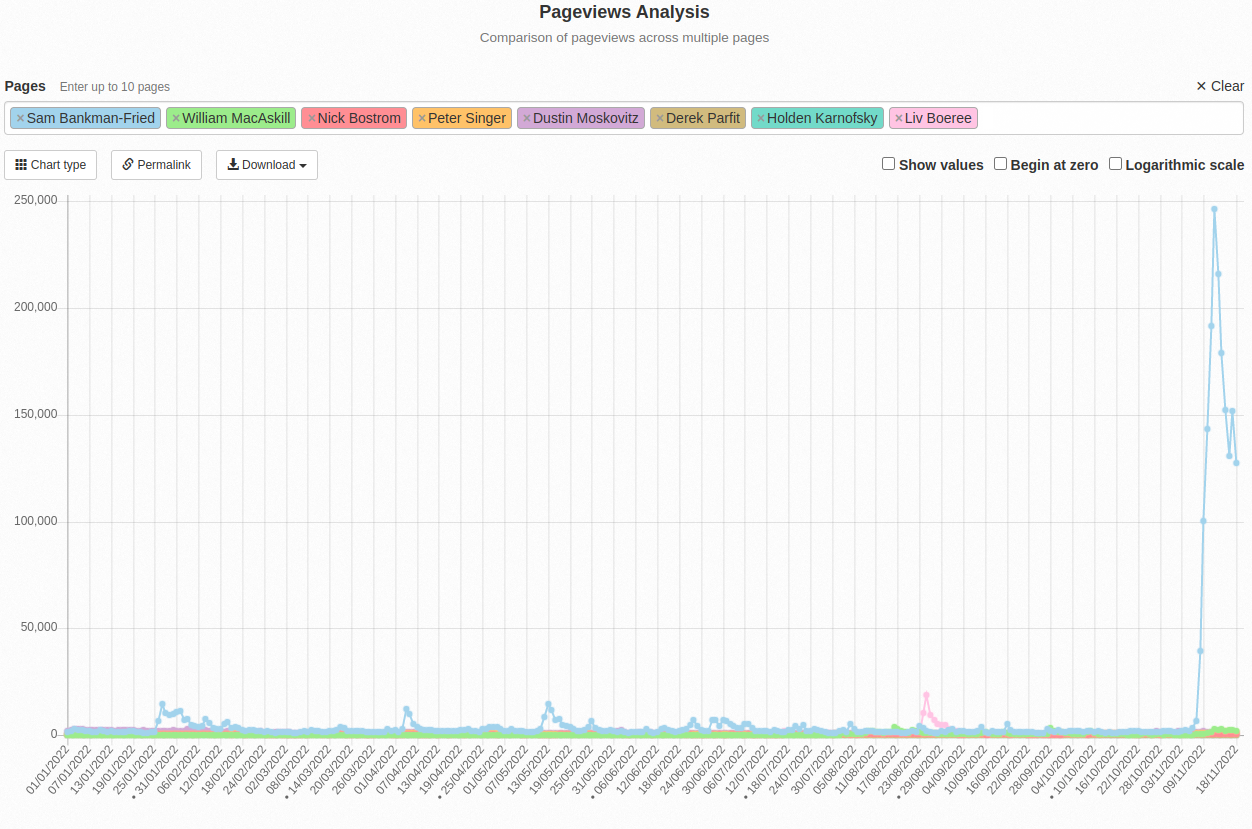

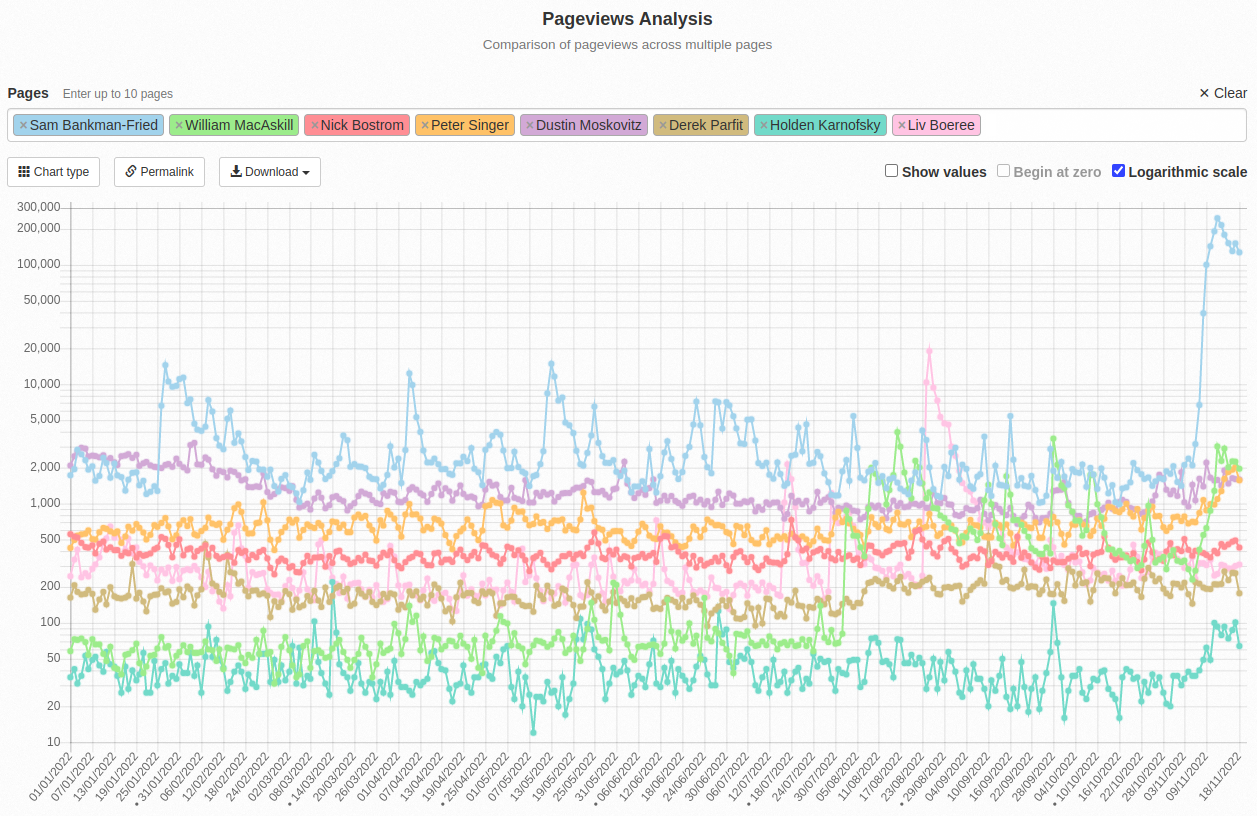

1. In the last two weeks, SBF's Wikipedia page has had about 2M views. This absolutely dwarfs the number of pageviews previously received by any major EA.

2. Viewing the same graph on a logarithmic scale, we can see that even before the recent crisis, SBF was the best known EA. Second was Moskovitz, and roughly tied at third are Singer and MacAskill.

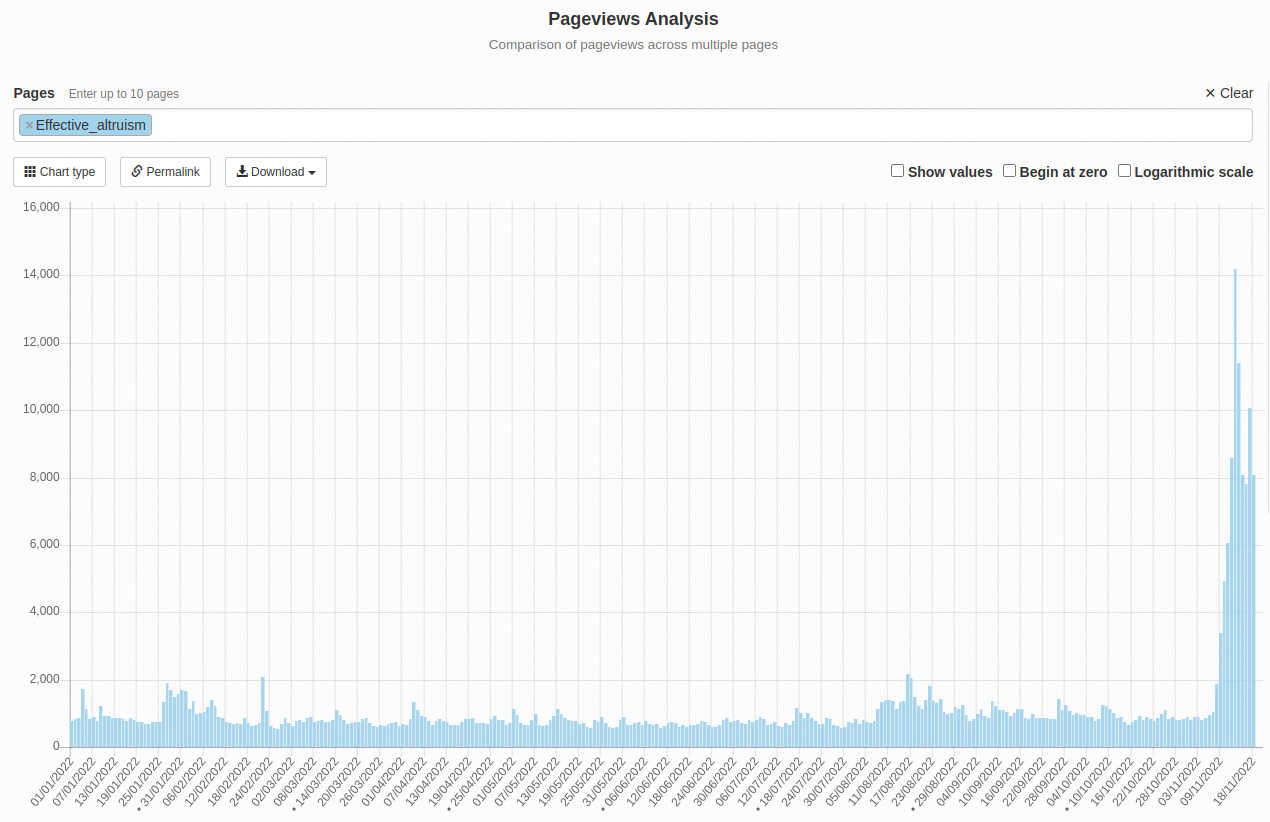

3. Since the scandal, many people will have heard about effective altruism, in a negative light. It has been accumulating pageviews at about 10x the normal rate. If pageviews are a good guide, then 2% of people who had heard about effective altruism ever would have heard about it in the last two weeks, through the FTX implosion.

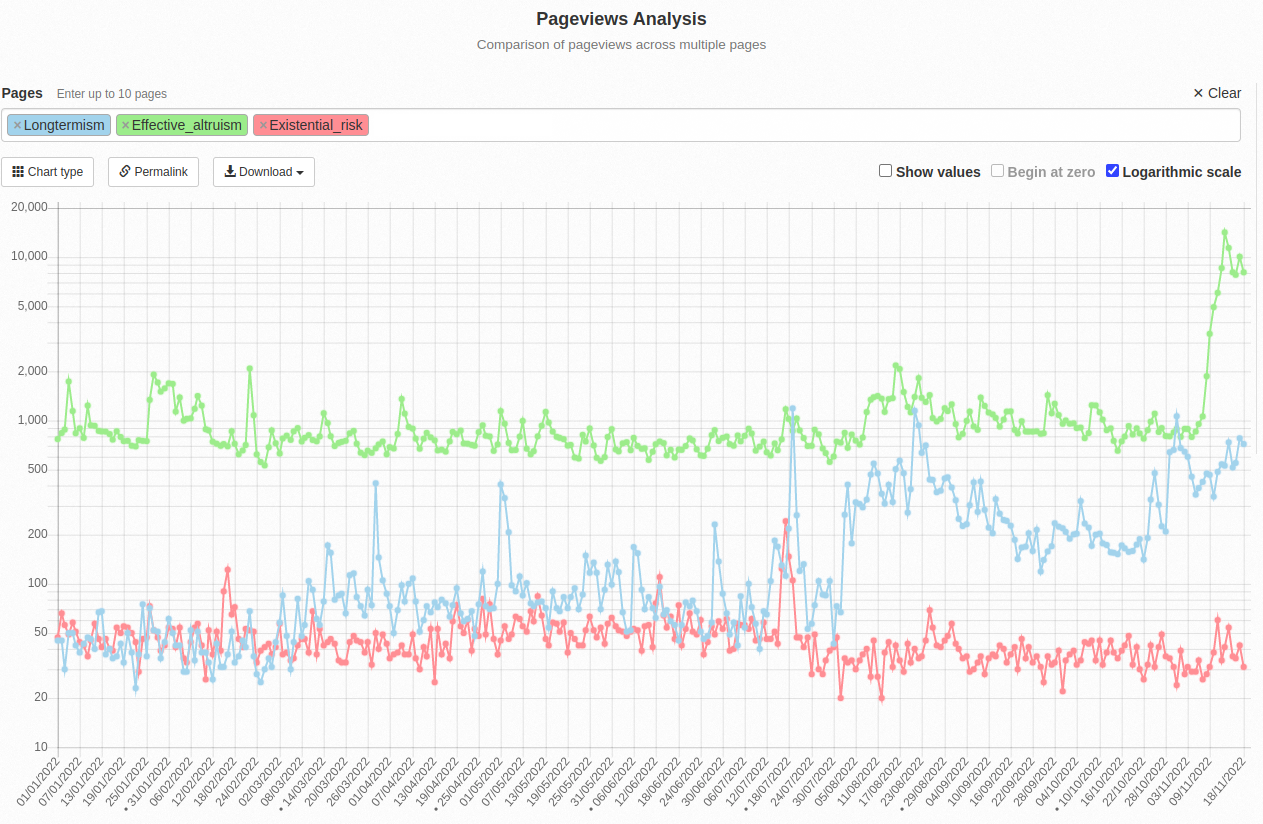

4. Interest in "longtermism" has been only weakly affected by the FTX implosion, and "existential risk" not at all.

Given this and the fact that two books and a film are on the way, I think that "effective altruism" is more likely than not to lose all its brand value. Whereas "existential risk" is far enough removed that it is untainted by these events. "Longtermism" is somewhere in-between.

Doing Doing Good Better Better

The general idea: We need more great books!

I've been trying to find people willing and able to write quality books and have had a hard time finding anyone. "Doing Doing Good Better Better" seems one of the highest-EV projects, and EA Funds (during my tenure) received basically no book proposals, as far as I remember. I'd love to help throw a lot of resources after an upcoming book project by someone competent who isn't established in the community yet.

The current canonical EA books—DGB, WWOTF, The Precipice—seem pretty good to me. But only very few people have undertaken serious attempts to write excellent EA books.

It generally seems to me that EA book projects have mainly been attempted by people (primarily men) who seem driven by prestige. I'm not sure if this is good—it means they're going to be especially motivated to do a great job, but it seems uncorrelated with writing skill, so we're probably missing out on a lot of talented writers.

In particular, I think there is still room for more broad, ambitious, canonical EA books, i.e. ones that can be given as a general EA introduction to a broad range of people, rather than a narrow treatment of e.g. niche areas in philosophy. I feel most excited about proposals that have the potential to become a canonical resource for getting talented people interested in rationality and EA.

Perhaps writing a book requires you to put yourself forward in a way that's uncomfortable for most people, leaving only prestige-driven authors actually pursuing book projects? If true, I think this is bad, and I want to encourage people who feel shy about putting themselves out there to attempt it. If you like, you could apply for a grant and partly leave it to the grantmakers to decide if it's a good idea.

A more specific take: Books as culture-building

What's the secret sauce of the EA community? I.e., what are the key ideas, skills, cultural aspects, and other properties of the EA community tha

October 2022

Frontpage Posts

Quick takes

The Forum moderation team has been made aware that Kerry Vaughn published a tweet thread that, among other things, accuses a Forum user of doing things that violate our norms. Most importantly:

The user in question said this information came from searching LinkedIn for people who had listed themselves as having worked at Leverage and related organizations.

This is not "doxing" and it’s unclear to us why Kerry would use this term: for example, there was no attempt to connect anonymous and real names, which seems to be a key part of the definition of “doxing”. In any case, we do not consider this to be a violation of our norms.

At one point Forum moderators got a report that some of the information about these people was inaccurate. We tried to get in touch with the then-anonymous user, and when we were unable to, we redacted the names from the comment. Later, the user noticed the change and replaced the names. One of CEA’s staff asked the user to encode the names to allow those people more privacy, and the user did so.

Kerry says that a former Leverage staff member “requests that people not include her last name or the names of other people at Leverage” and indicates the user broke this request. However, the post in question requests that the author’s last name not be used in reference to that post, rather than in general. The comment in question doesn’t refer to the former staff member’s post at all, and was originally written more than a year before the post. So we do not view this comment as disregarding someone’s request for privacy.

Kerry makes several other accusations, and we similarly do not believe them to be violations of this Forum's norms. We have shared our analysis of these accusations with Leverage; they are, of course, entitled to disagree with us (and publicly state their disagreement), but the moderation team wants to make clear that we take enforcement of our norms seriously.

We would also like to take this opportunity to remind everyone th

EA Funds rejected my grant application for a small exploratory community building grant, and did not leave any actual feedback beyond a generic rejection. On top of that, in the same email they leave a link asking for feedback "How did we do? We would really appreciate your feedback on your experience applying for a grant. It takes just a minute and helps us improve." Lol. I wish they could have taken a minute and given me feedback. It sure would help me improve!

After having done community building for almost a year as a volunteer this feels really frustrating and extremely demotivating.

My heart longs to work more directly to help animals. I'm doing things that are meta-meta-meta-meta removed from actions that feel like they're actually helping anyone, all the while the globe is on moral fire. At times like this, I need to remind myself of Amdahl's Law and the Inventor's Paradox. And to reaffirm that I need to try to have faith in my own judgment of what is likely to produce good consequences, because no one else will be able to know all the relevant details about me, and also because otherwise I can't hope to learn.[1]

Amdahl's Law tells you the obvious thing that for a complex process, the maximum percentage speedup you can achieve by optimising one of its sub-processes is hard-limited by the fraction of time that sub-process takes up. It's loosely analogous to the idea that if you work on a specific direct cause, the maximum impact you can have is limited by the scale of that cause, whereas if you work on a cause that feeds into the direct cause, you have a larger theoretical limit (although that far from guarantees larger impact in practice). I don't know a better term for this, so I'm using "Amdahl's Law".
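As a rough illustration of the bound described above, here is a minimal sketch of the standard form of the law. It is not part of the original take, and the function names `amdahl_speedup` and `amdahl_limit` are made up for this example.

```python
def amdahl_speedup(fraction: float, factor: float) -> float:
    """Overall speedup when a sub-process that takes up `fraction` of the
    total time is made `factor` times faster (names are illustrative)."""
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be between 0 and 1")
    if factor <= 0.0:
        raise ValueError("factor must be positive")
    # The untouched part still costs (1 - fraction); the optimised part
    # shrinks to fraction / factor.
    return 1.0 / ((1.0 - fraction) + fraction / factor)


def amdahl_limit(fraction: float) -> float:
    """Upper bound on the overall speedup as `factor` grows without limit."""
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be between 0 and 1")
    if fraction == 1.0:
        return float("inf")
    return 1.0 / (1.0 - fraction)
```

For example, if a sub-process takes up half of the total time, making it twice as fast gives an overall speedup of about 1.33x, and no amount of optimising that sub-process alone can exceed 2x.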

The Inventor's Paradox is the curious observation that when you're trying to solve a problem, it's often easier to try to solve a more general problem that includes the original as a consequence.[2]

Consider the problem of adding up all the numbers from 1 to 99. You could attack this by going through 99 steps of addition like so: 1+2+3+...+97+98+99.

Or you could take a step back and look for more general problem-solving techniques. Ask yourself, how do you solve all 1-iterative addition problems? You could rearrange it as:

(1+99)+(2+98)+...+(48+52)+(49+51)+50=100×49+50
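The rearrangement above can be checked with a short sketch. The helper names `sum_by_steps` and `sum_by_pairing` are hypothetical and only introduced for illustration; the sketch assumes a run of consecutive integers.

```python
def sum_by_steps(first: int, last: int) -> int:
    """Add the numbers one at a time, as in 1+2+3+...+99."""
    total = 0
    for k in range(first, last + 1):
        total += k
    return total


def sum_by_pairing(first: int, last: int) -> int:
    """Pair the ends (first+last, first+1 + last-1, ...) and add any
    unpaired middle number at the end."""
    count = last - first + 1
    pairs = count // 2                   # how many times we can pair up
    total = (first + last) * pairs       # every pair has the same sum
    if count % 2 == 1:
        total += (first + last) // 2     # the single number left in the middle
    return total


print(sum_by_steps(1, 99))    # 4950
print(sum_by_pairing(1, 99))  # 4950, i.e. 100 * 49 + 50
```

The pairing version does a fixed amount of work however long the sequence is, which is the sense in which solving the more general problem turns out to be easier.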

For a sequence of N numbers, you can add the first number ($n_0 = 1$) to the last number ($n_N = 99$) and multiply the result by how many times you can pair them up like that, $(n_0+n_N)+(n_1+n_{N-1})+(n_2+n_{N-2})+\dots$, plus if there's a single number left (50) you just add that at the end. As you

If you type "#" followed by the title of a post and press enter, it will link that post.

Example:

Examples of Successful Selective Disclosure in the Life Sciences

This is wild

I (with lots of help from my colleague Marie Davidsen Buhl) made a database of resources relevant to nanotechnology strategy research, with articles sorted by relevance for people new to the area. I hope it will be useful for people who want to look into doing research in this area.

September 2022

Frontpage Posts

Quick takes

EA forum content might be declining in quality. Here are some possible mechanisms:

1. Newer EAs have worse takes on average, because the current processes of recruitment and outreach produce a worse distribution than the old ones

2. Newer EAs are too junior to have good takes yet. It's just that the growth rate has increased so there's a higher proportion of them.

3. People who have better thoughts get hired at EA orgs and are too busy to post. There is an anticorrelation between the amount of time people have to post on EA Forum and the quality of their thinking.

4. Controversial content, rather than good content, gets the most engagement.

5. Although we want more object-level discussion, everyone can weigh in on meta/community stuff, whereas they only know about their own cause areas. Therefore community content, especially shallow criticism, gets upvoted more. There could be a similar effect for posts by well-known EA figures.

6. Contests like the criticism contest decrease average quality, because the type of person who would enter a contest to win money on average has worse takes than the type of person who has genuine deep criticism. There were 232 posts for the criticism contest, and 158 for the Cause Exploration Prizes, which combined is more top-level posts than the entire forum in any month except August 2022.

7. EA Forum is turning into a place primarily optimized for people to feel welcome and talk about EA, rather than impact.

8. All of this is exacerbated as the most careful and rational thinkers flee somewhere else, expecting that they won't get good quality engagement on EA Forum

I noticed this a while ago. I don't see large numbers of low-quality low-karma posts as a big problem though (except that it has some reputation cost for people finding the Forum for the first time). What really worries me is the fraction of high-karma posts that are neither original, rigorous, nor useful. I suggested some server-side fixes for this.

PS: #3 has always been true, unless you're claiming that more of their output is private these days.

The Scout Mindset deserved 1/10th of the marketing campaign of WWOTF. Galef is a great figurehead for rational thinking and it would have been worth it to try and make her a public figure.

We now have google-docs-style collaborative editing. Thanks a bunch to Jim Babcock of LessWrong for developing this feature.

Honestly I don't understand the mentality of being skeptical of lots of spending on EA outreach. Didn't we have the fight about overhead ratios, fundraising costs, etc with Charity Navigator many years ago? (and afaict decisively won).

tl;dr:

In the context of interpersonal harm:

1. I think we should be more willing than we currently are to ban or softban people.

2. I think we should not assume that CEA's Community Health team "has everything covered"

3. I think more people should feel empowered to tell CEA CH about their concerns, even (especially?) if other people appear to not pay attention or do not think it's a major concern.

4. I think the community is responsible for helping the CEA CH team with having a stronger mandate to deal with interpersonal harm, including some degree of acceptance of mistakes of overzealous moderation.

(all views my own) I want to publicly register what I've said privately for a while:

For people (usually but not always men) whom we have considerable reason to suspect of being responsible for significant direct harm within the community, we should be significantly more willing than we currently are to take on more actions, and the associated tradeoffs, to limit their ability to cause more harm in the community.

Some of these actions may look pretty informal/unofficial (gossip, explicitly warning newcomers against specific people, keeping an unofficial eye out for some people during parties, etc). However, in the context of a highly distributed community with many people (including newcomers) that's also embedded within a professional network, we should be willing to take more explicit and formal actions as well.

This means I broadly think we should increase our willingness to a) ban potentially harmful people from events, b) reduce grants we make to people in ways that increase harmful people's power, c) warn organizational leaders about hiring people in positions of power/contact with potentially vulnerable people.

I expect taking this seriously to involve taking on nontrivial costs. However, I think this is probably worth it.

I'm not sure why my opinion here is different from others'[1]. However, I will try to share some generators of my opinion, in case it's helpful:

A. We should aim to be a community that's empowered to do the most good. This likely entails appropriately navigating the tradeoff of attempting to reduce both the harms of a) contributors feeling or being unwelcome due to sexual harassment or other harms and b) contributors feeling or being unwelcome due to false accusations or overly zealous response.

B. I think some of this is fundamentally a sensitivity vs specificity tradeoff. If we have a detection system that's too tuned to reduce the risk of false positives (wrong accusations being acted on), we will overlook too many false negatives (people being too slow to be banned/censured, or not at all), and vice versa.

Consider the first section of "Difficult Tradeoffs"

In the world we live in, I've yet to hear of a single instance where, in full context, I strongly suspect CEA CH (or for that matter, other prominent EA organizations) was overzealous in recommending bans due to interpersonal harm. If our institutions are designed to only reduce first-order harm (both from direct interpersonal harm and from accusations), I'd expect to see people err in both directions.

Given the (apparent) lack of false positives, I broadly expect we accept too high a rate of false negatives. More precisely, I do not think CEA CH's current work on interpersonal harm will lead to a conclusion like "We've evaluated all the evidence available for the accusations against X. We currently think there's only a ~45% chance that X has actually committed such harms, but given the magnitude of the potential harm, and our inability to get further clarity with more investigation, we've pre-emptively decided to ban X from all EA Globals pending further evidence."

Instead, I get the impression that substantially more certainty is deemed necessary to take action. This differentially advantages conservatism, and increases the probability and allowance of predatory behavior.

C. I expect an environment with more enforcement is more pleasant than an environment with less enforcement.

I expect an environment where there's a default expectation of enforcement for interpersonal harm is more pleasant for both men and women. Most directly in reducing the first-order harm itself, but secondarily an environment where people are less "on edge" for potential violence is generally more pleasant. As a man, I at least will find it more pleasant to interact with women in a professional context if I'm not worried that they're worried I'll harm them. I expect this to be true for most men, and the loud worries online about men being worried about false accusations to be heavily exaggerated and selection-skewed[2].

Additionally, I note that I expect someone who exhibits traits like reduced empathy, willingness to push past boundaries, sociopathy, etc, to also exhibit similar traits in other domains. So someone who is harmful in (e.g.) sexual matters is likely to also be harmful in friendly and professional matters. For example, in the more prominent cases I'm aware of where people accused of sexual assault were eventually banned, they also appeared to have done other harmful things, like having a systematic history of deliberate deception, being very nasty to men, cheating on rent, harassing people online, etc. So I expect more bans to broadly be better for our community.

D. I expect people who are involved in EA for longer to be systematically biased in both which harms we see, and which things are the relevant warning signals.

The negative framing here is "normalization of deviance". The more neutral framing here is that people (including women) who have been around EA for longer a) may be systematically less likely to be targeted (as they have more institutional power and cachet) and b) are selection-biased to be less likely to be harmed within our community (since the people who have received the most harm are more likely to have bounced off).

E. I broadly trust the judgement of CEA CH in general, and Julia Wise in particular.

EDIT 2023/02: I tentatively withhold my endorsement until this allegation is cleared up.

I think their judgement is broadly reasonable, and they act well within the constraints that they've been given. If I did not trust them (e.g. if I was worried that they'd pursue political vendettas in the guise of harm-reduction), I'd be significantly more worried about giving them more leeway to make mistakes with banning people.[3]

F. Nonetheless, the CEA CH team is just one group of individuals, and does a lot of work that's not just on interpersonal harm. We should expect a) that they only have a limited amount of information to act on, and b) that the rest of EA will need to pick up some of the slack where they've left off.

For a), I think an appropriate action is for people to be significantly more willing to report issues to them, as well as make sure new members know about the existence of the CEA CH team and Julia Wise's work within it. For b), my understanding is that CEA CH sees itself as having what I call a "limited purview": e.g. they only have the authority to ban people from official CEA and maybe CEA-sponsored events, and not e.g. events hosted by local groups. So I think EA community-builders in a group organizing capacity should probably make it one of their priorities to be aware of the potential broken stairs in their community, and be willing to take decisive actions to reduce interpersonal harms.

Remember: EA is not a legal system. Our objective is to do the most good, not to wait to be absolutely certain of harm before taking steps to further limit harm.

One thing my post does not cover is opportunity cost. I mostly framed things as changing the decision-boundary. However, in practice I can see how having more bans is more costly in time and maybe money than the status quo. I don't have good calculations here, but my intuition is strongly in the direction that having a safer and more cohesive community is worth the relevant opportunity costs.

fwiw my guess is that the average person in EA leadership wishes the CEA CH team did more (i.e. thinks it is currently insufficiently punitive), rather than wishing that it did less (i.e. thinks it is currently overzealous). I expect there's significant variance in this opinion however.

This is a potential crux.

I can imagine this being a crux for people who oppose greater action. If so, I'd like to a) see this argument explicitly being presented and debated, and b) see people propose alternatives for reducing interpersonal harm that routes around CEA CH.