All posts

Today, 3 June 2025

Frontpage Posts

Quick takes

Any hints / info on what to look for in a mentor / how to find one? (Specifically for community building.)

I'm starting as a national group director in September, and among my focus topics for EAG London are group-focused things like "figuring out pointers / out of the box ideas / well-working ideas we haven't tried yet for our future strategy", but also trying to find a mentor.

These were some thoughts I came up with when thinking about this yesterday:

- I'm not looking for accountability or day to day support. I get that from inside our local group.

- I am looking for someone that can take a description of the higher level situation and see different things than I can. Either due to perspective differences or being more experienced and skilled.

- Also someone who can give me useful input on what skills to focus on building in the medium term.

- Someone whose skills and experience I trust, so that when they say "plan looks good" it gives me confidence, especially when I'm trying to do something that feels to me like a long shot / weird / difficult plan and I specifically need validation that it makes sense.

On a concrete level I'm looking for someone to have ~monthly 1-1 calls with, plus some asynchronous communication. Not about common day-to-day stuff, but about larger decisions.

An updated draft of a model of consciousness made based on information and complexity theory

This paper proposes a formal, information-theoretic model of consciousness in which awareness is defined as the alignment between an observer’s beliefs and the objective description of an object. Consciousness is quantified as the ratio between the complexity of true beliefs and the complexity of the full inherent description of the object. The model introduces three distinct epistemic states: Consciousness (true beliefs), Schizo-Consciousness (false beliefs), and Unconsciousness (absence of belief). Object descriptions are expressed as structured sets of object–quality (O–Q) statements, and belief dynamics are governed by internal belief-updating functions (brain codes) and attentional codes that determine which beliefs are foregrounded at any given time. Crucially, the model treats internal states—such as emotions, memories, and thoughts—as objects with describable properties, allowing it to account for self-awareness, misbelief about oneself, and psychological distortion. This framework enables a unified treatment of external and internal contents of consciousness, supports the simulation of evolving belief structures, and provides a tool for comparative cognition, mental health modeling, and epistemic alignment in artificial agents.
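To make the ratio in the abstract concrete, here is a toy sketch. It is not the paper's implementation: it assumes each object–quality statement counts as one unit of complexity (the paper may measure complexity differently), and the names `classify` and `consciousness_ratio`, as well as the apple example, are hypothetical.

```python
from typing import Dict, Set, Tuple

# ASSUMPTION: a description maps each quality of one object to its true value,
# and every object-quality statement has complexity 1 (a crude proxy).
Description = Dict[str, str]
Beliefs = Dict[str, str]


def classify(description: Description, beliefs: Beliefs) -> Tuple[Set[str], Set[str], Set[str]]:
    """Split the object's qualities into the three epistemic states."""
    true_beliefs = {q for q, v in beliefs.items() if q in description and description[q] == v}
    false_beliefs = {q for q, v in beliefs.items() if q in description and description[q] != v}
    no_belief = set(description) - set(beliefs)
    return true_beliefs, false_beliefs, no_belief


def consciousness_ratio(description: Description, beliefs: Beliefs) -> float:
    """Complexity of true beliefs divided by complexity of the full description."""
    if not description:
        raise ValueError("description must contain at least one statement")
    true_beliefs, _, _ = classify(description, beliefs)
    return len(true_beliefs) / len(description)


# Hypothetical example: an observer's beliefs about an apple.
apple = {"color": "red", "taste": "sweet", "shape": "round", "weight": "150 g"}
observer = {"color": "red", "taste": "sour", "shape": "round"}

conscious, schizo_conscious, unconscious = classify(apple, observer)
print(sorted(conscious))         # qualities believed correctly
print(sorted(schizo_conscious))  # qualities believed incorrectly
print(sorted(unconscious))       # qualities with no belief at all
print(consciousness_ratio(apple, observer))
```

In this toy case two of the four statements are believed correctly, so the ratio is 0.5; the false belief about taste and the missing belief about weight both lower it, but they fall into different states.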

The link to the paper:

https://drive.google.com/file/d/12eZWsXgPIJhd9c7KrsfW9EmUk0gER4mu/view?usp=drivesdk

Monday, 2 June 2025

Frontpage Posts

Personal Blogposts

Quick takes

The EA Forum moderation team is going to experiment a bit with how we categorize posts. Currently there is a low bar for a Forum post being categorized as “Frontpage” after it’s approved. In comparison, LessWrong is much more opinionated about the content they allow, especially from new users. We’re considering moving in that direction, in order to maintain a higher percentage of valuable content on our Frontpage.

To start, we’re going to allow moderators to move posts from new users from “Frontpage” to “Personal blog”[1], at their discretion, but starting conservatively. We’ll keep an eye on this and, depending on how this goes, we may consider taking further steps such as using the “rejected content” feature (we don’t currently have that on the EA Forum).

Feel free to reply here if you have any questions or feedback.

1. If you’d like to make sure you see “Personal blog” posts in your Frontpage, you can customize your feed.

Having a savings target seems important. (Not financial advice.)

I sometimes hear people in/around EA rule out taking jobs due to low salaries (sometimes implicitly, sometimes a little embarrassedly). Of course, it's perfectly understandable not to want to take a significant drop in your consumption. But in theory, people with high salaries could be saving up so they can take high-impact, low-paying jobs in the future; it just seems like, by default, this doesn't happen. I think it's worth thinking about how to set yourself up to be able to do it if you do find yourself in such a situation; you might find it harder than you expect.

(Personal digression: I also notice my own brain paying a lot more attention to my personal finances than I think is justified. Maybe some of this traces back to some kind of trauma response to being unemployed for a very stressful ~6 months after graduating: I just always could be a little more financially secure. A couple of weeks ago, while meditating, it occurred to me that my brain is probably reacting to not knowing how I'm doing relative to my goal, because 1) I didn't actually know what my goal was, and 2) I didn't really have a sense of what I was spending each month. In IFS terms, I think the "social and physical security" part of my brain wasn't trusting that the rest of my brain was competently handling the situation.)

So, I think people in general would benefit from having an explicit target: once I have X in savings, I can feel financially secure. This probably means explicitly tracking your expenses, both now and in a "making some reasonable, not-that-painful cuts" budget, and gaming out the most likely scenarios where you'd need to use a large amount of your savings, beyond the classic 3 or 6 months of expenses in an emergency fund. For people motivated by EA principles, the most likely scenarios might be for impact reasons: maybe you take a public-sector job that pays half your current salary for three years, or maybe you'

Elon Musk recently presented SpaceX's roadmap for establishing a self-sustaining civilisation on Mars (by 2033 lol). Aside from the timeline, I think there may be some important questions to consider with regard to space colonisation and s-risks:

1. In a galactic civilisation of thousands of independent and technologically advanced colonies, what is the probability that one of those colonies will create trillions of suffering digital sentient beings? (probably near 100% if digital sentience is possible… it only takes one)

2. Is it possible to create a governance structure that would prevent any person in a whole galactic civilisation from creating digital sentience capable of suffering? (sounds really hard especially given the huge distances and potential time delays in messaging… no idea)

3. What is the point of no-return where a domino is knocked over that inevitably leads to self-perpetuating human expansion and the creation of galactic civilisation? (somewhere around a self-sustaining civilisation on Mars I think).

If the answer to question 3 is "Mars colony", then it's possible that creating a colony on Mars is a huge s-risk if we don't first answer question 2.
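The "it only takes one" intuition behind question 1 can be sketched numerically. This assumes every colony independently has the same small probability of creating suffering digital minds, which is a strong simplification, and the probabilities below are illustrative placeholders rather than estimates. The function name `prob_at_least_one` is hypothetical.

```python
def prob_at_least_one(p_single: float, n_colonies: int) -> float:
    """P(at least one of n independent colonies does it) = 1 - (1 - p)^n."""
    if not 0.0 <= p_single <= 1.0:
        raise ValueError("p_single must be between 0 and 1")
    if n_colonies < 0:
        raise ValueError("n_colonies must be non-negative")
    return 1.0 - (1.0 - p_single) ** n_colonies


# ASSUMPTION: illustrative per-colony probabilities, not estimates.
for p in (0.0001, 0.001, 0.01):
    for n in (1_000, 10_000):
        print(f"p={p}, colonies={n}: {prob_at_least_one(p, n):.4f}")
```

Under these assumptions, even a 0.1% chance per colony gives roughly a 63% chance across 1,000 colonies, and the total climbs towards 100% as either number grows. If colonies are correlated (for example through shared norms or governance), the independence assumption fails and the result could be much lower or higher.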

Would appreciate some thoughts.

Stuart Armstrong and Anders Sandberg’s article on expanding throughout the galaxy rapidly, and Charlie Stross’ blog post about griefers influenced this quick take.

I worry that the pro-AI/slow-AI/stop-AI debate has the salient characteristics of a tribal dividing line that could tear EA apart:

* "I want to accelerate AI" vs "I want to decelerate AI" is a big, clear line in the sand that allows for much clearer signaling of one's tribal identity than something more universally agreeable like "malaria is bad"

* Up to the point where AI either kills us or doesn't, there's basically in principle no way to verify that one side or the other is "right", which means everyone can keep arguing about it forever

* The discourse around it is more hostile/less-trust-presuming than the typical EA discussion, which tends to be collegial (to a fault, some might argue)

You might think it's worth having this civil war to clarify what EA is about. I don't. I would like for us to get on a different track.

This thought was prompted by discussion around one of Matthew_Barnett's quick takes.

AI-generated video with human scripting and voice-over celebrates Vasili Arkhipov’s decision not to start WWIII.

https://www.instagram.com/p/DKNZkTSOsCk/

Sunday, 1 June 2025

Frontpage Posts

Personal Blogposts

Quick takes

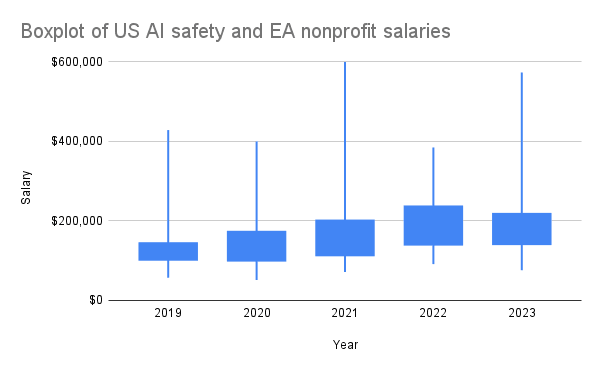

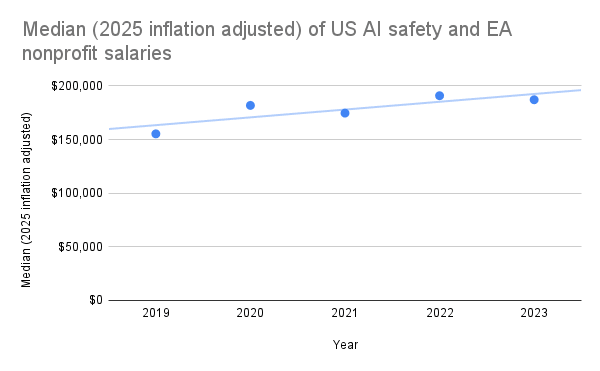

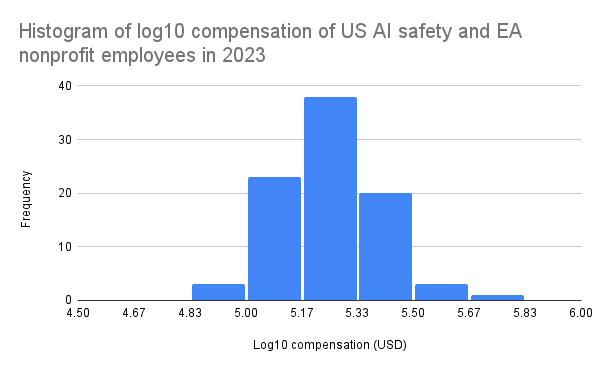

As part of MATS' compensation reevaluation project, I scraped the publicly declared employee compensations from ProPublica's Nonprofit Explorer for many AI safety and EA organizations (data here) in 2019-2023. US nonprofits are required to disclose compensation information for certain highly paid employees and contractors on their annual Form 990 tax return, which becomes publicly available. This includes compensation for officers, directors, trustees, key employees, and highest compensated employees earning over $100k annually. Therefore, my data does not include many individuals earning under $100k, but this doesn't seem to affect the yearly medians much, as the data seems to follow a lognormal distribution, with mode ~$178k in 2023, for example.

I generally found that AI safety and EA organization employees are highly compensated, albeit inconsistently between similar-sized organizations within equivalent roles (e.g., Redwood and FAR AI). I speculate that this is primarily due to differences in organization funding, but inconsistent compensation policies may also play a role.

I'm sharing this data to promote healthy and fair compensation policies across the ecosystem. I believe that MATS salaries are quite fair and reasonably competitive after our recent salary reevaluation, where we also used Payfactors HR market data for comparison. If anyone wants to do a more detailed study of the data, I highly encourage this!

I decided to exclude OpenAI's nonprofit salaries as I didn't think they counted as an "AI safety nonprofit" and their highest paid current employees are definitely employed by the LLC. I decided to include Open Philanthropy's nonprofit employees, despite the fact that their most highly compensated employees are likely those under the Open Philanthropy LLC.

Productive conference meetup format for 5-15 people in 30-60 minutes

I ran an impromptu meetup at a conference this weekend, where 2 of the ~8 attendees told me that they found this an unusually useful/productive format and encouraged me to share it as an EA Forum shortform. So here I am, obliging them:

* Intros… but actually useful

  * Name

  * Brief background or interest in the topic

  * 1 thing you could possibly help others in this group with

  * 1 thing you hope others in this group could help you with

  * NOTE: I will ask you to act on these imminently so you need to pay attention, take notes etc

  * [Facilitator starts and demonstrates by example]

* Round of any quick wins: anything you heard where someone asked for some help and you think you can help quickly, e.g. a resource, idea, offer? Say so now!

* Round of quick requests: Anything where anyone would like to arrange a 1:1 later with someone else here, or request anything else?

* If 15+ minutes remaining:

  * Brainstorm whole-group discussion topics for the remaining time. Quickly gather 1-5 topic ideas in less than 5 minutes.

  * Show of hands voting for each of the proposed topics.

  * Discuss most popular topics for 8-15 minutes each. (It might just be one topic)

* If less than 15 minutes remaining:

  * Quickly pick one topic for group discussion yourself.

  * Or just finish early? People can stay and chat if they like.

Note: the facilitator needs to actually facilitate, including cutting off lengthy intros or any discussions that get started during the ‘quick wins’ and ‘quick requests’ rounds. If you have a group over 10 you might need to divide into subgroups for the discussion part.

I think we had around 3 quick wins, 3 quick requests, and briefly discussed 2 topics in our 45 minute session.

Saturday, 31 May 2025

Frontpage Posts

Personal Blogposts

Friday, 30 May 2025

Frontpage Posts

Personal Blogposts

Thursday, 29 May 2025

Frontpage Posts

Personal Blogposts

Wednesday, 28 May 2025

Frontpage Posts

Personal Blogposts

Tuesday, 27 May 2025

Frontpage Posts

Personal Blogposts

Monday, 26 May 2025

Frontpage Posts

Personal Blogposts

Sunday, 25 May 2025
