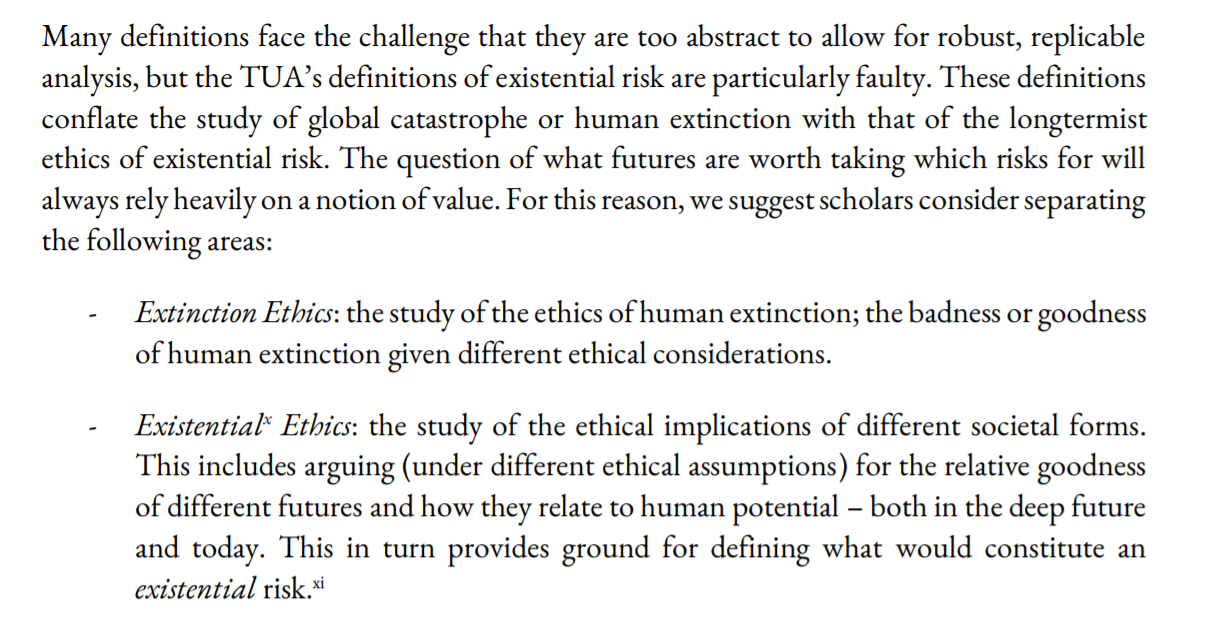

Luke Kemp and I just published a paper which criticises existential risk for lacking a rigorous and safe methodology:

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3995225

It could be a promising sign for epistemic health that the critiques of leading voices come from early career researchers within the community. Unfortunately, the creation of this paper has not signalled epistemic health. It has been the most emotionally draining paper we have ever written.

We lost sleep, time, friends, collaborators, and mentors because we disagreed on: whether this work should be published, whether potential EA funders would decide against funding us and the institutions we're affiliated with, and whether the authors whose work we critique would be upset.

We believe that critique is vital to academic progress. Academics should never have to worry about future career prospects just because they might disagree with funders. We take the prominent authors whose work we discuss here to be adults interested in truth and positive impact. Those who believe that this paper is meant as an attack against those scholars have fundamentally misunderstood what this paper is about and what is at stake. The responsibility of finding the right approach to existential risk is overwhelming. This is not a game. Fucking it up could end really badly.

What you see here is version 28. We have had more than 20 reviewers, around half of whom we sought out as scholars who would be sceptical of our arguments. We believe it is time to accept that many people will disagree with several points we make, regardless of how these are phrased or nuanced. We hope you will voice your disagreement based on the arguments, not the perceived tone of this paper.

We always saw this paper as a reference point and platform to encourage greater diversity, debate, and innovation. However, the burden of proof placed on our claims was unbelievably high in comparison to papers which were considered less “political” or simply closer to orthodox views. Making the case for democracy was heavily contested, despite reams of supporting empirical and theoretical evidence. In contrast, ideas such as differential technological development or the NTI framework have been adopted wholesale despite almost no underpinning peer-reviewed research. I wonder how many of the ideas we critique here would have seen the light of day if the same suspicious scrutiny were applied to more orthodox views and their authors.

We wrote this critique to help progress the field. We do not hate longtermism, utilitarianism or transhumanism. In fact, we personally agree with some facets of each. But our personal views should barely matter. We ask of you what we have assumed to be true for all the authors that we cite in this paper: that the author is not equivalent to the arguments they present, that arguments will change, and that it doesn’t matter who said it, but instead that it was said.

The EA community prides itself on being able to invite and process criticism. However, a warm welcome of criticism was certainly not our experience in writing this paper.

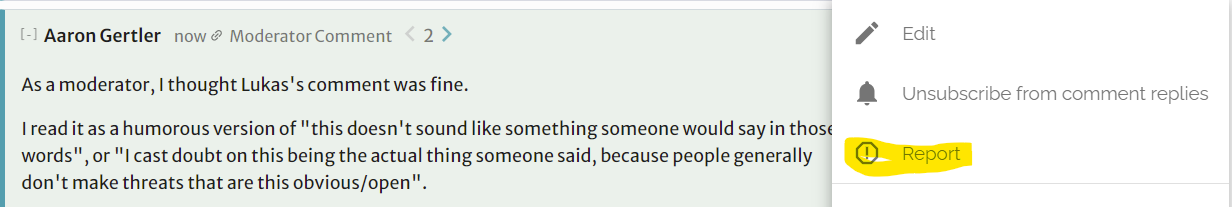

Many EAs we showed this paper to exemplified the ideal. They assessed the paper’s merits on the basis of its arguments rather than group membership, engaged in dialogue, disagreed respectfully, and improved our arguments with care and attention. We thank them for their support and for meeting the challenge of reasoning in the midst of emotional discomfort. By others we were accused of lacking academic rigour and harbouring bad intentions.

We were told by some that our critique is invalid because the community is already very cognitively diverse and in fact welcomes criticism. They also told us that there is no TUA, and if the approach does exist then it certainly isn’t dominant. It was these same people that then tried to prevent this paper from being published. They did so largely out of fear that publishing might offend key funders who are aligned with the TUA.

These individuals, often senior scholars within the field, told us in private that they were concerned that any critique of central figures in EA would result in an inability to secure funding from EA sources, such as Open Philanthropy. We don't know if these concerns are warranted. Nonetheless, any field that operates under such a chilling effect is neither free nor fair. Having a handful of wealthy donors and their advisors dictate the evolution of an entire field is bad epistemics at best and corruption at worst.

The greatest predictor of how negatively a reviewer would react to the paper was their personal identification with EA. Writing a critical piece should not incur negative consequences for one’s career options, personal life, and social connections in a community that is supposedly great at inviting and accepting criticism.

Many EAs have privately thanked us for "standing in the firing line" because they found the paper valuable to read but would not dare to write it. Some tell us they have independently thought of and agreed with our arguments but would like us not to repeat their name in connection with them. This is not a good sign for any community, never mind one with such a focus on epistemics. If you believe EA is epistemically healthy, you must ask yourself why your fellow members are unwilling to express criticism publicly. We too considered publishing this anonymously. Ultimately, we decided to support a vision of a curious community in which authors should not have to fear their name being associated with a piece that disagrees with current orthodoxy. It is a risk worth taking for all of us.

The state of EA is what it is due to structural reasons and norms (see this article). Design choices have made it so, and they can be reversed and amended. EA fails not because the individuals in it are not well intentioned; good intentions only get you so far.

EA needs to diversify funding sources by breaking up big funding bodies and by reducing each org’s reliance on EA funding and tech billionaire funding. It needs to produce academically credible work, set up whistle-blower protection, actively fund critical work, allow for bottom-up control over how funding is distributed, diversify the academic fields represented in EA, make the leaders' forum and funding decisions transparent, stop glorifying individual thought-leaders, and stop classifying everything as info hazards, amongst other structural changes. I now believe EA needs to make such structural adjustments in order to stay on the right side of history.

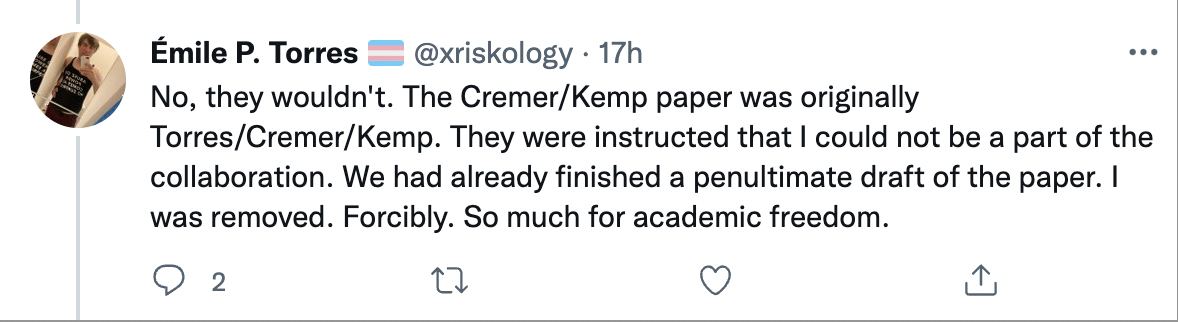

The post in which I speak about EAs being uncomfortable about us publishing the article only talks about interactions with people who did not have any information about the initial drafting with Torres. At that stage, the paper was completely different and a paper between Kemp and me. None of the critiques about it or the conversations about it involved concerns about Torres, co-authoring with Torres or arguments by Torres, except in so far as they might have taken Torres as an example of the closing doors that can follow a critique. The paper was in such a totally different state that it would have been misplaced to call it a collaboration with Torres.

There was a very early draft by Torres and Kemp which I was invited to look at (in December 2020) and collaborate on. While the arguments seemed promising to me, I thought it needed major re-writing of both tone and content. No one instructed me (maybe someone instructed Luke?) that one could not co-author with Torres. I also don't recall that we were forced to take Torres off the collaboration (I’m not sure who knows about the conversations about collaborations we had): we decided to part because we wanted to move the content and tone in a very different direction, because Torres had (to our surprise) unilaterally published major parts of the initial draft as a mini-book already, and because we thought that this collaboration was going to be very difficult. I recall video calls in which we discussed the matter with Torres, decided to take out sections that were initially supplied by Torres and decided to cite Torres’ mini-book wherever we deemed it necessary to refer to it. The degree to which the Democratising Risk paper is influenced by Torres is seen in our in-text citations: we don't hide the fact that we find some of the arguments noteworthy! Torres agreed with those plans.

At the time it seemed to me that Torres and I were trying to achieve fundamentally different goals: I wanted to start a critical discussion within EA, and Torres was ready by that stage to inoculate others against EA and longtermism. It was clear to me that the tone and style of argumentation of the initial drafts had little chance of being taken seriously in EA. My own opinion is that many arguments made by Torres are not rigorous enough to sway me, but that they often contain an initial source of contention that is worth spending time developing further to see whether it has substance. Torres and I agree in so far as we surely both think there are several worthy critiques of EA and longtermism that should be considered, but I think we differ greatly in our credences in the plausibility of different critiques, in how we wanted to treat and present critiques, and in who we wanted to discuss them with.

The emotional contextual embedding of an argument matters greatly to its perception. I thought EAs, like most people, were not protected from assessing arguments emotionally. While I don't follow EA dramas closely (someone also kindly alerted me to this one unfolding), by early 2021 I had gotten the memo that Torres had become an emotional signal for EAs to discount much of what the name was attached to. At the time I thought it would not do the arguments justice to let them be discounted because of an associated name that many in EA seem to have an emotional reaction against, and the question of reception did become one factor in why we thought it best not to pursue the co-authorship with Torres. One can of course manage perception of a paper via co-authorship, and we considered collaborating with respected EAs to give it more credibility. But we decided against name-dropping the people who invested in the piece via long conversations and commentary in order to boost it, just as we decided not to advertise that there are obvious overlaps with some of Torres’ critiques. There is nothing to hide in my view: one can read Torres' work and Democratising Risk (and in fact many other people’s critiques) and see similarities. Shouldn't this strengthen one’s belief that there’s something in that ballpark of arguments that many people feel we should take seriously?

It really is an entirely different paper: what you saw is version 26 or something, and I think about 30 people have commented on it. I'm not sure it's meaningful to speak about V1 and V20 as being the same paper. And what you see is all there is: all the citations of Torres do indeed point to writing by Torres, but they are easily found and you'll see that it is not a disproportionate influence. Apart from that, we did indeed hope to avoid the exact scenario we find ourselves in now! The paper is at risk of being evaluated in light of any connection to Torres rather than on its own terms, and my trustworthiness in reporting on EAs' treatment of critiques is being questioned because I cared about the presentation and reception of the arguments in this paper? A huge amount of work went into adjusting the tone of the paper to EAs (irrespective of Torres, this was a point of contention between Luke and me too) to ensure the arguments would get a fair hearing, and we had to balance this against non-EA outsiders who thought we were not forceful enough.

I think we succeeded in this balance, since both sides still tell us we didn't do quite enough (the tone still seems harsh to EAs and too timid to outsiders), but both EAs and outsiders do engage with the paper and the arguments, and I do think it is true that there is a greater awareness about (self-)censorship risk and critiques being valuable. Since we published, EAs have so far been kind towards me. This is great! I do hope it'll stay this way. Contrary to popular belief, it's not sexy to be seen as the critic. It doesn't feel great to be told a paper will damage an institution, to have others insinuate that I plug my own papers under pseudonyms in forum comments or that I had malicious intentions in being open about the experience, and it’s annoying to be placed into boxes with other authors who you might strongly disagree with. While I understand that those who don't know me must take any piece of evidence they can get to evaluate the trustworthiness of my claims, I find it a little concerning that anyone should be willing to infer and evaluate character from minor interactions. Shouldn’t we rather say: given that we can’t fully verify her experience, can we think about why such an experience would be bad for the project of EA and what safeguards we have in place such that those experiences don't happen? My hope was that I could serve as a positive example to others who feel the need to voice whatever opinion (“see it’s not so bad!”), so I thank anyone on here who is trying to ease the exhaustion that inevitably comes with navigating criticism in a community. The experience so far has made me think that EAs care very much that all arguments (including those they disagree with) are heard.
Even if you don’t think I'm trustworthy and earnest in my concerns, do please continue to keep the benefit of the doubt in mind towards your perceived critics. I think we all agree they are valuable to have among us. And if you care about EA, do keep the process of assessing trustworthiness amicable, if not for me then for future critics who do a better job than I.