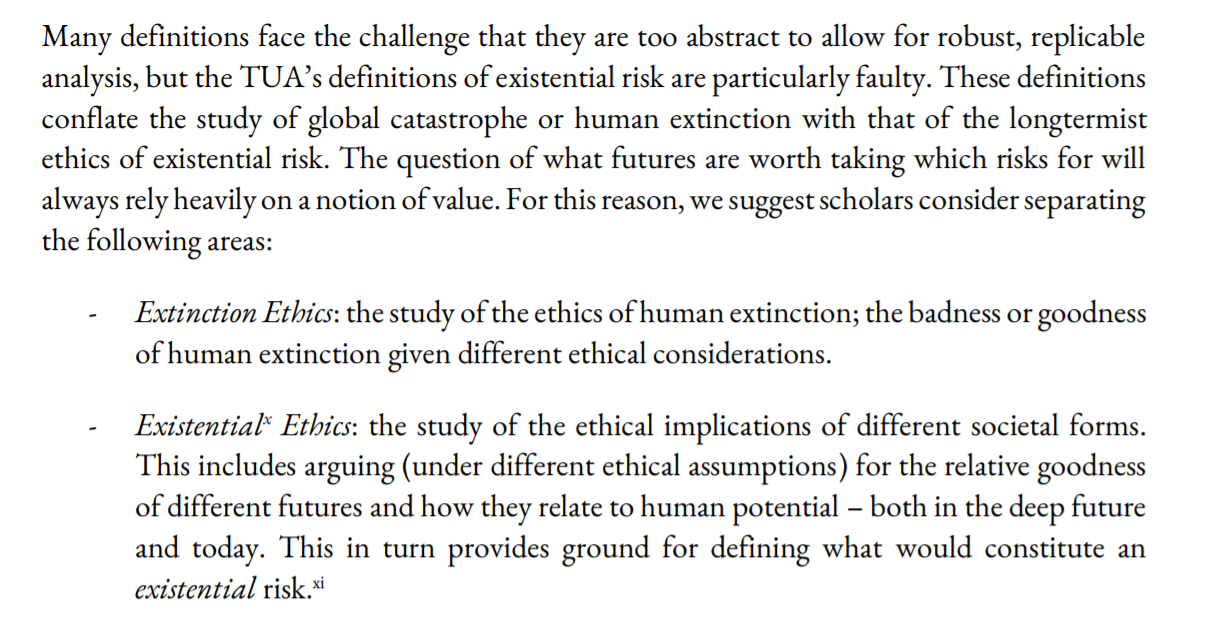

Luke Kemp and I just published a paper which criticises the study of existential risk for lacking a rigorous and safe methodology:

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3995225

It could be a promising sign for epistemic health that the critiques of leading voices come from early career researchers within the community. Unfortunately, the creation of this paper has not signalled epistemic health. It has been the most emotionally draining paper we have ever written.

We lost sleep, time, friends, collaborators, and mentors because we disagreed on: whether this work should be published, whether potential EA funders would decide against funding us and the institutions we're affiliated with, and whether the authors whose work we critique would be upset.

We believe that critique is vital to academic progress. Academics should never have to worry about future career prospects just because they might disagree with funders. We take the prominent authors whose work we discuss here to be adults interested in truth and positive impact. Those who believe that this paper is meant as an attack against those scholars have fundamentally misunderstood what this paper is about and what is at stake. The responsibility of finding the right approach to existential risk is overwhelming. This is not a game. Fucking it up could end really badly.

What you see here is version 28. We have had approximately 20+ reviewers, around half of whom we sought out as scholars who would be sceptical of our arguments. We believe it is time to accept that many people will disagree with several points we make, regardless of how these are phrased or nuanced. We hope you will voice your disagreement based on the arguments, not the perceived tone of this paper.

We always saw this paper as a reference point and platform to encourage greater diversity, debate, and innovation. However, the burden of proof placed on our claims was unbelievably high in comparison to papers which were considered less “political” or simply closer to orthodox views. Making the case for democracy was heavily contested, despite reams of supporting empirical and theoretical evidence. In contrast, ideas such as differential technological development or the NTI framework have been adopted wholesale despite almost no underpinning peer-reviewed research. I wonder how many of the ideas we critique here would have seen the light of day if the same suspicious scrutiny had been applied to more orthodox views and their authors.

We wrote this critique to help progress the field. We do not hate longtermism, utilitarianism or transhumanism. In fact, we personally agree with some facets of each. But our personal views should barely matter. We ask of you what we have assumed to be true for all the authors that we cite in this paper: that the author is not equivalent to the arguments they present, that arguments will change, and that it doesn’t matter who said it, but instead that it was said.

The EA community prides itself on being able to invite and process criticism. However, a warm welcome of criticism was certainly not our experience in writing this paper.

Many EAs we showed this paper to exemplified the ideal. They assessed the paper’s merits on the basis of its arguments rather than group membership, engaged in dialogue, disagreed respectfully, and improved our arguments with care and attention. We thank them for their support and for meeting the challenge of reasoning in the midst of emotional discomfort. By others we were accused of lacking academic rigour and harbouring bad intentions.

We were told by some that our critique is invalid because the community is already very cognitively diverse and in fact welcomes criticism. They also told us that there is no TUA, and if the approach does exist then it certainly isn’t dominant. It was these same people that then tried to prevent this paper from being published. They did so largely out of fear that publishing might offend key funders who are aligned with the TUA.

These individuals, often senior scholars within the field, told us in private that they were concerned that any critique of central figures in EA would result in an inability to secure funding from EA sources, such as Open Philanthropy. We don't know if these concerns are warranted. Nonetheless, any field that operates under such a chilling effect is neither free nor fair. Having a handful of wealthy donors and their advisors dictate the evolution of an entire field is bad epistemics at best and corruption at worst.

The greatest predictor of how negatively a reviewer would react to the paper was their personal identification with EA. Writing a critical piece should not incur negative consequences for one’s career options, personal life, and social connections in a community that is supposedly great at inviting and accepting criticism.

Many EAs have privately thanked us for "standing in the firing line" because they found the paper valuable to read but would not dare to write it. Some tell us they have independently thought of and agreed with our arguments but would like us not to repeat their name in connection with them. This is not a good sign for any community, never mind one with such a focus on epistemics. If you believe EA is epistemically healthy, you must ask yourself why your fellow members are unwilling to express criticism publicly. We too considered publishing this anonymously. Ultimately, we decided to support a vision of a curious community in which authors should not have to fear their name being associated with a piece that disagrees with current orthodoxy. It is a risk worth taking for all of us.

The state of EA is what it is due to structural reasons and norms (see this article). Design choices have made it so, and they can be reversed and amended. EA fails not because the individuals in it are not well intentioned; good intentions only get you so far.

EA needs to diversify funding sources by breaking up big funding bodies and by reducing each org's reliance on EA funding and tech billionaire funding. It also needs to produce academically credible work, set up whistle-blower protection, actively fund critical work, allow for bottom-up control over how funding is distributed, diversify the academic fields represented in EA, make the leaders' forum and funding decisions transparent, stop glorifying individual thought-leaders, and stop classifying everything as info hazards, amongst other structural changes. I now believe EA needs to make such structural adjustments in order to stay on the right side of history.

Note: I discuss Open Phil to some degree in this comment. I also start work there on January 3rd. These are my personal views, and do not represent my employer.

Epistemic status: Written late at night, in a rush, I'll probably regret some of this in the morning but (a) if I don't publish now, it won't happen, and (b) I did promise extra spice after I retired.

It seems valuable to separate "support for the action of writing the paper" from "support for the arguments in the paper". My read is that the authors had a lot of the former, but less of the latter.

From the original post:

> We were told by some that our critique is invalid because the community is already very cognitively diverse and in fact welcomes criticism.

While "invalid" seems like too strong a word for a critic to use (and I'd be disappointed in any critic who did use it), this sounds like people were asked to review/critique the paper and then offered reviews and critiques of the paper.

Still, to the degree that there was any opposition to the action of writing the paper, that's a problem. To address something more concerning:

> It was these same people that then tried to prevent this paper from being published.

I'm not sure what "prevent this paper from being published" means, but in the absence of other points, I assume it refers to the next point of discussion (the concern around access to funding).

I'm glad the authors point out that the concerns may not be warranted. But I've seen many people (not necessarily the authors) make arguments like "these concerns could be real, therefore they are real". There's a pervasive belief that Open Philanthropy must have a specific agenda they try to fund where X-risk is concerned, and that entire orgs might be blacklisted because individual authors within those orgs criticize that agenda.

The Future of Humanity Institute (one author's org) has dozens of researchers and has received a consistent flow of new grants from Open Phil. Based on everything I've ever seen Open Phil publish, and my knowledge of FHI's place in the X-risk world, it seems inconceivable that they'd have funding cut because of a single paper that presents a particular point of view.

The same point applies beyond FHI, to other Open Phil grants. They've funded dozens of organizations in the AI field, with (I assume) hundreds of total scholars/thinkers in their employ; could it really be the case that at the time those grants were made, none of the people so funded had written things that ran counter to Open Phil's agenda (for example, calls for greater academic diversity within X-risk)?

Meanwhile, CSER (the other author's org) doesn't appear in Open Phil's grants database at all, and I can't find anything that looks like funding to CSER online at any point after 2015. If you assume this is related to ideological differences between Open Phil and CSER (I have no idea), this particular paper seems like it wouldn't change much. Open Phil can't cut funding it doesn't provide.

That is to say, if senior scholars expressed these concerns, I think they were unwarranted.

*****

Of course, I'm not a senior scholar myself. But I am someone who worked at CEA for three years, attended two Leaders Forums, and heard many internal/"backroom" conversations between senior leaders and/or big funders.

I'm also someone who doesn't rely on the EA world for funding (I have marketable skills and ample savings), is willing to criticize popular people even when it costs time and energy, and cares a lot about getting incentives and funding dynamics right. I created several of the Forum's criticism tags and helped to populate them. I put Zvi's recent critical post in the EA Forum Digest.

I think there are things we don't do well. I've seen important people present weak counterarguments to good criticism without giving the questions as much thought as seemed warranted. I've seen interesting opportunities get lost because people were (in my view) too worried about the criticism that might follow. I've seen the kinds of things Ozzie Gooen talks about here (humans making human mistakes in prioritization, communication, etc.). I think that Ben Hoffman and Zvi have made a number of good points about problems with centralized funding and bad incentives.

But despite all that, I just can't wrap my head around the idea that the major EA figures I've known would see a solid, well-thought-through critique and decide, as a result, to stop funding the people or organizations involved. It seems counter to who they are as people, and counter to the vast effort they expend on reading criticism, asking for criticism, re-evaluating their own work and each other's work with a critical eye, etc.

I do think that I'm more trusting of people than the average person. It's possible that things are happening in backrooms that would appall me, and I just haven't seen them. But whenever one of these conversations comes up, it always seems to end in vague accusations without names attached or supporting documentation, even in cases where someone straight-up left the community. If things were anywhere near as bad as they've been represented, I would expect at least one smoking gun, beyond complaints about biased syllabi or "A was concerned that B would be mad".

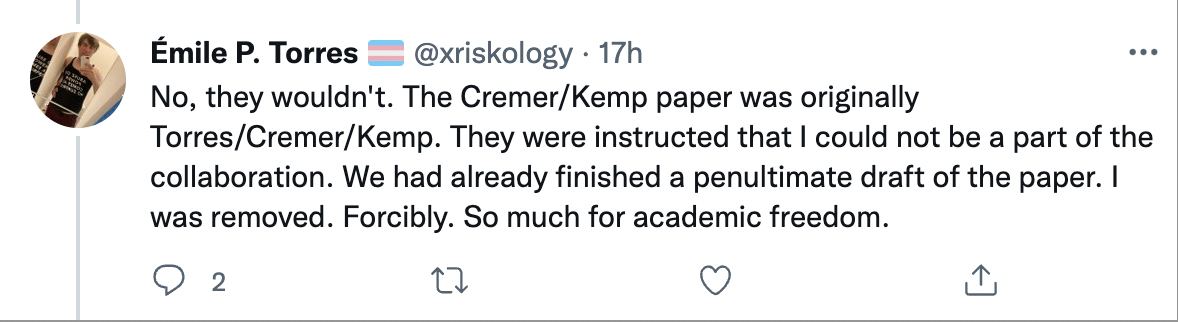

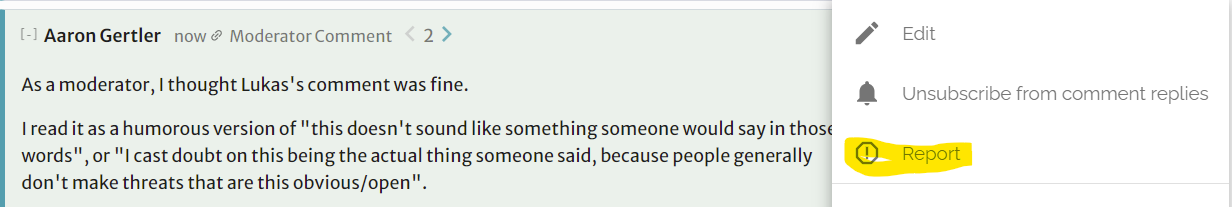

For example: Phil Torres claims to have spent months gathering reports of censorship from people all over EA, but the resulting article was remarkably insubstantial. The single actual incident he mentions in the "canceled" section is a Facebook post being deleted by an unknown moderator in 2013. I know more detail about this case than Phil shares, and he left out some critical points:

If this was Phil's best example, where's the rest?

I'd be sad to see a smoking gun because of what it would mean for my relationship with a community I value. But I've spent a lot of time trying to find one anyway, because if my work is built on sand I want to know sooner rather than later. I've yet to find what I seek.

*****

There was one line that really concerned me:

> By others we were accused of lacking academic rigour and harbouring bad intentions.

"Lacking in rigor" sounds like a critique of the type the authors solicited (albeit one that I can imagine being presented unhelpfully).

"Harboring bad intentions" is a serious accusation to throw around, and one I'm actively angry to hear reviewers using in a case like this, where people are trying to present (somewhat) reasonable criticism and doing so with no clear incentive (rather than e.g. writing critical articles in outside publications to build a portfolio, as others have).

I'd rather have meta-discussion of the paper's support be centered on this point, rather than the "hypothetical loss of funding" point, at least until we have evidence that the concerns of the senior scholars are based on actual decisions or conversations.