Bill Gates: "My new deadline: 20 years to give away virtually all my wealth" - https://www.gatesnotes.com/home/home-page-topic/reader/n20-years-to-give-away-virtually-all-my-wealth

All of the headlines are trying to run with the narrative that this is due to Trump pressure, but I can’t see a clear mechanism for this. Does anyone have a good read on why he’s changed his mind? (Recent events feel like: Buffett moving his money to his kids’ foundations & retiring from BH, divorce)

I have only speculation, but it's plausible to me that developments in AI could be playing a role. The original decision in 2000 was to sunset "several decades after [Bill and Melinda Gates'] deaths." Likely the idea was that handpicked successor leadership could carry out the founders' vision and that the world would be similar enough to the world at the time of their death or disability for that plan to make sense for several decades after the founders' deaths. To the extent that Gates thought that the world is going to change more rapidly than he believed in 2000, this plan may look less attractive than it once did.

Why not what seems to be the obvious mechanism: the cuts to USAID making this more urgent and imperative. Or am I missing something?

"A few years ago, I began to rethink that approach. More recently, with the input from our board, I now believe we can achieve the foundation’s goals on a shorter timeline, especially if we double down on key investments and provide more certainty to our partners."

It seems it was more of a question of whether they could grant larger amounts effectively, which he was considering for multiple years (I don't know how much of that may be possible due to aid cuts).

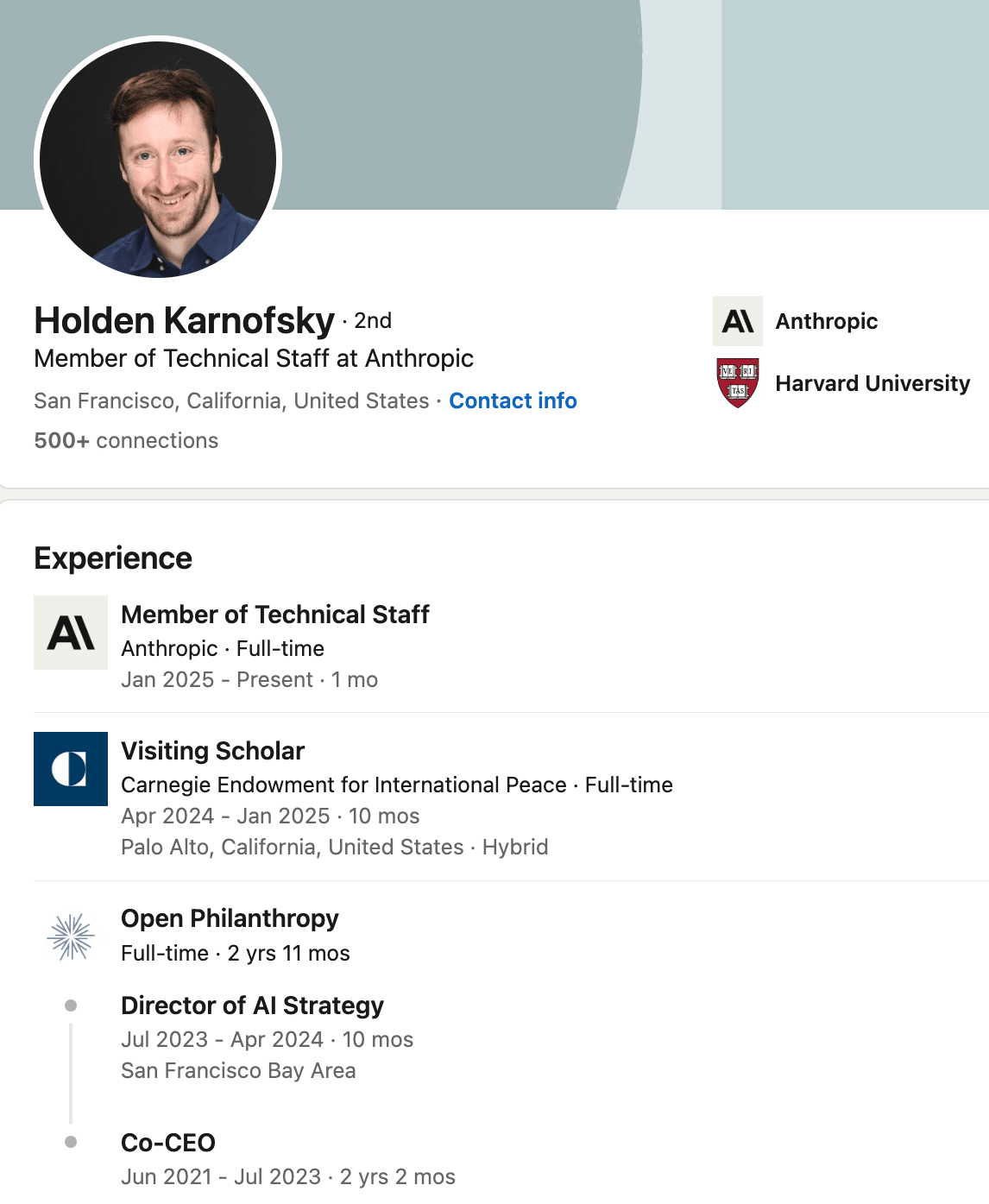

Holden Karnofsky has joined Anthropic (LinkedIn profile). I haven't been able to find more information.

"Member of Technical Staff" - That's surprising. I assumed he was more interested in the policy angle.

Member of Technical Staff is often a catchall term for "we don't want to pigeonhole you into a specific role, you do useful stuff in whatever way seems to add the most value." I wouldn't read much into it.

From here it seems that indeed «he focuses on the design of the company's Responsible Scaling Policy and other aspects of preparing for the possibility of highly advanced AI systems in the future.»

It seems that lots of people with all sorts of roles at AI companies have the formal role "member of technical staff"

I'd love to see Joey Savoie on Dwarkesh’s podcast. Can someone make it happen?

Joey with Spencer Greenberg: https://podcast.clearerthinking.org/episode/154/joey-savoie-should-you-become-a-charity-entrepreneur/

The meat-eater problem is under-discussed.

I've spent more than 500 hours consuming EA content and I had never encountered the meat-eater problem until today.

https://forum.effectivealtruism.org/topics/meat-eater-problem

(I had sometimes thought about the problem, but I didn't even know it had a name)

I think the reason is that it doesn't really have a target audience. Animal advocacy interventions are hundreds of times more cost-effective than global poverty interventions. It only makes sense to work on global poverty if you think that animal suffering doesn't matter nearly as much as human suffering. But if you think that, then you won't be convinced to stop working on global poverty because of its effects on animals. Maybe it's relevant for some risk-averse people.

I wonder if Open Philanthropy thinks about it because they fund both animal advocacy and global poverty/health. Animal advocacy funding probably easily offsets its negative global poverty effects on animals. It takes thousands of dollars to save a human life with global health interventions and that human might consume thousands of animals in her lifetime. Chicken welfare reforms can halve the suffering of thousands of animals for tens of dollars. However, I don't like this sort of reasoning that much because we may not always have interventions as cost-effective as chicken welfare reforms.

Yeah, perhaps if you care about animal welfare, the main problem with giving money to poverty causes is that you didn't give it to animal welfare instead, and the increased consumption of meat is a relative side issue.

One potential audience is people open to moral trade. Say Pat doesn't care much about animals and is on the fence between global poverty interventions with different animal impacts, and Alex cares a lot about animals and normally donates to animal welfare efforts. Alex could agree with Pat to donate some amount to the better-for-animals global poverty charity if Pat will agree to send all their donations there.

Except if you do the math on it, I think you'll find that it's really hard to come out with a set of charities, values, and impacts that make this work. Pat would have to be so close to indifferent between the two options.

(And if you figure that out, there's also all the normal reasons why moral trade is challenging in practice.)

Also, you can argue against the poor meat eater problem by pointing out that it's very unclear whether increased animal production is good or bad for animals. In short, the argument would be that there are way more wild animals than farmed animals, and animal product consumption might substantially decrease wild animal populations. Decreasing wild animal populations could be good because wild animals suffer a lot, mostly due to natural causes. See https://forum.effectivealtruism.org/topics/logic-of-the-larder I think this issue is also very under-discussed.

I've been thinking about the meat eater problem a lot lately, and while I think it's worth discussing, I've realized that poverty reduction isn't to blame for farmed animal suffering.

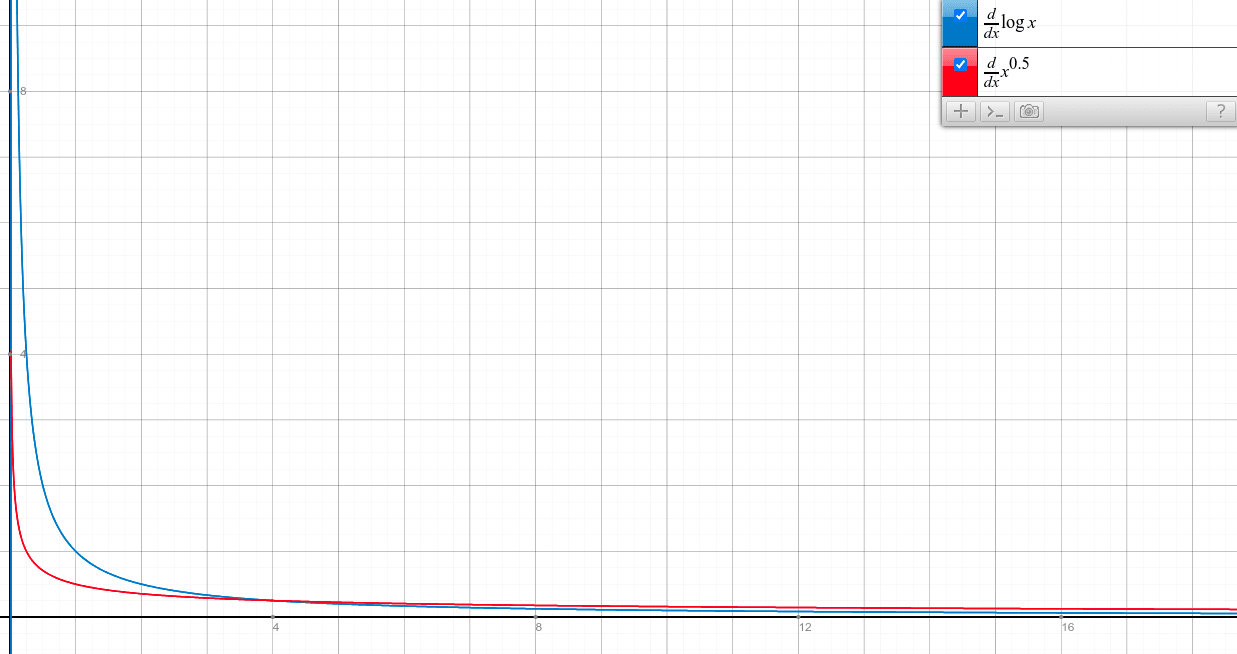

(Content note: dense math incoming)

Assume that humans' utility as a function of income is $U(c) = \ln c$ (i.e. isoelastic utility with $\eta = 1$), and the demand for meat is $Q(c) = A c^{\varepsilon}$, where $\varepsilon$ is the income elasticity of demand. Per Engel's law, $\varepsilon$ is typically between 0 and 1. As long as $\varepsilon < \eta$, $U'(c) > Q'(c)$ at low incomes and $U'(c) < Q'(c)$ at high incomes.
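To spell out where the crossover comes from, here is a short derivation for the log-utility case with an elasticity between 0 and 1. It assumes the two derivatives are compared in a common unit (i.e. one unit of meat demand is weighted the same as one unit of utility), which is a simplification on my part and not something the model above pins down.

$$
\begin{aligned}
U'(c) &= \frac{1}{c}, \qquad Q'(c) = A\,\varepsilon\,c^{\varepsilon - 1}, \\
\frac{U'(c)}{Q'(c)} &= \frac{1}{A\,\varepsilon\,c^{\varepsilon}}, \\
U'(c^{*}) = Q'(c^{*}) \;&\Longleftrightarrow\; c^{*} = (A\,\varepsilon)^{-1/\varepsilon}.
\end{aligned}
$$

Since $c^{\varepsilon}$ is increasing in $c$ whenever $\varepsilon > 0$, the ratio $U'(c)/Q'(c)$ falls monotonically with income: it is above 1 for $c < c^{*}$ and below 1 for $c > c^{*}$. Changing the weight placed on meat demand shifts $c^{*}$ but not the direction of the trend.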

For simplicity, I am assuming that the animal welfare impact of meat production is negative and proportional to −Q(c). (As saulius points out, it's unclear whether meat production is net positive or net negative for animals as a whole. Also, animal welfare regulations and alternative protein technologies are more common in high-income regions like the EU and US, so this assumption may not apply at the high end.) If this is true, then increasing a person or country's income is most valuable when that person/country is in extreme poverty, and least valuable at the high end of the income spectrum.
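For anyone who wants to play with the numbers, here is a minimal sketch of the model above, with U(c) = ln c and Q(c) = A·c^ε. The values of `A` and `EPS` are hypothetical, picked only to make the arithmetic clean; they are not estimates of anything. The function names are mine as well.

```python
import math


def marginal_utility(c):
    """U'(c) for log utility U(c) = ln c."""
    return 1.0 / c


def marginal_meat_demand(c, A, eps):
    """Q'(c) for meat demand Q(c) = A * c**eps."""
    return A * eps * c ** (eps - 1.0)


def crossover_income(A, eps):
    """Income at which U'(c) equals Q'(c), i.e. where A * eps * c**eps == 1."""
    return (1.0 / (A * eps)) ** (1.0 / eps)


# Hypothetical parameters, for illustration only.
A, EPS = 0.01, 0.5
c_star = crossover_income(A, EPS)

# Compare the welfare gain per unit of extra meat demand at three income levels.
for c in (c_star / 100, c_star, c_star * 100):
    ratio = marginal_utility(c) / marginal_meat_demand(c, A, EPS)
    print(f"income = {c:>12.1f}   U'/Q' = {ratio:.3f}")
```

The ratio U'/Q' shrinks as income rises, which is the sense in which an extra dollar does the most good relative to its meat-demand effect at the bottom of the income distribution. A different weight on animal welfare would move the crossover income but not the direction of the trend.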

The upshot: the framing of the meat eater problem as being about poverty obscures the fact that the worst offenders of factory farming are rich countries like the United States, not poor ones, and that increasing the income of a rich person is worse for animal welfare than increasing that of a poor one (as long as both of them are non-vegan). I feel like it's hypocritical for animal advocates and EAs from rich countries to blame poor countries for the suffering caused by factory farming.

"I feel like it's hypocritical for animal advocates and EAs from rich countries to blame poor countries for the suffering caused by factory farming."

I don't think this is what the meat-eater problem does. You could imagine a world in which the West is responsible for inventing the entire machinery of factory farming, or even running all the factory farms, and still believe that lifting additional people out of poverty would help the Western factory farmers sell more produce. It's not about blame, just about consequences.

I realise this isn't your main point, and I haven't processed your main argument yet. It would make a lot of sense to me if transferring money from a first-world meat eater to a third-world meat eater resulted in less meat being eaten, but I'd imagine that the people most concerned with this issue are thinking about their own money, and already don't consume meat themselves?

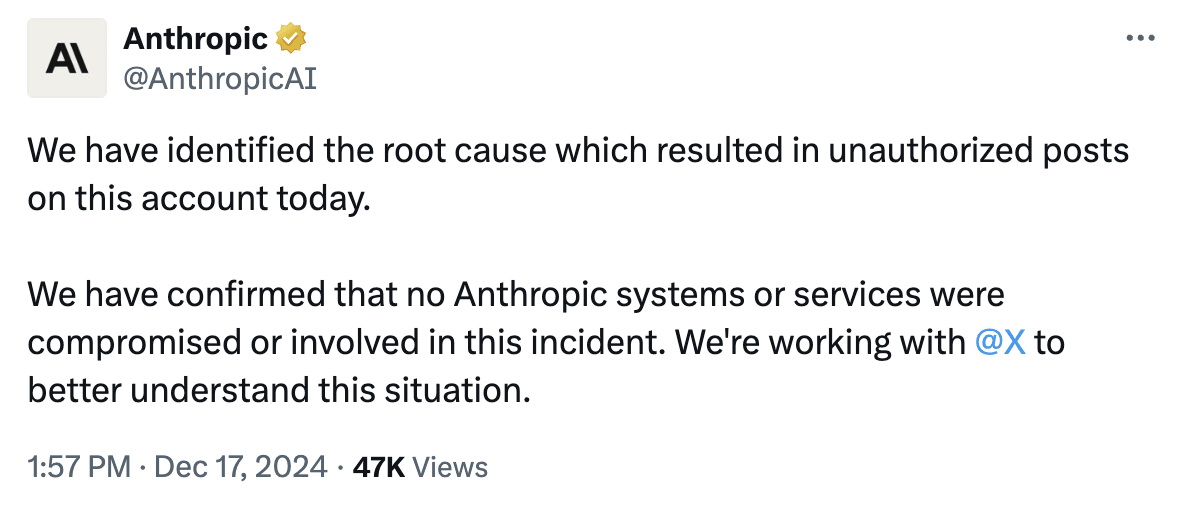

Anthropic's Twitter account was hacked. It's "just" a social media account, but it raises some concerns.

Update: the post has just been deleted. They keep the updates on their status page: https://status.anthropic.com/

Update from Anthropic: https://twitter.com/AnthropicAI/status/1869139895400399183

John Schulman (OpenAI co-founder) has left OpenAI to work on AI alignment at Anthropic.

Maybe a silly question, but does "one shot" for safe AGI mean they aren't going to release models along the way and will only try to reach the superintelligence bar? Would have thought investors wouldn't have been into that...

Or are they basically just like other AI companies and will release commercial products along the way but with a compelling pitch?

I highly recommend the book "How to Launch A High-Impact Nonprofit" to everyone.

I've been EtG for many years and I thought this book wasn't relevant to me, but I'm learning a lot and I'm really enjoying it.

After years of donating to established organizations (top GiveWell charities), I want to start directing a portion of my donations to new/small charities (e.g. Presenting nine new charities). I think this book is helping me better understand which new charities might have more potential.

I also really liked "Part II. Making good decisions", which covers many tools that can be useful for personal and professional decision-making (rationality, the scientific method, EA, Weighted Factor Modelling, etc.).

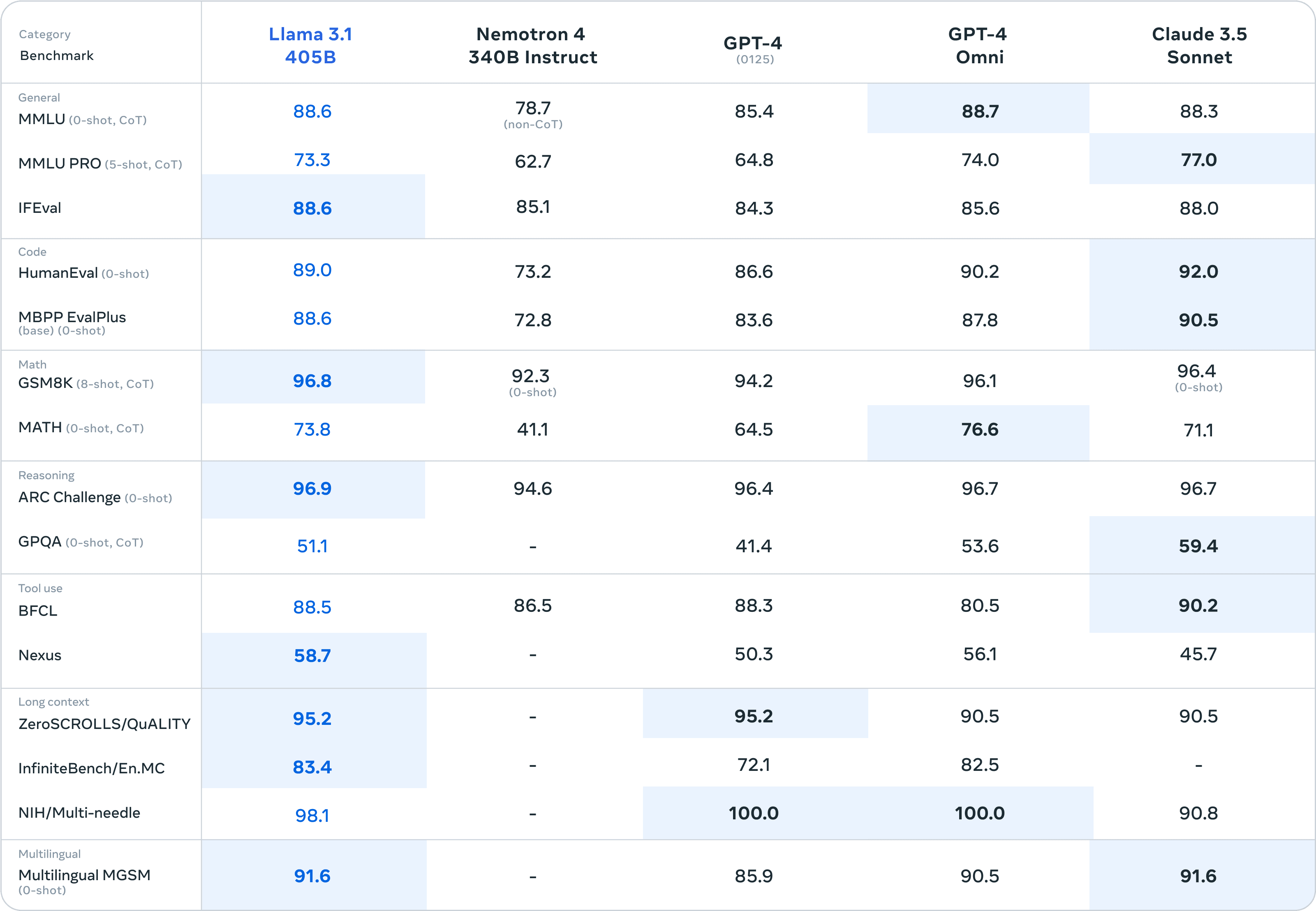

Meta has just released Llama 3.1 405B. It's open-source and in many benchmarks it beats GPT-4o and Claude 3.5 Sonnet:

Zuck's letter "Open Source AI Is the Path Forward".

It's an unfortunate naming clash, there are different ARC Challenges:

ARC-AGI (Chollet et al) - https://github.com/fchollet/ARC-AGI

ARC (AI2 Reasoning Challenge) - https://allenai.org/data/arc

These benchmarks are reporting the second of the two.

LLMs (at least without scaffolding) still do badly on ARC-AGI, and I'd wager Llama 405B still doesn't do well on it either. It's telling that all the big labs release the 95%+ number they get on AI2-ARC, and not whatever default result they get with ARC-AGI...

(Or in general, reporting benchmarks where they can go OMG SOTA!!!! and not helpfully advance the general understanding of what models can do and how far they generalise. Basically, traditional benchmark cards should be seen as the AI equivalent of "IN MICE")

Wait, all the LLMs get 90+ on ARC? I thought LLMs were supposed to do badly on ARC.