All posts

Today, 25 July 2025

Quick takes

Act utilitarians choose actions estimated to increase total happiness. Rule utilitarians follow rules estimated to increase total happiness (e.g. not lying). But you can have the best of both: act utilitarianism where rules are instead treated as moral priors. For example, you could hold a strong prior that killing someone is bad, one that can nonetheless be overridden in extreme circumstances (e.g. if killing the person would end WWII).

These priors make act utilitarianism more robust against bad assessments. They are grounded in Bayesianism: moral priors are updated the same way as non-moral priors. They also reduce cognitive effort: most of the time you just follow your priors, unless the stakes and uncertainty warrant more complex consequence estimates. You can have a small prior toward inaction, so that not every random action is worth considering. You can also blend in some virtue ethics by having a prior that virtuous acts often lead to greater total happiness in the long run.

What I described is a more Bayesian version of R. M. Hare's "Two-level utilitarianism", which involves an "intuitive" and a "critical" level of moral thinking.
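To make the "rules as priors" idea concrete, here is a minimal Python sketch under loudly simplifying assumptions: a moral prior is modeled as a Gaussian belief about an action's net effect on total happiness, a consequence estimate is a noisy Gaussian observation, and the two are combined with a standard normal-normal (conjugate) update. The names `Belief`, `update`, `should_act`, the `INACTION_MARGIN` threshold standing in for the small prior toward inaction, and all the numbers are hypothetical illustrations, not a worked-out theory.

```python
from dataclasses import dataclass


@dataclass
class Belief:
    """Gaussian belief about an action's net happiness impact (hypothetical units)."""
    mean: float
    var: float


def update(prior: Belief, estimate: float, estimate_var: float) -> Belief:
    """Normal-normal conjugate update: combine a moral prior with a noisy
    consequence estimate, weighting each by its precision (1 / variance)."""
    prior_prec = 1.0 / prior.var
    est_prec = 1.0 / estimate_var
    post_var = 1.0 / (prior_prec + est_prec)
    post_mean = post_var * (prior_prec * prior.mean + est_prec * estimate)
    return Belief(post_mean, post_var)


# Hypothetical small prior toward inaction: an act must beat this margin.
INACTION_MARGIN = 1.0


def should_act(prior: Belief, estimate: float, estimate_var: float):
    """Act only if the posterior expected impact clears the inaction margin."""
    posterior = update(prior, estimate, estimate_var)
    return posterior.mean > INACTION_MARGIN, posterior


if __name__ == "__main__":
    # Strong prior (low variance) that killing is very bad.
    against_killing = Belief(mean=-100.0, var=25.0)

    # A vague, high-variance argument that killing helps: the prior dominates.
    acted, _ = should_act(against_killing, estimate=50.0, estimate_var=10_000.0)
    print(acted)  # → False

    # Precise evidence of enormous stakes (e.g. ending a world war): overridden.
    acted, _ = should_act(against_killing, estimate=1_000_000.0, estimate_var=100.0)
    print(acted)  # → True
```

The design point is that a strong prior barely moves in response to a vague estimate, yet sufficiently precise evidence of very large stakes can still override it, which is the behavior described above.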

Thursday, 24 July 2025

Quick takes

From https://arxiv.org/pdf/1712.03079

[...]

I remember reading something similar a while ago, possibly on this forum, but I can't find it at the moment. Does anyone remember any other papers/posts on the topic?

Hey everyone! As a philosophy grad transitioning into AI governance/policy research or AI safety advocacy, I'd love advice: which for-profit roles best build relevant skills while providing financial stability?

Specifically, what kinds of roles (especially outside of obvious research positions) are valuable stepping stones toward AI governance/policy research? I don’t yet have direct research experience, so I’m particularly interested in roles that are more accessible early on but still help me develop transferable skills, especially those that might not be intuitive at first glance.

My secondary interest is in AI safety advocacy. Are there particular entry-level or for-profit roles that could serve as strong preparation for future advocacy or field-building work?

A bit about me:

– I have a strong analytical and critical thinking background from my philosophy BA, including structured and clear writing experience

– I’m deeply engaged with the AI safety space: I’ve completed BlueDot’s AI Governance course, volunteered with AI Safety Türkiye, and regularly read and discuss developments in the field

– I’m curious, organized, and enjoy operations work, in addition to research and strategy

If you've navigated a similar path, have ideas about stepping-stone roles, or just want to chat, I'd be happy to talk over a call! Feel free to schedule a 20-min conversation here.

Thanks in advance for any pointers!

Wednesday, 23 July 2025

Quick takes

AI risk in depth, in the mainstream!

The Rest is Politics, perhaps the most popular British podcast, has just spent 23 minutes on one of the most compelling and straightforward explanations of AI risk I've heard anywhere, let alone in the mainstream media. The first 5 minutes of the discussion are especially good as an explainer, and then there's a more wide-ranging discussion after that.

I recommend sharing it with non-EA friends, especially in England, as this is a respected mainstream podcast that not many people will find weird (minutes 16 to 38). Rory Stewart also discusses (near the end) his personal journey of how he became scared of AI, which is super cool.

I don't love his solution of England and the EU building their own "honest" models, but hey, most of it is great.

Also, a shoutout to any of you in the background who might have played a part in helping Rory Stewart think about this more deeply.

https://www.nytimes.com/2025/07/23/health/pepfar-shutdown.html

PEPFAR may still be killed off after all :(

I wonder if it would be interesting to use PPP-adjusted sale price of high-end luxury or Veblen goods as a metric for moral progress of humanity (I suspect on this metric, we'd look like we were getting progressively worse, but I'm unclear if that's a bug or a feature).

Tuesday, 22 July 2025

Monday, 21 July 2025

Sunday, 20 July 2025

Saturday, 19 July 2025

Quick takes

AI governance could be much more relevant in the EU if the EU were willing to regulate ASML. Tell ASML they can only service compliant semiconductor foundries, where a "compliant semiconductor foundry" is defined as a foundry that only allows its chips to be used by compliant AI companies.

I think this is a really promising path for slower, more responsible AI development globally. The EU is known for its cautious approach to regulation. Many EAs believe that a cautious, risk-averse approach to AI development is appropriate. Yet EU regulations are often viewed as less important, since major AI firms are mostly outside the EU. However, ASML is located in the EU, and serves as a chokepoint for the entire AI industry. Regulating ASML addresses the standard complaint that "AI firms will simply relocate to the most permissive jurisdiction". Advocating this path could be a high-leverage way to make global AI development more responsible without the need for an international treaty.

If you're considering a career in AI policy, now is an especially good time to start applying widely as there's a lot of hiring going on right now. I documented in my Substack over a dozen different opportunities that I think are very promising.

Friday, 18 July 2025

Thursday, 17 July 2025

Wednesday, 16 July 2025

Quick takes

Probably(?) big news on PEPFAR (title: White House agrees to exempt PEPFAR from cuts): https://thehill.com/homenews/senate/5402273-white-house-accepts-pepfar-exemption/. (Credit to Marginal Revolution for bringing this to my attention)

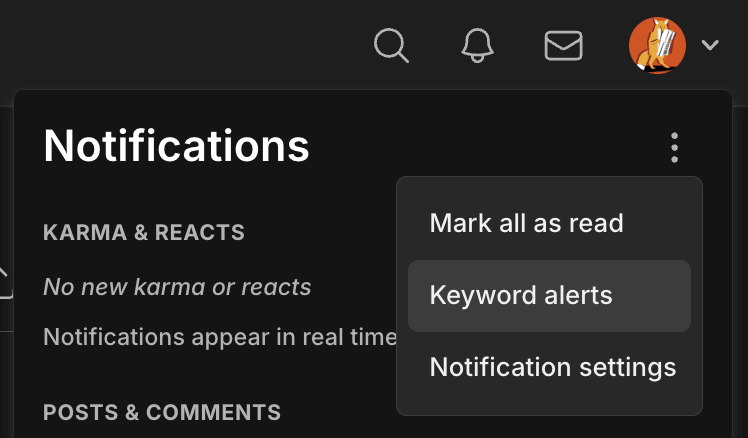

Mini EA Forum Update

We've added two new kinds of notifications that have been requested multiple times before:

1. Notifications when someone links to your post, comment, or quick take
   1. These are turned on by default; you can edit your notification settings via the Account Settings page.
2. Keyword alerts
   1. You can manage your keyword alerts here, which you can get to via your Account Settings or by clicking the notification bell and then the three dots icon.
   2. You can quickly add an alert by clicking "Get notified" on the search page. (Note that the alerts only use the keyword, not any search filters.)
   3. You get alerted when the keyword appears in a newly published post, comment, or quick take (so this doesn't include, for example, new topics).
   4. You can also edit the frequency of both the on-site and email versions of these alerts independently via the Account Settings page (at the bottom of the Notifications list).
   5. See more details in the PR

I hope you find these useful! 😊 Feel free to reply if you have any feedback or questions.

I've updated the public doc that summarizes the CEA Online Team's OKRs to add Q3.1 (sorry this is a bit late, I just forgot! 😅).

Hello everyone,

I recently came across a book titled “Technical Control Problem and Potential Capabilities of Artificial Intelligence” by Dr. Hüseyin Gürkan Abalı. It claims to offer a technical and philosophical framework regarding the control problem in advanced AI systems, and discusses their potential future capabilities.

As someone interested in AI safety and ethics, I’m curious if anyone here has read the book or has any thoughts on its relevance or quality.

I would appreciate any reviews, critiques, or academic impressions.

Thanks in advance!