Today and yesterday

Quick takes

Act utilitarians choose actions estimated to increase total happiness. Rule utilitarians follow rules estimated to increase total happiness (e.g. not lying). But you can have the best of both: act utilitarianism where rules are instead treated as moral priors. For example, you can hold a strong prior that killing someone is bad, which can nonetheless be overridden in extreme circumstances (e.g. if killing the person would end WWII).

These priors make act utilitarianism more robust against bad assessments. They are grounded in Bayesianism (moral priors are updated the same way as non-moral priors). They also decrease cognitive effort: most of the time, you just follow your priors, unless the stakes and uncertainty warrant more complex consequence estimates. You can have a small prior toward inaction, so that not every random action is worth considering. You can also blend in some virtue ethics by having a prior that virtuous acts often lead to greater total happiness in the long run.

What I described is a more Bayesian version of R. M. Hare's "Two-level utilitarianism", which involves an "intuitive" and a "critical" level of moral thinking.
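The post gives no formulas, so here is a minimal sketch of one possible reading of "rules as moral priors". It assumes (this is not in the original) that an action's effect on total happiness is modelled as a Gaussian, and that a noisy case-specific estimate is combined with the rule-based prior by standard normal-normal Bayesian updating. The names `Belief`, `update`, `should_act`, the `inaction_margin` standing in for the "small prior toward inaction", and all the numbers are hypothetical illustrations.

```python
from dataclasses import dataclass


@dataclass
class Belief:
    """Gaussian belief about an action's effect on total happiness (arbitrary units)."""
    mean: float
    variance: float


def update(prior: Belief, estimate: float, estimate_variance: float) -> Belief:
    """Combine a moral prior with a noisy case-specific estimate.

    Normal-normal conjugate update: each source is weighted by its precision
    (1 / variance), so a confident prior dominates a shaky estimate.
    """
    prior_precision = 1.0 / prior.variance
    estimate_precision = 1.0 / estimate_variance
    post_variance = 1.0 / (prior_precision + estimate_precision)
    post_mean = post_variance * (prior_precision * prior.mean
                                 + estimate_precision * estimate)
    return Belief(post_mean, post_variance)


def should_act(posterior: Belief, inaction_margin: float = 1.0) -> bool:
    """Act only if the expected gain beats a small (hypothetical) prior toward inaction."""
    return posterior.mean > inaction_margin


# Hypothetical numbers: a strong prior that killing is very bad.
KILLING_PRIOR = Belief(mean=-100.0, variance=10.0)

# Everyday case: a modest claimed benefit, assessed very uncertainly.
ordinary = update(KILLING_PRIOR, estimate=50.0, estimate_variance=1000.0)

# Extreme case (e.g. ending a world war): an enormous claimed benefit, equally uncertain.
extreme = update(KILLING_PRIOR, estimate=1_000_000.0, estimate_variance=1000.0)

print(round(ordinary.mean, 2), should_act(ordinary))
print(round(extreme.mean, 2), should_act(extreme))
```

With these made-up numbers, a noisy claim of a modest benefit barely moves the strong prior, so the rule holds; a sufficiently large estimated benefit overrides it, mirroring the WWII example. Lowering `estimate_variance` (a more careful assessment) lets case-specific evidence count for more.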

From https://arxiv.org/pdf/1712.03079

[...]

I remember reading something similar on this a while ago, possibly on this forum, but I can't find it at the moment. Does anyone remember any other papers or posts on the topic?

Hey everyone! As a philosophy grad transitioning into AI governance/policy or safety advocacy, I'd love advice: which for-profit roles best build relevant skills while providing financial stability?

Specifically, what kinds of roles (especially outside of obvious research positions) are valuable stepping stones toward AI governance/policy research? I don’t yet have direct research experience, so I’m particularly interested in roles that are more accessible early on but still help me develop transferable skills, especially those that might not be intuitive at first glance.

My secondary interest is in AI safety advocacy. Are there particular entry-level or for-profit roles that could serve as strong preparation for future advocacy or field-building work?

A bit about me:

– I have a strong analytical and critical thinking background from my philosophy BA, including structured and clear writing experience

– I’m deeply engaged with the AI safety space: I’ve completed BlueDot’s AI Governance course, volunteered with AI Safety Türkiye, and regularly read and discuss developments in the field

– I’m curious, organized, and enjoy operations work, in addition to research and strategy

If you've navigated a similar path, have ideas about stepping-stone roles, or just want to talk, I'd be happy to chat over a call! Feel free to schedule a 20-minute conversation here.

Thanks in advance for any pointers!

Past week

Quick takes

Giving What We Can is about to hit 10,000 pledgers (9,935 at the time of writing).

If you're on the fence and wanna be in the 4-digit club, consider very carefully whether you should make the pledge! An important reminder that Earning to Give is a valid way to engage with EA.

AI governance could be much more relevant in the EU if the EU were willing to regulate ASML. Tell ASML they can only service compliant semiconductor foundries, where a "compliant semiconductor foundry" is defined as a foundry that only allows its chips to be used by compliant AI companies.

I think this is a really promising path for slower, more responsible AI development globally. The EU is known for its cautious approach to regulation. Many EAs believe that a cautious, risk-averse approach to AI development is appropriate. Yet EU regulations are often viewed as less important, since major AI firms are mostly outside the EU. However, ASML is located in the EU, and serves as a chokepoint for the entire AI industry. Regulating ASML addresses the standard complaint that "AI firms will simply relocate to the most permissive jurisdiction". Advocating this path could be a high-leverage way to make global AI development more responsible without the need for an international treaty.

If you're considering a career in AI policy, now is an especially good time to start applying widely, as there's a lot of hiring going on right now. On my Substack, I documented over a dozen different opportunities that I think are very promising.

Fun anecdote from Richard Hamming about checking the calculations used before the Trinity test:

From https://en.wikipedia.org/wiki/Richard_Hamming

https://www.nytimes.com/2025/07/23/health/pepfar-shutdown.html

PEPFAR may still be killed off after all :(

Past 14 days

Quick takes

An excerpt about the creation of PEPFAR, from "Days of Fire" by Peter Baker. I found this moving.

I am sure someone has mentioned this before, but…

For the longest time, and to a certain extent still, I have felt deeply blocked from publicly sharing anything that wasn’t significantly original. Whenever I found an idea already existing anywhere, even as a footnote in an underrated 5-karma post, I would hesitate to write about it, since I thought I wouldn’t add value to the “marketplace of ideas.” In this abstract picture, the “idea is already out there,” so the job is done and the impact is locked in. I have talked to several people who feel similarly: people with brilliant thoughts and ideas who claim to have “nothing original to write about” and therefore refrain from writing.

I have come to realize that some of the most worldview-shaping and actionable content I have read and seen was often not a uniquely original idea, but a better-presented, better-connected, or simply better-timed presentation of existing ideas. I now think of idea-sharing as a much more concrete but messy contributor to impact, one that requires the right people to read the right content in the right way at the right time (maybe often enough, and sometimes from the right person on the right platform, etc.).

All of that to say, the impact of your idea-sharing goes far beyond the originality of your idea. If you have talked to several cool people in your network about something and they found it interesting and valuable to hear, consider publishing it!

Relatedly, there are many more reasons to write other than sharing original ideas and saving the world :)

Probably(?) big news on PEPFAR (title: White House agrees to exempt PEPFAR from cuts): https://thehill.com/homenews/senate/5402273-white-house-accepts-pepfar-exemption/. (Credit to Marginal Revolution for bringing this to my attention)

Mini EA Forum Update

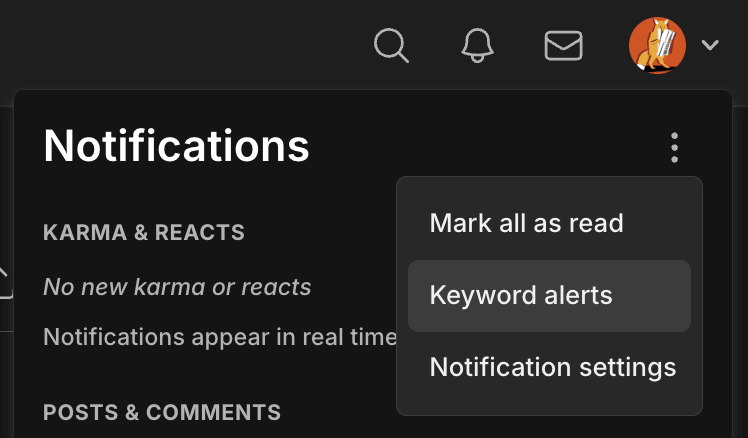

We've added two new kinds of notifications that have been requested multiple times before:

1. Notifications when someone links to your post, comment, or quick take

    1. These are turned on by default. You can edit your notification settings via the Account Settings page.

2. Keyword alerts

    1. You can manage your keyword alerts here, which you can get to via your Account Settings or by clicking the notification bell and then the three-dots icon.

    2. You can quickly add an alert by clicking "Get notified" on the search page. (Note that the alerts only use the keyword, not any search filters.)

    3. You get alerted when the keyword appears in a newly published post, comment, or quick take (so this doesn't include, for example, new topics).

    4. You can also edit the frequency of the on-site and email versions of these alerts independently via the Account Settings page (at the bottom of the Notifications list).

    5. See more details in the PR.

I hope you find these useful! 😊 Feel free to reply if you have any feedback or questions.

I am too young and stupid to be giving career advice, but in the spirit of career conversations week, I figured I'd pass on advice I've received which I ignored at the time, and now think was good advice: you might be underrating the value of good management!

I think lots of young EAish people underrate the importance of good management/learning opportunities, and overrate direct impact. In fact, I claim that if you're looking for your first/second job, you should consider optimising for having a great manager, rather than for direct impact.

Why?

* Having a great manager dramatically increases your rate of learning, assuming you're in a job with scope for taking on new responsibilities or picking up new skills (which covers most jobs).

* It also makes working much more fun!

* Mostly, you just don't know what you don't know. I've been struck by how much I've learnt in the last year; I think it has increased my expected impact, and I wouldn't have predicted this beforehand.

* In particular, if you're just leaving university, you probably haven't really had a manager-type person before, and you've only experienced a narrow slice of all possible work tasks. So you're probably underrating both how useful a very good manager can be, and how much you could learn.

How can you tell if someone will be a great manager?

* This part seems harder. I've thought about it a bit, but hopefully other people have better ideas.

* Ask the org who would manage you and request a conversation with them. Ask about their management style: how do they approach management? How often will you meet, and for how long? Do they plan to give minimal oversight and just check you're on track, or will they be more actively involved? (For new grads, active management is usually better.) You might also want to ask for examples of people they've managed and how those people grew.

* Once you're partway through the application process or have an offer, reach out to current employees fo

Past 31 days

Quick takes

Recently, various groups successfully lobbied to remove the moratorium on state AI bills. They succeeded to a surprising degree despite competing against substantial investment from big tech (e.g. Google, Meta, Amazon). I think people interested in mitigating catastrophic risks from advanced AI should consider working at these organizations, at least to the extent their skills/interests are applicable. This is both because they could often work directly on substantially helpful things (depending on the role and organization) and because doing so would yield valuable work experience and connections.

I worry somewhat that this type of work is neglected due to being less emphasized and seeming lower status. Consider this an attempt to make this type of work higher status.

Pulling organizations mostly from here and here, we get a list of orgs you could consider working at (specifically on AI policy):

* Encode AI

* Americans for Responsible Innovation (ARI)

* Fairplay (Fairplay is a kids' safety organization that does a variety of advocacy unrelated to AI. Roles/focuses on AI would be most relevant. In my opinion, working on AI-related topics at Fairplay is most applicable for gaining experience and connections.)

* Common Sense (also a kids' safety organization)

* The AI Policy Network (AIPN)

* Secure AI project

To be clear, these organizations vary in the extent to which they are focused on catastrophic risk from AI (from not at all to entirely).

There seems to be a pattern where I get excited about some potential projects and ideas during an EA Global, fill in the EA Global survey saying that the conference was extremely useful for me, but then those projects never materialise for various reasons. If others relate, I worry that EA conferences are not as useful as the feedback surveys suggest.

Good news! The 10-year AI moratorium on state legislation has been removed from the budget bill.

The Senate voted 99-1 to strike the provision. Senator Blackburn, who originally supported the moratorium, proposed the amendment to remove it after concluding her compromise exemptions wouldn't work.

https://www.yahoo.com/news/us-senate-strikes-ai-regulation-085758901.html?guccounter=1

1. If you have social capital, identify as an EA.

2. Stop saying Effective Altruism is "weird", "cringe", and full of problems so often.

And yes, "weird" has negative connotations for most people. Self-flagellation once helped highlight areas needing improvement. Now overcorrection has created hesitation among responsible, cautious, and credible people who might otherwise publicly identify as effective altruists. As a result, the label increasingly belongs to those willing to accept high reputational risks or use it opportunistically, weakening the movement’s overall credibility.

If you're aligned with EA’s core principles, thoughtful in your actions, and have no significant reputational risks, then identifying openly as an EA is especially important. Normalising the term matters. When credible and responsible people embrace the label, they anchor it positively and prevent misuse.

Offline, I was early to criticise Effective Altruism’s branding and messaging. Admittedly, the name itself is imperfect. Yet at this point, it is established and carries public recognition. We can't discard it without losing valuable continuity and trust. If you genuinely believe in the core ideas and engage thoughtfully with EA’s work, openly identifying yourself as an effective altruist is a logical next step.

Specifically, if you already have a strong public image, align privately with EA values, and have no significant hidden issues, then you're precisely the person who should step forward and put skin in the game. Quiet alignment isn’t enough. The movement’s strength and reputation depend on credible voices publicly standing behind it.

The book "Careless People" starts as a critique of Facebook — a key EA funding source — and unexpectedly lands on AI safety, x-risk, and global institutional failure.

I just finished Sarah Wynn-Williams' recently published book. I had planned to post earlier — mainly about EA’s funding sources — but after reading the surprising epilogue, I now think both the book and the author might deserve even broader attention within EA and longtermist circles.

1. The harms associated with the origins of our funding

The early chapters examine the psychology and incentives behind extreme tech wealth, especially at Facebook/Meta. That made me reflect on EA’s deep reliance (although how deep is unclear, as OllieBase helpfully pointed out after I first published this Quick Take) on money that ultimately came from:

* harms to adolescent mental health,

* cooperation with authoritarian regimes,

* and the erosion of democracy, even in the US and Europe.

These issues are not new (they weren’t to me), but the book’s specifics and firsthand insights reveal a shocking level of disregard for social responsibility — more than I thought possible from such a valuable and influential company.

To be clear: I don’t think Dustin Moskovitz reflects the culture Wynn-Williams critiques. He left Facebook early and seems unusually serious about ethics.

But the systems that generated that wealth, and shaped the broader tech landscape, could still matter.

Especially post-FTX, it feels important to stay aware of where our money comes from. Not out of guilt or purity, but because if you don't occasionally check your blind spots, you might cause damage.

2. Ongoing risk from the same culture

Meta is now a major player in the frontier AI race — aggressively releasing open-weight models with seemingly limited concern for cybersecurity, governance, or global risk.

Some of the same dynamics described in the book — greed, recklessness, detachment — could well still be at play. And it would not be comple
