All posts

Today and yesterday

Frontpage Posts

Past week

Frontpage Posts

Quick takes

Looks like Mechanize is choosing to be even more irresponsible than we previously thought. They're going straight for automating software engineering. Would love to hear their explanation for this.

"Software engineering automation isn't going fast enough" [1] - oh really?

This seems even less defensible than their previous explanation of how their work would benefit the world.

1. ^

Not an actual quote

The EA Forum moderation team is going to experiment a bit with how we categorize posts. Currently there is a low bar for a Forum post being categorized as “Frontpage” after it’s approved. In comparison, LessWrong is much more opinionated about the content they allow, especially from new users. We’re considering moving in that direction, in order to maintain a higher percentage of valuable content on our Frontpage.

To start, we’re going to allow moderators to move posts from new users from “Frontpage” to “Personal blog”[1], at their discretion, but starting conservatively. We’ll keep an eye on this and, depending on how this goes, we may consider taking further steps such as using the “rejected content” feature (we don’t currently have that on the EA Forum).

Feel free to reply here if you have any questions or feedback.

1. ^

If you’d like to make sure you see “Personal blog” posts in your Frontpage, you can customize your feed.

Having a savings target seems important. (Not financial advice.)

I sometimes hear people in/around EA rule out taking jobs due to low salaries (sometimes implicitly, sometimes a little embarrassedly). Of course, it's perfectly understandable not to want to take a significant drop in your consumption. But in theory, people with high salaries could be saving up so they can take high-impact, low-paying jobs in the future; it just seems like, by default, this doesn't happen. I think it's worth thinking about how to set yourself up to be able to do it if you do find yourself in such a situation; you might find it harder than you expect.

(Personal digression: I also notice my own brain paying a lot more attention to my personal finances than I think is justified. Maybe some of this traces back to some kind of trauma response to being unemployed for a very stressful ~6 months after graduating: I could always be a little more financially secure. A couple of weeks ago, while meditating, it occurred to me that my brain is probably reacting to not knowing how I'm doing relative to my goal, because 1) I didn't actually know what my goal was, and 2) I didn't really have a sense of what I was spending each month. In IFS terms, I think the "social and physical security" part of my brain wasn't trusting that the rest of my brain was competently handling the situation.)

So, I think people in general would benefit from having an explicit target: once I have X in savings, I can feel financially secure. This probably means explicitly tracking your expenses, both now and in a "making some reasonable, not-that-painful cuts" budget, and gaming out the most likely scenarios where you'd need to use a large amount of your savings, beyond the classic 3 or 6 months of expenses in an emergency fund. For people motivated by EA principles, the most likely scenarios might be for impact reasons: maybe you take a public-sector job that pays half your current salary for three years, or maybe you'

Mini Forum update: Draft comments, and polls in comments

Draft comments

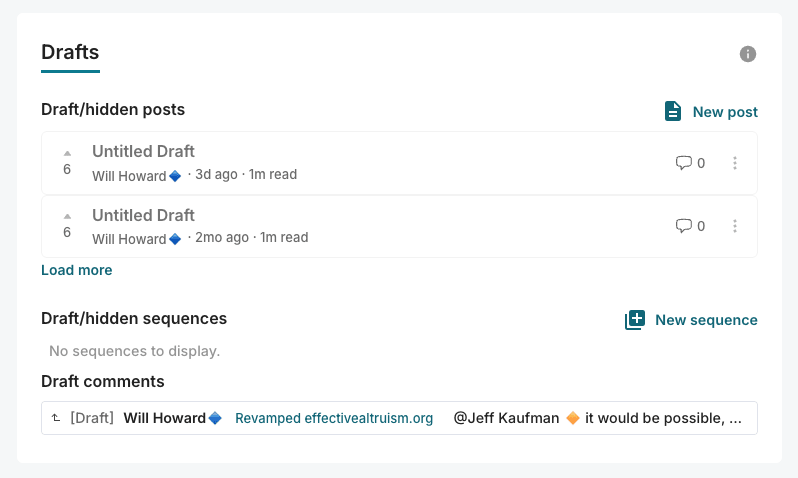

You can now save comments as permanent drafts:

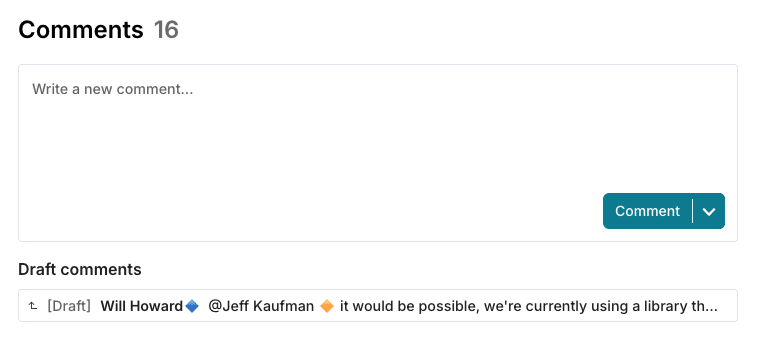

After saving, the draft will appear for you to edit:

1. In-place if it's a reply to another comment (as above)

2. In a "Draft comments" section under the comment box on the post

3. In the drafts section of your profile

The reasons we think this will be useful:

* For writing long, substantive comments (and quick takes!). We think these are some of the most valuable comments on the Forum, and want to encourage more of them

* For starting a comment on mobile and then later continuing on desktop

* To lower the barrier to starting a comment, since you know you can always throw it in drafts and then never look at it again

----------------------------------------

Polls in comments

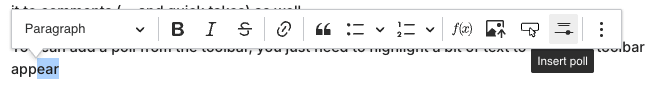

We recently added the ability to put polls in posts, and this was fairly well received, so we're adding it to comments (... and quick takes!) as well.

You can add a poll from the toolbar; you just need to highlight a bit of text to make the toolbar appear:

And the poll will look like this...

Productive conference meetup format for 5-15 people in 30-60 minutes

I ran an impromptu meetup at a conference this weekend, where 2 of the ~8 attendees told me that they found this an unusually useful/productive format and encouraged me to share it as an EA Forum shortform. So here I am, obliging them:

* Intros… but actually useful
  * Name
  * Brief background or interest in the topic
  * 1 thing you could possibly help others in this group with
  * 1 thing you hope others in this group could help you with
  * NOTE: I will ask you to act on these imminently, so you need to pay attention, take notes, etc.
  * [Facilitator starts and demonstrates by example]

* Round of any quick wins: anything you heard where someone asked for some help and you think you can help quickly, e.g. a resource, idea, offer? Say so now!

* Round of quick requests: Anything where anyone would like to arrange a 1:1 later with someone else here, or request anything else?

* If 15+ minutes remaining:
  * Brainstorm whole-group discussion topics for the remaining time. Quickly gather 1-5 topic ideas in under 5 minutes.
  * Show-of-hands vote on each of the proposed topics.
  * Discuss the most popular topics for 8-15 minutes each. (It might just be one topic.)

* If less than 15 minutes remaining:
  * Quickly pick one topic for group discussion yourself.
  * Or just finish early? People can stay and chat if they like.

Note: the facilitator needs to actually facilitate, including cutting off lengthy intros or any discussions that get started during the ‘quick wins’ and ‘quick requests’ rounds. If you have a group of over 10, you might need to divide into subgroups for the discussion part.

I think we had around 3 quick wins, 3 quick requests, and briefly discussed 2 topics in our 45-minute session.

Past 14 days

Frontpage Posts

Quick takes

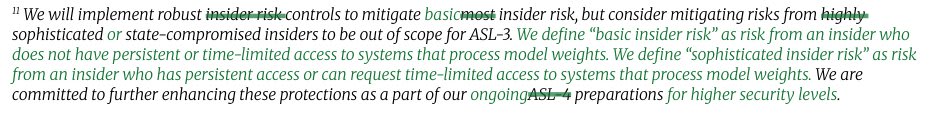

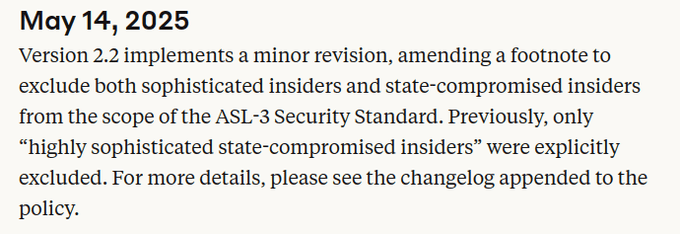

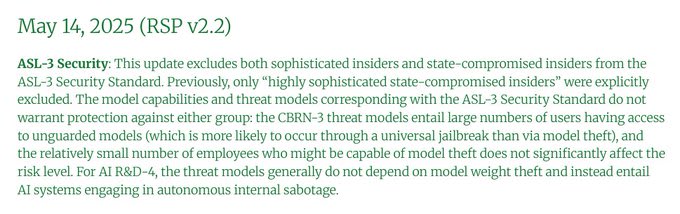

A week ago, Anthropic quietly weakened their ASL-3 security requirements. Yesterday, they announced ASL-3 protections.

I appreciate the mitigations, but quietly lowering the bar at the last minute so you can meet requirements isn't how safety policies are supposed to work.

(This was originally a tweet thread (https://x.com/RyanPGreenblatt/status/1925992236648464774) which I've converted into a quick take. I also posted it on LessWrong.)

What is the change and how does it affect security?

9 days ago, Anthropic changed their RSP so that ASL-3 no longer requires being robust to employees trying to steal model weights if the employee has any access to "systems that process model weights".

Anthropic claims this change is minor (and calls insiders with this access "sophisticated insiders").

But, I'm not so sure it's a small change: we don't know what fraction of employees could get this access and "systems that process model weights" isn't explained.

Naively, I'd guess that access to "systems that process model weights" includes employees being able to operate on the model weights in any way other than through a trusted API (a restricted API that we're very confident is secure). If that's right, it could be a high fraction! So, this might be a large reduction in the required level of security.

If this does actually apply to a large fraction of technical employees, then I'm also somewhat skeptical that Anthropic can actually be "highly protected" from (e.g.) organized cybercrime groups without meeting the original bar: hacking an insider and using their access is typical!

Also, one of the easiest ways for security-aware employees to evaluate security is to think about how easily they could steal the weights. So, if you don't aim to be robust to employees, it might be much harder for employees to evaluate the level of security and then complain about not meeting requirements[1].

Anthropic's justification and why I disagree

Anthropic justified the change by

There is going to be a Netflix series on SBF titled The Altruists, so EA will be back in the media. I don't know how EA will be portrayed in the show, but regardless, now is a great time to improve EA communications. More specifically, that means being a lot louder about historical and current EA wins; we just don't talk about them enough!

A snippet from Netflix's official announcement post:

I was extremely disappointed to see this tweet from Liron Shapira revealing that the Center for AI Safety fired a recent hire, John Sherman, for stating that members of the public would attempt to destroy AI labs if they understood the magnitude of AI risk. Capitulating to this sort of pressure campaign is not the right path for EA, which should have a focus on seeking the truth rather than playing along with social-status games, and is not even the right path for PR (it makes you look like you think the campaigners have valid points, which in this case is not true). This makes me think less of CAIS' decision-makers.

I'm a 36-year-old iOS Engineer/Software Engineer who switched to working on image classification systems via TensorFlow a year ago. Last month I was made redundant with a fairly generous severance package and a good buffer of savings to get me by while unemployed.

The risky step I had long considered of quitting my non-impactful job was taken for me. I'm hoping to capitalize on my free time by determining what career path to take that best fits my goals. I'm pretty excited about it.

I created a weighted factor model to figure out which projects or learning to take on first. I welcome feedback on it. There's also a schedule tab for how I'm planning to spend my time this year, and a template if anyone wishes to use this spreadsheet themselves.
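For readers unfamiliar with the technique: a weighted factor model usually rates each option on a handful of criteria and ranks options by the weighted sum of those ratings. The Python sketch below illustrates that idea only; the criteria names, weights, options and ratings are hypothetical placeholders, not the ones in the spreadsheet described above.

```python
from dataclasses import dataclass

# Hypothetical criteria and weights, for illustration only.
WEIGHTS = {"impact": 0.5, "personal_fit": 0.3, "learning_value": 0.2}


@dataclass
class Option:
    name: str
    scores: dict[str, float]  # criterion -> rating (e.g. on a 1-10 scale)


def weighted_score(option: Option, weights: dict[str, float]) -> float:
    """Weighted sum of ratings, divided by total weight so the result stays on the rating scale."""
    total_weight = sum(weights.values())
    return sum(option.scores[c] * w for c, w in weights.items()) / total_weight


def rank(options: list[Option], weights: dict[str, float]) -> list[tuple[str, float]]:
    """Return (name, score) pairs sorted from highest to lowest score."""
    scored = [(o.name, weighted_score(o, weights)) for o in options]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical options and ratings.
    options = [
        Option("Online ML safety course", {"impact": 8, "personal_fit": 6, "learning_value": 9}),
        Option("Open-source ML project", {"impact": 6, "personal_fit": 8, "learning_value": 7}),
        Option("Blogging", {"impact": 4, "personal_fit": 7, "learning_value": 5}),
    ]
    for name, score in rank(options, WEIGHTS):
        print(f"{name}: {score:.2f}")
```

Dividing by the total weight is a design choice: it keeps scores comparable to the raw ratings even if the weights don't sum to 1, so you can adjust weights freely while experimenting.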

I got feedback from my 80,000 Hours advisor to get involved in EA communities more often. I also want to learn more publicly, be it via forums or by blogging. This somewhat unstructured dumping of my thoughts is a first step towards that.

Past 31 days

Frontpage Posts

Quick takes

I have a bunch of disagreements with Good Ventures and how they are allocating their funds, but also Dustin and Cari are plausibly the best people who ever lived.

I've now spoken to ~1,400 people as an advisor with 80,000 Hours, and if there's a quick thing I think is worth more people doing, it's doing a short reflection exercise about one's current situation.

Below are some (clusters of) questions I often ask in an advising call to facilitate this. I'm often surprised by how much purchase one can get simply from this: noticing one's own motivations, weighing one's personal needs against a yearning for impact, identifying blind spots in current plans that could be triaged and easily addressed, etc.

A long list of semi-useful questions I often ask in an advising call

1. Your context:

1. What’s your current job like? (or like, for the roles you’ve had in the last few years…)

1. The role

2. The tasks and activities

3. Does it involve management?

4. What skills do you use? Which ones are you learning?

5. Is there something in your current job that you want to change, that you don’t like?

2. Default plan and tactics

1. What is your default plan?

2. How soon are you planning to move? How urgently do you need to get a job?

3. Have you been applying? Getting interviews, offers? Which roles? Why those roles?

4. Have you been networking? How? What is your current network?

5. Have you been doing any learning, upskilling? How have you been finding it?

6. How much time can you find to do things to make a job change? Have you considered e.g. a sabbatical or going down to a 3/4-day week?

7. What are you feeling blocked/bottlenecked by?

3. What are your preferences and/or constraints?

1. Money

2. Location

3. What kinds of tasks/skills would you want to use? (writing, speaking, project management, coding, math, your existing skills, etc.)

4. What skills do you want to develop?

5. Are you interested in leadership, management, or individual contribution?

6. Do you want to shoot for impact? H

why do i find myself less involved in EA?

epistemic status: i timeboxed the below to 30 minutes. it's been bubbling for a while, but i haven't spent that much time explicitly thinking about this. i figured it'd be a lot better to share half-baked thoughts than to keep it all in my head — but accordingly, i don't expect to reflectively endorse all of these points later down the line. i think it's probably most useful & accurate to view the below as a slice of my emotions, rather than a developed point of view. i'm not very keen on arguing about any of the points below, but if you think you could be useful toward my reflecting processes (or if you think i could be useful toward yours!), i'd prefer that you book a call to chat more over replying in the comments. i do not give you consent to quote my writing in this short-form without also including the entirety of this epistemic status.

* 1-3 years ago, i was decently involved with EA (helping organize my university EA program, attending EA events, contracting with EA orgs, reading EA content, thinking through EA frames, etc).

* i am now a lot less involved in EA.
  * e.g. i currently attend uc berkeley, and am ~uninvolved in uc berkeley EA
  * e.g. i haven't attended a casual EA social in a long time, and i notice myself ughing in response to invites to explicitly-EA socials
  * e.g. i think through impact-maximization frames with a lot more care & wariness, and have plenty of other frames in my toolbox that i use to a greater relative degree than the EA ones
  * e.g. the orgs i find myself interested in working for seem to do effectively altruistic things by my lights, but seem (at closest) to be EA-community-adjacent and (at furthest) actively antagonistic to the EA community

* (to be clear, i still find myself wanting to be altruistic, and wanting to be effective in that process. but i think describing my shift as merely moving a bit away from the community would be underselling the extent to which i've

As a group organiser I was wildly miscalibrated about the acceptance rate for EAGs! I spoke to the EAG team, and here are the actual figures:

* The overall acceptance rate for undergraduate students is about ¾! (2024)

* For undergraduate first timers, it’s about ½ (Bay Area 2025)

If that’s piqued your interest, EAG London 2025 applications close soon - apply here!

Jemima

I wonder what can be done to make people more comfortable praising powerful people in EA without feeling like sycophants.

A while ago I saw Dustin Moskovitz commenting on the EA Forum. I thought about expressing my positive impressions of his presence and how incredible it was that he even engaged. I didn't do that because it felt like sycophancy. The next day he deleted his account. I don't think my comment would have changed anything in that instance, but I still regretted not commenting.

In general, writing criticism feels more virtuous than writing praise. I used to avoid praising people who had power over me, but now that attitude seems misguided to me. While I'm glad that EA provided an environment where I could feel comfortable criticising the leadership, I'm unhappy about ending up in a situation where occupying leadership positions in EA feels like a curse to potential candidates.

Many community members agree that there is a leadership vacuum in EA. That should lead us to believe people in leadership positions should be rewarded more than they currently are. Part of that reward could be encouragement and I am personally committing to comment on things I like about EA more often.

Since April 1st

Frontpage Posts

Quick takes

This is a post with praise for Good Ventures.[1] I don’t expect anything I’ve written here to be novel, but I think it’s worth saying all the same. [2] (The draft of this was prompted by Dustin M leaving the Forum.)

Over time, I’ve done a lot of outreach to high-net-worth individuals. Almost none of those conversations have led anywhere, even when they say they’re very excited to give, and use words like “impact” and “maximising” a lot.

Instead, people almost always do some combination of:

* Not giving at all, or giving only a tiny fraction of their net worth
  * (I remember in the early days of 80,000 Hours, we spent a whole day hosting a UHNW individual. He ultimately gave £5000. The week afterwards, a one-hour call with Julia Wise - a social worker at the time - resulted in a larger donation.)

* Giving to less important causes, often because they have quite quickly decided on some set of causes, with very little in the way of deep reflection or investigation into that choice.

* Giving in lower-value ways, because they prioritise their own hot takes over giving expert grantmakers enough freedom to make the best grants within causes.

(The story here doesn’t surprise me.)

From this perspective, EA is incredibly lucky that Cari and Dustin came along in the early days. In the seriousness of their giving, and their willingness to follow the recommendations of domain experts, even in unusual areas, they are way out on the tail of the distribution.

I say this even though they’ve narrowed their cause area focus, even though I probably disagree with that decision (although I feel humble about my ability, as an outsider, to know what trade-offs I’d think would be best if I were in their position), and even though because of that narrowing of focus my own work (and Forethought more generally) is unlikely to receive Good Ventures funding, at least for the time being.

My attitude to someone who is giving a lot, but giving fairly ineffectively, is, “Wow, that’s so awesome you’

In light of recent discourse on EA adjacency, this seems like a good time to publicly note that I still identify as an effective altruist, not EA adjacent.

I am extremely against embezzling billions of dollars from people, and FTX was a good reminder of the importance of "don't do evil things for galaxy brained altruistic reasons". But this has nothing to do with whether or not I endorse the philosophy that "it is correct to try to think about the most effective and leveraged ways to do good and then actually act on them". And there are many people in or influenced by the EA community who I respect and think do good and important work.

Anthropic has been getting flak from some EAs for distancing itself from EA. I think some of the critique is fair, but overall, I think that the distancing is a pretty safe move.

Compare this to FTX. SBF wouldn't shut up about EA. He made it a key part of his self-promotion. I think he broadly did this for reasons of self-interest for FTX, as it arguably helped the brand at that time.

I know that at that point several EAs were privately upset about this. They saw him as using EA for PR, and thus creating a key liability that could come back and bite EA.

And come back and bite EA it did, about as poorly as one could have imagined.

So back to Anthropic. They're taking the opposite approach. Maintaining about as much distance from EA as they semi-honestly can. I expect that this is good for Anthropic, especially given EA's reputation post-FTX.

And I think it's probably also safe for EA.

I'd be a lot more nervous if Anthropic were trying to tie its reputation to EA. I could easily see Anthropic having a scandal in the future, and it's also pretty awkward to tie EA's reputation to an AI developer.

To be clear, I'm not saying that people from Anthropic should actively lie or deceive. So I have mixed feelings about their recent quotes for Wired. But big-picture, I feel decent about their general stance to keep distance. To me, this seems likely in the interest of both parties.

Per Bloomberg, the Trump administration is considering restricting the equivalency determination for 501(c)(3)s as early as Tuesday. The equivalency determination allows 501(c)(3)s to regrant money to foreign, non-tax-exempt organisations while maintaining tax-exempt status, so long as an attorney or tax practitioner claims the organisation is equivalent to a local tax-exempt one.

I’m not an expert on this, but it sounds really bad. I guess it remains to be seen if they go through with it.

Regardless, the administration is allegedly also preparing to directly strip environmental and political (i.e. groups he doesn’t like, not necessarily just any policy org) non-profits of their tax exempt status. In the past week, he’s also floated trying to rescind the tax exempt status of Harvard. From what I understand, such an Executive Order is illegal under U.S. law (to whatever extent that matters anymore), unless Trump instructs the State Department to designate them foreign terrorist organisations, at which point all their funds are frozen too.

These are dark times. Stay safe 🖤

“Chief of Staff” models from a long-time Chief of Staff

I have served in Chief of Staff or CoS-like roles to three leaders of CEA (Zach, Ben and Max), and before joining CEA I was CoS to a member of the UK House of Lords. I wrote up some quick notes on how I think about such roles for some colleagues, and one of them suggested they might be useful to other Forum readers. So here you go:

Chief of Staff means many things to different people in different contexts, but the core of it in my mind is that many executive roles are too big to be done by one person (even allowing for a wider Executive or Leadership team, delegation to department leads, etc). Having (some parts of) the role split/shared between the principal and at least one other person increases the capacity and continuity of the exec function.

Broadly, I think of there being two ways to divide up these responsibilities (using CEO and CoS as stand-ins, but the same applies to other principal/deputy duos regardless of titles):

1. Split the CEO's role into component parts and assign responsibility for each part to CEO or CoS
   1. Example: CEO does fundraising; CoS does budgets
   2. Advantages: focus, accountability

2. Share the CEO's role with both CEO and CoS actively involved in each component part
   1. Example: CEO speaks to funders based on materials prepared by CoS; CEO assigns team budget allocations which are implemented by CoS
   2. Advantages: flex capacity, gatekeeping

Some things to note about these approaches:

* In practice, it’s inevitably some combination of the two, but I think it’s really important to be intentional and explicit about what’s being split and what’s being shared
  * Failure to do this causes confusion, dropped balls, and duplication of effort

* Sharing is especially valuable during the early phases of your collaboration because it facilitates context-swapping and model-building

* I don’t think you’d ever want to get all the way or too far towards split, bec
